Concurrency in C++

Concurrency in programming refers to the ability of a program to make progress on multiple tasks during overlapping periods of time. In C++, concurrency is achieved through features like threads, mutexes, condition variables, and atomic operations. Understanding concurrency is crucial for developing efficient and responsive applications, especially when independent tasks can run at the same time to improve performance.

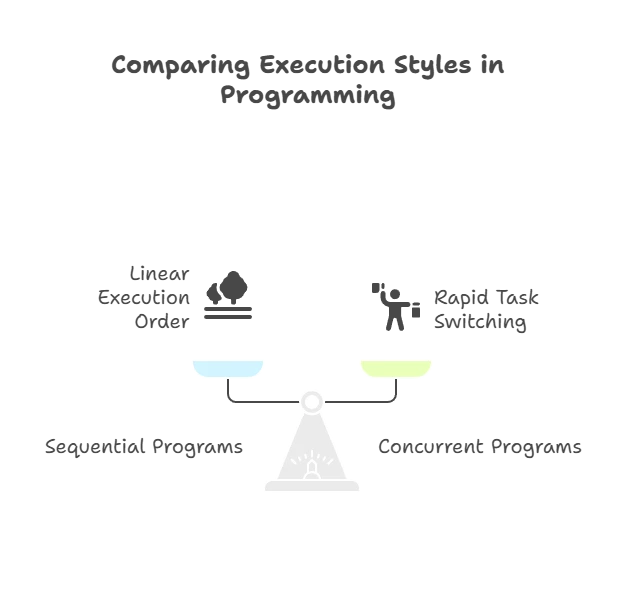

Sequential vs. Concurrent

- Sequential programs execute instructions one after another in a linear fashion.

- Concurrent programs can make progress on multiple tasks (or threads) during the same period of time. On a single CPU core this is not true simultaneous execution but rapid task switching that creates the illusion of parallelism; on multiple cores, threads can run truly in parallel.

Benefits of Concurrency

- Improved responsiveness: Your program remains interactive while performing long-running tasks in the background (e.g., downloading a file while allowing user interaction).

- Performance gains: By utilizing multiple cores or processors, concurrent programs can theoretically solve problems faster.
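As a rough illustration of the performance point, the sketch below splits a summation across two threads. The helper names sumRange and parallelSum are hypothetical, and whether this is actually faster than a plain loop depends on the data size and the hardware.

```cpp
#include <cstddef>
#include <functional>
#include <numeric>
#include <thread>
#include <vector>

// Sums the elements in [first, last) of `data` and stores the result in `out`.
void sumRange(const std::vector<int>& data, std::size_t first, std::size_t last,
              long long& out) {
    out = std::accumulate(data.begin() + static_cast<std::ptrdiff_t>(first),
                          data.begin() + static_cast<std::ptrdiff_t>(last), 0LL);
}

// Hypothetical helper: sums each half of the vector on its own thread.
long long parallelSum(const std::vector<int>& data) {
    const std::size_t mid = data.size() / 2;
    long long left = 0;
    long long right = 0;

    // Each thread writes only to its own result variable, so no locking is needed.
    std::thread t1(sumRange, std::cref(data), std::size_t{0}, mid, std::ref(left));
    std::thread t2(sumRange, std::cref(data), mid, data.size(), std::ref(right));

    t1.join();
    t2.join();
    return left + right;
}
```

Calling parallelSum on a vector holding the numbers 1 through 1000 returns 500500, the same value a sequential loop would produce.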

Challenges of Concurrency

- Complexity: Concurrency introduces new challenges like synchronization (ensuring data consistency when accessed by multiple threads) and race conditions (unpredictable behavior when multiple threads access the same data without proper coordination).

- Debugging: Debugging concurrent programs can be more intricate than sequential ones due to the non-deterministic nature of thread execution.

Building Blocks of Concurrency in C++

Threads

- The basic unit of concurrency in C++. A thread represents a single flow of execution within a program.

- C++ provides the std::thread class (declared in the <thread> header) for creating and managing threads.

Synchronization Primitives

- Mechanisms for coordinating access to shared data between threads.

- Common primitives include:

- Mutexes (Mutual Exclusion): Only one thread can acquire the lock at a time, ensuring exclusive access to a shared resource.

- Condition Variables: Threads can wait on a condition and be notified when it becomes true by another thread.

- Semaphores: Control access to a limited number of resources.
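To make the semaphore idea concrete, here is a minimal sketch of a counting semaphore built from a mutex and a condition variable. The class name SimpleSemaphore and its member names are hypothetical; C++20 ships a ready-made std::counting_semaphore in the <semaphore> header, which would normally be preferred where available.

```cpp
#include <condition_variable>
#include <mutex>

// Minimal counting semaphore sketch (illustrative, not a standard class).
class SimpleSemaphore {
public:
    explicit SimpleSemaphore(int initial) : count_(initial) {}

    // Blocks until a slot is free, then takes it.
    void acquire() {
        std::unique_lock<std::mutex> lock(mtx_);
        cv_.wait(lock, [this] { return count_ > 0; });
        --count_;
    }

    // Takes a slot only if one is free right now; never blocks.
    bool tryAcquire() {
        std::lock_guard<std::mutex> lock(mtx_);
        if (count_ <= 0) return false;
        --count_;
        return true;
    }

    // Returns a slot and wakes one waiting thread, if any.
    void release() {
        {
            std::lock_guard<std::mutex> lock(mtx_);
            ++count_;
        }
        cv_.notify_one();
    }

private:
    std::mutex mtx_;
    std::condition_variable cv_;
    int count_;
};
```

A SimpleSemaphore constructed with 2 lets two callers through; a third tryAcquire() fails until one of them calls release().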

Basics of Concurrency

Thread Creation

A thread is a sequence of instructions that can execute independently of other threads within the same process. In C++, threads are managed using the <thread> header.

    #include <iostream>
    #include <thread>

    // Function to be executed by the thread
    void threadFunction() {
        std::cout << "Hello from thread!" << std::endl;
    }

    int main() {
        // Create a thread and pass the function to execute
        std::thread t(threadFunction);

        // Join the thread with the main thread
        t.join();

        return 0;
    }

// output //

Hello from thread!

Explanation:

- We define a function threadFunction() that will be executed by the thread.

- Inside main(), we create a thread t and pass threadFunction as the function to execute.

- The join() function is called to wait for the thread to finish execution before continuing with the main thread.
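Threads are not limited to functions without parameters. The sketch below, using the hypothetical names greet and runGreeters, shows one thread started with a function plus arguments and another started with a lambda.

```cpp
#include <functional>
#include <string>
#include <thread>
#include <vector>

// Hypothetical worker: stores a greeting for `name` in `out`.
void greet(const std::string& name, std::string& out) {
    out = "Hello, " + name + "!";
}

// Starts one thread with a function plus arguments and one with a lambda.
std::vector<std::string> runGreeters() {
    std::string first;
    std::string second;

    // Arguments are copied into the new thread by default;
    // std::ref is needed to pass `first` by reference.
    std::thread t1(greet, std::string("Ada"), std::ref(first));

    // A lambda can capture local variables directly.
    std::thread t2([&second] { second = "Hello from a lambda!"; });

    // Both results are safe to read only after the threads have been joined.
    t1.join();
    t2.join();
    return {first, second};
}
```

After runGreeters() returns, the vector holds "Hello, Ada!" followed by "Hello from a lambda!".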

Synchronization in Concurrency

Mutexes

Mutexes (short for mutual exclusion) are synchronization primitives used to protect shared resources from being accessed simultaneously by multiple threads. In C++, mutexes are provided by the <mutex> header.

    #include <iostream>
    #include <thread>
    #include <mutex>

    std::mutex mtx;

    // Function to be executed by the thread
    void threadFunction() {
        mtx.lock(); // Acquire the mutex
        std::cout << "Hello from thread!" << std::endl;
        mtx.unlock(); // Release the mutex
    }

    int main() {
        std::thread t(threadFunction);

        mtx.lock(); // Acquire the mutex before printing
        std::cout << "Hello from main!" << std::endl;
        mtx.unlock(); // Release the mutex after printing

        t.join();

        return 0;
    }

// output (one possible order; the two lines may appear in either order) //

Hello from main!

Hello from thread!

Explanation:

- We define a mutex mtx to protect access to the shared std::cout object.

- Inside threadFunction(), the thread acquires the mutex before printing, ensuring exclusive access to the std::cout object.

- In main(), the main thread acquires the mutex before printing, preventing interleaved output with the thread.

- The mutex is released after printing to allow other threads to acquire it.
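Calling lock() and unlock() by hand leaves the mutex locked if the code in between returns early or throws. A common alternative is std::lock_guard, which releases the mutex automatically when it goes out of scope. The following is a small sketch; the names logMutex, logLines, addLine, and fillLog are made up for illustration.

```cpp
#include <cstddef>
#include <mutex>
#include <string>
#include <thread>
#include <vector>

std::mutex logMutex;               // protects `logLines`
std::vector<std::string> logLines; // shared between threads

// Appends one line. The lock_guard unlocks in its destructor,
// even if push_back throws.
void addLine(const std::string& line) {
    std::lock_guard<std::mutex> guard(logMutex);
    logLines.push_back(line);
}

// Hypothetical driver: two threads each append `perThread` lines.
std::size_t fillLog(int perThread) {
    logLines.clear();
    auto worker = [perThread](const std::string& tag) {
        for (int i = 0; i < perThread; ++i) addLine(tag);
    };
    std::thread a(worker, "A");
    std::thread b(worker, "B");
    a.join();
    b.join();
    return logLines.size();
}
```

With the guard in place, fillLog(500) reliably reports 1000 lines, because the two threads never modify the vector at the same time.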

Advanced Concurrency Concepts

Condition Variables

Condition variables are synchronization primitives used to coordinate the execution of multiple threads. They allow threads to wait for a certain condition to become true before proceeding.

    #include <iostream>
    #include <thread>
    #include <mutex>
    #include <condition_variable>

    std::mutex mtx;
    std::condition_variable cv;
    bool ready = false;

    // Function to be executed by the thread
    void threadFunction() {
        std::unique_lock<std::mutex> lck(mtx);
        while (!ready) {
            cv.wait(lck);
        }
        std::cout << "Hello from thread!" << std::endl;
    }

    int main() {
        std::thread t(threadFunction);

        {
            std::lock_guard<std::mutex> lck(mtx);
            ready = true;
        }
        cv.notify_one();

        t.join();

        return 0;
    }

// output //

Hello from thread!

Explanation:

- We define a condition variable cv along with a mutex mtx to protect access to the shared variable ready.

- Inside threadFunction(), the thread waits on the condition variable cv until ready becomes true. The while loop re-checks ready after every wake-up, because wait() is allowed to return spuriously.

- In main(), the main thread sets ready to true while holding the mutex and then notifies the waiting thread using notify_one().
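The same wait-and-notify pattern scales to passing data between threads. The sketch below is a hypothetical single-producer, single-consumer hand-off (the function name sumViaQueue is invented here) that uses the predicate overload of wait(), which wraps the re-check loop for you.

```cpp
#include <condition_variable>
#include <mutex>
#include <queue>
#include <thread>

// Pushes 1..itemCount through a queue to a consumer thread and returns their sum.
int sumViaQueue(int itemCount) {
    std::mutex mtx;
    std::condition_variable cv;
    std::queue<int> items; // shared data, guarded by mtx
    bool done = false;     // set by the producer once it has finished
    int total = 0;         // written by the consumer, read after join()

    std::thread consumer([&] {
        std::unique_lock<std::mutex> lck(mtx);
        for (;;) {
            // The predicate is re-evaluated after every wake-up, so spurious
            // wake-ups are handled without a hand-written while loop.
            cv.wait(lck, [&] { return !items.empty() || done; });
            while (!items.empty()) {
                total += items.front();
                items.pop();
            }
            if (done) break;
        }
    });

    for (int i = 1; i <= itemCount; ++i) {
        {
            std::lock_guard<std::mutex> guard(mtx);
            items.push(i);
        }
        cv.notify_one();
    }
    {
        std::lock_guard<std::mutex> guard(mtx);
        done = true;
    }
    cv.notify_one();

    consumer.join();
    return total;
}
```

For example, sumViaQueue(100) returns 5050 regardless of how the two threads happen to interleave.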

Race Conditions and Deadlocks

Race Conditions

A race condition occurs when the outcome of a program depends on the relative timing of operations executed by multiple threads. It can lead to unpredictable behavior and bugs that are difficult to reproduce.

    #include <iostream>
    #include <thread>

    int counter = 0;

    // Function to be executed by the thread
    void incrementCounter() {
        for (int i = 0; i < 1000; ++i) {
            counter++; // Race condition: multiple threads accessing shared variable
        }
    }

    int main() {
        std::thread t1(incrementCounter);
        std::thread t2(incrementCounter);

        t1.join();
        t2.join();

        std::cout << "Counter value: " << counter << std::endl;

        return 0;
    }

// output (one possible result; the value can differ from run to run) //

Counter value: 1400

Explanation:

- Two threads t1 and t2 increment the shared variable counter concurrently.

- Due to the lack of synchronization, a race condition occurs where both threads may read and write to counter simultaneously, leading to unpredictable results. An increment is a read-modify-write sequence, so one thread can overwrite an update made by the other.

- The final value of counter depends on the interleaving of operations by the two threads.
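One way to remove the race is to guard every increment with a mutex. The sketch below is one possible fix, not the only one; the function name countWithMutex is hypothetical.

```cpp
#include <mutex>
#include <thread>

// Runs two threads that each add `perThread` to a shared counter under a mutex.
int countWithMutex(int perThread) {
    int counter = 0;
    std::mutex counterMutex; // protects `counter`

    auto increment = [&] {
        for (int i = 0; i < perThread; ++i) {
            // Only one thread at a time can hold the lock and update counter.
            std::lock_guard<std::mutex> guard(counterMutex);
            ++counter;
        }
    };

    std::thread t1(increment);
    std::thread t2(increment);
    t1.join();
    t2.join();
    return counter;
}
```

With the lock in place, countWithMutex(1000) returns 2000 on every run.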

Deadlocks

A deadlock occurs when two or more threads are unable to proceed because each is waiting for the other to release a resource, resulting in a cyclic dependency.

    #include <iostream>
    #include <thread>
    #include <mutex>
    #include <chrono> // std::chrono::milliseconds

    std::mutex mtx1, mtx2;

    // Function to be executed by the first thread
    void threadFunction1() {
        mtx1.lock();
        std::this_thread::sleep_for(std::chrono::milliseconds(100));
        mtx2.lock(); // Blocks: mtx2 is held by the second thread
        std::cout << "Thread 1 acquired both mutexes." << std::endl;
        mtx1.unlock();
        mtx2.unlock();
    }

    // Function to be executed by the second thread
    void threadFunction2() {
        mtx2.lock();
        std::this_thread::sleep_for(std::chrono::milliseconds(100));
        mtx1.lock(); // Deadlock: second thread waiting for mtx1, which is held by first thread
        std::cout << "Thread 2 acquired both mutexes." << std::endl;
        mtx2.unlock();
        mtx1.unlock();
    }

    int main() {
        std::thread t1(threadFunction1);
        std::thread t2(threadFunction2);

        t1.join();
        t2.join();

        return 0;
    }

// output //

[No output, program hangs indefinitely]

Explanation:

- Two threads t1 and t2 attempt to acquire two mutexes mtx1 and mtx2, but in opposite order.

- As a result, a deadlock occurs: t1 holds mtx1 and waits for mtx2, while t2 holds mtx2 and waits for mtx1.

- Neither thread can proceed, leading to a deadlock situation where both threads are blocked indefinitely.
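One way to avoid this situation is to acquire both mutexes in a single step with std::lock, which uses a deadlock-avoidance algorithm. The sketch below assumes hypothetical names (mtxA, mtxB, sharedTotal, and the three functions); in C++17 and later, std::scoped_lock expresses the same idea in one line.

```cpp
#include <mutex>
#include <thread>

std::mutex mtxA, mtxB;
int sharedTotal = 0; // guarded by both mutexes in this sketch

// std::lock acquires both mutexes without deadlocking, whatever the argument order.
void addFirstOrder(int amount) {
    std::lock(mtxA, mtxB);
    // adopt_lock tells the guards that the mutexes are already locked.
    std::lock_guard<std::mutex> g1(mtxA, std::adopt_lock);
    std::lock_guard<std::mutex> g2(mtxB, std::adopt_lock);
    sharedTotal += amount;
}

// Same operation, but the mutexes are named in the opposite order.
void addSecondOrder(int amount) {
    std::lock(mtxB, mtxA);
    std::lock_guard<std::mutex> g1(mtxB, std::adopt_lock);
    std::lock_guard<std::mutex> g2(mtxA, std::adopt_lock);
    sharedTotal += amount;
}

// Hypothetical driver: runs both functions concurrently `rounds` times each.
int runBoth(int rounds) {
    sharedTotal = 0;
    std::thread t1([rounds] { for (int i = 0; i < rounds; ++i) addFirstOrder(1); });
    std::thread t2([rounds] { for (int i = 0; i < rounds; ++i) addSecondOrder(1); });
    t1.join();
    t2.join();
    return sharedTotal;
}
```

Even though the two functions name the mutexes in opposite order, runBoth(1000) finishes and returns 2000 instead of hanging.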

Important Terms

Data Races and Synchronization:

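- Data Race: Occurs when two or more threads access the same memory location concurrently, at least one of the accesses is a write, and nothing synchronizes them. In C++ a data race is undefined behavior, so it can lead to unexpected and incorrect results.

- Synchronization: Using mutexes, condition variables, or other primitives to coordinate threads, for example by ensuring that only one thread can access a shared resource at a time.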

Atomics:

- Provide a way to perform operations on variables in a thread-safe manner, guaranteeing that the operation is indivisible from the perspective of other threads.
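For a simple shared counter, an atomic variable can replace a mutex entirely. The following sketch uses std::atomic<int> from the <atomic> header; the function name countWithAtomic is hypothetical.

```cpp
#include <atomic>
#include <thread>

// Runs two threads that each add `perThread` to an atomic counter.
int countWithAtomic(int perThread) {
    std::atomic<int> counter{0};

    auto increment = [&] {
        for (int i = 0; i < perThread; ++i) {
            // fetch_add is a single indivisible read-modify-write operation.
            counter.fetch_add(1);
        }
    };

    std::thread t1(increment);
    std::thread t2(increment);
    t1.join();
    t2.join();
    return counter.load();
}
```

Because no increment can be lost, countWithAtomic(10000) returns 20000.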

Thread Pools:

- Manage a pool of worker threads that can be reused for executing tasks, improving efficiency compared to creating and destroying threads frequently.
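The C++ standard library does not currently include a thread pool class, so the following is only a minimal sketch of the idea under simple assumptions: a fixed number of workers, tasks of type std::function<void()>, and no way to return results. The class name SimpleThreadPool and its members are hypothetical.

```cpp
#include <condition_variable>
#include <cstddef>
#include <functional>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// Minimal fixed-size thread pool sketch (illustrative, not a standard class).
class SimpleThreadPool {
public:
    explicit SimpleThreadPool(std::size_t workerCount) {
        for (std::size_t i = 0; i < workerCount; ++i) {
            workers_.emplace_back([this] { workerLoop(); });
        }
    }

    // Lets the workers finish all queued tasks, then joins them.
    ~SimpleThreadPool() {
        {
            std::lock_guard<std::mutex> lock(mtx_);
            stopping_ = true;
        }
        cv_.notify_all();
        for (std::thread& w : workers_) w.join();
    }

    SimpleThreadPool(const SimpleThreadPool&) = delete;
    SimpleThreadPool& operator=(const SimpleThreadPool&) = delete;

    // Queues a task for execution on one of the worker threads.
    void submit(std::function<void()> task) {
        {
            std::lock_guard<std::mutex> lock(mtx_);
            tasks_.push(std::move(task));
        }
        cv_.notify_one();
    }

private:
    void workerLoop() {
        for (;;) {
            std::function<void()> task;
            {
                std::unique_lock<std::mutex> lock(mtx_);
                cv_.wait(lock, [this] { return stopping_ || !tasks_.empty(); });
                if (tasks_.empty()) return; // only reached when stopping_ is true
                task = std::move(tasks_.front());
                tasks_.pop();
            }
            task(); // run outside the lock so other workers can proceed
        }
    }

    std::mutex mtx_;
    std::condition_variable cv_;
    std::queue<std::function<void()>> tasks_;
    bool stopping_ = false;
    std::vector<std::thread> workers_;
};
```

A typical use is to construct the pool once, call submit() for each unit of work, and let the destructor wait for the remaining tasks when the pool goes out of scope.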

Locks:

- A broader term encompassing mutexes, spinlocks, read-write locks, etc., each with specific use cases for different synchronization needs.

Futures and Asynchronous Tasks:

- Futures represent the eventual result of an asynchronous operation (an operation that doesn’t block the calling thread).

- You can launch a task using std::async and obtain a std::future object that holds the result when it becomes available.
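As a small sketch of this pattern, the code below runs a computation through std::async and collects the result from the returned future. The function names sumOfSquares and computeInBackground are made up for the example.

```cpp
#include <future>

// Hypothetical task: computes 1^2 + 2^2 + ... + n^2.
long long sumOfSquares(int n) {
    long long total = 0;
    for (int i = 1; i <= n; ++i) total += static_cast<long long>(i) * i;
    return total;
}

long long computeInBackground(int n) {
    // std::launch::async requests a separate thread; without it the
    // implementation may defer the call until get() is invoked.
    std::future<long long> result = std::async(std::launch::async, sumOfSquares, n);

    // ... the calling thread is free to do other work here ...

    return result.get(); // blocks until the value is ready
}
```

For instance, computeInBackground(10) returns 385. If the task throws an exception, get() rethrows it in the calling thread.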

Thread-Local Storage (TLS):

- C++ provides thread-local storage (TLS) through the thread_local keyword, which gives each thread its own copy of a variable. This is useful for storing thread-specific information without worrying about conflicts with other threads.
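A short sketch of thread-local storage follows. The variable callsOnThisThread and the two functions are hypothetical names; the point is that a thread_local variable starts fresh in every thread.

```cpp
#include <thread>

thread_local int callsOnThisThread = 0; // every thread gets its own copy

// Increments and returns the calling thread's private counter.
int recordCall() {
    return ++callsOnThisThread;
}

// Returns the count seen by a newly started thread after `n` calls.
int callsSeenByNewThread(int n) {
    int seen = 0;
    std::thread t([&seen, n] {
        for (int i = 0; i < n; ++i) seen = recordCall();
    });
    t.join();
    return seen;
}
```

Calls made by the new thread do not affect the caller's counter: the new thread always counts from zero, and the calling thread continues from wherever its own count stood.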

Parallel Algorithms:

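- Since C++17, the C++ standard library offers parallel versions of many algorithms (e.g., std::sort, std::transform). They accept an execution policy such as std::execution::par from the <execution> header and can leverage multiple cores to improve performance on suitable tasks.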

Executors and Task Schedulers:

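- Executors and task schedulers are high-level abstractions for managing thread pools and simplifying concurrency tasks. They are not part of C++20; a sender/receiver-based framework (std::execution) has been adopted for C++26, and until it is widely available these abstractions typically come from third-party libraries.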

Best Practices for Concurrency

Avoid Global Variables: Minimize the use of global variables accessed by multiple threads to reduce the risk of race conditions.

Use Mutexes Carefully: Ensure that critical sections of code are properly protected by mutexes to prevent race conditions.

Avoid Deadlocks: Be cautious when acquiring multiple mutexes to avoid potential deadlocks. Use techniques like lock hierarchy to establish a consistent order for acquiring mutexes.

Concurrency in C++ offers powerful capabilities for developing responsive and efficient applications. By understanding and applying concepts like threads, mutexes, and condition variables, you can harness the benefits of concurrent programming while mitigating risks such as race conditions and deadlocks. However, concurrency introduces complexities that require careful consideration and adherence to best practices. With the knowledge gained from this chapter, you're well-equipped to leverage concurrency effectively in your C++ projects, enhancing their performance and scalability. Happy coding! ❤️