Parallel Computing with Dask

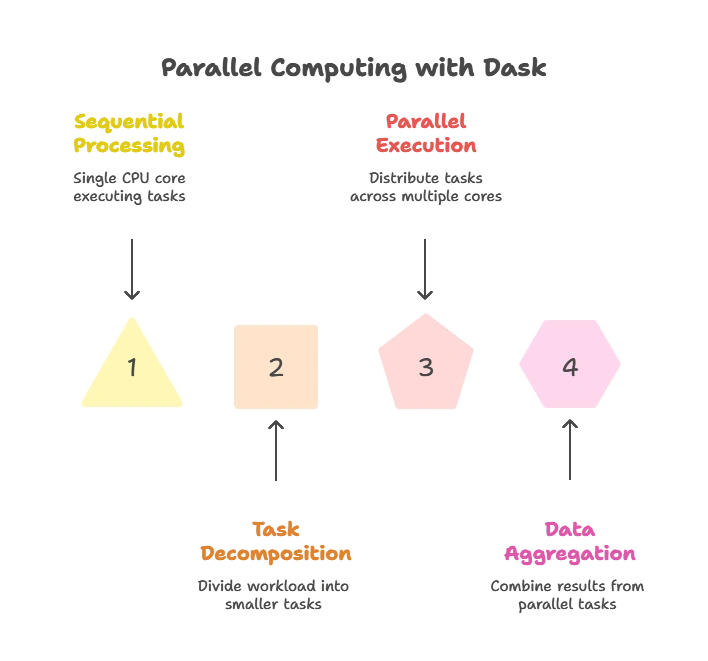

Parallel computing is a powerful technique for improving the performance and scalability of computational tasks by dividing them into smaller, independent parts that can be executed concurrently. In Python, the Dask library provides a flexible framework for parallel and distributed computing, enabling efficient processing of large datasets and complex computations.

Introduction to Parallel Computing

In this section, we’ll explore the concept of parallel computing and how it can be leveraged in Python using the Dask library. We’ll start with the basics and gradually delve into more advanced topics, providing examples along the way.

What is Parallel Computing?

Parallel computing is a computing technique where multiple calculations or processes are carried out simultaneously. This allows tasks to be completed faster by dividing the workload among multiple computing resources, such as CPUs or GPUs. Parallel computing is essential for handling large datasets, performing complex computations, and improving the performance of applications.

Introduction to Dask

Dask is a flexible library for parallel computing in Python that runs tasks across multiple cores on one machine or across the nodes of a cluster. It provides high-level collections, such as arrays and dataframes, for working with large datasets and performing parallel computations efficiently. These collections build on NumPy and Pandas and mirror much of their APIs, and Dask also works alongside Scikit-learn, which makes it easy to integrate into existing workflows.

Getting Started with Dask

In this section, we’ll cover the basics of working with Dask, including installation, creating Dask arrays and dataframes, and performing basic operations.

Installation

To install Dask, you can use pip:

pip install dask

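Note that this installs only the Dask core. Dask arrays need NumPy and Dask dataframes need Pandas, so install those libraries alongside Dask, or run pip install "dask[complete]" to pull in the common optional dependencies, including the distributed scheduler.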

Creating Dask Arrays

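Dask arrays are chunked, parallel arrays that implement much of the NumPy array interface and extend it to larger-than-memory datasets. Each Dask array is made up of many smaller NumPy arrays, called chunks, that can be processed independently. You can create Dask arrays using the dask.array module: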

import dask.array as da

# Create a 1000 x 1000 Dask array of random values, split into 100 x 100 chunks
x = da.random.random((1000, 1000), chunks=(100, 100))

Dask arrays are lazily evaluated: the line above only records how to build x, and no random numbers are generated until you ask for a result, for example by calling x.compute().
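To make the deferred computation concrete, here is a minimal sketch that builds on the array above. The expression combining x with its transpose is only an illustration chosen for this example, not something the article prescribes.

```python
import dask.array as da

# Same array as above: 1000 x 1000 values in a 10 x 10 grid of chunks.
x = da.random.random((1000, 1000), chunks=(100, 100))
print(x.numblocks)   # (10, 10)
print(x.chunksize)   # (100, 100)

# Building an expression is cheap: this only extends the task graph.
y = (x + x.T).mean(axis=0)

# compute() runs the graph and returns an ordinary NumPy array.
result = y.compute()
print(result.shape)  # (1000,)
```

Calling compute() is the point where the random numbers are actually generated and reduced, so it is usually best to build the whole expression first and compute once at the end.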

Parallel Computing with Dask

In this section, we’ll dive deeper into parallel computing with Dask, exploring concepts such as task graphs, lazy evaluation, and parallel execution.

Task Graphs

Dask represents computations as task graphs, which are directed acyclic graphs (DAGs) that describe the relationships between tasks. Each node in the graph represents a task, and edges represent dependencies between tasks. Because the graph makes these dependencies explicit, the scheduler can run tasks that do not depend on each other at the same time.
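A small graph can be built by hand with dask.delayed, as in the sketch below. The functions inc and add are hypothetical helpers made up for this example, and the exact key names Dask generates vary between runs.

```python
from dask import delayed

@delayed
def inc(n):
    # Hypothetical helper: add one to its input.
    return n + 1

@delayed
def add(a, b):
    # Hypothetical helper: combine two upstream results.
    return a + b

# Two independent tasks feeding a third: a tiny DAG.
left = inc(1)
right = inc(2)
total = add(left, right)

# The graph behaves like a mapping from task keys to tasks.
graph = dict(total.__dask_graph__())
for key in graph:
    print(key)

print(total.compute())  # 5
```

Here left and right have no dependency on each other, so a scheduler is free to run them concurrently before add. If the optional graphviz dependency is installed, total.visualize() can render the same graph as an image.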

Lazy Evaluation

Dask uses lazy evaluation to delay computation until necessary. Instead of executing operations immediately, Dask builds up a task graph representing the computation and evaluates it only when you ask for a result, typically by calling compute(). Because Dask sees the whole computation before running it, it can process data chunk by chunk to limit memory usage and parallelize the work efficiently.
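One way to observe lazy evaluation is to record when a function actually runs. The sketch below uses a hypothetical record_square function and the single-threaded "synchronous" scheduler so the behavior is easy to follow.

```python
from dask import delayed

calls = []  # remembers which inputs have actually been processed

def record_square(n):
    # Hypothetical function with a visible side effect.
    calls.append(n)
    return n * n

# Wrapping the calls in delayed() builds the graph without running anything.
lazy_squares = [delayed(record_square)(n) for n in range(4)]
total = delayed(sum)(lazy_squares)
print(calls)          # [] because nothing has executed yet

# Only compute() triggers execution.
result = total.compute(scheduler="synchronous")
print(result)         # 14
print(sorted(calls))  # [0, 1, 2, 3]
```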

Parallel Execution

Dask executes computations in parallel by distributing tasks across multiple cores or nodes in a cluster. On a single machine it runs tasks on a pool of threads or processes; on a cluster, a distributed scheduler assigns tasks to workers. In both cases Dask handles data partitioning, task scheduling, and communication between workers for you, so the same code can scale from a single machine to a large cluster.
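On a single machine, the scheduler can be chosen per call. The sketch below compares the single-threaded scheduler with a thread pool; slow_double is a hypothetical stand-in for real work, and the timings it prints will differ from machine to machine.

```python
import time

import dask
from dask import delayed

def slow_double(n):
    # Hypothetical task that waits briefly, then doubles its input.
    time.sleep(0.1)
    return 2 * n

tasks = [delayed(slow_double)(n) for n in range(8)]

# Run the tasks one after another in the calling thread.
start = time.perf_counter()
serial = dask.compute(*tasks, scheduler="synchronous")
print(f"synchronous: {time.perf_counter() - start:.2f}s")

# Run the same tasks on a pool of four threads.
start = time.perf_counter()
threaded = dask.compute(*tasks, scheduler="threads", num_workers=4)
print(f"threads:     {time.perf_counter() - start:.2f}s")

print(serial == threaded)  # True: same results, different execution strategy
```

Threads work well when tasks release the GIL, as sleeping, I/O, and most NumPy operations do. For pure-Python, CPU-bound work, scheduler="processes" is usually the better fit.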

Advanced Topics in Dask

In this section, we’ll explore advanced topics in Dask, including custom workflows, distributed computing, and integration with other libraries.

Custom Workflows

Dask provides flexibility for creating custom workflows tailored to specific use cases. You can define custom operations, optimize task graphs, and fine-tune parallel execution to meet your requirements. Custom workflows enable you to harness the full power of Dask for complex computations and data processing tasks.
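As one illustration of a custom operation, map_blocks applies your own function to every chunk of a Dask array. The clip_and_scale function and its limit parameter below are hypothetical, chosen only to show the pattern.

```python
import numpy as np
import dask.array as da

def clip_and_scale(block, limit=1.0):
    # Hypothetical custom operation: runs once per chunk,
    # and each chunk arrives as a plain NumPy array.
    return np.clip(block, -limit, limit) / limit

data = np.arange(-8, 8, dtype=float).reshape(4, 4)
x = da.from_array(data, chunks=(2, 2))

# Extra keyword arguments (here, limit) are forwarded to the function.
y = x.map_blocks(clip_and_scale, limit=4.0, dtype=float)

result = y.compute()
print(result.min(), result.max())  # -1.0 1.0
```

This pattern only fits operations that can be applied to each chunk independently. Workflows that do not fit the array model can be assembled task by task with dask.delayed instead.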

Distributed Computing

Dask supports distributed computing across multiple machines in a cluster, enabling scalability and resilience to worker failures for large-scale data processing. The dask.distributed scheduler coordinates the workers, and you can deploy Dask clusters on resource managers such as Kubernetes or YARN. Distributed computing with Dask lets you handle datasets and computations that are too large for a single machine.
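The distributed scheduler can be tried on one machine before moving to a real cluster. The following sketch assumes the optional distributed package is installed; the worker counts are arbitrary, and processes=False keeps the workers in the current process to keep the example light.

```python
import dask.array as da
from dask.distributed import Client, LocalCluster

# Start a small local cluster; dashboard_address=None turns off the dashboard.
with LocalCluster(
    n_workers=2,
    threads_per_worker=1,
    processes=False,
    dashboard_address=None,
) as cluster, Client(cluster) as client:
    # While a Client is active, compute() sends work to its cluster.
    x = da.ones((400, 400), chunks=(100, 100))
    total = x.sum().compute()

print(total)  # 160000.0
```

To run the same code on a real cluster, the usual approach is to pass the scheduler's address to Client instead of creating a LocalCluster.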

Integration with Other Libraries

Dask integrates with other Python libraries like NumPy, Pandas, and Scikit-learn, allowing you to parallelize existing workflows without significant changes to your code. Dask arrays and dataframes implement a large subset of the NumPy and Pandas APIs, so much existing code carries over with small changes, although they are not complete drop-in replacements: some operations are unsupported, and results stay lazy until you call compute().
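A short sketch of the NumPy integration: NumPy functions applied to a Dask array return another lazy Dask array rather than computing immediately. The small input array is made up for the example.

```python
import numpy as np
import dask.array as da

data = np.arange(1, 17, dtype=float).reshape(4, 4)
x = da.from_array(data, chunks=(2, 2))

# A NumPy ufunc applied to a Dask array stays lazy and returns a Dask array.
lazy_root = np.sqrt(x)
print(isinstance(lazy_root, da.Array))  # True

# The same expression written for NumPy and for Dask gives matching results.
numpy_result = np.sqrt(data).sum(axis=1)
dask_result = lazy_root.sum(axis=1).compute()
print(np.allclose(numpy_result, dask_result))  # True
```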

In this article, we've covered the fundamentals of parallel computing with Dask, from basic concepts to advanced topics. We've explored how Dask combines lazy evaluation, task graphs, and parallel and distributed execution, making it a powerful tool for scalable and efficient data processing in Python. Happy coding! ❤️