Web Scraping with Node.js

Web scraping is a technique used to extract data from websites, and Node.js is a popular choice for creating efficient web scrapers due to its asynchronous nature, vast package ecosystem, and ease of use.

Introduction to Web Scraping

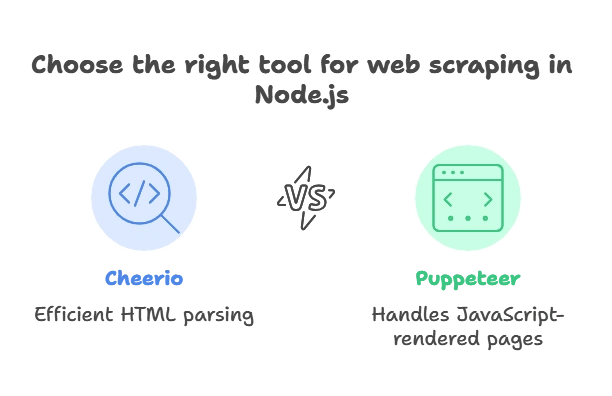

Web scraping is used to programmatically gather data from websites for purposes such as price comparison, content aggregation, and market research. In Node.js, a scraper usually combines an HTTP client, which downloads the page, with a library that parses and queries the HTML. Cheerio is a popular choice for parsing static HTML, and Puppeteer for pages whose content is rendered by JavaScript.

Setting Up the Project

To start, let’s set up a basic Node.js project.

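1. Create a new directory and initialize npm:

```bash
mkdir node-web-scraper
cd node-web-scraper
npm init -y
```

2. Install the necessary packages. For this example, we’ll install axios and cheerio, plus puppeteer for handling dynamic content. Puppeteer is optional: installing it also downloads a compatible browser build, so leave it out if you only need static pages.

```bash
npm install axios cheerio puppeteer
```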

Understanding HTTP Requests in Node.js

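At the core of web scraping is making HTTP requests to retrieve web pages. Node.js ships with built-in http and https modules (and, since Node.js 18, a global fetch function), but many scrapers use a third-party client such as axios for its simpler promise-based API. The older request package is deprecated and best avoided in new projects.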
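For comparison, here is a minimal dependency-free sketch built on Node.js’s built-in http and https modules. `fetchText` is a hypothetical helper, not a library API. It assumes a UTF-8 response body and does not follow redirects: any non-2xx status, including 301 and 302, is treated as an error. Handling redirects is one of the chores axios takes care of for you.

```javascript
const http = require('http');
const https = require('https');

// Hypothetical helper: GET a URL and resolve with the body as a string.
// Assumes a UTF-8 body; redirects are NOT followed (they reject like other non-2xx codes).
function fetchText(url) {
  // Pick the built-in client that matches the URL scheme
  const client = new URL(url).protocol === 'https:' ? https : http;
  return new Promise((resolve, reject) => {
    const req = client.get(url, (res) => {
      if (res.statusCode < 200 || res.statusCode >= 300) {
        res.resume(); // drain the response so the socket is released
        reject(new Error(`Request failed with status ${res.statusCode}`));
        return;
      }
      res.setEncoding('utf8');
      let body = '';
      res.on('data', (chunk) => { body += chunk; });
      res.on('end', () => resolve(body));
      res.on('error', reject);
    });
    req.on('error', reject); // DNS failures, refused connections, etc.
  });
}

// Usage:
// fetchText('https://example.com')
//   .then((html) => console.log(html))
//   .catch((error) => console.error('Error fetching the page:', error.message));
```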

Example: Making a Basic HTTP Request with axios

```javascript
const axios = require('axios');

axios.get('https://example.com')
  .then(response => {
    console.log(response.data); // Prints the HTML content of the page
  })
  .catch(error => {
    console.error('Error fetching the page:', error.message);
  });
```

Output:

```
<!doctype html><html>...Contents of example.com...</html>
```

Choosing and Using Modules for Web Scraping

For efficient web scraping, we need modules that help us make requests and parse the HTML responses.

Using axios

axios is a promise-based HTTP client that simplifies making requests to a server.

Example:

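```javascript
const axios = require('axios');

// Download a page and return its HTML, or null if the request fails
async function fetchPage(url) {
  try {
    const response = await axios.get(url);
    return response.data;
  } catch (error) {
    console.error('Error fetching the page:', error.message);
    return null;
  }
}

fetchPage('https://example.com').then(html => {
  if (html) console.log(html); // skip printing when the fetch failed
});
```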

Using cheerio for HTML Parsing

cheerio is a lightweight library that parses HTML and provides a jQuery-like interface to navigate and manipulate HTML.

1. Example: Extracting the Title of a Page

```javascript
const cheerio = require('cheerio');
const axios = require('axios');

async function scrapeTitle(url) {
  const response = await axios.get(url);
  const $ = cheerio.load(response.data); // parse the HTML into a queryable document
  const title = $('title').text().trim();
  console.log('Page Title:', title);
}

scrapeTitle('https://example.com')
  .catch(error => console.error('Scraping failed:', error.message));
```

Output:

Page Title: Example Domain

2. Extracting Specific Data (Links, Text, etc.)

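```javascript
const cheerio = require('cheerio');
const axios = require('axios');

async function scrapeLinks(url) {
  const response = await axios.get(url);
  const $ = cheerio.load(response.data);

  // Print the href of every <a> element, skipping anchors without one
  $('a').each((index, element) => {
    const href = $(element).attr('href');
    if (href) console.log(href);
  });
}

scrapeLinks('https://example.com')
  .catch(error => console.error('Scraping failed:', error.message));
```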

Output (illustrative; the actual links depend on the page):

```
/link1
/link2
https://externalwebsite.com
```
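Scraped href values are often relative (like /link1 above), so they need to be resolved against the page URL before you can request them. Here is a minimal sketch using the WHATWG URL class built into Node.js. `resolveLinks` is a hypothetical helper, and dropping fragments and keeping only http/https links are assumptions you may want to change.

```javascript
// Hypothetical helper: turn raw href values into unique absolute URLs.
function resolveLinks(hrefs, pageUrl) {
  const seen = new Set(); // a Set keeps first-seen order and removes duplicates
  for (const href of hrefs) {
    if (!href) continue; // anchors without an href yield undefined
    try {
      const absolute = new URL(href, pageUrl); // resolve relative to the page
      if (absolute.protocol === 'http:' || absolute.protocol === 'https:') {
        absolute.hash = ''; // treat /page#a and /page#b as the same page
        seen.add(absolute.href);
      }
    } catch {
      // Skip values the URL parser rejects
    }
  }
  return [...seen];
}
```

With cheerio, the input array could come from `$('a').map((i, el) => $(el).attr('href')).get()`.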

Handling JavaScript-Rendered Content with Puppeteer

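Some websites rely on JavaScript to render content dynamically, so the HTML returned by a plain HTTP request may not contain the data you see in the browser. Puppeteer is a Node.js library that controls a real Chrome or Chromium browser (headless by default), letting the page’s scripts run before we read its content.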

Example: Scraping JavaScript-Rendered Content

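```javascript
const puppeteer = require('puppeteer');

async function scrapeDynamicContent(url) {
  const browser = await puppeteer.launch();
  try {
    const page = await browser.newPage();
    await page.goto(url, { waitUntil: 'networkidle2' });
    const content = await page.content(); // HTML after the page's scripts have run
    console.log(content);
  } finally {
    await browser.close(); // always release the browser, even if navigation fails
  }
}

scrapeDynamicContent('https://example.com')
  .catch(error => console.error('Scraping failed:', error.message));
```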

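This code launches a headless browser, navigates to the specified URL, and waits until the network is mostly idle (networkidle2: no more than two network connections for at least 500 ms) before capturing the rendered HTML with page.content().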

Managing and Parsing HTML Content

With cheerio, we can extract text, attributes, and nested data. Here’s an example that retrieves each image’s source URL and alt text.

```javascript
const cheerio = require('cheerio');
const axios = require('axios');

async function scrapeImages(url) {
  const response = await axios.get(url);
  const $ = cheerio.load(response.data);

  $('img').each((index, element) => {
    const src = $(element).attr('src');
    const alt = $(element).attr('alt') || '(no alt text)'; // attr() is undefined when missing
    console.log('Image src:', src, 'Alt text:', alt);
  });
}

scrapeImages('https://example.com')
  .catch(error => console.error('Scraping failed:', error.message));
```

Data Extraction and Storage

Once the data is extracted, you need to store it somewhere. Here’s how to save it to a JSON file using Node.js’s built-in fs module.

Example: Saving Data to a JSON File

```javascript
const fs = require('fs');

const data = {
  title: 'Example Domain',
  links: ['https://example.com', '/link2']
};

// Pretty-print with 2-space indentation and write the file synchronously
fs.writeFileSync('scrapedData.json', JSON.stringify(data, null, 2), 'utf-8');
console.log('Data saved to scrapedData.json');
```
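For longer scraping runs, writing one big JSON file at the end means a crash loses everything collected so far. One common alternative is JSON Lines: append each record as a single line as soon as it is scraped. `appendRecord` and `readRecords` are hypothetical helpers in this sketch. Because JSON.stringify escapes newlines inside strings, each record always fits on one line.

```javascript
const fs = require('fs');

// Hypothetical helper: append one record as a single JSON line.
// Each call writes immediately, so earlier records survive a later crash.
function appendRecord(file, record) {
  fs.appendFileSync(file, JSON.stringify(record) + '\n', 'utf-8');
}

// Hypothetical helper: read a JSON Lines file back into an array of objects.
function readRecords(file) {
  return fs.readFileSync(file, 'utf-8')
    .split('\n')
    .filter((line) => line.trim() !== '') // ignore the trailing empty line
    .map((line) => JSON.parse(line));
}
```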

Ethical and Legal Considerations in Web Scraping

It’s important to consider the legality and ethics of web scraping:

- Check the Website’s Terms of Service: Ensure that scraping isn’t prohibited.

- Use Rate Limiting: Avoid overloading the server by implementing delays.

- Respect `robots.txt`: Many websites specify which parts are off-limits in their `robots.txt` file.
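A robots.txt file lives at the root of a site (for example https://example.com/robots.txt) and can be downloaded with any of the HTTP clients above. The sketch below is a deliberately simplified checker, not a complete implementation of the Robots Exclusion Protocol (RFC 9309): it reads only the "User-agent: *" group, treats Disallow values as plain path prefixes, and ignores Allow lines, the * and $ wildcards, and Crawl-delay. `parseDisallowRules` and `isPathAllowed` are hypothetical helpers; for real projects a maintained parser is the safer choice.

```javascript
// Simplified robots.txt parsing (assumption: only the "*" group and Disallow prefixes matter).
function parseDisallowRules(robotsTxt) {
  const rules = [];
  let inStarGroup = false;
  let lastWasAgent = false;
  for (const rawLine of robotsTxt.split(/\r?\n/)) {
    const line = rawLine.replace(/#.*$/, '').trim(); // strip comments
    const colon = line.indexOf(':');
    if (colon === -1) continue;
    const field = line.slice(0, colon).trim().toLowerCase();
    const value = line.slice(colon + 1).trim();
    if (field === 'user-agent') {
      // Consecutive User-agent lines share one group; a new run starts a new group
      if (!lastWasAgent) inStarGroup = false;
      if (value === '*') inStarGroup = true;
      lastWasAgent = true;
    } else {
      lastWasAgent = false;
      // An empty Disallow value means "nothing is disallowed"
      if (field === 'disallow' && inStarGroup && value !== '') rules.push(value);
    }
  }
  return rules;
}

// True when no Disallow prefix in the "*" group matches the path
function isPathAllowed(robotsTxt, urlPath) {
  return !parseDisallowRules(robotsTxt).some((prefix) => urlPath.startsWith(prefix));
}
```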

Handling Challenges in Web Scraping

Web scraping can be challenging due to anti-scraping measures. Some tips:

- Implement Delays: Use `setTimeout` or async delays between requests.

- Rotate User Agents: Change the user-agent headers periodically.

- Manage Cookies and Sessions: Some sites use cookies to track user sessions; libraries like puppeteer can handle these.
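The delay advice above can be sketched as a loop that processes URLs one at a time with a pause between requests. `scrapeSequentially` is a hypothetical helper and the 1-second default delay is an arbitrary assumption; `task` is any async function you supply, such as the axios-based fetchPage shown earlier.

```javascript
// Promise-based wrapper around setTimeout
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Hypothetical helper: run task(url) for each URL in order, pausing delayMs
// between requests so the target server never sees a burst of traffic.
async function scrapeSequentially(urls, task, delayMs = 1000) {
  const results = [];
  for (let i = 0; i < urls.length; i++) {
    if (i > 0) await sleep(delayMs); // no pause before the first request
    try {
      results.push({ url: urls[i], data: await task(urls[i]) });
    } catch (error) {
      // Record the failure and keep going instead of aborting the whole run
      results.push({ url: urls[i], error: error.message });
    }
  }
  return results;
}
```

For example, `scrapeSequentially(urls, fetchPage, 2000)` waits two seconds between pages, and a failure on one URL is recorded without stopping the rest.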

Error Handling and Optimization

Errors are inevitable during scraping. Use try-catch blocks, log errors, and handle failed requests gracefully.

Example

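```javascript
const axios = require('axios');

async function safeScrape(url) {
  try {
    const response = await axios.get(url, { timeout: 10000 }); // give up after 10 s
    return response.data;
  } catch (error) {
    // error.response is set when the server replied with a non-2xx status
    const status = error.response ? error.response.status : 'no response';
    console.error(`Failed to fetch ${url} (${status}):`, error.message);
    return null; // let the caller skip this page instead of crashing
  }
}
```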
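Transient failures such as timeouts or 503 responses often succeed on a later attempt. Here is a minimal sketch of retrying with exponential backoff; `withRetry`, its options, and their default values are hypothetical choices, not part of axios.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Hypothetical helper: call operation() up to retries + 1 times, waiting
// baseDelayMs, then 2x, 4x, ... between attempts. Rethrows the last error.
async function withRetry(operation, { retries = 3, baseDelayMs = 500 } = {}) {
  let lastError;
  for (let attempt = 0; attempt <= retries; attempt++) {
    try {
      return await operation(attempt);
    } catch (error) {
      lastError = error;
      if (attempt === retries) break; // out of attempts
      const delay = baseDelayMs * 2 ** attempt; // exponential backoff
      console.error(`Attempt ${attempt + 1} failed (${error.message}); retrying in ${delay} ms`);
      await sleep(delay);
    }
  }
  throw lastError;
}
```

For example, `withRetry(() => axios.get(url))`. Retrying a 404 is usually pointless, so in practice you may want to rethrow client errors immediately and retry only network errors or 429/5xx responses.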

Web scraping in Node.js is an essential skill for data-driven applications, providing the means to gather valuable information from web pages across the internet. Throughout this chapter, we’ve explored a comprehensive approach to web scraping, from setting up simple HTTP requests to managing complex JavaScript-rendered content, parsing HTML structures, and handling the inevitable challenges that arise during the process. Happy Coding!❤️