Scaling Node.js Applications

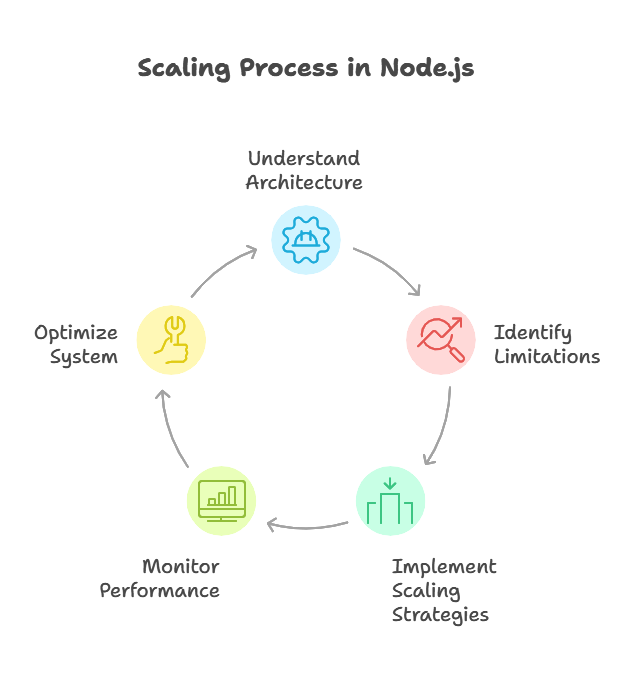

Scaling a Node.js application is essential for supporting more users, handling higher traffic, and improving performance.

Introduction to Scaling

Scaling is the process of enhancing a system so it can handle increasing load or accommodate growth. Because Node.js runs JavaScript on a single thread, understanding its architecture and limitations is key to scaling it effectively.

Understanding Single-Threaded Architecture

Node.js runs JavaScript on a single-threaded, event-driven architecture and uses asynchronous operations to handle many requests concurrently. Because application code runs on one main thread by default, a CPU-intensive task blocks that thread and slows the application for every user until it finishes.

Core Concepts:

- Non-Blocking I/O: Handles I/O operations asynchronously.

- Event Loop: Manages asynchronous operations, allowing multiple requests to be processed without creating new threads.
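
To make the blocking risk concrete, here is a minimal sketch. The `blockFor` helper and the 100 ms figure are illustrative assumptions, not part of any Node.js API. It schedules a zero-delay timer and then keeps the main thread busy; the timer callback cannot run until the synchronous work ends.

```javascript
// Busy-wait on the main thread for roughly `ms` milliseconds.
// This is a stand-in for CPU-heavy work such as parsing or hashing.
function blockFor(ms) {
  const end = Date.now() + ms;
  while (Date.now() < end) {
    // spin: nothing else can run on this thread meanwhile
  }
}

const order = [];
const scheduledAt = Date.now();

// Ask for the callback to run as soon as possible.
setTimeout(() => {
  order.push('timer');
  console.log(`timer fired after ${Date.now() - scheduledAt} ms`);
}, 0);

blockFor(100);
order.push('cpu work finished');
```

Even though the timer was requested with a delay of 0, it fires only after the busy loop returns. On a server, every pending request waits in the same way.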

Horizontal vs. Vertical Scaling

There are two primary scaling approaches: vertical and horizontal scaling.

Vertical Scaling

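Vertical scaling increases the resources of a single server (CPU, memory) to handle more load. It is simple, but there is a physical cap on how much a single machine can handle. A single Node.js process also runs JavaScript on one core, so extra cores help only when the application runs multiple processes.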

Horizontal Scaling

Horizontal scaling involves adding more servers to distribute the load across multiple instances, which is typically more scalable and resilient.

Using the Cluster Module for Scaling

Node.js’s Cluster module allows creating child processes (workers) that share the same server port, enabling the application to handle concurrent requests more effectively.

Example: Basic Cluster Setup

const cluster = require('cluster');
const http = require('http');
const numCPUs = require('os').cpus().length;

// cluster.isPrimary replaces the deprecated cluster.isMaster (Node.js 16+)
if (cluster.isPrimary) {
  // Fork one worker per CPU core
  for (let i = 0; i < numCPUs; i++) {
    cluster.fork();
  }

  cluster.on('exit', (worker, code, signal) => {
    console.log(`Worker ${worker.process.pid} died`);
    cluster.fork(); // Restart worker
  });
} else {
  // Every worker listens on the same port; connections are shared among them
  http.createServer((req, res) => {
    res.writeHead(200);
    res.end('Hello from worker ' + process.pid);
  }).listen(8000);
}

Output: The Cluster module uses multiple worker processes to handle requests, leveraging multiple CPU cores and improving performance under heavy load.

Load Balancing

Load balancing distributes incoming traffic across multiple servers to ensure no single server is overwhelmed. Common tools for load balancing Node.js applications include Nginx, HAProxy, and cloud-based services like AWS Elastic Load Balancer.

Example: Nginx as a Load Balancer

An Nginx configuration to balance requests across multiple Node.js servers:

http {
  upstream nodejs_servers {
    server 127.0.0.1:8000;
    server 127.0.0.1:8001;
    server 127.0.0.1:8002;
  }

  server {
    listen 80;

    location / {
      proxy_pass http://nodejs_servers;
    }
  }
}

Output: This configuration distributes requests across Node.js servers running on different ports, balancing the load and improving reliability.
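
When an `upstream` block names no balancing method, Nginx rotates through the servers in round-robin order. The sketch below shows the idea only; `createRoundRobin` is a hypothetical helper written for this article, and it ignores the weights, health checks, and connection state a real load balancer tracks.

```javascript
// Returns a function that picks the next backend in rotation on each call.
function createRoundRobin(servers) {
  if (servers.length === 0) {
    throw new Error('at least one server is required');
  }
  let next = 0;
  return function pick() {
    const server = servers[next];
    next = (next + 1) % servers.length; // wrap around after the last server
    return server;
  };
}

// The same three backends as in the Nginx upstream block.
const pick = createRoundRobin(['127.0.0.1:8000', '127.0.0.1:8001', '127.0.0.1:8002']);

// Four consecutive requests: the fourth goes back to the first server.
const picks = [pick(), pick(), pick(), pick()];
console.log(picks);
```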

Microservices Architecture

A microservices architecture involves splitting an application into smaller, independent services that communicate over a network. Each service is responsible for specific functionality, allowing the system to scale more efficiently.

Key Benefits

- Independent Scaling: Each service can scale independently.

- Fault Isolation: A failure in one service is less likely to take down the others, provided callers handle errors from the services they depend on.

- Technology Flexibility: Different services can use different technologies if needed.

Example: Basic Microservices Setup

Suppose we have a monolithic application that we split into two services:

- User Service: Handles user data.

- Order Service: Manages orders.

Using Express and Axios for communication between services:

User Service:

const express = require('express');
const app = express();

app.get('/user', (req, res) => res.send({ id: 1, name: 'John Doe' }));

app.listen(3000, () => console.log('User service running on port 3000'));

Order Service:

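const express = require('express');
const axios = require('axios');
const app = express();

app.get('/order', async (req, res) => {
  try {
    // Fetch the user from the User Service over HTTP
    const user = await axios.get('http://localhost:3000/user');
    res.send({ orderId: 123, user: user.data });
  } catch (err) {
    // The User Service is down or returned an error; fail only this request
    res.status(502).send({ error: 'User service unavailable' });
  }
});

app.listen(3001, () => console.log('Order service running on port 3001'));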

Output: The two services communicate over HTTP, allowing the application to scale specific parts independently.
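
The Order Service reaches the User Service at a hard-coded `localhost` address, which only works while both run on the same machine. One common way to let each service be deployed and scaled separately is to read the address from the environment. The sketch below assumes a variable named `USER_SERVICE_URL`; that name and the `users.internal` host are made up for illustration.

```javascript
// Resolve the User Service endpoint from the environment, with a local default.
// USER_SERVICE_URL is a hypothetical variable name chosen for this sketch.
function userServiceUrl(env = process.env) {
  const base = env.USER_SERVICE_URL || 'http://localhost:3000';
  // new URL() validates the base and joins the path onto it
  return new URL('/user', base).toString();
}

console.log(userServiceUrl({}));
// prints "http://localhost:3000/user"
console.log(userServiceUrl({ USER_SERVICE_URL: 'http://users.internal:8080' }));
// prints "http://users.internal:8080/user"
```

The Order Service could then call `axios.get(userServiceUrl())` instead of a fixed URL.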

Containerization with Docker and Kubernetes

Docker and Kubernetes help deploy and manage applications in a scalable, consistent way. Docker containers run applications in isolated environments, while Kubernetes orchestrates those containers across a cluster of machines.

Example: Dockerizing a Node.js Application

Create a Dockerfile to containerize a Node.js app:

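# Dockerfile
# Use a maintained LTS release (Node.js 14 is end-of-life)
FROM node:22
WORKDIR /app
# Copy the manifests first so the dependency layer is cached between builds
COPY package*.json ./
RUN npm install
# Copy the rest of the application source
COPY . .
EXPOSE 3000
CMD ["node", "app.js"]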

Output: Building this Dockerfile produces an image, and running that image starts a container for the Node.js app, making it easy to deploy and scale across different environments.

Deploying with Kubernetes

Use a Kubernetes Deployment to manage replicas of the Node.js container, ensuring scalability and fault tolerance.

Optimizing Performance for Scalability

Performance optimization helps the application handle higher loads, making scaling efforts more effective. Key techniques include:

- Caching: Store frequently accessed data in-memory (e.g., Redis) to reduce database load.

- Database Optimization: Optimize queries, use indexes, and consider sharding in MongoDB or MySQL.

- Efficient Code: Ensure code is optimized to reduce CPU load, memory consumption, and latency.
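
As a rough illustration of the caching technique, here is an in-process cache with expiry, used in a cache-aside lookup. `createTtlCache`, `getUser`, and `loadFromDb` are hypothetical names for this sketch. Each process holds its own copy of such a cache, which is one reason a shared store like Redis is used once an application runs as several instances.

```javascript
// Minimal in-process cache with per-entry expiry.
// `now` is injectable so expiry can be exercised without waiting.
function createTtlCache(ttlMs, now = Date.now) {
  const entries = new Map(); // key -> { value, expiresAt }

  return {
    get(key) {
      const entry = entries.get(key);
      if (!entry) return undefined;
      if (now() >= entry.expiresAt) {
        entries.delete(key); // drop stale data so the caller reloads it
        return undefined;
      }
      return entry.value;
    },
    set(key, value) {
      entries.set(key, { value, expiresAt: now() + ttlMs });
    },
  };
}

// Cache-aside lookup: return the cached value, or load it and remember it.
// `loadFromDb` is a hypothetical stand-in for a real database query.
function getUser(cache, id, loadFromDb) {
  const cached = cache.get(id);
  if (cached !== undefined) return cached;
  const user = loadFromDb(id);
  cache.set(id, user);
  return user;
}
```

With a 60-second TTL, repeated calls to `getUser(cache, 1, loadFromDb)` within that window hit the database once.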

Monitoring and Logging

Monitoring and logging are essential for scaling, as they help identify performance bottlenecks and understand system health.

Tools for Monitoring and Logging

- PM2: Process manager for Node.js applications with built-in monitoring.

- Loggly and ELK Stack: Solutions for centralized logging and analysis.

- New Relic and DataDog: Cloud-based monitoring for real-time insights into application performance.

Example: Monitoring with PM2

pm2 start app.js --name myapp
pm2 monit

Output: PM2 provides an interface to monitor CPU and memory usage, helping to maintain application health as it scales.
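
The same kind of CPU and memory figures can be sampled from inside the process with Node.js built-ins, for example to attach them to log lines. This is a minimal sketch; `resourceSnapshot` and its field names are assumptions made for this example, not something PM2 provides.

```javascript
// Sample this process's memory and CPU usage using Node.js built-ins.
function resourceSnapshot() {
  const mem = process.memoryUsage(); // values are in bytes
  const cpu = process.cpuUsage();    // values are in microseconds
  const toMb = (bytes) => Math.round((bytes / 1024 / 1024) * 10) / 10;
  return {
    pid: process.pid,
    rssMb: toMb(mem.rss),           // total memory held by the process
    heapUsedMb: toMb(mem.heapUsed), // memory used by JavaScript objects
    cpuUserMs: Math.round(cpu.user / 1000),
    cpuSystemMs: Math.round(cpu.system / 1000),
  };
}

console.log(resourceSnapshot());
```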

Scaling a Node.js application involves multiple techniques, from using the Cluster module for load distribution to employing advanced architectures like microservices and containerization. Implementing effective caching, load balancing, and efficient code practices prepares an application for high demand. Happy Coding!❤️