Deploying a Go Application on Kubernetes

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It was originally developed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF). Kubernetes provides a robust infrastructure for deploying and managing applications in a scalable and fault-tolerant manner.

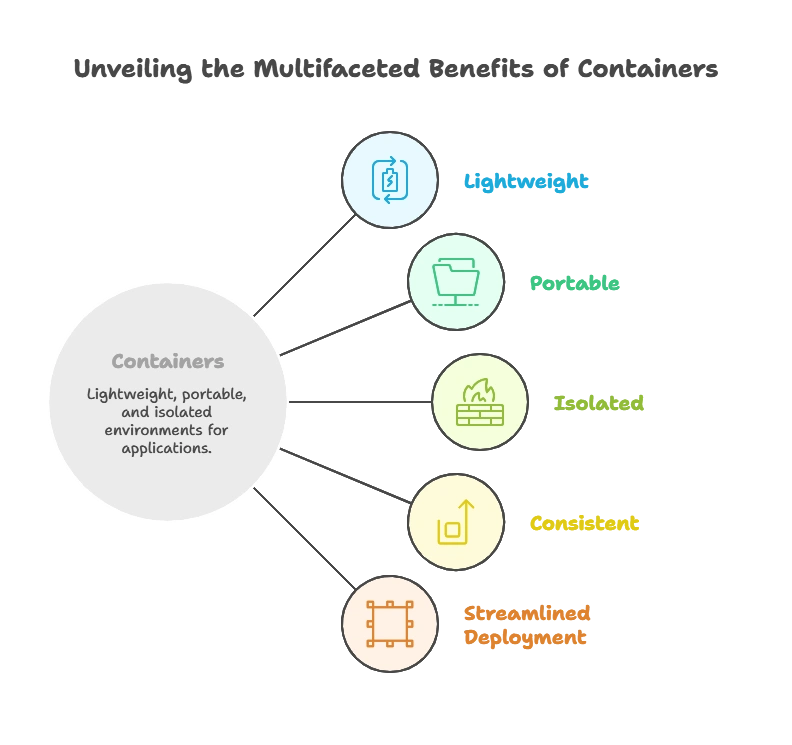

Understanding Containers

Before diving into Kubernetes, it’s essential to understand containers. Containers are lightweight, portable, and isolated environments that package an application and its dependencies. They ensure consistency across different environments and simplify the deployment process.

1. Lightweight: containers share the host system’s kernel instead of booting a full guest operating system, so they use fewer resources and start faster than virtual machines.

2. Portable: an image bundles the application with its dependencies, so the same image runs on a laptop, a CI runner, or a production cluster.

3. Isolated: each container has its own view of processes, the filesystem, and the network, which limits interference between applications.

4. Consistent: because dependencies ship inside the image, behavior does not depend on what is installed on the host, which reduces deployment-related issues.

5. Standardized: a common image format lets the same packaging and deployment workflow work across platforms and infrastructure environments.

Introduction to Kubernetes Components

Kubernetes comprises several key components that work together to provide a robust and flexible platform for running distributed systems. Here are the main components:

1. Control Plane (historically called the Master Node):

– kube-apiserver: Exposes the Kubernetes API, which serves as the front end for the Kubernetes control plane.

– etcd: A distributed key-value store that stores the cluster’s configuration data, state, and metadata.

– kube-scheduler: Assigns newly created pods to nodes based on resource availability and other constraints.

– kube-controller-manager: Manages various controllers that regulate the state of the cluster, such as node and replication controllers.

– cloud-controller-manager: Integrates with cloud providers to manage resources such as load balancers and storage volumes.

2. Worker Node:

– kubelet: An agent that runs on each node and communicates with the Kubernetes control plane. It manages the containers on the node, ensuring they are running and healthy.

– kube-proxy: Maintains network rules on each node and load-balances traffic addressed to a Service across its pods.

– Container Runtime: The software responsible for running containers, such as containerd or CRI-O. Since Kubernetes 1.24 removed the built-in dockershim, Docker Engine can only be used as a runtime through the cri-dockerd adapter.

3. Networking:

– Pod Networking: Kubernetes assigns each pod an IP address and manages networking between pods across nodes.

– Service Networking: Gives a group of pods a stable virtual IP address and DNS name, enabling communication within the cluster and, depending on the Service type, exposure to the outside world.

4. Add-ons:

– DNS: Provides DNS-based service discovery for pods.

– Dashboard: A web-based user interface for managing and monitoring the Kubernetes cluster.

– Ingress Controller: Manages external access to services within the cluster.

– Monitoring and Logging: Tools for monitoring cluster health, resource usage, and logging events.

These components work together to create a resilient and scalable platform for deploying and managing containerized applications. Kubernetes abstracts away the underlying infrastructure, so developers can focus on building and deploying applications rather than on the operational details of the machines they run on.
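The way controllers regulate cluster state can be illustrated with a toy control loop. The sketch below is not Kubernetes source code: the `reconcile` function and the plain integer pod counts are hypothetical stand-ins, used only to show the pattern of comparing the desired state with the observed state and acting on the difference.

```go
package main

import "fmt"

// reconcile returns how many pods a hypothetical controller would start
// (positive) or stop (negative) to move from the observed count to the
// desired count. Real controllers act through the API server instead.
func reconcile(desired, observed int) int {
	return desired - observed
}

func main() {
	desired := 3
	observed := 1 // for example, two pods were lost with a failed node

	// Loop until the observed state converges on the desired state.
	for observed != desired {
		if reconcile(desired, observed) > 0 {
			observed++ // pretend one pod was started
		} else {
			observed-- // pretend one pod was stopped
		}
		fmt.Printf("adjusting: observed=%d desired=%d\n", observed, desired)
	}
	fmt.Println("converged")
}
```

The point of the pattern is that the controller never needs to know why the states diverged; it only needs to keep closing the gap.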

Preparing Your Go Application for Deployment

Before deploying a Go application on Kubernetes, you need to ensure that it’s properly configured and packaged. This section covers the steps required to prepare your Go application for deployment.

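- Code Review and Testing: Make sure the code builds cleanly and passes its tests (for example with go vet and go test) before packaging it.

- Dependency Management: Use Go modules and commit go.mod and go.sum so that builds are reproducible.

- Containerization: Write a Dockerfile that packages the application as a container image.

- Build Process: Build the binary and the image in a repeatable way, ideally in a CI pipeline.

- Configuration Management: Read settings from environment variables or mounted files (for example a ConfigMap) rather than hard-coding them.

- Secret Management: Keep credentials out of the image and the source tree, and supply them at runtime, for example through Kubernetes Secrets.

- Health Checks and Readiness Probes: Expose endpoints that Kubernetes can probe to decide whether to restart a container or send it traffic.

- Logging and Monitoring: Write logs to standard output and standard error so the cluster can collect them, and expose metrics where possible.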
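As an illustration of the health check and readiness probe item, here is a minimal sketch of two probe endpoints in Go. The paths `/healthz` and `/readyz`, the `ready` flag, and the `readinessStatus` helper are assumptions chosen for this example; Kubernetes does not mandate any particular path, and a real application would set the flag only after its own startup work has finished.

```go
package main

import (
	"log"
	"net/http"
	"sync/atomic"
)

// ready flips to true once startup work (hypothetical here) has finished.
var ready atomic.Bool

// readinessStatus maps the readiness flag to the HTTP status a probe sees.
func readinessStatus(isReady bool) int {
	if isReady {
		return http.StatusOK
	}
	return http.StatusServiceUnavailable
}

// healthz answers liveness probes: the process is up and serving HTTP.
func healthz(w http.ResponseWriter, r *http.Request) {
	w.WriteHeader(http.StatusOK)
}

// readyz answers readiness probes: 200 only once the app can take traffic.
func readyz(w http.ResponseWriter, r *http.Request) {
	w.WriteHeader(readinessStatus(ready.Load()))
}

func main() {
	http.HandleFunc("/healthz", healthz)
	http.HandleFunc("/readyz", readyz)

	// Placeholder for real startup work such as opening database connections.
	ready.Store(true)

	log.Println("listening on :8080")
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```

Keeping liveness and readiness separate lets the cluster stop routing traffic to a pod that is temporarily unable to serve without restarting it.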

Containerizing Your Go Application

To deploy a Go application on Kubernetes, you need to containerize it using Docker. Docker allows you to build, ship, and run applications in isolated containers. Here’s a basic Dockerfile for containerizing a Go application:

    # Start from the official Go image, which contains the Go toolchain
    FROM golang:latest

    # Set the working directory inside the container
    WORKDIR /app

    # Copy the Go module manifests first so the dependency layer can be cached
    COPY go.mod go.sum ./

    # Download dependencies
    RUN go mod download

    # Copy the source code into the container
    COPY . .

    # Build the Go application
    RUN go build -o main .

    # Document that the application listens on port 8080
    EXPOSE 8080

    # Command to run the executable
    CMD ["./main"]

- FROM: Specifies the base image, here the official golang image, which provides the Go toolchain.

- WORKDIR: Sets the working directory inside the container.

- COPY: Copies the Go module manifests and source code into the container.

- RUN go mod download: Downloads Go module dependencies.

- RUN go build -o main .: Builds the Go application.

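- EXPOSE: Documents that the application listens on port 8080. It does not publish the port by itself; in Kubernetes, access is configured through the pod spec and a Service.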

- CMD: Specifies the command to run the application.

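Once you have created the Dockerfile, build the image with the `docker build` command, for example `docker build -t your-username/go-app:latest .` from the directory that contains the Dockerfile. Then push it to a registry your cluster can pull from, for example with `docker push your-username/go-app:latest`.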
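For reference, a `main` package that this Dockerfile could build might look like the sketch below. It is an assumption about the application, not part of the original project: the `hello` handler, the `listenAddr` helper, and the `PORT` environment variable are hypothetical names, and the default of 8080 is chosen only to match the port the Dockerfile exposes.

```go
package main

import (
	"fmt"
	"log"
	"net/http"
	"os"
)

// listenAddr builds the listen address from a port value, falling back to
// 8080 (the port the Dockerfile exposes) when the value is empty.
func listenAddr(port string) string {
	if port == "" {
		port = "8080"
	}
	return ":" + port
}

// hello is a placeholder handler standing in for the real application.
func hello(w http.ResponseWriter, r *http.Request) {
	fmt.Fprintln(w, "Hello from Go on Kubernetes")
}

func main() {
	http.HandleFunc("/", hello)

	// PORT is an assumed environment variable name, not a Kubernetes built-in.
	addr := listenAddr(os.Getenv("PORT"))
	log.Printf("listening on %s", addr)
	log.Fatal(http.ListenAndServe(addr, nil))
}
```

Reading the port from the environment keeps the image unchanged if the listening port ever needs to differ between environments.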

Deploying Your Go Application on Kubernetes

Now that you have containerized your Go application, it’s time to deploy it on Kubernetes. This section explains how to create Kubernetes manifests and deploy your application to a Kubernetes cluster.

Creating Kubernetes Deployment Manifest

A Kubernetes deployment is a resource object used to deploy and manage a containerized application. Below is an example Kubernetes deployment manifest for deploying a Go application:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: go-app
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: go-app
      template:
        metadata:
          labels:
            app: go-app
        spec:
          containers:
            - name: go-app
              image: your-username/go-app:latest
              ports:
                - containerPort: 8080

- apiVersion: Specifies the API version (apps/v1 for Deployments).

- kind: Defines the type of Kubernetes resource (Deployment).

- metadata: Contains metadata such as the name of the deployment.

- spec: Specifies the desired state of the deployment.

  - replicas: Specifies the number of replicas (pods) to run.

  - selector: Defines how to select the pods controlled by this deployment. Its matchLabels must match the labels in the pod template.

  - template: Defines the pod template.

    - metadata: Contains labels to identify the pods.

    - spec: Specifies the pod specification.

      - containers: Specifies the containers within the pod.

        - name: Specifies the name of the container.

        - image: Specifies the container image to use.

        - ports: Lists the ports the container listens on (8080 here).

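You can apply this deployment manifest using the `kubectl apply` command, for example `kubectl apply -f deployment.yaml` if the manifest is saved as deployment.yaml (a file name chosen here for illustration).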
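The relationship between the deployment’s selector and the pod template labels can be sketched in a few lines of Go. This is a toy model of matchLabels semantics, not code from Kubernetes: the `matchesSelector` function is hypothetical, and the label values in `main` are made up for illustration.

```go
package main

import "fmt"

// matchesSelector reports whether a pod's labels satisfy a matchLabels
// selector: every key/value pair in the selector must be present on the pod.
func matchesSelector(selector, podLabels map[string]string) bool {
	for key, want := range selector {
		if got, ok := podLabels[key]; !ok || got != want {
			return false
		}
	}
	return true
}

func main() {
	// Mirrors spec.selector.matchLabels in the manifest above.
	selector := map[string]string{"app": "go-app"}

	pods := []map[string]string{
		// Extra labels do not prevent a match; the hash value is made up.
		{"app": "go-app", "pod-template-hash": "abc123"},
		{"app": "other-app"},
	}
	for _, labels := range pods {
		fmt.Println(labels["app"], matchesSelector(selector, labels))
	}
}
```

This is why the `app: go-app` label appears twice in the manifest: once in the selector and once in the pod template that the selector has to match.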

Exposing Your Application with Kubernetes Service

To access your Go application from outside the Kubernetes cluster, you need to create a Kubernetes Service. A Service gives a set of pods a stable network endpoint and distributes traffic across them. Below is an example Kubernetes service manifest:

    apiVersion: v1
    kind: Service
    metadata:
      name: go-app-service
    spec:
      selector:
        app: go-app
      ports:
        - protocol: TCP
          port: 80
          targetPort: 8080
      type: LoadBalancer

- apiVersion: Specifies the API version (v1 for Services).

- kind: Defines the type of Kubernetes resource (Service).

- metadata: Contains metadata such as the name of the service.

- spec: Specifies the desired state of the service.

  - selector: Defines which pods the service should target, here the pods labeled app: go-app.

  - ports: Maps port 80 on the service to port 8080 (targetPort) on the pods.

  - type: Specifies the type of service. LoadBalancer asks the cloud provider to provision an external load balancer.

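You can apply this service manifest using the `kubectl apply` command, for example `kubectl apply -f service.yaml` if the manifest is saved as service.yaml (a file name chosen here for illustration). Once the load balancer is provisioned, `kubectl get service go-app-service` shows its external address.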

In this chapter, we covered the process of deploying a Go application on Kubernetes. We started by introducing Kubernetes and its key components. Then, we discussed the steps required to prepare a Go application for deployment, including containerizing it with Docker. Finally, we explained how to deploy the application on Kubernetes using deployment and service manifests. Happy coding! ❤️