Python Data Science Libraries

"Python Data Science Libraries" delves into the rich ecosystem of Python libraries tailored for various facets of data science, from data manipulation and analysis to machine learning and deep learning. This topic provides an in-depth exploration of essential and advanced data science libraries, including NumPy, Pandas, Matplotlib, scikit-learn, TensorFlow, and PyTorch.

Introduction to Data Science Libraries in Python

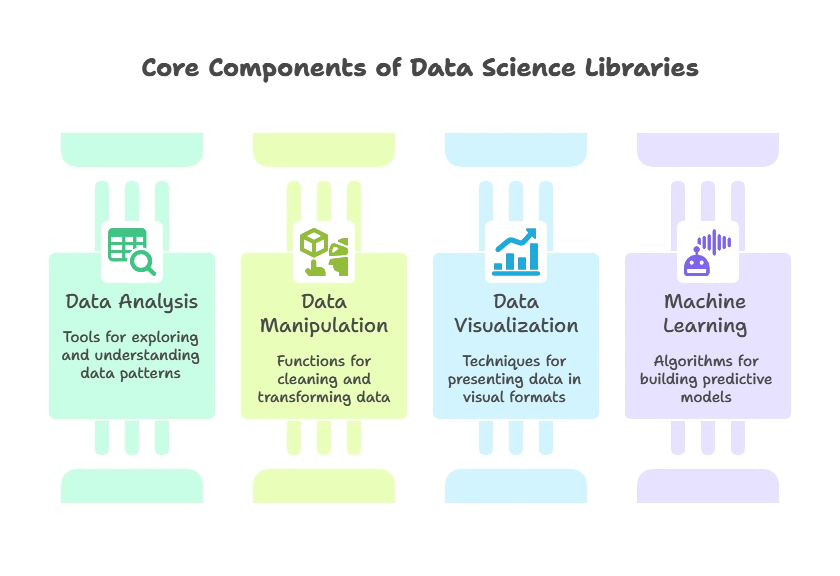

What are Data Science Libraries?

Data science libraries in Python are collections of tools and functions for data analysis, manipulation, visualization, and machine learning. They provide capabilities for handling large datasets, performing complex computations, and building predictive models.

Importance of Data Science Libraries

Python has emerged as a dominant language in the field of data science due to its rich ecosystem of libraries tailored for different aspects of the data science workflow. These libraries enable data scientists and analysts to efficiently explore data, extract insights, and develop predictive models, thereby driving informed decision-making and innovation across industries.

Essential Data Science Libraries

NumPy

NumPy is the fundamental package for scientific computing in Python. It provides support for multi-dimensional arrays, mathematical functions, linear algebra operations, and random number generation.

    import numpy as np

    # Create a NumPy array
    arr = np.array([1, 2, 3, 4, 5])

    # Perform operations on the array
    mean = np.mean(arr)
    print("Mean:", mean)

Output:

    Mean: 3.0

Explanation:

- We import NumPy as `np`.

- We create a NumPy array `arr`.

- Using NumPy’s `mean()` function, we calculate the mean of the array.
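The description above also mentions multi-dimensional arrays, linear algebra, and random number generation. The following is a minimal sketch of those three features; the matrix values and the seed are arbitrary choices made for illustration.

```python
import numpy as np

# A 2x2 matrix and a right-hand-side vector (illustrative values).
A = np.array([[2.0, 1.0],
              [1.0, 3.0]])
b = np.array([3.0, 5.0])

# Element-wise (vectorized) arithmetic: no explicit Python loop is needed.
doubled = A * 2

# Linear algebra: solve the system A @ x = b for x.
x = np.linalg.solve(A, b)

# Random number generation with a seeded generator for reproducibility.
rng = np.random.default_rng(seed=0)
samples = rng.normal(loc=0.0, scale=1.0, size=(3, 2))

print("Shape of A:", A.shape)
print("Solution x:", x)
print("Samples shape:", samples.shape)
```

Multiplying `A @ x` should reproduce `b` up to floating-point rounding, which is a quick way to check the solution.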

Pandas

Pandas is a powerful library for data manipulation and analysis. It provides data structures like DataFrame and Series, along with methods for indexing, filtering, grouping, and joining data.

    import pandas as pd

    # Create a DataFrame
    data = {'Name': ['Alice', 'Bob', 'Charlie'],
            'Age': [25, 30, 35]}
    df = pd.DataFrame(data)

    # Display the DataFrame
    print(df)

Output:

          Name  Age
    0    Alice   25
    1      Bob   30
    2  Charlie   35

Explanation:

- We import Pandas as `pd`.

- We create a DataFrame `df` from a dictionary `data`.

- The DataFrame is printed, displaying the data in tabular format.
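The filtering, grouping, and joining methods mentioned in the description can be sketched as follows. The `Dept` and `Floor` columns and the extra row are hypothetical sample data invented for this illustration.

```python
import pandas as pd

# Hypothetical sample data, invented for this illustration.
people = pd.DataFrame({
    "Name": ["Alice", "Bob", "Charlie", "Dana"],
    "Age": [25, 30, 35, 40],
    "Dept": ["Eng", "Ops", "Eng", "Ops"],
})
depts = pd.DataFrame({
    "Dept": ["Eng", "Ops"],
    "Floor": [3, 1],
})

# Filtering: keep rows where Age is at least 30.
over_30 = people[people["Age"] >= 30]

# Grouping: mean age per department.
mean_age = people.groupby("Dept")["Age"].mean()

# Joining: attach each person's floor via the shared "Dept" column.
joined = people.merge(depts, on="Dept", how="left")

print(over_30)
print(mean_age)
print(joined)
```

A left merge keeps every row of `people` in its original order, which is usually what you want when enriching a table with lookup data.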

Matplotlib

Matplotlib is a plotting library for creating static, interactive, and animated visualizations in Python. It provides a wide range of plotting functions for generating line plots, bar charts, histograms, scatter plots, and more.

    import matplotlib.pyplot as plt

    # Create a simple line plot
    x = [1, 2, 3, 4, 5]
    y = [2, 4, 6, 8, 10]

    plt.plot(x, y)
    plt.xlabel('X-axis')
    plt.ylabel('Y-axis')
    plt.title('Simple Line Plot')
    plt.show()

Explanation:

- We import Matplotlib’s pyplot module as `plt`.

- We create lists `x` and `y` representing data points.

- Using `plt.plot()`, we create a line plot.

- Additional functions add axis labels and a title.

- Finally, `plt.show()` displays the plot.
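Bar charts and histograms, also mentioned in the description, follow the same pattern. Here is a minimal sketch using Matplotlib's object-oriented interface with two side-by-side axes; the category names and values are made up for illustration.

```python
import matplotlib.pyplot as plt

# Made-up data for illustration.
categories = ["A", "B", "C"]
counts = [5, 3, 8]
values = [1, 2, 2, 3, 3, 3, 4, 4, 5]

# One figure with two axes placed side by side.
fig, (ax_bar, ax_hist) = plt.subplots(1, 2, figsize=(8, 3))

# Bar chart: one bar per category.
ax_bar.bar(categories, counts)
ax_bar.set_title("Bar Chart")

# Histogram: distribution of the values across 5 bins.
ax_hist.hist(values, bins=5)
ax_hist.set_title("Histogram")

fig.tight_layout()
# plt.show()  # uncomment to display the figure in an interactive session
```

Working with explicit `fig` and `ax` objects, rather than the implicit `plt` state, tends to scale better once a figure contains more than one plot.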

Advanced Data Science Libraries

Scikit-learn

Scikit-learn is a versatile machine learning library in Python, providing tools for classification, regression, clustering, dimensionality reduction, and more. It offers a consistent API and a wide range of algorithms for building and evaluating machine learning models.

    from sklearn.datasets import load_iris
    from sklearn.model_selection import train_test_split
    from sklearn.linear_model import LogisticRegression
    from sklearn.metrics import accuracy_score

    # Load the Iris dataset
    iris = load_iris()
    X, y = iris.data, iris.target

    # Split the dataset into training and testing sets
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

    # Initialize and train a logistic regression model
    model = LogisticRegression()
    model.fit(X_train, y_train)

    # Make predictions on the testing set
    predictions = model.predict(X_test)

    # Calculate the accuracy of the model
    accuracy = accuracy_score(y_test, predictions)
    print("Accuracy:", accuracy)

Output (the exact value can vary with the scikit-learn version and its solver defaults):

    Accuracy: 0.9666666666666667

Explanation:

- We import the necessary modules from scikit-learn.

- The Iris dataset is loaded using `load_iris()`.

- We split the dataset into training and testing sets using `train_test_split()`.

- A logistic regression model is initialized and trained on the training data.

- Predictions are made on the testing set using `predict()`.

- The accuracy of the model is calculated using `accuracy_score()`.
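The "consistent API" mentioned above means that different estimators can be swapped in without changing the surrounding code. The sketch below illustrates this with two classifiers evaluated by 5-fold cross-validation. The choice of estimators, `max_iter=200`, and `n_neighbors=5` are assumptions made for the example, not recommendations.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

# Two different estimators that share the same fit/predict interface.
candidates = {
    "logistic_regression": LogisticRegression(max_iter=200),
    "k_nearest_neighbors": KNeighborsClassifier(n_neighbors=5),
}

scores = {}
for name, estimator in candidates.items():
    # Scale features, then fit the estimator; the pipeline is itself an estimator.
    pipeline = make_pipeline(StandardScaler(), estimator)
    # Mean accuracy over 5 cross-validation folds.
    scores[name] = cross_val_score(pipeline, X, y, cv=5).mean()

for name, score in scores.items():
    print(f"{name}: {score:.3f}")
```

Cross-validation gives a more stable estimate than a single train/test split, which matters on a dataset as small as Iris.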

TensorFlow

TensorFlow is an open-source deep learning framework developed by Google. It provides a comprehensive ecosystem for building and deploying machine learning and deep learning models across a variety of platforms, including CPUs, GPUs, and TPUs.

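    import tensorflow as tf

    # Assumption: the 784 inputs and 10 classes suggest MNIST, so it is loaded here
    # to make the example runnable. Each 28x28 image is flattened and scaled to [0, 1].
    (X_train, y_train), (X_test, y_test) = tf.keras.datasets.mnist.load_data()
    X_train = X_train.reshape(-1, 784).astype('float32') / 255.0
    X_test = X_test.reshape(-1, 784).astype('float32') / 255.0

    # Define a simple neural network
    model = tf.keras.Sequential([
        tf.keras.layers.Dense(64, activation='relu', input_shape=(784,)),
        tf.keras.layers.Dense(10, activation='softmax')
    ])

    # Compile the model
    model.compile(optimizer='adam',
                  loss='sparse_categorical_crossentropy',
                  metrics=['accuracy'])

    # Train the model
    model.fit(X_train, y_train, epochs=10, batch_size=32, validation_data=(X_test, y_test))

Explanation:

- We import TensorFlow as `tf`.

- The MNIST digits dataset is loaded (an assumption based on the 784 inputs and 10 output classes), flattened to 784 features per image, and scaled to the range [0, 1].

- A simple neural network model is defined using the `Sequential` API.

- The model is compiled with an optimizer, a loss function, and an evaluation metric.

- Training is performed using the `fit()` method with a specified number of epochs, batch size, and validation data.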

PyTorch

PyTorch is another popular deep learning framework known for its flexibility and dynamic computation graph. It provides a Pythonic interface for building and training neural networks, making it easy to experiment with different architectures and algorithms.

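    import torch
    import torch.nn as nn
    import torch.optim as optim

    # Placeholder data so the example runs end to end (an assumption, not real data):
    # random 784-feature inputs with integer class labels in [0, 10).
    X_train, y_train = torch.randn(256, 784), torch.randint(0, 10, (256,))
    X_test, y_test = torch.randn(64, 784), torch.randint(0, 10, (64,))

    # Define a simple neural network
    class SimpleNN(nn.Module):
        def __init__(self):
            super().__init__()
            self.fc1 = nn.Linear(784, 64)
            self.fc2 = nn.Linear(64, 10)

        def forward(self, x):
            x = torch.relu(self.fc1(x))
            # Return raw logits: nn.CrossEntropyLoss applies log-softmax internally
            return self.fc2(x)

    model = SimpleNN()

    # Define loss function and optimizer
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.Adam(model.parameters(), lr=0.001)

    # Training loop (full batch: one parameter update per epoch)
    for epoch in range(10):
        optimizer.zero_grad()
        outputs = model(X_train)
        loss = criterion(outputs, y_train)
        loss.backward()
        optimizer.step()

    # Evaluation
    with torch.no_grad():
        outputs = model(X_test)
        _, predicted = torch.max(outputs, 1)
        accuracy = (predicted == y_test).sum().item() / len(y_test)
        print("Accuracy:", accuracy)

Explanation:

- We import the necessary modules from PyTorch.

- Random placeholder tensors stand in for a real dataset. Because the labels are random, the reported accuracy will be close to chance (about 0.1).

- A simple neural network model is defined as a subclass of `nn.Module`. Its `forward()` method returns raw logits rather than softmax probabilities, because `nn.CrossEntropyLoss` applies log-softmax internally.

- The loss function and optimizer are defined.

- The training loop iterates over epochs, calculates the loss, performs backpropagation, and updates the model parameters.

- Evaluation is performed on the testing set to calculate accuracy.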

Intermediate Data Science Libraries

Seaborn

Seaborn is a statistical data visualization library built on top of Matplotlib. It provides a high-level interface for creating informative and attractive statistical graphics, including heatmaps, violin plots, pair plots, and more.

    import seaborn as sns
    import matplotlib.pyplot as plt

    # Load a sample dataset
    tips = sns.load_dataset("tips")

    # Create a violin plot
    sns.violinplot(x="day", y="total_bill", data=tips)
    plt.title('Violin Plot of Total Bill by Day')
    plt.show()

Explanation:

- We import Seaborn as `sns` and Matplotlib as `plt`.

- A sample dataset (`tips`) is loaded using `sns.load_dataset()`, which downloads the data on first use and therefore needs an internet connection.

- A violin plot is created using `sns.violinplot()`, visualizing the distribution of total bill amounts by day.

Statsmodels

Statsmodels is a Python module that provides classes and functions for the estimation of many different statistical models, as well as for conducting statistical tests, and statistical data exploration.

    import seaborn as sns
    import statsmodels.api as sm

    # Load a sample dataset
    iris = sns.load_dataset("iris")
    X = iris["sepal_length"]
    y = iris["petal_length"]

    # Add an intercept term, then fit an ordinary least squares (OLS) regression
    X = sm.add_constant(X)
    model = sm.OLS(y, X).fit()

    # Print model summary
    print(model.summary())

Explanation:

- We import Statsmodels as `sm` and Seaborn as `sns`.

- A sample dataset (`iris`) is loaded using Seaborn.

- `sm.add_constant()` adds an intercept column, because `sm.OLS()` does not include one by default.

- A simple linear regression model is fitted using `sm.OLS()`.

- The summary of the fitted model is printed using `model.summary()`.

Specialized Data Science Libraries

NetworkX

NetworkX is a Python library for creating, analyzing, and visualizing complex networks or graphs. It provides tools for studying the structure and dynamics of networks, including graph algorithms, centrality measures, and drawing functions.

    import networkx as nx
    import matplotlib.pyplot as plt

    # Create a graph
    G = nx.Graph()

    # Add nodes
    G.add_nodes_from([1, 2, 3])

    # Add edges
    G.add_edge(1, 2)
    G.add_edge(2, 3)
    G.add_edge(3, 1)

    # Draw the graph
    nx.draw(G, with_labels=True)
    plt.title('Simple Graph')
    plt.show()

Explanation:

- We import NetworkX as `nx` and Matplotlib as `plt`.

- A new graph `G` is created using `nx.Graph()`.

- Nodes and edges are added to the graph using the `add_nodes_from()` and `add_edge()` methods, respectively.

- The graph is drawn using `nx.draw()` with labels.
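Beyond drawing, the graph algorithms and centrality measures mentioned in the description can be sketched as follows. The node names and edges are hypothetical, chosen only to make the result easy to check by hand.

```python
import networkx as nx

# A small undirected graph (hypothetical node names chosen for illustration).
G = nx.Graph()
G.add_edges_from([
    ("A", "B"), ("B", "C"), ("C", "D"), ("B", "D"), ("D", "E"),
])

# Graph algorithm: shortest path between two nodes (fewest edges).
path = nx.shortest_path(G, source="A", target="E")

# Centrality measure: fraction of the other nodes each node is connected to.
centrality = nx.degree_centrality(G)

print("Shortest path A -> E:", path)
print("Degree centrality:", centrality)
```

In this graph, nodes `B` and `D` each touch three of the four other nodes, so they receive the highest degree centrality.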

Gensim

Gensim is a Python library for topic modeling, document similarity analysis, and other natural language processing tasks. It provides implementations of popular algorithms such as Latent Semantic Analysis (LSA), Latent Dirichlet Allocation (LDA), and Word2Vec.

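    # Note: the gensim.summarization module was removed in Gensim 4.0,
    # so this example requires Gensim 3.x.
    from gensim.summarization import summarize
    import requests

    # Get text from a URL
    # (this returns the page's raw HTML; strip the markup first for a cleaner summary)
    url = "https://en.wikipedia.org/wiki/Python_(programming_language)"
    text = requests.get(url).text

    # Summarize the text
    summary = summarize(text)
    print(summary)

Explanation:

- We import the `summarize` function from Gensim’s `summarization` module, which is available only in Gensim 3.x.

- Text is retrieved from a Wikipedia page using the `requests` library. The response is raw HTML, so the summary will include markup unless it is stripped first.

- The `summarize()` function is applied to generate a summary of the text.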

We've explored a wide range of Python data science libraries that help developers and data scientists work across the data science workflow. From essential libraries like NumPy, Pandas, and Matplotlib, through machine learning and deep learning frameworks like scikit-learn, TensorFlow, and PyTorch, to specialized tools like NetworkX and Gensim, each library supports a different part of data analysis, manipulation, visualization, and modeling. Happy coding! ❤️