Web Scraping with Python

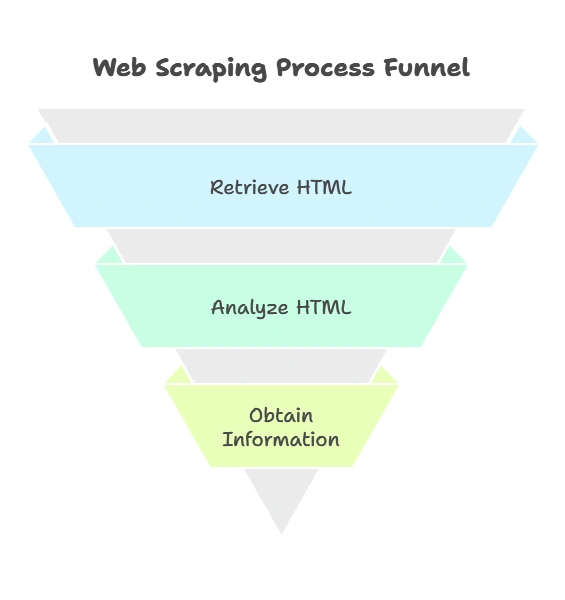

Web scraping is the process of extracting data from websites. It involves fetching HTML content from web pages and parsing it to extract the desired information. Python offers powerful libraries such as requests for fetching web pages and Beautiful Soup for parsing HTML, making web scraping easy and efficient.

Introduction to Web Scraping

What is Web Scraping?

Web scraping is the process of extracting data from websites. It involves fetching HTML content from web pages and parsing it to extract the desired information.

Why Web Scraping?

Web scraping allows us to gather data from websites for various purposes such as data analysis, research, and automation.

Basics of Web Scraping

Tools for Web Scraping

Python offers several libraries for web scraping, including requests for fetching web pages and Beautiful Soup for parsing HTML.

Installing Required Libraries

```shell
pip install requests beautifulsoup4
```

Fetching a Web Page

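```python
import requests

url = 'https://example.com'

# Send an HTTP GET request; timeout (in seconds) keeps the call from hanging indefinitely.
response = requests.get(url, timeout=10)

# Raise requests.HTTPError on 4xx/5xx responses instead of silently using an error page.
response.raise_for_status()

html_content = response.text
```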

Explanation:

In this code snippet, we use the `requests` library to make an HTTP GET request to the specified URL (`'https://example.com'`). The response object contains the HTML content of the web page, which we extract using the `text` attribute and store in the variable `html_content`.
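When a script fetches more than one page, these steps can be wrapped in a small reusable helper. The sketch below is one possible approach, not the only one: it uses a `requests.Session`, which reuses the underlying connection and sends the same default headers with every request. The names `make_session` and `fetch_html` and the User-Agent string are hypothetical placeholders.

```python
import requests

# Hypothetical User-Agent string; replace it with your own project name and contact address.
USER_AGENT = "example-scraper/0.1 (contact: you@example.com)"


def make_session() -> requests.Session:
    """Create a Session that reuses connections and sends the same headers on every request."""
    session = requests.Session()
    session.headers.update({"User-Agent": USER_AGENT})
    return session


def fetch_html(session: requests.Session, url: str, timeout: float = 10.0) -> str:
    """Fetch a page and return its decoded HTML, raising on HTTP error statuses."""
    # Without a timeout, a request to an unresponsive server can wait indefinitely.
    response = session.get(url, timeout=timeout)
    # Turn 4xx/5xx status codes into requests.HTTPError instead of returning an error page.
    response.raise_for_status()
    return response.text
```

For example, `with make_session() as session: html_content = fetch_html(session, 'https://example.com')` returns the page's HTML as a string, or raises `requests.HTTPError` if the server responds with an error status.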

Parsing HTML with Beautiful Soup
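Once the page's HTML is available as a string, it can be handed to Beautiful Soup. In the sketch below, the short `html_content` string is a stand-in (an assumption) so the snippet runs on its own; when following along, keep the `html_content` fetched in the previous step instead.

```python
from bs4 import BeautifulSoup

# Stand-in for the html_content fetched with requests; skip this line if you already have it.
html_content = "<html><head><title>Example Domain</title></head><body><p>Hello</p></body></html>"

# 'html.parser' is the HTML parser that ships with Python's standard library.
soup = BeautifulSoup(html_content, 'html.parser')
print(soup.title.string)
```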


Explanation:

Here, we import the `BeautifulSoup` class from the `bs4` module. We create a `BeautifulSoup` object, `soup`, by passing the HTML content (`html_content`) and the parser to use (`'html.parser'`). This object allows us to navigate and manipulate the HTML structure of the web page.
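As a small illustration of navigating the parsed tree, the sketch below parses an invented HTML snippet (so it runs without network access) and reads the page title, a heading, and a paragraph's text. Dot access such as `.title` returns the first matching tag, or `None` if there is none. The variable names are hypothetical and chosen so they do not overwrite `soup`.

```python
from bs4 import BeautifulSoup

# Invented sample markup, used instead of a live page.
sample_html = """
<html>
  <head><title>Sample Page</title></head>
  <body>
    <h1 class="header">Welcome</h1>
    <p id="intro">Scraping <b>example</b> text.</p>
  </body>
</html>
"""

sample_soup = BeautifulSoup(sample_html, "html.parser")

page_title = sample_soup.title.string        # text of the first <title> tag
heading = sample_soup.h1.get_text()          # text of the first <h1> tag
heading_classes = sample_soup.h1["class"]    # class is multi-valued, so bs4 returns a list
# get_text() joins the text of all descendants, including the nested <b>.
intro_text = sample_soup.find(id="intro").get_text()

print(page_title)        # Sample Page
print(heading_classes)   # ['header']
print(intro_text)        # Scraping example text.
```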

Extracting Data from Web Pages

Finding Elements by Tag Name

```python
# Find all <a> tags
links = soup.find_all('a')
```

Explanation:

Using the `find_all()` method of the `soup` object, we extract all `<a>` tags from the HTML content. This returns a list of `Tag` objects representing the anchor elements on the web page.
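Because `find_all('a')` returns every anchor, including ones without an `href`, it often helps to filter as you go. A minimal sketch on invented markup: passing `href=True` keeps only tags that have the attribute, and `tag.get('href')` returns `None` instead of raising when the attribute is missing. The sample HTML and variable names are assumptions for illustration.

```python
from bs4 import BeautifulSoup

# Invented sample markup: the middle anchor has no href attribute.
sample_html = """
<ul>
  <li><a href="/docs">Docs</a></li>
  <li><a name="top">Back to top</a></li>
  <li><a href="https://example.com/blog">Blog</a></li>
</ul>
"""

sample_soup = BeautifulSoup(sample_html, "html.parser")

all_anchors = sample_soup.find_all("a")                 # every <a>, with or without href
linked_anchors = sample_soup.find_all("a", href=True)   # only <a> tags that have an href

# .get() returns None for a missing attribute, whereas tag["href"] would raise KeyError.
hrefs = [a.get("href") for a in all_anchors]
text_and_href = [(a.get_text(strip=True), a["href"]) for a in linked_anchors]

print(hrefs)          # ['/docs', None, 'https://example.com/blog']
print(text_and_href)  # [('Docs', '/docs'), ('Blog', 'https://example.com/blog')]
```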

Finding Elements by Class or ID

```python
# Find all elements with class 'header'
headers = soup.find_all(class_='header')

# Find element with id 'main-content'
main_content = soup.find(id='main-content')
```

Explanation:

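Similarly, we can select elements by their class or id attributes. In the first example, `find_all(class_='header')` returns every element with the class `'header'`; the trailing underscore in `class_` is needed because `class` is a reserved word in Python. In the second example, `find(id='main-content')` returns the first element with the id `'main-content'`, or `None` if the page has no such element.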
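The same lookups can also be written as CSS selectors with `select()` (all matches) and `select_one()` (first match or `None`). The sketch below runs both styles on invented markup; note that `class_` matches any one of a tag's classes, so an element with `class="header highlight"` is included. Sample HTML and variable names are hypothetical.

```python
from bs4 import BeautifulSoup

# Invented sample markup for illustration.
sample_html = """
<div id="main-content">
  <h2 class="header">First</h2>
  <h2 class="header highlight">Second</h2>
  <p class="note">Not a header</p>
</div>
"""

sample_soup = BeautifulSoup(sample_html, "html.parser")

# class_ matches any one of a tag's classes, so "header highlight" also counts.
header_tags = sample_soup.find_all(class_="header")
main_tag = sample_soup.find(id="main-content")
missing_tag = sample_soup.find(id="does-not-exist")    # None rather than an exception

# The same queries written as CSS selectors.
header_tags_css = sample_soup.select(".header")         # list of all matches
main_tag_css = sample_soup.select_one("#main-content")  # first match or None

print([tag.get_text() for tag in header_tags])  # ['First', 'Second']
print(missing_tag)                              # None
```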

Advanced Techniques in Web Scraping

Handling Dynamic Content

For websites with dynamic content loaded via JavaScript, libraries like Selenium can be used.

Using Selenium for Dynamic Content

```python
from selenium import webdriver

# Start a Chrome browser session controlled by Selenium
driver = webdriver.Chrome()

driver.get('https://example.com')
```

Explanation:

When websites use JavaScript to load content dynamically, fetching the page with `requests` returns only the initial HTML sent by the server, so the data we want may be missing from it. In such cases, we can use the Selenium library, which controls a real web browser programmatically. Here, we instantiate a WebDriver object (`driver`) for the Chrome browser and navigate to the specified URL (`'https://example.com'`).

Interacting with Web Elements

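```python
from selenium.webdriver.common.by import By

# Clicking a button (find_element_by_xpath was removed in Selenium 4; use find_element with By)
button = driver.find_element(By.XPATH, '//button[@id="submit"]')

button.click()
```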

Explanation:

With Selenium, we can interact with web elements like buttons, input fields, etc. In this example, we locate a button using its XPath and then simulate a click on it.

Data Processing and Storage

Processing Extracted Data

Once data is extracted, it can be processed and manipulated using Python’s built-in functionality or third-party libraries like pandas.
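As one example of processing with Python's built-in tools, the sketch below turns raw `href` values into absolute, de-duplicated URLs using `urllib.parse.urljoin` and `urldefrag`. The helper name `normalize_links`, the base URL, and the sample values are assumptions for illustration; pandas could do similar cleanup on a DataFrame, but this version needs only the standard library.

```python
from urllib.parse import urldefrag, urljoin


def normalize_links(base_url, hrefs):
    """Resolve relative hrefs, drop #fragments, skip non-HTTP links and duplicates (order kept)."""
    seen = set()
    result = []
    for href in hrefs:
        if not href:  # None (missing href) or empty string
            continue
        absolute = urljoin(base_url, href)         # "/wiki/X" -> "https://host/wiki/X"
        absolute, _fragment = urldefrag(absolute)  # "page#section" -> "page"
        if not absolute.startswith(("http://", "https://")):
            continue  # e.g. mailto: or javascript: links
        if absolute not in seen:
            seen.add(absolute)
            result.append(absolute)
    return result


# Hypothetical scraped values: a relative path, the same path with a fragment,
# an absolute URL, a missing href, and a mailto: link.
raw_hrefs = [
    "/wiki/Python",
    "/wiki/Python#History",
    "https://example.com/about",
    None,
    "mailto:someone@example.com",
]
clean_links = normalize_links("https://en.wikipedia.org/wiki/Main_Page", raw_hrefs)
print(clean_links)  # ['https://en.wikipedia.org/wiki/Python', 'https://example.com/about']
```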

Storing Data

Extracted data can be stored in various formats such as CSV, JSON, or databases for further analysis or use.

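```python
import csv

# Write extracted data to a CSV file
# (newline='' lets the csv module control line endings; utf-8 handles non-ASCII link text)
with open('data.csv', 'w', newline='', encoding='utf-8') as csvfile:
    writer = csv.writer(csvfile)
    writer.writerow(['Title', 'Link'])
    for link in links:
        writer.writerow([link.get_text(strip=True), link.get('href')])
```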

Explanation:

After extracting data from web pages, we often need to process and store it for further analysis. Here, we use Python’s built-in `csv` module to write the extracted data to a CSV file named `data.csv`. We iterate over the list of links extracted earlier, writing each link’s text and its `href` attribute as one row of the CSV file.
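Besides CSV, the same rows can be stored as JSON or in a database. A minimal sketch using only the standard library (`json` and `sqlite3`); the sample rows, the file name `data.json`, and the table name `links` are assumptions chosen to mirror the CSV example.

```python
import json
import sqlite3

# Hypothetical rows in the same (text, href) shape the CSV example writes.
rows = [("Docs", "https://example.com/docs"), ("Café", "https://example.com/cafe")]

# JSON: a list of objects is easy to reload later with json.load().
records = [{"title": title, "href": href} for title, href in rows]
with open("data.json", "w", encoding="utf-8") as f:
    # ensure_ascii=False writes "Café" as-is instead of an escape sequence.
    json.dump(records, f, ensure_ascii=False, indent=2)

# SQLite: ":memory:" keeps this demo self-contained; pass a path like "data.db" to persist.
conn = sqlite3.connect(":memory:")
with conn:  # commits on success, rolls back if an exception is raised
    conn.execute("CREATE TABLE IF NOT EXISTS links (title TEXT, href TEXT)")
    # "?" placeholders let sqlite3 handle quoting instead of building SQL strings by hand.
    conn.executemany("INSERT INTO links (title, href) VALUES (?, ?)", rows)

stored = conn.execute("SELECT title, href FROM links ORDER BY rowid").fetchall()
conn.close()  # "with conn" commits but does not close the connection
print(stored)
```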

Ethical and Legal Considerations

Respect Website Terms of Service

Ensure that web scraping activities comply with the terms of service of the websites being scraped.

Rate Limiting and Politeness

Implement rate limiting and politeness measures, such as pausing between requests and following the site's `robots.txt` rules, to avoid overloading servers and getting blocked.
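One way to put these measures into practice is sketched below: the standard library's `urllib.robotparser` checks whether a URL is allowed for your user agent, and a small hypothetical `RateLimiter` class enforces a minimum delay between requests. The robots.txt content, the user-agent name, and the one-second fallback are assumptions; for a real site you would load its actual file with `set_url()` and `read()`.

```python
import time
from urllib.robotparser import RobotFileParser

# Invented robots.txt content; for a real site use rp.set_url(".../robots.txt") and rp.read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

rp = RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())

AGENT = "example-scraper"  # hypothetical user-agent name
allowed_public = rp.can_fetch(AGENT, "https://example.com/public/page")
allowed_private = rp.can_fetch(AGENT, "https://example.com/private/page")
delay = rp.crawl_delay(AGENT)  # None if the file sets no Crawl-delay


class RateLimiter:
    """Hypothetical helper: wait() blocks until min_interval seconds have passed since the last call."""

    def __init__(self, min_interval: float):
        self.min_interval = min_interval
        self._last_call = None

    def wait(self) -> None:
        now = time.monotonic()
        if self._last_call is not None:
            remaining = self.min_interval - (now - self._last_call)
            if remaining > 0:
                time.sleep(remaining)
        self._last_call = time.monotonic()


# Use the site's Crawl-delay when present, otherwise an assumed 1-second default.
limiter = RateLimiter(delay if delay is not None else 1.0)
print(allowed_public, allowed_private, delay)  # True False 2
```

In a scraping loop, you would skip URLs for which `rp.can_fetch()` returns `False` and call `limiter.wait()` immediately before each `requests.get(...)`.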

Output Example:

Let’s demonstrate a simple web scraping example using requests and Beautiful Soup to extract links from a webpage:

```python
import requests
from bs4 import BeautifulSoup

url = 'https://en.wikipedia.org/wiki/Main_Page'

response = requests.get(url, timeout=10)
response.raise_for_status()  # stop early if the request failed
html_content = response.text

soup = BeautifulSoup(html_content, 'html.parser')
links = soup.find_all('a')

for link in links[:10]:  # Displaying first 10 links
    print(link.get('href'))

# Output: the href values of the first 10 <a> tags on the Wikipedia main page.
# These may be relative paths ('/wiki/...'), in-page anchors ('#...'), or None for an <a> without an href.
```

Explanation:

- Importing Libraries: We import the `requests` library to make HTTP requests and the `BeautifulSoup` class from the `bs4` module for HTML parsing.
- Fetching Web Page: We make an HTTP GET request to the specified URL (`'https://en.wikipedia.org/wiki/Main_Page'`) using `requests.get()`. The HTML content of the page is stored in the `html_content` variable.
- Parsing HTML: We create a BeautifulSoup object, `soup`, by passing the HTML content and the parser (`'html.parser'`). This allows us to navigate and manipulate the HTML structure of the web page.
- Extracting Links: We use the `find_all()` method of the `soup` object to extract all `<a>` tags (i.e., anchor elements) from the HTML content. This returns a list of `Tag` objects representing the anchor elements.
- Displaying Links: We iterate over the list of links (limited to the first 10 for brevity) and use the `get()` method to extract the `href` attribute value of each link. We print these values to the console.

Throughout this topic, we explored the basics of web scraping, including fetching web pages, parsing HTML, and extracting data. We also looked at handling dynamic content with Selenium, processing extracted data, and storing it in formats such as CSV. Happy coding! ❤️