Scrapy Framework for Web Crawling

Scrapy is one of the most widely used Python frameworks for web crawling and scraping. It bundles the tools needed to navigate websites, extract data, and automate crawling tasks in a single project structure.

Introduction to Scrapy

What is Scrapy?

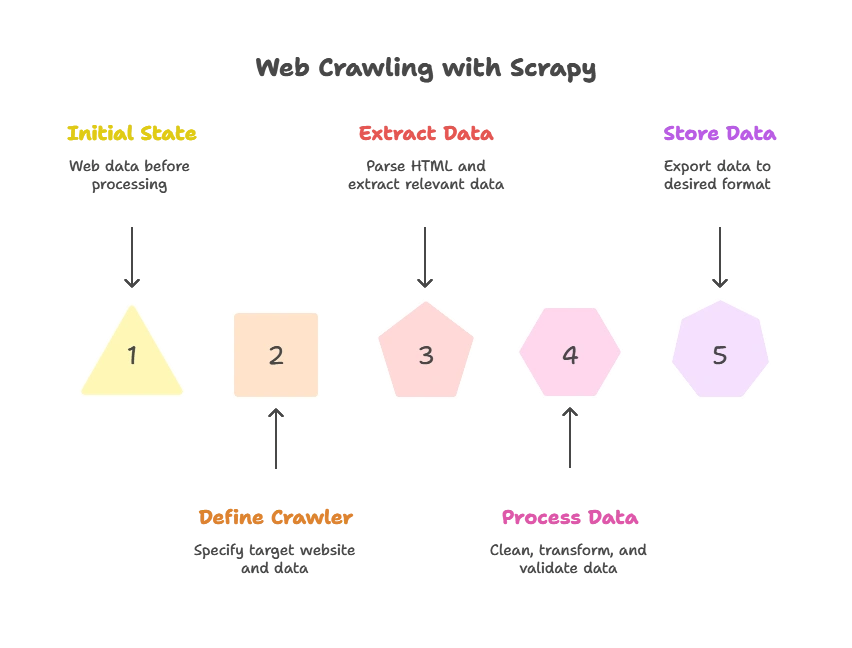

Scrapy is a powerful and extensible framework for web crawling and scraping in Python. It provides tools and structures for building web crawlers that navigate websites, extract data, and store it for further processing.

Why Scrapy?

Scrapy simplifies the process of building web crawlers by handling tasks such as scheduling requests, parsing HTML content, and following links. Requests are processed asynchronously and concurrently, and scraped data can be exported to formats such as JSON, CSV, and XML.

Installation and Setup

Installing Scrapy

You can install Scrapy using pip, the Python package manager:

pip install scrapy

Creating a Scrapy Project

After installation, you can create a new Scrapy project using the scrapy startproject command:

scrapy startproject myproject

Basics of Scrapy

Spiders

Spiders are classes that define how to scrape a website. They specify how to perform requests, extract data from responses, and follow links.

Items

Items are simple containers for the data scraped by spiders. They define the fields that will be extracted from web pages.

Pipelines

Pipelines are components for processing scraped items. They perform tasks such as cleaning, validation, and storing data in databases or files.
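To make the cleaning step concrete, here is a small sketch of a pipeline. The class name CleanPricePipeline and the 'price' field are assumptions for illustration only. The sketch uses the long-documented process_item(self, item, spider) signature and treats the item as a dictionary.

```python
class CleanPricePipeline:
    """Hypothetical pipeline: turns a price string such as '$1,299.00' into a float."""

    def process_item(self, item, spider):
        raw = item.get("price")
        if isinstance(raw, str):
            # Strip the currency symbol and thousands separators before converting.
            item["price"] = float(raw.replace("$", "").replace(",", "").strip())
        # Returning the item passes it on to the next pipeline in the chain.
        return item
```

A pipeline only runs once it is listed in the project's ITEM_PIPELINES setting, for example {"myproject.pipelines.CleanPricePipeline": 300}, assuming the class lives in the project's pipelines.py. For validation, a pipeline can raise DropItem from scrapy.exceptions to discard an item instead of returning it.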

Writing a Spider

Example Spider

Here’s an example of a simple spider that crawls a website and extracts data:

import scrapy


class MySpider(scrapy.Spider):
    name = 'myspider'
    start_urls = ['https://example.com']

    def parse(self, response):
        # Collect the HTML of every <div class="data"> element on the page.
        data = response.css('div.data').getall()
        yield {'data': data}

Explanation:

- name: Name of the spider.

- start_urls: List of URLs to start crawling from.

- parse(): Default callback, called with the response for each URL in start_urls. It extracts data using CSS selectors and yields items.

Advanced Techniques in Scrapy

Middleware

Middleware components allow you to manipulate requests and responses, add custom functionality, and handle errors.
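As an illustration, here is a minimal sketch of a downloader middleware. The class name TagRequestMiddleware and the X-Crawler header are invented for this example. The process_request and process_response hooks are the ones Scrapy calls on downloader middleware.

```python
class TagRequestMiddleware:
    """Hypothetical downloader middleware that tags outgoing requests with a header."""

    def process_request(self, request, spider):
        # Add the header only if the request does not already carry one.
        request.headers.setdefault("X-Crawler", "myproject")
        # Returning None tells Scrapy to keep processing the request normally.
        return None

    def process_response(self, request, response, spider):
        # A real middleware could inspect or replace the response here;
        # this sketch passes it through unchanged.
        return response
```

To activate it, the class would be added to the DOWNLOADER_MIDDLEWARES setting, for example {"myproject.middlewares.TagRequestMiddleware": 543}, assuming it is defined in the project's middlewares.py. The number controls where it runs relative to other middleware.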

Extensions

Extensions are components that extend Scrapy’s functionality. They can be used for monitoring, logging, and controlling the crawling process.

Scrapy covers the full crawling workflow, from installation and project setup through spiders, items, pipelines, middleware, and extensions. That makes it a solid foundation for building scalable and efficient web scraping solutions. Happy coding! ❤️