Distributed Data Processing with Dask

Dask is a Python library for parallel and distributed computing. It provides high-level collections that mirror NumPy and pandas, so users can process datasets larger than the memory of a single machine while staying within the familiar Python ecosystem.

Introduction to Dask

What is Dask?

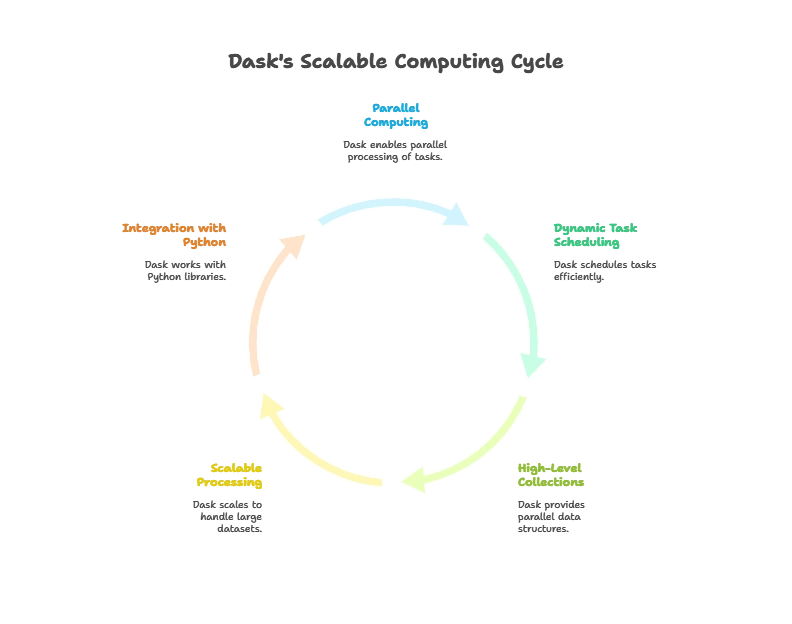

Dask has two main parts: a dynamic task scheduler that runs computations in parallel, and high-level parallel collections (Dask arrays, dataframes, and bags) that build task graphs for that scheduler to execute.

Why Dask?

Dask is designed to scale from single machines to large clusters, making it ideal for processing large datasets that do not fit into memory. It integrates seamlessly with the Python ecosystem and can be used alongside popular libraries like NumPy, pandas, and scikit-learn.

Basics of Dask

Dask Arrays

Dask arrays are distributed, parallel, and chunked arrays that extend the NumPy interface. They allow for out-of-core and parallel computation on large datasets that exceed the memory capacity of a single machine.
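
As a minimal sketch of what "chunked" means here, the snippet below wraps a small NumPy array (a stand-in for data that would normally be too large for memory) and inspects how Dask splits it into blocks. The variable names and the 2x3 chunk size are illustrative choices, not anything Dask prescribes.

```python
import numpy as np
import dask.array as da

# A small NumPy array stands in for a dataset that would not fit in memory.
data = np.arange(24).reshape(4, 6)

# Split it into 2x3 blocks; each block is an ordinary NumPy array.
x = da.from_array(data, chunks=(2, 3))

print(x.chunks)     # block sizes along each axis
print(x.numblocks)  # number of blocks along each axis

# NumPy-style expressions build a task graph; nothing runs until compute().
total = (x * 2).sum()
result = total.compute()
print(result)
```

Smaller chunks give the scheduler more tasks to run in parallel but add scheduling overhead, so the right chunk size depends on the workload.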

Dask Dataframes

Dask dataframes provide parallelized operations on larger-than-memory dataframes, similar to pandas dataframes. They allow for scalable data manipulation and analysis using familiar pandas syntax.

Getting Started with Dask

Installation and Setup

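To get started with Dask, you can install it via pip:

pip install dask

This installs only the core library. The array and dataframe examples below also require NumPy and pandas; the complete extra pulls in these and the other optional dependencies:

pip install "dask[complete]"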

Dask also provides support for distributed computing with Dask.distributed, which can be installed separately:

pip install dask distributed

Creating Dask Arrays

Let’s create a Dask array and perform some basic operations:

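import dask.array as da

# Create a 1000x1000 Dask array of ones, split into 100x100 chunks
x = da.ones((1000, 1000), chunks=(100, 100))

# Build the (lazy) mean along axis 0, giving one value per column
mean = x.mean(axis=0)

# Trigger the computation and show the result
print(mean.compute())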

Explanation:

- We create a Dask array of ones with shape (1000, 1000) and chunk size (100, 100).

- We compute the mean along axis 0 (averaging down the rows, which gives one value per column) using the mean() method.

- We use the compute() method to trigger the computation and print the result.
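
Because every element of the array above is 1, the axis argument makes no visible difference to the output. The following sketch uses a tiny, hand-written array (values chosen only for illustration) to show what axis=0 and axis=1 each produce, and that the result stays lazy until compute() is called.

```python
import numpy as np
import dask.array as da

# Two rows, three columns, with values that make the axes distinguishable.
x = da.from_array(np.array([[1.0, 2.0, 3.0], [3.0, 4.0, 5.0]]), chunks=(1, 3))

col_means = x.mean(axis=0)  # average down the rows: one value per column
row_means = x.mean(axis=1)  # average across the columns: one value per row

# Both are still lazy Dask arrays at this point.
print(type(col_means).__name__)

col_result = col_means.compute()
row_result = row_means.compute()
print(col_result)
print(row_result)
```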

Creating Dask Dataframes

Let’s create a Dask dataframe and perform some basic operations:

import dask.dataframe as dd

# Create a Dask dataframe from a CSV file
df = dd.read_csv('data.csv')

# Calculate the mean of a column
mean = df['column'].mean()

# Show the result
print(mean.compute())

Explanation:

- We create a Dask dataframe by reading a CSV file using dd.read_csv().

- We calculate the mean of a column using the mean() method.

- We use the compute() method to trigger the computation and print the result.

Advanced Techniques in Dask

Custom Workloads with Dask Delayed

Dask delayed allows for parallelizing custom Python code by delaying function execution and constructing a task graph. This is useful for parallelizing non-array-based workloads or integrating with existing codebases.
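
A minimal sketch of this idea follows. The three functions (load, clean, combine) are hypothetical stand-ins for steps in an existing pipeline; decorating them with delayed means that calling them records a task in the graph instead of running it.

```python
from dask import delayed


@delayed
def load(n):
    # Stand-in for reading one input; returns the integers 0..n-1.
    return list(range(n))


@delayed
def clean(values):
    # Stand-in for a per-input transformation.
    return [v * 2 for v in values]


@delayed
def combine(parts):
    # Stand-in for a final reduction over all inputs.
    return sum(sum(p) for p in parts)


# Calling the functions only builds the task graph.
parts = [clean(load(n)) for n in range(1, 4)]
total = combine(parts)

# compute() executes the graph; independent load/clean tasks can run in parallel.
result = total.compute()
print(result)
```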

Distributed Computing with Dask.distributed

Dask.distributed extends Dask’s capabilities to distributed computing across multiple machines or a cluster. It provides a distributed scheduler, fault tolerance, and support for large-scale data processing.
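
The sketch below shows the basic Client workflow under simplifying assumptions: it starts a small in-process cluster on the local machine (one worker, two threads, dashboard disabled) rather than connecting to a real multi-machine cluster, and square is a made-up function. On a real deployment you would typically pass the scheduler's address to Client instead.

```python
from dask.distributed import Client


def square(n):
    # Hypothetical task; any picklable Python function works.
    return n * n


# processes=False keeps the worker in this process, which suits a quick local test.
with Client(
    processes=False,
    n_workers=1,
    threads_per_worker=2,
    dashboard_address=None,
) as client:
    # map() submits one task per input and returns futures immediately.
    futures = client.map(square, range(5))

    # gather() blocks until the results are available.
    results = client.gather(futures)

    # submit() sends a single task; here it sums the gathered results.
    total = client.submit(sum, results).result()

print(results)
print(total)
```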

Real-World Applications of Dask

Big Data Analytics

Dask is widely used for big data analytics tasks such as data cleaning, preprocessing, exploratory data analysis (EDA), and machine learning model training on large datasets.

Scientific Computing

Dask is popular in the scientific computing community for scalable data analysis and simulation tasks in domains such as geospatial analysis, climate science, and computational biology.

In this topic, we've explored the power and versatility of Dask for distributed data processing in Python. From its core concepts like Dask arrays and dataframes to advanced techniques for distributed computing, Dask provides developers with a comprehensive toolkit for working with large datasets efficiently and effectively. Happy coding! ❤️