Building Distributed Systems with Go

A distributed system is a network of computers that work together to achieve a common goal. These computers, also known as nodes, communicate with each other by passing messages. Unlike a single monolithic system, a distributed system spreads work and state across machines so that no single machine has to do everything, although many designs still elect a coordinator or leader for specific tasks.

Why Distributed Systems?

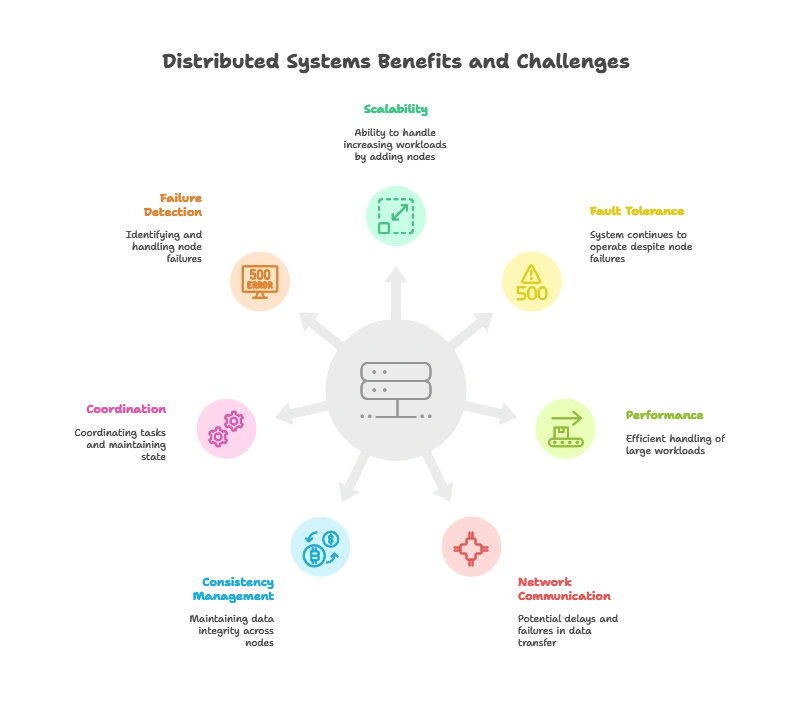

Distributed systems offer several benefits such as scalability, fault tolerance, and better performance. By distributing tasks across multiple machines, distributed systems can handle large workloads more efficiently and continue to operate even if some nodes fail.

Understanding Distributed Systems

1. Characteristics

- Concurrency: Multiple processes (goroutines) execute seemingly simultaneously.

- Scalability: Ability to handle increasing load by adding more nodes.

- Fault Tolerance: System continues to operate despite node failures.

- Transparency: Ideally, users are unaware of the system's distributed nature.

2. Challenges

- Network Communication: Network delays and failures can affect both performance and correctness.

- Consistency Management: Maintaining data consistency across nodes.

- Coordination: Coordinating tasks and maintaining state across nodes.

- Failure Detection and Recovery: Identifying and handling node failures.

Remote Procedure Calls (RPC):

Remote Procedure Call (RPC) is a communication model that allows a process or application on one node to invoke a function or procedure on another node as if it were a local call. RPC abstracts away the details of network communication, allowing developers to focus on application logic rather than low-level networking code. Two common frameworks for implementing RPC in distributed systems are gRPC and Apache Thrift.

Let’s delve into each of these libraries:

1. gRPC:

– Overview: gRPC is an open-source RPC framework developed by Google. It uses HTTP/2 for transport and Protocol Buffers (protobuf) for serialization, making it efficient, fast, and language-agnostic.

– Key Features:

– IDL: gRPC uses Protocol Buffers (protobuf) as its Interface Definition Language (IDL). Protobuf allows you to define the service interface and message types in a language-neutral way, and then generate code for different programming languages.

– Bidirectional Streaming: gRPC supports bidirectional streaming, allowing both the client and server to send a stream of messages to each other asynchronously.

– Middleware Support: gRPC provides built-in support for interceptors (middleware), enabling features such as logging, authentication, and monitoring.

– Usage: To use gRPC, you define a service interface and message types in a `.proto` file, compile it using the `protoc` compiler, and then implement the service on the server and client-side using generated code.

2. Apache Thrift:

– Overview: Apache Thrift is an RPC framework originally developed by Facebook. It supports multiple transport protocols (including HTTP, TCP, and UNIX domain sockets) and multiple serialization formats (including Binary, JSON, and Compact Protocol).

– Key Features:

– IDL: Thrift uses its own Interface Definition Language (also called Thrift) for defining services and data types. The Thrift compiler generates code for multiple programming languages, making it easy to develop cross-language RPC systems.

– Transport and Protocol Independence: Thrift allows you to choose different transport protocols and serialization formats independently, providing flexibility in designing RPC systems tailored to specific requirements.

– Versioning: Thrift supports versioning of service interfaces, allowing backward and forward compatibility between clients and servers.

– Usage: To use Apache Thrift, you define service interfaces and data types in a `.thrift` file, compile it using the `thrift` compiler, and then implement the service on the server and client-side using generated code.

Comparison:

– Protocol Buffers vs. Thrift IDL: gRPC uses Protocol Buffers for IDL, while Apache Thrift uses its own Thrift IDL. Both are powerful, but the choice may depend on existing infrastructure and preferences.

– Transport and Serialization: gRPC primarily uses HTTP/2 and Protocol Buffers, while Apache Thrift supports various transport protocols and serialization formats, providing more flexibility.

– Community and Ecosystem: Both gRPC and Apache Thrift have active communities and are used in production by many organizations. The choice may depend on factors such as existing codebase, language support, and specific requirements of the project.

In summary, gRPC and Apache Thrift are popular RPC frameworks for building distributed systems. They provide powerful abstractions for defining service interfaces and handling remote procedure calls, making it easier to build efficient, scalable, and language-agnostic RPC systems. The choice between them depends on factors such as language support, transport protocol requirements, and familiarity with the respective technologies.

Let’s look at a simple gRPC server in Go:

package main

import (

"context"

"log"

"net"

"google.golang.org/grpc"

// pb is the package generated by protoc from the .proto file.
// The import path below is a placeholder; use your own module path.
pb "example.com/greeter/pb"

)

// The service and message types are defined in a separate `.proto` file

// GreeterServer implements the generated pb.GreeterServer interface
type GreeterServer struct {

// Embedding this type is required by code generated with current protoc-gen-go-grpc
pb.UnimplementedGreeterServer

}

func (s *GreeterServer) SayHello(ctx context.Context, in *pb.HelloRequest) (*pb.HelloResponse, error) {

return &pb.HelloResponse{Message: "Hello, " + in.GetName()}, nil

}

func main() {

lis, err := net.Listen("tcp", ":8080")

if err != nil {

log.Fatal(err)

}

s := grpc.NewServer()

pb.RegisterGreeterServer(s, &GreeterServer{})

log.Println("Server listening on port 8080")

if err := s.Serve(lis); err != nil {

log.Fatal(err)

}

}

This code is a basic example of a gRPC server in Go. It assumes that the `Greeter` service and its `HelloRequest` and `HelloResponse` messages have been defined in a `.proto` file and compiled into a Go package, imported here as `pb`. Let’s break down the code:

1. Import Statements:

– `context`: This package provides functionality for carrying deadlines, cancellation signals, and other request-scoped values across API boundaries.

– `log` and `net`: These standard library packages provide logging and the TCP listener used by the server.

– `google.golang.org/grpc`: This package implements the gRPC framework for Go.

– `pb`: This is the package generated from the `.proto` file. The import path `example.com/greeter/pb` is a placeholder for wherever your generated code lives.

2. GreeterServer Struct and Methods:

– `GreeterServer`: This struct implements the generated gRPC service interface `pb.GreeterServer`. It embeds `pb.UnimplementedGreeterServer`, which current versions of the Go gRPC code generator require, and contains the methods that handle RPC requests.

– `SayHello`: This method implements the `SayHello` RPC method defined in the gRPC service interface. It takes a context and a `HelloRequest` message as input and returns a `HelloResponse` message and an error.

3. Main Function:

– `main()`: This function is the entry point of the program.

– `lis, err := net.Listen("tcp", ":8080")`: This line creates a TCP listener on port 8080 to accept incoming connections.

– `s := grpc.NewServer()`: This line creates a new gRPC server instance.

– `pb.RegisterGreeterServer(s, &GreeterServer{})`: This line registers the `GreeterServer` struct as the implementation of the `Greeter` service on the gRPC server `s`.

– `log.Println("Server listening on port 8080")`: This line logs a message indicating that the server is listening on port 8080.

– `if err := s.Serve(lis); err != nil { log.Fatal(err) }`: This line starts the gRPC server, which accepts incoming connections on the listener `lis` and blocks until the server stops. If an error occurs, it logs the error and exits the program.

Overall, this code sets up a gRPC server that listens for incoming connections on port 8080 and registers a service implementation (`GreeterServer`) to handle RPC requests for the `Greeter` service. When a client sends an RPC request to this server, the corresponding method (`SayHello`) in the `GreeterServer` struct will be invoked to process the request and generate a response.
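The gRPC server above cannot run without generated code, so here is a self-contained sketch of the same request/response idea using Go's standard `net/rpc` package instead. This is a different, simpler RPC mechanism than gRPC (Go-specific encoding, no `.proto` file), shown only to illustrate a remote call that looks like a local one. The `Greeter` type and the `startServer` and `greet` helpers are names chosen for this illustration.

```go
package main

import (
	"fmt"
	"log"
	"net"
	"net/rpc"
)

// HelloRequest and HelloResponse mirror the message names used in the gRPC example.
type HelloRequest struct{ Name string }
type HelloResponse struct{ Message string }

// Greeter is the receiver whose exported methods are served over RPC.
type Greeter struct{}

// SayHello follows the method shape net/rpc expects: (args, *reply) error.
func (g *Greeter) SayHello(req HelloRequest, resp *HelloResponse) error {
	resp.Message = "Hello, " + req.Name
	return nil
}

// startServer registers Greeter and serves it on an ephemeral local port.
func startServer() (string, error) {
	srv := rpc.NewServer()
	if err := srv.Register(&Greeter{}); err != nil {
		return "", err
	}
	lis, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return "", err
	}
	go srv.Accept(lis) // accept connections in the background
	return lis.Addr().String(), nil
}

// greet dials the server and invokes the remote method by name.
func greet(addr, name string) (string, error) {
	client, err := rpc.Dial("tcp", addr)
	if err != nil {
		return "", err
	}
	defer client.Close()

	var resp HelloResponse
	if err := client.Call("Greeter.SayHello", HelloRequest{Name: name}, &resp); err != nil {
		return "", err
	}
	return resp.Message, nil
}

func main() {
	addr, err := startServer()
	if err != nil {
		log.Fatal(err)
	}
	msg, err := greet(addr, "Gopher")
	if err != nil {
		log.Fatal(err)
	}
	fmt.Println(msg)
}
```

Server and client share one process here purely for convenience; the call still travels over a TCP connection, which is the part that RPC frameworks hide from application code.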

Publish-Subscribe (Pub/Sub):

Publish-Subscribe (Pub/Sub) is a messaging pattern where messages, also known as events or notifications, are published by producers (publishers) to topics, and any interested subscribers (consumers) receive them. This pattern allows for loosely coupled communication between different parts of a system, enabling scalable and flexible architectures. Several messaging systems and frameworks facilitate Pub/Sub communication, including NATS, Kafka, and RabbitMQ. Let’s explore this pattern in more detail:

1. Components:

– Publishers (Producers): Entities responsible for generating and publishing messages to specific topics. Publishers do not need to know anything about the subscribers. They simply publish messages to the messaging system.

– Topics (Channels): Logical channels or subjects to which messages are published. Topics categorize messages based on their content or purpose. Subscribers can subscribe to one or more topics to receive relevant messages.

– Subscribers (Consumers): Entities interested in receiving messages from specific topics. Subscribers subscribe to topics and receive messages published to those topics. Subscribers can be distributed across different systems or processes.

2. Workflow:

– Publishing: Publishers publish messages to specific topics. Messages can contain any type of data, such as text, JSON, or binary payloads.

– Subscription: Subscribers subscribe to topics they are interested in receiving messages from. They specify the topics they want to subscribe to and define handlers or callbacks to process incoming messages.

– Message Delivery: When a message is published to a topic, the messaging system delivers the message to all subscribers subscribed to that topic.

– Consumption: Subscribers receive messages from subscribed topics and process them according to their application logic. Subscribers can perform tasks such as updating database records, triggering actions, or sending notifications to users.

3. Advantages:

– Loose Coupling: Pub/Sub decouples publishers from subscribers, allowing them to operate independently. Publishers and subscribers do not need to be aware of each other’s existence.

– Scalability: Pub/Sub systems can scale horizontally to handle large volumes of messages and accommodate a growing number of publishers and subscribers.

– Flexibility: Topics provide a flexible way to categorize messages, allowing subscribers to subscribe only to topics relevant to their interests.

– Reliability: Pub/Sub systems often provide features such as message persistence, delivery guarantees, and fault tolerance to ensure reliable message delivery.

4. Messaging Systems for Pub/Sub:

– NATS: NATS is a lightweight and high-performance messaging system designed for cloud-native applications. It provides simple Pub/Sub messaging semantics with low-latency message delivery.

– Kafka: Apache Kafka is a distributed streaming platform that supports Pub/Sub messaging patterns. It is highly scalable and durable, making it suitable for building real-time data pipelines and event-driven architectures.

– RabbitMQ: RabbitMQ is a feature-rich message broker that supports various messaging patterns, including Pub/Sub. It provides robust message queuing, routing, and delivery features, making it suitable for enterprise applications.

In summary, Pub/Sub is a messaging pattern that enables loosely coupled communication between publishers and subscribers. It promotes scalability, flexibility, and reliability in distributed systems by allowing messages to be published to topics and delivered to interested subscribers. Various messaging systems like NATS, Kafka, and RabbitMQ facilitate Pub/Sub communication, each with its own features and capabilities tailored to different use cases.

package main

import (

"fmt"

"log"

"github.com/nats-io/nats.go"

)

func main() {

nc, err := nats.Connect(nats.DefaultURL)

if err != nil {

log.Fatal(err)

}

defer nc.Close()

// Subscribe: messages published to "greetings" are delivered to ch

ch := make(chan *nats.Msg, 64)

sub, err := nc.ChanSubscribe("greetings", ch)

if err != nil {

log.Fatal(err)

}

defer sub.Unsubscribe()

// Publish

msg := []byte("Hello, world!")

err = nc.Publish("greetings", msg)

if err != nil {

log.Fatal(err)

}

// Receive and process messages (runs until the program is stopped)

for msg := range ch {

fmt.Println(string(msg.Data))

}

}

This code demonstrates a basic example of using the NATS messaging system for pub/sub communication in Go. Let’s break it down step by step:

1. Package Imports:

– `fmt`: This package provides functions for formatted printing, used here to print the received message to the console.

– `log`: This package is used to report fatal errors.

– `github.com/nats-io/nats.go`: This line imports the NATS Go client library, allowing you to interact with a NATS server.

2. main Function:

– The `main` function is the entry point of the program.

3. Connecting to NATS Server:

– `nc, err := nats.Connect(nats.DefaultURL)`: This line attempts to connect to a NATS server running on the default URL (`nats://127.0.0.1:4222`). It assigns the connection object (`nc`) and any potential error (`err`) to the respective variables.

– `if err != nil { log.Fatal(err) }`: This checks for connection errors. If an error occurs (`err` is not nil), the program terminates with a fatal message (`log.Fatal`).

– `defer nc.Close()`: This closes the connection when `main` returns.

4. Subscribing to a Topic:

– `ch := make(chan *nats.Msg, 64)`: This line creates a buffered Go channel that will receive incoming messages.

– `sub, err := nc.ChanSubscribe("greetings", ch)`: This line subscribes to a topic (a "subject" in NATS terminology) named `"greetings"` and asks the client to deliver its messages to `ch`. The subscription object (`sub`) and any error (`err`) are stored in the variables.

– `if err != nil { log.Fatal(err) }`: Similar to the connection step, this checks for subscription errors and terminates the program if one occurs.

– `defer sub.Unsubscribe()`: This deferred call removes the subscription when `main` returns.

5. Publishing a Message:

– `msg := []byte("Hello, world!")`: This line creates a byte slice containing the message `"Hello, world!"`. Message payloads in NATS are byte slices.

– `err = nc.Publish("greetings", msg)`: This line publishes the message `msg` to the same topic `"greetings"` used for the subscription.

6. Receiving and Processing Messages:

– `for msg := range ch`: This line starts a loop that receives each message delivered to the channel. Because the channel is never closed, the loop keeps waiting for new messages until the program is stopped.

– `fmt.Println(string(msg.Data))`: Inside the loop, the code converts the message payload (`msg.Data`) back to a string and prints it to the console.

In summary, this code demonstrates:

- Connecting to a NATS server

- Subscribing to a topic

- Publishing a message to the same topic

- Receiving messages from the subscribed topic and printing them
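The publish, subscribe, and delivery workflow described in this section can also be sketched without an external server. The following is a minimal in-memory stand-in, assuming a hypothetical `Broker` type built on Go channels; it is not how NATS, Kafka, or RabbitMQ work internally, only an illustration of topic-based fan-out.

```go
package main

import (
	"fmt"
	"sync"
)

// Broker is a hypothetical in-memory stand-in for a messaging system.
type Broker struct {
	mu   sync.RWMutex
	subs map[string][]chan string // topic -> subscriber channels
}

// NewBroker creates an empty broker.
func NewBroker() *Broker {
	return &Broker{subs: make(map[string][]chan string)}
}

// Subscribe registers interest in a topic and returns a channel of messages.
func (b *Broker) Subscribe(topic string) <-chan string {
	ch := make(chan string, 16) // buffered so Publish does not block on slow readers
	b.mu.Lock()
	b.subs[topic] = append(b.subs[topic], ch)
	b.mu.Unlock()
	return ch
}

// Publish delivers msg to every subscriber of topic and reports how many
// received it. A subscriber whose buffer is full misses the message.
func (b *Broker) Publish(topic, msg string) int {
	b.mu.RLock()
	defer b.mu.RUnlock()
	delivered := 0
	for _, ch := range b.subs[topic] {
		select {
		case ch <- msg:
			delivered++
		default: // buffer full: drop for this subscriber
		}
	}
	return delivered
}

func main() {
	b := NewBroker()
	first := b.Subscribe("greetings")
	second := b.Subscribe("greetings")
	alerts := b.Subscribe("alerts")

	n := b.Publish("greetings", "Hello, world!")
	fmt.Println("delivered to", n, "subscribers")
	fmt.Println(<-first)
	fmt.Println(<-second)
	fmt.Println("messages waiting on alerts:", len(alerts))
}
```

The publisher never references a subscriber directly, which is the loose coupling described above. Dropping messages for slow subscribers is a deliberate simplification; real brokers offer persistence and delivery guarantees instead.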

Distributed Key-Value Stores (KV Stores):

A Distributed Key-Value Store (KV Store) is a type of distributed database that stores data as a collection of key-value pairs and spreads this data across multiple nodes in a network. Each key-value pair consists of a unique identifier (the key) and the associated data (the value). These stores are designed to provide high availability, fault tolerance, and scalability for applications that require fast access to data.

Here’s a breakdown of how a Distributed Key-Value Store works:

1. Data Distribution: In a distributed KV store, the data is partitioned and distributed across multiple nodes in the network. Each node is responsible for storing and managing a subset of the data. A good partitioning scheme spreads the workload roughly evenly and allows the system to scale horizontally by adding more nodes as needed.

2. Replication: To ensure fault tolerance and high availability, data is often replicated across multiple nodes. This means that each piece of data is stored redundantly on multiple nodes. If one node fails, another node can take over the responsibility of serving the data, ensuring that the system remains operational.

3. Consistency: Maintaining consistency is crucial in distributed systems. KV stores typically offer different consistency models, such as eventual consistency (replicas may briefly disagree but converge to the same state over time) or strong consistency (a read always reflects the latest acknowledged write). Distributed consensus algorithms (e.g., Raft, Paxos) are commonly used to coordinate updates when strong consistency is required.

4. Scalability: Distributed KV stores are designed to scale horizontally, meaning that you can add more nodes to the system to handle increased workload and data volume. This allows the system to accommodate growing demands without sacrificing performance.

5. API: KV stores typically offer a simple API for storing, retrieving, and deleting key-value pairs. These APIs are usually lightweight and optimized for high throughput and low latency. Clients can interact with the KV store using these APIs to access and manipulate data.

Examples of popular Distributed Key-Value Stores include Apache Cassandra, Amazon DynamoDB, and etcd. These systems are widely used in various applications, including caching, session management, configuration management, and distributed coordination.
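The data distribution and replication steps above can be sketched as a simple placement function. This is a minimal illustration, assuming hash-modulo partitioning and "next node" replica placement; `nodeFor` and `replicasFor` are hypothetical names. Production stores typically use consistent hashing or a similar scheme instead, because plain modulo placement moves most keys whenever the node count changes.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// nodeFor maps a key to one of n nodes using a hash of the key.
func nodeFor(key string, n int) int {
	h := fnv.New32a()
	h.Write([]byte(key))
	return int(h.Sum32() % uint32(n))
}

// replicasFor returns the primary node for key followed by the next r-1
// nodes (wrapping around), so each key lives on r distinct nodes.
func replicasFor(key string, n, r int) []int {
	if r > n {
		r = n // cannot have more replicas than nodes
	}
	primary := nodeFor(key, n)
	nodes := make([]int, 0, r)
	for i := 0; i < r; i++ {
		nodes = append(nodes, (primary+i)%n)
	}
	return nodes
}

func main() {
	for _, key := range []string{"foo", "bar", "user:42"} {
		fmt.Printf("%-8s primary=node%d replicas=%v\n",
			key, nodeFor(key, 4), replicasFor(key, 4, 2))
	}
}
```

Because placement depends only on the key and the node count, any client can compute where a key lives without asking a central directory.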

Let’s create a simple example of a key-value store using Go. In this example, we’ll model two nodes as independent in-memory stores within a single program. Nothing is replicated between them yet, so a key written to one node is not visible on the other.

package main

import (

"fmt"

"sync"

)

// KVStore represents one node's in-memory key-value store

type KVStore struct {

data map[string]string

mu sync.RWMutex // Mutex for thread-safe access to the data

}

// NewKVStore creates a new instance of KVStore

func NewKVStore() *KVStore {

return &KVStore{

data: make(map[string]string),

}

}

// Set inserts or updates a key-value pair in the store

func (kv *KVStore) Set(key, value string) {

kv.mu.Lock()

defer kv.mu.Unlock()

kv.data[key] = value

}

// Get retrieves the value associated with a key from the store

func (kv *KVStore) Get(key string) (string, bool) {

kv.mu.RLock()

defer kv.mu.RUnlock()

value, ok := kv.data[key]

return value, ok

}

func main() {

// Create multiple instances of KVStore to simulate a distributed system

kv1 := NewKVStore()

kv2 := NewKVStore()

// Set a key-value pair in the first node

kv1.Set("foo", "bar")

// Retrieve the value associated with the key from both nodes

value1, found1 := kv1.Get("foo")

value2, found2 := kv2.Get("foo")

// Display the results

fmt.Println("Node 1 - Value:", value1, "Found:", found1)

fmt.Println("Node 2 - Value:", value2, "Found:", found2)

}

In this code:

- We define a `KVStore` struct to represent one node of our key-value store. It contains a map to store key-value pairs and a mutex (`sync.RWMutex`) to ensure thread-safe access to the data.

- The `Set` method adds or updates a key-value pair in the store, while the `Get` method retrieves the value associated with a key.

- We create two instances of `KVStore` (`kv1` and `kv2`) to simulate two nodes in our distributed system.

- We set a key-value pair in `kv1` and then attempt to retrieve the value associated with the same key from both `kv1` and `kv2`.

- Finally, we print the results. Because the two stores share no state and nothing copies the write from one to the other, node 1 returns `bar` while node 2 reports that the key was not found.

This example is simplified and runs in-memory within a single Go program. In a real-world scenario, you would replace the in-memory storage with a distributed data store like etcd or Redis and implement network communication for coordination between nodes.
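To make the missing replication step concrete, here is a minimal sketch in which every write is copied to all replicas before it returns. The `Replica` and `Cluster` types are hypothetical and still live in one process; the sketch ignores node failures, network errors, and conflicting concurrent writes, which is where consensus algorithms come in.

```go
package main

import (
	"fmt"
	"sync"
)

// Replica is one in-memory copy of the data.
type Replica struct {
	mu   sync.RWMutex
	data map[string]string
}

// NewReplica creates an empty replica.
func NewReplica() *Replica {
	return &Replica{data: make(map[string]string)}
}

// Set stores a key-value pair on this replica.
func (r *Replica) Set(key, value string) {
	r.mu.Lock()
	defer r.mu.Unlock()
	r.data[key] = value
}

// Get reads a key from this replica.
func (r *Replica) Get(key string) (string, bool) {
	r.mu.RLock()
	defer r.mu.RUnlock()
	v, ok := r.data[key]
	return v, ok
}

// Cluster (hypothetical) fans every write out to all of its replicas.
type Cluster struct {
	replicas []*Replica
}

// NewCluster creates a cluster with n empty replicas.
func NewCluster(n int) *Cluster {
	c := &Cluster{}
	for i := 0; i < n; i++ {
		c.replicas = append(c.replicas, NewReplica())
	}
	return c
}

// Set writes to every replica synchronously before returning.
func (c *Cluster) Set(key, value string) {
	for _, r := range c.replicas {
		r.Set(key, value)
	}
}

// GetFrom reads a key from the replica with index i.
func (c *Cluster) GetFrom(i int, key string) (string, bool) {
	return c.replicas[i].Get(key)
}

func main() {
	c := NewCluster(2)
	c.Set("foo", "bar")
	for i := 0; i < 2; i++ {
		v, ok := c.GetFrom(i, "foo")
		fmt.Printf("Node %d - Value: %s Found: %t\n", i+1, v, ok)
	}
}
```

With the write fanned out, both nodes now return the value. Writing to every replica synchronously favors consistency over availability: in a networked version, a single unreachable replica would stall or fail the write.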

Distributed Coordination (Leader Election):

Distributed coordination, especially leader election, plays a vital role in ensuring the stability and efficiency of distributed systems. In leader election, multiple nodes within a distributed network compete to select a leader, which then assumes the responsibility of coordinating and managing various activities within the system. Let’s delve deeper into the theory behind distributed coordination and leader election:

1. Importance of Distributed Coordination:

– In distributed systems, tasks are often distributed across multiple nodes to improve scalability, fault tolerance, and performance.

– However, coordinating these distributed tasks poses challenges such as maintaining consistency, ensuring fault tolerance, and managing concurrent access to shared resources.

– Distributed coordination mechanisms like leader election help in achieving consensus among nodes and ensuring coordinated execution of tasks.

2. Leader Election Process:

– In leader election, nodes within a distributed network initiate an election process to select a leader.

– The leader is responsible for making decisions, coordinating activities, and ensuring consistency across the distributed system.

– Nodes typically exchange messages and perform computations to determine the leader based on predefined criteria.

– Once a leader is elected, it informs other nodes of its leadership status, and they acknowledge the leader’s authority.

3. Criteria for Leader Election:

– Various criteria can be used to determine the suitability of a node for leadership, such as:

– Node ID: Nodes with higher or lower IDs may be preferred as leaders based on the specific requirements of the system.

– Availability: Nodes that are currently operational and reachable may be prioritized for leadership.

– Performance: Nodes with better performance characteristics (e.g., lower latency, higher throughput) may be favored as leaders.

4. Challenges in Leader Election:

– Network Partitioning: If the network is partitioned, nodes on different partitions may simultaneously elect different leaders, leading to inconsistencies.

– Node Failures: Nodes may fail during the election process, complicating the determination of a stable leader.

– Concurrency: Multiple nodes may initiate the election process concurrently, potentially leading to conflicts and race conditions.

5. Strategies for Leader Election:

– Consensus Algorithms: Distributed consensus algorithms like Paxos, Raft, and ZAB provide robust mechanisms for leader election and maintaining consistency across distributed systems.

– Fault Tolerance: Leader election algorithms should be designed to handle node failures and network partitions gracefully, ensuring that the system remains operational even in adverse conditions.

– Scalability: Leader election mechanisms should scale with the size of the distributed network, accommodating additional nodes without significant overhead.

6. Implementation in Go:

– Go’s concurrency features, such as goroutines and channels, make it well-suited for implementing distributed coordination mechanisms like leader election.

– Developers can leverage Go’s standard library and third-party packages to implement leader election algorithms and handle network communication efficiently.

– The example code below demonstrates a simple leader election algorithm implemented in Go, where nodes compete based on their IDs.

In summary, distributed coordination, particularly leader election, is essential for ensuring the reliability, consistency, and efficiency of distributed systems. By understanding the theory behind leader election and implementing robust algorithms, developers can build scalable and fault-tolerant distributed systems using programming languages like Go.

package main

import (

"fmt"

"math/rand"

"sync"

"time"

)

// Node represents a participant in the leader election process

type Node struct {

ID int

isLeader bool

electionTerm int

leaderChan chan int

mutex sync.Mutex

}

// NewNode creates a new instance of Node

func NewNode(id int) *Node {

return &Node{

ID: id,

isLeader: false,

electionTerm: 0,

leaderChan: make(chan int, 1), // buffered: StartElection sends before ElectLeader starts receiving

}

}

// StartElection initiates the leader election process

func (n *Node) StartElection() {

n.mutex.Lock()

n.electionTerm++

currentTerm := n.electionTerm

n.mutex.Unlock()

fmt.Printf("Node %d starts election (Term %d)\n", n.ID, currentTerm)

// Simulate the election process (wait for a random duration)

time.Sleep(time.Duration(rand.Intn(300)) * time.Millisecond)

// Send the election result to the leader channel

n.leaderChan <- n.ID

}

// ElectLeader selects the leader among multiple nodes

func ElectLeader(nodes []*Node) {

// Create a wait group to wait for all nodes to complete the election

var wg sync.WaitGroup

wg.Add(len(nodes))

// Start the election process for each node concurrently

for _, node := range nodes {

go func(n *Node) {

defer wg.Done()

n.StartElection()

}(node)

}

// Wait for all nodes to complete the election

wg.Wait()

// Select the node with the highest ID as the leader

var leaderID int

for _, node := range nodes {

id := <-node.leaderChan

if id > leaderID {

leaderID = id

}

}

// Set the elected leader

for _, node := range nodes {

if node.ID == leaderID {

node.isLeader = true

fmt.Printf("Node %d elected as the leader\n", node.ID)

} else {

node.isLeader = false

}

}

}

func main() {

// Create multiple nodes

nodes := []*Node{

NewNode(1),

NewNode(2),

NewNode(3),

NewNode(4),

}

// Elect the leader among the nodes

ElectLeader(nodes)

}

In this code:

- We define a `Node` struct to represent a participant in the leader election process. Each node has an ID, a flag to indicate whether it’s the leader, an election term, and a channel (`leaderChan`) to communicate the election result.

- The `StartElection` method initiates the election process for a node. It increments the election term, simulates the election process by waiting for a random duration, and then sends the node’s ID to the `leaderChan`.

- The `ElectLeader` function coordinates the leader election among multiple nodes. It starts the election process for each node concurrently and waits for all nodes to complete the election. Then, it selects the node with the highest ID as the leader and updates the `isLeader` flag accordingly.

- In the `main` function, we create multiple nodes and then initiate the leader election process by calling the `ElectLeader` function.

This example demonstrates a simple leader election algorithm where nodes compete based on their IDs. In a real-world scenario, you might use more sophisticated algorithms like Paxos or Raft for distributed consensus and leader election.

Distributed Tasks and Work Queues:

Distributed tasks and work queues are essential components of distributed systems designed to distribute tasks across multiple worker nodes, enabling improved parallelism, scalability, and fault tolerance. This approach is particularly beneficial for applications with heavy computational workloads or tasks that can be processed independently in parallel. Let’s explore the concept of distributed tasks and work queues in more detail:

1. Overview of Distributed Tasks:

– Distributed tasks involve breaking down complex tasks or jobs into smaller, independent units of work that can be executed concurrently on multiple nodes.

– These tasks are typically designed to be idempotent, meaning they produce the same result regardless of how many times they are executed.

– By distributing tasks across multiple nodes, we can leverage the computational power of the entire system, leading to faster execution and improved scalability.

2. Work Queue Systems:

– Work queue systems serve as intermediaries between task producers (clients) and task consumers (workers).

– Producers enqueue tasks into the work queue, and workers dequeue tasks from the queue for execution.

– This decoupling of task submission and execution allows for asynchronous and parallel processing of tasks, as workers can process tasks independently and in parallel.

3. Key Components of Work Queue Systems:

– Task Producer: The component responsible for generating and submitting tasks to the work queue. Task producers may be part of the application logic or external clients interacting with the system.

– Work Queue: A data structure that stores the tasks awaiting execution. Work queues are typically implemented using messaging systems like RabbitMQ, Gearman, or Apache Kafka.

– Task Consumer (Worker): The component responsible for dequeuing tasks from the work queue and executing them. Workers can be distributed across multiple nodes, allowing for parallel processing of tasks.

4. Tools for Distributed Tasks and Work Queues:

– Celery with RabbitMQ: Celery is a distributed task queue for Python applications, while RabbitMQ is a messaging broker that Celery uses for task queuing. Celery allows developers to define tasks as functions or methods and execute them asynchronously across multiple worker nodes.

– Gearman: Gearman is an open-source distributed task queuing system that provides a simple and flexible solution for distributed computing. It supports multiple programming languages and can be used to distribute tasks across a cluster of worker nodes.

– Apache Spark: Apache Spark is a distributed computing framework that provides high-level APIs for parallel processing of large datasets. Spark’s resilient distributed datasets (RDDs) allow developers to perform distributed transformations and actions on data across a cluster of nodes.

5. Benefits of Distributed Tasks and Work Queues:

– Scalability: Distributed task queues enable applications to scale horizontally by adding more worker nodes to handle increased workload.

– Fault Tolerance: By decoupling task submission and execution, work queue systems can recover from worker failures and ensure that tasks are processed reliably.

– Parallelism: Work queue systems enable parallel processing of tasks, allowing multiple tasks to be executed simultaneously on different nodes, leading to faster execution times.

6. Implementation Considerations:

– When implementing distributed tasks and work queues, consider factors such as task granularity, communication overhead, fault tolerance, and monitoring/logging.

– Choose the appropriate messaging system and distributed computing framework based on your application requirements, scalability needs, and programming language preferences.

In summary, distributed tasks and work queues provide a powerful mechanism for distributing and executing tasks across multiple nodes in a distributed system. By leveraging tools like Celery with RabbitMQ, Gearman, or Apache Spark, developers can build scalable, fault-tolerant, and efficient distributed applications capable of handling complex computational workloads.
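Before involving a message broker, the producer, queue, and worker roles can be sketched inside one Go process using channels and goroutines. This is a minimal illustration; the `Task` and `Result` types and the `runPool` function are hypothetical names, and the channel stands in for the work queue that a broker would provide across machines.

```go
package main

import (
	"fmt"
	"sync"
)

// Task is a hypothetical unit of work: add two numbers.
type Task struct{ ID, A, B int }

// Result carries the outcome of one task.
type Result struct{ TaskID, Sum int }

// runPool starts the given number of workers, feeds them the tasks through
// a channel acting as the work queue, and returns the sums keyed by task ID.
func runPool(tasks []Task, workers int) map[int]int {
	queue := make(chan Task)
	results := make(chan Result)

	// Workers: each one dequeues tasks until the queue is closed.
	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for t := range queue {
				results <- Result{TaskID: t.ID, Sum: t.A + t.B}
			}
		}()
	}

	// Producer: enqueue every task, then signal that no more will arrive.
	go func() {
		for _, t := range tasks {
			queue <- t
		}
		close(queue)
	}()

	// Close the results channel once every worker has finished.
	go func() {
		wg.Wait()
		close(results)
	}()

	out := make(map[int]int, len(tasks))
	for r := range results {
		out[r.TaskID] = r.Sum
	}
	return out
}

func main() {
	tasks := []Task{{ID: 1, A: 4, B: 6}, {ID: 2, A: 10, B: 20}, {ID: 3, A: -1, B: 1}}
	sums := runPool(tasks, 2)
	for _, t := range tasks {
		fmt.Printf("task %d: %d + %d = %d\n", t.ID, t.A, t.B, sums[t.ID])
	}
}
```

Results are keyed by task ID because workers may finish in any order. Each task here is independent and produces the same sum no matter which worker runs it, which is what makes it safe to retry.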

Let’s create a simple example of a distributed task queue system in Go using RabbitMQ as the message broker. We’ll define a task that asks for the sum of two numbers and enqueue it into the queue. Then, we’ll create a worker that consumes tasks from the queue and processes them asynchronously; in this example the work itself is simulated with a short sleep.

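First, ensure you have RabbitMQ installed and running on your system. Then, install the necessary Go package to interact with RabbitMQ. Note that `github.com/streadway/amqp`, used below, is no longer actively maintained; the RabbitMQ team maintains `github.com/rabbitmq/amqp091-go` as its successor with a largely compatible API.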

go get github.com/streadway/amqp

Now, let’s create the Go code for the producer (task enqueuer) and consumer (worker):

1. producer.go:

package main

import (

"log"

"github.com/streadway/amqp"

)

func failOnError(err error, msg string) {

if err != nil {

log.Fatalf("%s: %s", msg, err)

}

}

func main() {

conn, err := amqp.Dial("amqp://guest:guest@localhost:5672/")

failOnError(err, "Failed to connect to RabbitMQ")

defer conn.Close()

ch, err := conn.Channel()

failOnError(err, "Failed to open a channel")

defer ch.Close()

q, err := ch.QueueDeclare(

"task_queue", // queue name

true, // durable

false, // delete when unused

false, // exclusive

false, // no-wait

nil, // arguments

)

failOnError(err, "Failed to declare a queue")

body := "4,6" // Task: Sum of 4 and 6

err = ch.Publish(

"", // exchange

q.Name, // routing key

false, // mandatory

false, // immediate

amqp.Publishing{

DeliveryMode: amqp.Persistent,

ContentType: "text/plain",

Body: []byte(body),

})

failOnError(err, "Failed to publish a message")

log.Printf(" [x] Sent %s", body)

}

2. worker.go:

package main

import (

"log"

"os"

"os/signal"

"syscall"

"time"

"github.com/streadway/amqp"

)

func failOnError(err error, msg string) {

if err != nil {

log.Fatalf("%s: %s", msg, err)

}

}

func main() {

conn, err := amqp.Dial("amqp://guest:guest@localhost:5672/")

failOnError(err, "Failed to connect to RabbitMQ")

defer conn.Close()

ch, err := conn.Channel()

failOnError(err, "Failed to open a channel")

defer ch.Close()

q, err := ch.QueueDeclare(

"task_queue", // queue name

true, // durable

false, // delete when unused

false, // exclusive

false, // no-wait

nil, // arguments

)

failOnError(err, "Failed to declare a queue")

msgs, err := ch.Consume(

q.Name, // queue

"", // consumer

false, // auto-ack

false, // exclusive

false, // no-local

false, // no-wait

nil, // args

)

failOnError(err, "Failed to register a consumer")

// Process deliveries in a background goroutine so main can wait for a shutdown signal

go func() {

for d := range msgs {

log.Printf("Received a message: %s", d.Body)

// Simulate some work

time.Sleep(2 * time.Second)

// Acknowledge the message

d.Ack(false)

}

}()

log.Printf(" [*] Waiting for messages. To exit press CTRL+C")

sig := make(chan os.Signal, 1)

signal.Notify(sig, syscall.SIGINT, syscall.SIGTERM)

<-sig

// Returning from main (instead of calling os.Exit) lets the deferred Close calls run

log.Printf(" [*] Exiting...")

}

In this code:

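- producer.go: This program connects to RabbitMQ, declares a durable queue named "task_queue", and publishes a task to the queue. The task, in this case, is to calculate the sum of two numbers (4 and 6). The message is marked as persistent, which, together with the durable queue, lets it survive a RabbitMQ restart in most cases. Persistence alone is not an absolute guarantee; publisher confirms are needed for stronger delivery assurances.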

- worker.go: This program connects to RabbitMQ, declares the same queue (“task_queue”), and starts consuming messages from it. Upon receiving a message, it simulates some work (2-second sleep) and then acknowledges the message to remove it from the queue.

To run this example:

- Start RabbitMQ if it’s not already running.

- Run the worker by executing `go run worker.go`.

- Run the producer by executing `go run producer.go`.

You should see the worker print the message “Received a message: 4,6” and simulate work for 2 seconds before acknowledging the message.
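The worker above only logs the message body. To actually perform the task, it would need to parse the body and add the two numbers. The following is a minimal sketch of such a handler; `sumTask` is a hypothetical helper, and the `"a,b"` body format is taken from the producer's `"4,6"` message.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// sumTask parses a message body of the form "a,b" and returns a+b.
// It returns an error for malformed input instead of guessing.
func sumTask(body []byte) (int, error) {
	parts := strings.Split(string(body), ",")
	if len(parts) != 2 {
		return 0, fmt.Errorf("expected two comma-separated numbers, got %q", body)
	}
	a, err := strconv.Atoi(strings.TrimSpace(parts[0]))
	if err != nil {
		return 0, fmt.Errorf("first operand: %w", err)
	}
	b, err := strconv.Atoi(strings.TrimSpace(parts[1]))
	if err != nil {
		return 0, fmt.Errorf("second operand: %w", err)
	}
	return a + b, nil
}

func main() {
	sum, err := sumTask([]byte("4,6"))
	if err != nil {
		fmt.Println("bad task:", err)
		return
	}
	fmt.Println("sum:", sum)
}
```

Inside the worker's loop, one option is to call `sumTask(d.Body)` in place of the simulated sleep, acknowledge the delivery on success, and reject malformed messages without requeueing them so that a bad task is not retried forever.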

In conclusion, building distributed systems with Go offers numerous benefits, including simplicity, efficiency, and built-in support for concurrency. By mastering the fundamentals of Go programming and understanding the principles of distributed systems, developers can create robust and scalable distributed systems to meet the demands of modern applications. With careful planning and design, Go developers can tackle the challenges of building distributed systems and create reliable and efficient solutions. Happy coding! ❤️