XML Data Handling in Hadoop Ecosystem

XML (Extensible Markup Language) is widely used for data interchange and representation, but managing XML data at scale presents unique challenges. The Hadoop ecosystem, with its powerful storage and processing capabilities, provides a solid framework for handling XML in big data environments. This chapter explores strategies for efficiently storing, processing, and analyzing XML data within the Hadoop ecosystem.

Understanding XML Data and Its Role in Big Data

What is XML?

XML is a flexible, structured format that represents data as a tree of nested, tagged elements. It is both machine- and human-readable and is often used to exchange data between applications.
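The short sketch below shows that tree structure in practice. It parses an invented order record with Python's standard-library xml.etree.ElementTree and reads an attribute, a child element's text, and a list of nested elements. The element and attribute names (order, customer, items, item, sku) are hypothetical.

```python
import xml.etree.ElementTree as ET

# A small, invented XML document: elements nest inside each other to form a tree.
DOC = """<order id="1001">
  <customer>Alice</customer>
  <items>
    <item sku="A1" qty="2"/>
    <item sku="B7" qty="1"/>
  </items>
</order>"""

root = ET.fromstring(DOC)

# Attributes and child text are read directly from the parsed tree.
order_id = root.get("id")
customer = root.findtext("customer")

# A relative path visits every <item> nested under <items>.
skus = [item.get("sku") for item in root.findall("items/item")]

print(order_id, customer, skus)
```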

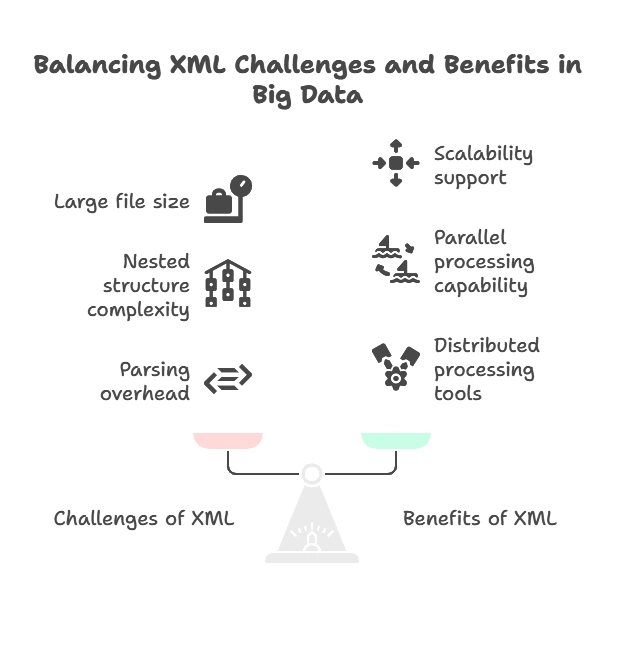

Challenges of XML in Big Data

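- Size and Complexity: XML files are verbose, because every value is wrapped in opening and closing tags, and they can become very large, which increases storage needs and slows processing.

- Nested Structure: XML data is hierarchical, so it does not map directly onto the row-and-column layout that most query engines expect.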

- Parsing Overheads: Extracting data from XML requires parsing, which can be computationally intensive.
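To see why nesting is awkward for tabular tools, the sketch below flattens a hypothetical order document into one row per item using Python's standard library. The parent order's fields have to be repeated on every child row, which is the kind of reshaping a Hadoop tool must perform, or be told how to perform, before XML fits a table. The document layout and field names are assumptions made for this example.

```python
import xml.etree.ElementTree as ET

# Hypothetical nested document: each order holds one or more items.
DOC = """<orders>
  <order id="1">
    <customer>Alice</customer>
    <item sku="A1" qty="2"/>
    <item sku="B7" qty="1"/>
  </order>
  <order id="2">
    <customer>Bob</customer>
    <item sku="C3" qty="5"/>
  </order>
</orders>"""


def flatten_orders(xml_text):
    """Return one flat row per <item>, repeating the parent order's fields."""
    rows = []
    for order in ET.fromstring(xml_text).iter("order"):
        order_id = order.get("id")
        customer = order.findtext("customer")
        for item in order.findall("item"):
            rows.append((order_id, customer, item.get("sku"), int(item.get("qty"))))
    return rows


for row in flatten_orders(DOC):
    print(row)
```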

Benefits of Using XML with Hadoop

- Scalability: HDFS, Hadoop's distributed file system, splits large XML files into blocks stored across many nodes, so storage capacity grows by adding machines.

- Parallel Processing: Hadoop’s ecosystem tools enable distributed XML data processing.

Loading XML Data into Hadoop

Storing XML in HDFS

XML data files can be stored directly in the Hadoop Distributed File System (HDFS). For example, with the HDFS shell:

hdfs dfs -mkdir -p /user/hadoop/xmlfiles

hdfs dfs -put localpath/xmlfile.xml /user/hadoop/xmlfiles/

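Using XML Input Formats and Loaders

Hive and Pig do not read XML natively; they rely on add-ons such as third-party Hive SerDes and Pig's Piggybank XMLLoader. Core Apache Hadoop also ships no general-purpose XML input format for MapReduce: the widely copied XmlInputFormat originated in Apache Mahout, and Hadoop Streaming provides StreamXmlRecordReader. These readers scan the input for a configured start tag and end tag, so a large file can be split across multiple nodes and its records parsed in parallel.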
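The tag-scanning idea behind these readers can be sketched in a few lines of Python. This is a simplified, in-memory model, not the Mahout or Hadoop code: each split emits the records whose start tag begins inside it and reads past the split boundary to finish its last record, so every record is emitted exactly once. The tag names are placeholders. Note that a bare prefix such as "<record" would also match an element named "<records>", which is why real configurations choose their start tag carefully.

```python
def read_records(data, start, end, start_tag=b"<record", end_tag=b"</record>"):
    """Yield complete records whose start tag begins inside the split [start, end).

    A record that starts in this split but ends after `end` is still read to
    completion, so adjacent splits never emit the same record twice.
    """
    pos = start
    while True:
        s = data.find(start_tag, pos)
        if s == -1 or s >= end:
            return  # no more records begin inside this split
        e = data.find(end_tag, s)
        if e == -1:
            return  # truncated record at the end of the input
        e += len(end_tag)
        yield data[s:e]
        pos = e


# Three records inside a wrapper element whose name does not clash with the start tag.
DATA = b'<dump><record id="1">a</record><record id="2">b</record><record id="3">c</record></dump>'

mid = len(DATA) // 2  # pretend the file is stored as two splits
for split_start, split_end in [(0, mid), (mid, len(DATA))]:
    for rec in read_records(DATA, split_start, split_end):
        print(split_start, rec.decode())
```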

Example: Loading XML with Hive

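Hive has no built-in XML SerDe, so XML tables are usually defined with a third-party SerDe such as hivexmlserde (package com.ibm.spss.hive.serde2.xml), which maps XPath expressions to columns:

CREATE TABLE xml_table (

data STRING

)

ROW FORMAT SERDE 'com.ibm.spss.hive.serde2.xml.XmlSerDe'

WITH SERDEPROPERTIES (

"column.xpath.data"="/root/data/text()"

)

STORED AS INPUTFORMAT 'com.ibm.spss.hive.serde2.xml.XmlInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.IgnoreKeyTextOutputFormat'

TBLPROPERTIES (

"xmlinput.start"="<root",

"xmlinput.end"="</root>"

);

This defines a table in which each <root> element in the input becomes one row, with the text of its <data> child exposed as the data column. The xmlinput.start and xmlinput.end properties tell the input format which tags delimit a record. The SerDe JAR must be available to Hive, for example added to the session with ADD JAR, before the table is created.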
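Conceptually, the SerDe applies each column's XPath expression to one record at a time. The Python sketch below imitates that mapping with the standard library. It illustrates the idea only and is not the SerDe's code; the extra label column and its path are hypothetical, and ElementTree supports only a subset of XPath with paths relative to the record element.

```python
import xml.etree.ElementTree as ET

# Hypothetical column -> path mapping, playing the role of the
# "column.xpath.<name>" SerDe properties. Paths are relative to the record.
COLUMN_PATHS = {"data": "data", "label": "meta/label"}


def record_to_row(record_xml, column_paths):
    """Map one <root>...</root> record to a dict of column values.

    findtext returns None when an element is absent, standing in for NULL.
    """
    record = ET.fromstring(record_xml)
    return {column: record.findtext(path) for column, path in column_paths.items()}


print(record_to_row("<root><data>42</data><meta><label>x</label></meta></root>", COLUMN_PATHS))
print(record_to_row("<root><data>7</data></root>", COLUMN_PATHS))
```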

Processing XML Data with Apache Pig

Using Pig’s XML Loader

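Pig's contributed function library, Piggybank, provides XMLLoader, which splits the input into records by element name and returns each record as a single chararray. Individual fields can then be extracted with Piggybank's XPath function:

REGISTER piggybank.jar;

DEFINE XPath org.apache.pig.piggybank.evaluation.xml.XPath();

-- XMLLoader('data') emits each <data>...</data> element as one chararray

raw = LOAD 'hdfs:///user/hadoop/xmlfiles/data.xml' USING org.apache.pig.piggybank.storage.XMLLoader('data') AS (doc:chararray);

-- Extract individual fields from each record with XPath

data = FOREACH raw GENERATE XPath(doc, 'data/field1') AS field1, XPath(doc, 'data/field2') AS field2;

DUMP data;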

Example Use Cases

- Data Extraction: Extract specific tags for analysis.

- Transformation: Convert XML data into a flattened format for compatibility with other Hadoop tools.

Converting XML Data for Analysis in Hadoop

Transforming XML to JSON

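JSON is usually more compact than XML, and Hive (through a JSON SerDe), Spark, and Drill all read it directly. Writing one JSON object per line (JSON Lines) also lets Hadoop split files on ordinary line boundaries:

- Use a tool such as xml2json, or a custom Python script, to convert XML to JSON.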
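A custom conversion script can be quite short. The sketch below uses only Python's standard library and writes one JSON object per record element (JSON Lines). The mapping conventions are choices made for this example, not a standard: attributes become "@name" keys, repeated child tags become lists, and text that sits next to attributes or children goes under "#text". Mixed content and text after child elements (tails) are ignored.

```python
import json
import xml.etree.ElementTree as ET


def element_to_dict(elem):
    """Convert an element to JSON-friendly data (conventions are this sketch's own)."""
    node = {f"@{name}": value for name, value in elem.attrib.items()}
    for child in elem:
        value = element_to_dict(child)
        if child.tag in node:
            # A repeated child tag is collected into a list.
            if not isinstance(node[child.tag], list):
                node[child.tag] = [node[child.tag]]
            node[child.tag].append(value)
        else:
            node[child.tag] = value
    text = (elem.text or "").strip()
    if text:
        if not node:
            return text  # leaf element holding only text
        node["#text"] = text
    return node


def xml_to_json_lines(xml_text, record_tag):
    """Return one JSON object per <record_tag> element, one per line."""
    root = ET.fromstring(xml_text)
    return "\n".join(json.dumps(element_to_dict(rec)) for rec in root.iter(record_tag))


DOC = ('<root><data id="1"><field1>a</field1><field2>b</field2></data>'
       '<data id="2"><field1>c</field1><field2>d</field2><field2>e</field2></data></root>')
print(xml_to_json_lines(DOC, "data"))
```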

Transforming XML to Parquet

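Parquet, a columnar storage format, is efficient for analytics, and engines such as Spark or Apache Drill can write it. Spark reads XML through the spark-xml data source, which must be added to the job (for example with spark-submit --packages com.databricks:spark-xml_2.12:<version>); Spark 4.0 and later also include a built-in "xml" format:

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName("XMLToParquet").getOrCreate()

# rowTag="data": each <data> element becomes one DataFrame row; the schema is inferred from the data

df = spark.read.format("com.databricks.spark.xml").options(rowTag="data").load("hdfs:///user/hadoop/xmlfiles/data.xml")

df.write.parquet("hdfs:///user/hadoop/xmlfiles/data.parquet")

This script reads the XML file with Spark, turns each <data> element into a row of a DataFrame, and saves the result in Parquet format.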

Querying XML Data in the Hadoop Ecosystem

Querying XML with Hive

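Hive's built-in XPath functions (xpath, xpath_string, xpath_int, and related UDFs) evaluate an XPath expression against a STRING column that holds raw XML. Assuming a table, here called raw_xml as a placeholder, that stores one complete XML document per row in a column named xml_doc:

SELECT xpath_string(xml_doc, '/root/data/field') AS field FROM raw_xml;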

Querying XML with Apache Drill

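Apache Drill queries nested data without requiring a predefined schema. Recent Drill releases include an XML format plugin; once it is enabled for .xml files in the dfs storage plugin configuration, XML files can be queried directly: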

SELECT field1 FROM dfs.`/user/hadoop/xmlfiles/data.xml`;

Best Practices and Optimization Techniques

Optimizing Storage

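- Compression: Compress XML files to reduce storage size, but choose the codec with care. Gzip files cannot be split, so a single mapper must read an entire gzipped file; bzip2 is splittable, and lightweight codecs such as Snappy work well inside Parquet or ORC files.

- Splitting: Break very large XML files into smaller chunks on record boundaries, so each chunk stays well-formed and can be processed in parallel, while avoiding so many tiny files that NameNode overhead grows.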
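Splitting on record boundaries can be done in a single streaming pass. The sketch below uses Python's xml.etree.ElementTree.iterparse to read records one at a time and emit well-formed chunks of at most N records each. It assumes records of the given tag are not nested inside one another; the wrapper element name "chunk" is a placeholder. Each chunk could then be compressed or uploaded to HDFS separately.

```python
import io
import xml.etree.ElementTree as ET


def split_xml(source, record_tag, records_per_chunk, wrapper="chunk"):
    """Stream `source` and yield well-formed XML strings holding at most
    `records_per_chunk` <record_tag> elements each, wrapped in <wrapper>."""
    batch = []
    for _event, elem in ET.iterparse(source, events=("end",)):
        if elem.tag != record_tag:
            continue
        elem.tail = None  # drop any whitespace that follows the record
        batch.append(ET.tostring(elem, encoding="unicode"))
        elem.clear()  # release the record's contents once serialized
        if len(batch) == records_per_chunk:
            yield f"<{wrapper}>{''.join(batch)}</{wrapper}>"
            batch = []
    if batch:
        yield f"<{wrapper}>{''.join(batch)}</{wrapper}>"


# Usage: five small records split into chunks of two.
sample = b"<root>" + b"".join(b'<data id="%d"><v>%d</v></data>' % (i, i) for i in range(5)) + b"</root>"
for chunk in split_xml(io.BytesIO(sample), "data", 2):
    print(chunk)
```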

Optimizing Query Performance

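- Limit Data with XPath: Use targeted XPath expressions to extract only the elements a query needs. The parser still reads each record, but far less data is materialized and passed to later processing stages.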

- Use Columnar Formats: Store transformed data in Parquet or ORC formats for faster querying.

XML Data Governance

- Schema Validation: Validate XML files against their XML Schema (XSD) before loading them into Hadoop, so malformed or non-conforming records are caught early.

- Data Quality Checks: Implement rules to verify XML content accuracy and integrity.
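Python's standard library can check that XML is well-formed but cannot validate it against an XSD; full schema validation typically uses a library such as lxml (its etree.XMLSchema class). As a hedged sketch of the data-quality side, the code below checks well-formedness and then applies simple per-record rules. The record tag data and the rules themselves (required id and amount fields, numeric amount) are hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical rules: each <data> record needs these non-empty child elements.
REQUIRED_FIELDS = ("id", "amount")


def check_record(record):
    """Return a list of rule violations for one record element."""
    problems = []
    for field in REQUIRED_FIELDS:
        if not (record.findtext(field) or "").strip():
            problems.append(f"missing {field}")
    amount = (record.findtext("amount") or "").strip()
    if amount:
        try:
            float(amount)
        except ValueError:
            problems.append(f"amount not numeric: {amount!r}")
    return problems


def check_document(xml_text, record_tag="data"):
    """Check well-formedness, then per-record rules; return (index, problems) pairs."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as exc:
        return [(None, [f"not well-formed: {exc}"])]
    report = []
    for i, record in enumerate(root.iter(record_tag)):
        problems = check_record(record)
        if problems:
            report.append((i, problems))
    return report


print(check_document("<root><data><id>1</id><amount>abc</amount></data><data><amount>3</amount></data></root>"))
```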

Handling XML data in the Hadoop ecosystem requires an understanding of XML’s inherent structure and challenges. By leveraging tools like Hive, Pig, Spark, and Drill, you can efficiently store, transform, and query XML data. Converting XML to formats that Hadoop tools handle more easily, such as JSON or Parquet, often improves performance and simplifies analysis, making XML data management scalable in big data environments. Happy coding! ❤️