Rate Limiting and Throttling

Introduction to Rate Limiting and Throttling

In modern web applications, controlling the flow of requests is critical to both the security and the performance of your server. Two common techniques for this are rate limiting and throttling. Both help prevent abuse and overload of APIs and web services by controlling how many requests a client can make within a given period, or how quickly those requests are served. In this chapter, we'll cover the concepts behind rate limiting and throttling, why they matter, and how to implement them effectively in Node.js.

What is Rate Limiting?

Definition:

Rate limiting is a technique used to control the number of requests a client can make to a server in a specific time frame. This is important for:

- Preventing Denial of Service (DoS) attacks.

- Avoiding overloading the server with too many requests.

- Ensuring fair usage of resources among multiple users.

Common Use Cases for Rate Limiting:

- Preventing overuse of an API by a single client.

- Protecting authentication endpoints from brute-force attacks.

- Ensuring that server resources are fairly distributed.

Basic Concept of Rate Limiting:

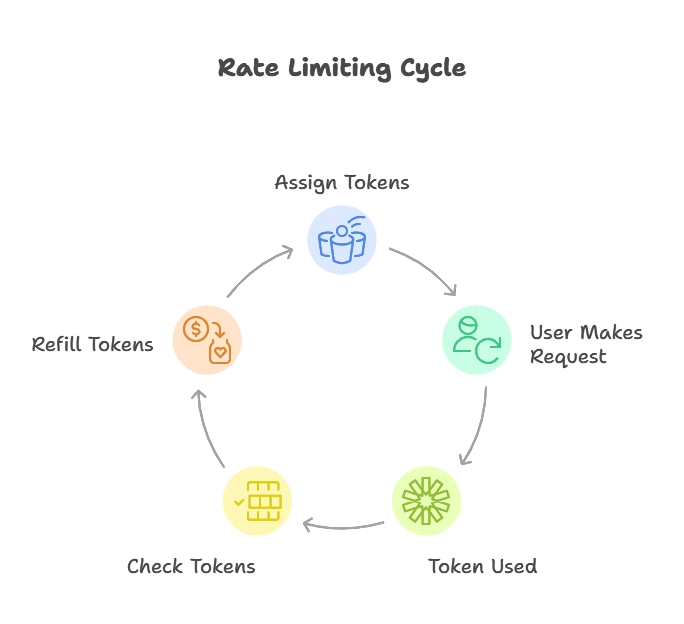

Rate limiting is commonly implemented with a fixed-window counter, a token bucket, or a leaky bucket algorithm. In the token bucket model, the server assigns each client a “bucket” of tokens, where each token allows one request. When the client makes a request, a token is “used up.” If no tokens are left, the server rejects the request until the bucket is refilled, either gradually at a steady rate or all at once after a set period.
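
The token bucket idea can be sketched in a few lines of plain JavaScript. This is a minimal illustration, not what any particular library does internally. The class name TokenBucket, the helper allowRequest, and the choice of 5 tokens refilled at 1 token per second are all assumptions made for the example. The bucket here refills gradually rather than all at once.

```javascript
// Minimal token bucket: holds at most `capacity` tokens and refills
// continuously at `refillPerSecond`. Names and numbers are illustrative.
class TokenBucket {
  constructor(capacity, refillPerSecond, now = Date.now()) {
    this.capacity = capacity;
    this.refillPerSecond = refillPerSecond;
    this.tokens = capacity; // start full so a new client can burst
    this.lastRefill = now;
  }

  // Add the tokens earned since the last call, capped at capacity.
  refill(now) {
    const elapsedSeconds = (now - this.lastRefill) / 1000;
    this.tokens = Math.min(
      this.capacity,
      this.tokens + elapsedSeconds * this.refillPerSecond
    );
    this.lastRefill = now;
  }

  // Returns true and spends one token if the request is allowed.
  tryRemoveToken(now = Date.now()) {
    this.refill(now);
    if (this.tokens >= 1) {
      this.tokens -= 1;
      return true;
    }
    return false;
  }
}

// One bucket per client key (for example, an IP address).
const buckets = new Map();

function allowRequest(key, now = Date.now()) {
  if (!buckets.has(key)) {
    buckets.set(key, new TokenBucket(5, 1, now));
  }
  return buckets.get(key).tryRemoveToken(now);
}
```

A larger capacity permits bigger bursts, while the refill rate sets the request rate a client can sustain over time.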

Implementing Rate Limiting in Node.js

In Node.js, rate limiting is most commonly implemented using middleware, particularly with Express.js, one of the most popular web frameworks. We will use the express-rate-limit package to achieve this.

Example: Basic Rate Limiting with express-rate-limit

    const express = require('express');
    const rateLimit = require('express-rate-limit');

    const app = express();

    // Define the rate limiter
    const limiter = rateLimit({
      windowMs: 15 * 60 * 1000, // 15 minutes
      max: 100, // Limit each IP to 100 requests per windowMs
      message: 'Too many requests from this IP, please try again after 15 minutes',
    });

    // Apply the rate limiter to all requests
    app.use(limiter);

    app.get('/', (req, res) => {
      res.send('Hello, World!');
    });

    app.listen(3000, () => {
      console.log('Server is running on port 3000');
    });

Explanation:

- The windowMs option defines the time window, in this case 15 minutes.

- The max option sets the maximum number of requests each client can make in that window.

- When the limit is exceeded, the client receives an HTTP 429 (Too Many Requests) response containing the custom message.
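
To make the roles of the window and the maximum concrete, here is a rough sketch of a fixed-window counter in plain JavaScript. It is a conceptual model only and should not be read as how express-rate-limit works internally. The function name createFixedWindowLimiter and the shape of the returned object are assumptions made for illustration.

```javascript
// Fixed-window counter keyed by client. A conceptual sketch only;
// it is not the internal implementation of express-rate-limit.
function createFixedWindowLimiter({ windowMs, max }) {
  const hits = new Map(); // key -> { count, resetTime }

  return function check(key, now = Date.now()) {
    let entry = hits.get(key);
    // Start a fresh window on the first request or once the old one expires.
    if (!entry || now >= entry.resetTime) {
      entry = { count: 0, resetTime: now + windowMs };
      hits.set(key, entry);
    }
    entry.count += 1;
    const allowed = entry.count <= max;
    return {
      allowed,
      remaining: Math.max(0, max - entry.count),
      retryAfterMs: allowed ? 0 : entry.resetTime - now,
    };
  };
}

// Same numbers as the example above: 100 requests per 15 minutes.
const check = createFixedWindowLimiter({ windowMs: 15 * 60 * 1000, max: 100 });
```

In a middleware, a result with allowed set to false would typically become a 429 response, and retryAfterMs could be used to tell the client when to try again.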

What is Throttling?

Definition:

Throttling is a technique used to control the rate of execution of requests by delaying them. Unlike rate limiting, which outright blocks requests, throttling slows down the flow of requests if they exceed a certain rate, ensuring that the server is not overwhelmed.

Key Differences Between Rate Limiting and Throttling:

- Rate Limiting: Restricts the number of requests that can be made in a given time period. If exceeded, requests are blocked.

- Throttling: Slows down requests if they exceed the allowed rate, but they are not completely blocked.

Use Cases for Throttling:

- APIs where you don’t want to block users but want to ensure they are sending requests at a manageable pace.

- Streaming services where throttling can help manage the flow of data over a period.

Implementing Throttling in Node.js

While there is no built-in middleware in Express.js for throttling, you can achieve this with custom logic or by using packages like bottleneck.

Example: Implementing Throttling with bottleneck

    const express = require('express');
    const Bottleneck = require('bottleneck');

    const app = express();

    // Create a new limiter with custom options.
    // Note: this single limiter is shared by all clients.
    const limiter = new Bottleneck({
      maxConcurrent: 1, // Only one request at a time
      minTime: 500, // 500 ms between requests
    });

    // Apply the throttling function to a route
    app.get('/', (req, res, next) => {
      // schedule() queues the job and returns a promise;
      // forward any failure to Express's error handler.
      limiter
        .schedule(async () => {
          res.send('Throttled response');
        })
        .catch(next);
    });

    app.listen(3000, () => {
      console.log('Server running on port 3000');
    });

Explanation:

- We use the bottleneck package to throttle requests.

- The maxConcurrent option limits the number of jobs that run at the same time.

- The minTime option ensures that at least 500 milliseconds pass between the start of one job and the start of the next.

- The limiter is created once, so all clients share the same queue.
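
The spacing behavior of minTime can be approximated without any library. The sketch below is an assumption-laden illustration and not Bottleneck's implementation: createSpacer and throttle are hypothetical helper names, and the model only covers the case of one job at a time.

```javascript
// Sketch of minTime-style spacing: a job may start no earlier than
// `minTime` ms after the previous job started. Not Bottleneck's internals.
function createSpacer(minTime) {
  let nextFreeAt = 0; // earliest timestamp at which the next job may start

  // Returns how long (in ms) a job arriving at `now` has to wait.
  return function reserve(now = Date.now()) {
    const startAt = Math.max(now, nextFreeAt);
    nextFreeAt = startAt + minTime;
    return startAt - now;
  };
}

// Wrap any function so that calls are delayed rather than rejected.
function throttle(fn, minTime) {
  const reserve = createSpacer(minTime);
  return (...args) =>
    new Promise((resolve, reject) => {
      setTimeout(() => {
        try {
          resolve(fn(...args));
        } catch (err) {
          reject(err);
        }
      }, reserve());
    });
}
```

Every call is eventually served, which is the key contrast with rate limiting: excess requests wait longer instead of receiving an error.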

Advanced Rate Limiting Techniques

IP-based Rate Limiting:

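This is one of the most common forms of rate limiting: each client's IP address is tracked to enforce limits. If your application runs behind a reverse proxy or load balancer, configure Express's trust proxy setting so that req.ip reflects the client's address rather than the proxy's.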

    const limiter = rateLimit({
      windowMs: 10 * 60 * 1000, // 10 minutes
      max: 50, // limit each IP to 50 requests per window
      keyGenerator: (req) => req.ip, // Use the user's IP as the key
    });

User-based Rate Limiting:

Instead of limiting based on IP, you can limit based on user accounts. This is useful in scenarios like API authentication where rate limits are imposed per authenticated user.

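    const limiter = rateLimit({
      windowMs: 15 * 60 * 1000,
      // Count requests per user rather than per IP. Assumes authentication
      // middleware has set req.user with an id field (hypothetical shape);
      // unauthenticated requests fall back to the IP address.
      keyGenerator: (req) => (req.user ? String(req.user.id) : req.ip),
      max: (req) => {
        const user = req.user; // Assuming you have user authentication
        return user && user.isPremium ? 500 : 100; // Higher limits for premium users
      },
    });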

Dynamic Rate Limits:

Rate limits can be dynamic based on different factors like API usage, user role, or time of day.
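
One way to express such a policy is a small function that resolves the limit for each request. The sketch below is hypothetical throughout: the role names, the numbers in BASE_LIMITS, and the 09:00 to 18:00 peak period are assumptions chosen only to show the shape of the idea.

```javascript
// Hypothetical policy table: requests allowed per window for each role.
const BASE_LIMITS = { anonymous: 50, member: 100, premium: 500 };

// Resolve the limit for one request. `role` and `hour` (0-23) are inputs
// the caller would derive from its own auth data and clock.
function resolveLimit({ role = 'anonymous', hour = new Date().getHours() } = {}) {
  // Unknown roles are treated like anonymous clients.
  const base = BASE_LIMITS[role] ?? BASE_LIMITS.anonymous;
  // Assumed peak period from 09:00 up to (not including) 18:00:
  // halve the allowance while it lasts.
  const isPeak = hour >= 9 && hour < 18;
  return isPeak ? Math.floor(base / 2) : base;
}
```

Because the max option accepts a function of the request, a resolver like this could be called from it, with the role read from whatever your authentication layer attaches to the request.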

Handling Burst Traffic with Rate Limiting

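Some applications experience “burst traffic,” short periods of intense request load. A token bucket or leaky bucket algorithm can absorb temporary bursts while still enforcing an overall rate limit. With express-rate-limit, which counts requests per window, a simpler approximation is a short window with a small limit. The example below also sets skipFailedRequests so that failed requests (responses with a status of 400 or higher) do not count against the limit.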

    const limiter = rateLimit({
      windowMs: 1 * 60 * 1000, // 1 minute window
      max: 10, // Start blocking after 10 requests
      skipFailedRequests: true, // Ignore failed requests in the limit
    });
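
For comparison, a leaky bucket that tolerates bursts can be sketched as follows. This is a standalone illustration in plain JavaScript, separate from express-rate-limit, and the class name LeakyBucket and its parameters are assumptions. The bucket is used as a meter: each request adds one unit, and the contents drain at a fixed rate.

```javascript
// Leaky bucket used as a meter: each request adds one unit of "water",
// and the bucket drains at `leakPerSecond`. Parameter names are illustrative.
class LeakyBucket {
  constructor(capacity, leakPerSecond, now = Date.now()) {
    this.capacity = capacity;
    this.leakPerSecond = leakPerSecond;
    this.level = 0; // current fill level
    this.lastLeak = now;
  }

  // Returns true if the request fits in the bucket, false if it would overflow.
  tryAdd(now = Date.now()) {
    // Drain whatever has leaked out since the last call.
    const leaked = ((now - this.lastLeak) / 1000) * this.leakPerSecond;
    this.level = Math.max(0, this.level - leaked);
    this.lastLeak = now;

    if (this.level + 1 > this.capacity) {
      return false;
    }
    this.level += 1;
    return true;
  }
}
```

The capacity bounds how large a burst is accepted, and the leak rate bounds the average rate once the bucket is full.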

Combining Rate Limiting and Throttling

In some cases, you may want to combine rate limiting and throttling. For instance, you might cap the total number of requests a client can make per window and also pace the requests that are allowed through, as in the following example.

    const express = require('express');
    const rateLimit = require('express-rate-limit');
    const Bottleneck = require('bottleneck');

    const app = express();

    // Create a rate limiter
    const rateLimiter = rateLimit({
      windowMs: 10 * 60 * 1000, // 10 minutes
      max: 50, // Limit to 50 requests per window
    });

    // Create a throttler
    const throttler = new Bottleneck({
      maxConcurrent: 1, // Only one request at a time
      minTime: 500, // 500 ms between requests
    });

    // Apply both: requests over the limit are rejected first,
    // and the remaining ones are paced by the throttler.
    app.use(rateLimiter);

    app.get('/', (req, res, next) => {
      throttler
        .schedule(async () => {
          res.send('Rate limited and throttled response');
        })
        .catch(next); // Forward scheduling errors to Express
    });

    app.listen(3000, () => {
      console.log('Server is running on port 3000');
    });
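
The order of the two checks can also be written as a single decision function, which may help when reasoning about the combined behavior. This is a simplified, library-free sketch under stated assumptions: createGate and the returned action objects are hypothetical, the limit is counted per client key, and the pacing is shared across all clients.

```javascript
// Combined policy sketch: reject once a client exceeds `max` requests per
// window; otherwise tell the caller how long to delay the request.
function createGate({ windowMs, max, minTime }) {
  const windows = new Map(); // key -> { count, resetTime }
  let nextFreeAt = 0; // pacing is shared across all clients

  return function decide(key, now = Date.now()) {
    // Step 1: per-client fixed-window rate limit.
    let win = windows.get(key);
    if (!win || now >= win.resetTime) {
      win = { count: 0, resetTime: now + windowMs };
      windows.set(key, win);
    }
    win.count += 1;
    if (win.count > max) {
      return { action: 'reject', retryAfterMs: win.resetTime - now };
    }

    // Step 2: throttle the requests that passed the limit.
    const startAt = Math.max(now, nextFreeAt);
    nextFreeAt = startAt + minTime;
    return { action: 'delay', waitMs: startAt - now };
  };
}
```

Rejected requests never reserve a slot in the pacing queue, so a client that is over its limit does not slow down everyone else.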

Rate limiting and throttling are essential techniques for managing traffic to your Node.js applications. They help protect your server from abuse, overload, and denial-of-service attacks. By using middleware like express-rate-limit for rate limiting and a package like bottleneck for throttling, you can implement these features quickly and efficiently.

In this chapter, we covered the basics and advanced usage of rate limiting and throttling, explored common use cases, and demonstrated how to implement them in Node.js using real code examples. Whether you're building APIs, web services, or user-facing applications, rate limiting and throttling help ensure that your application remains secure, performant, and scalable.