Natural Language Processing (NLP) Integration with Node.js

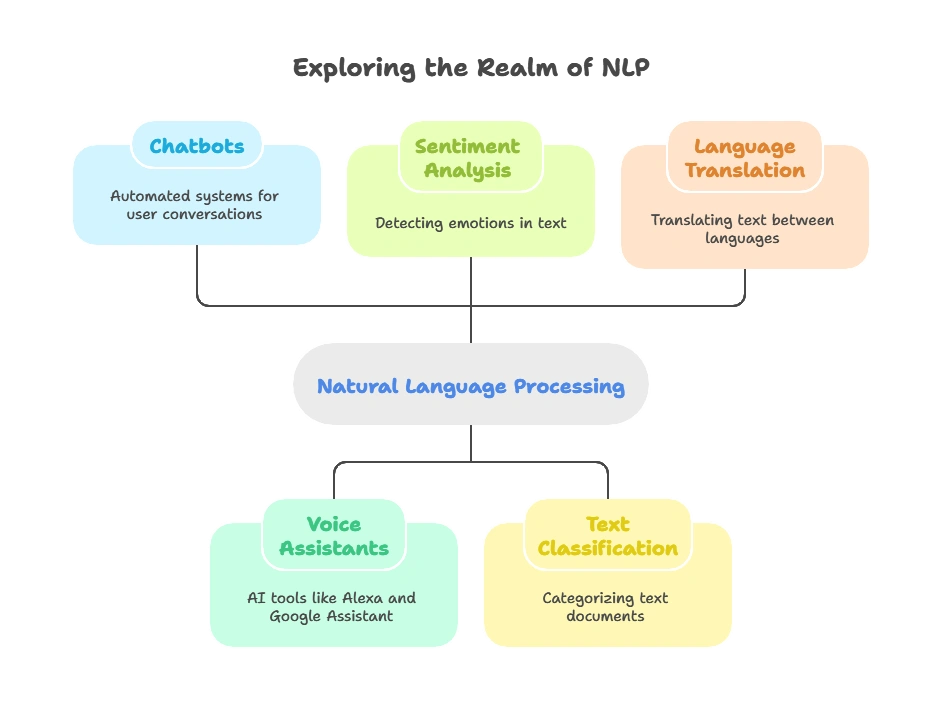

Natural Language Processing (NLP) enables computers to understand, interpret, and generate human language. It plays a critical role in applications such as chatbots, voice assistants, sentiment analysis, translation services, and text classification. This chapter will explore how to integrate NLP into your Node.js applications, covering everything from basic concepts to advanced use cases, with detailed code examples to guide you along the way.

What is NLP?

Natural Language Processing (NLP) is a branch of artificial intelligence that deals with the interaction between computers and human (natural) languages. NLP involves various processes such as understanding text, recognizing sentiment, extracting meaningful information, and responding to queries in a human-like manner.

Common Use Cases of NLP:

- Chatbots: Automated systems that can hold conversations with users.

- Voice Assistants: Systems such as Amazon Alexa and Google Assistant that respond to spoken requests.

- Sentiment Analysis: Detecting positive, negative, or neutral emotions in text.

- Text Classification: Categorizing emails, documents, or social media posts.

- Language Translation: Translating text between languages (e.g., Google Translate).

Why Integrate NLP with Node.js?

Node.js is known for handling asynchronous tasks efficiently, which makes it well suited to real-time communication and APIs. Integrating NLP with Node.js opens the door to highly interactive, intelligent applications. Whether you are building chatbots, customer support systems, or recommendation engines, Node.js integrates smoothly with NLP libraries and cloud services.

Advantages of Using Node.js for NLP:

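- Scalability: The event-driven, non-blocking model lets a single Node.js process serve many concurrent requests, such as chat sessions or API calls, although CPU-heavy NLP work can still block the event loop.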

- Numerous Libraries: A vast array of packages like natural and compromise, and APIs such as Google’s NLP API, make integration straightforward.

- Real-Time Applications: Non-blocking I/O allows real-time processing, which is crucial for interactive conversations and immediate text analysis.

Setting Up the Node.js Environment for NLP

Before diving into NLP, you need to set up your Node.js development environment.

Steps:

- Install Node.js: Ensure that you have Node.js installed by downloading it from the official Node.js site.

- Initialize Project:

```bash
mkdir nlp-integration
cd nlp-integration
npm init -y
```

- Install Required Libraries:

  - natural: A popular NLP library for text analysis in Node.js.
  - compromise: A lightweight library for natural language processing.
  - axios: For making HTTP requests to external NLP services.

```bash
npm install natural compromise axios
```

Basic NLP with Node.js Using the natural Library

natural Overview:

natural is one of the most commonly used NLP libraries in Node.js. It supports tasks like tokenization, stemming, classification, and more.

Example: Tokenization

Tokenization refers to splitting text into individual words (tokens). This is a key step in many NLP tasks.

```javascript
const natural = require('natural');

// WordTokenizer splits on non-word characters, so punctuation such as "!" is dropped.
const tokenizer = new natural.WordTokenizer();
const text = "Natural Language Processing is fascinating!";
const tokens = tokenizer.tokenize(text);

console.log(tokens);
```

Output:

```
[ 'Natural', 'Language', 'Processing', 'is', 'fascinating' ]
```

Code Explanation:

- Tokenizer: The `WordTokenizer` splits the text into an array of words (tokens) and discards punctuation such as the trailing "!".

- Text Analysis: This prepares the text for further analysis, such as classification or sentiment analysis.
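To illustrate what "preparing text for further analysis" can look like, here is a minimal, dependency-free sketch that lowercases text, extracts word tokens with a regular expression, and counts how often each term appears (a bag-of-words representation). The regex `/[a-z0-9]+/g` and the helper names `tokenize` and `termFrequencies` are illustrative assumptions, not part of natural, and the regex only handles unaccented Latin letters and digits.

```javascript
// Minimal bag-of-words sketch: tokenize, normalize case, count terms.
// The regex below is an illustrative assumption, not natural's WordTokenizer.
function tokenize(text) {
  // Lowercase first so "Natural" and "natural" count as the same term.
  return text.toLowerCase().match(/[a-z0-9]+/g) || [];
}

// Count occurrences of each token in a Map (term -> frequency).
function termFrequencies(tokens) {
  const counts = new Map();
  for (const token of tokens) {
    counts.set(token, (counts.get(token) || 0) + 1);
  }
  return counts;
}

const tokens = tokenize('Natural language processing makes natural text analysis possible.');
const tf = termFrequencies(tokens);
console.log(tokens.length);     // 8
console.log(tf.get('natural')); // 2
```

Counts like these are the raw input for many classic techniques, such as keyword extraction or the classifiers discussed later in this chapter.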

Example: Stemming

Stemming is the process of reducing words to their base or root form (e.g., “running” becomes “run”). The resulting stem is not always a dictionary word, as the “fascinating” example shows.

```javascript
const natural = require('natural');

const stem = natural.PorterStemmer.stem;

console.log(stem("running"));     // Output: "run"
console.log(stem("fascinating")); // Output: "fascin"
```

Code Explanation:

- Porter Stemmer: The `PorterStemmer` implements a popular algorithm for stemming English words.

- Word Reduction: It reduces inflected words to a common stem, which is useful for normalization in text analysis (for example, “connected” and “connecting” both become “connect”).
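To show why stemming helps with normalization, the following sketch groups word variants by their stem. It deliberately uses a naive suffix-stripping function (`toyStem`, a hypothetical helper) instead of the Porter algorithm, so it will over- or under-stem many words; in real code you would call `natural.PorterStemmer.stem` instead.

```javascript
// Toy suffix-stripping stemmer: far cruder than the Porter algorithm.
// The suffix list and the 3-character minimum stem are illustrative assumptions.
const SUFFIXES = ['ations', 'ation', 'ions', 'ion', 'ings', 'ing', 'ed', 's'];

function toyStem(word) {
  const w = word.toLowerCase();
  for (const suffix of SUFFIXES) {
    // Strip the first matching suffix (in list order) if a 3+ character stem remains.
    if (w.endsWith(suffix) && w.length - suffix.length >= 3) {
      return w.slice(0, -suffix.length);
    }
  }
  return w;
}

// Normalization: map each stem to the surface forms that reduce to it.
function groupByStem(words) {
  const groups = new Map();
  for (const word of words) {
    const stem = toyStem(word);
    if (!groups.has(stem)) groups.set(stem, []);
    groups.get(stem).push(word);
  }
  return groups;
}

const words = ['connect', 'connected', 'connecting', 'connection', 'connections', 'run'];
const groups = groupByStem(words);
console.log(groups.size); // 2 distinct stems: "connect" and "run"
```

Six surface forms collapse into two stems, which is exactly the vocabulary reduction that makes counting and classification more robust.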

Advanced NLP with the compromise Library

compromise is another popular library for handling text data in JavaScript, known for being lightweight and efficient.

Example: Named Entity Recognition (NER)

Named Entity Recognition (NER) involves identifying and classifying entities such as people, places, and organizations in a text.

```javascript
const nlp = require('compromise');

const doc = nlp('John Smith is traveling to New York City next Monday.');

const places = doc.places().out('array');
const people = doc.people().out('array');

console.log('Places:', places); // Output: ['New York City']
console.log('People:', people); // Output: ['John Smith']
```

Code Explanation:

- NER: This example shows how to extract named entities like people and places from a sentence.

- Place and People Extraction: The `places()` and `people()` methods match the recognized entities in the text, and `.out('array')` returns each match as a string in an array.
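A simple way to see how dictionary-driven entity extraction works is a gazetteer lookup: scan the words and, at each position, take the longest known phrase. The sketch below is a toy illustration with a hypothetical three-entry `GAZETTEER`; it is not how compromise is implemented, and it cannot recognize any name it has not been given.

```javascript
// Toy gazetteer-based entity tagger using longest-match lookup.
// GAZETTEER entries are hypothetical examples, not compromise's lexicon.
const GAZETTEER = new Map([
  ['new york city', 'Place'],
  ['new york', 'Place'],
  ['john smith', 'Person'],
]);
const MAX_PHRASE_WORDS = 3;

function findEntities(text) {
  const words = text.match(/[A-Za-z]+/g) || [];
  const entities = [];
  let i = 0;
  while (i < words.length) {
    let matched = false;
    // Try the longest phrase first so "New York City" wins over "New York".
    for (let n = Math.min(MAX_PHRASE_WORDS, words.length - i); n > 0; n--) {
      const phrase = words.slice(i, i + n).join(' ');
      const type = GAZETTEER.get(phrase.toLowerCase());
      if (type) {
        entities.push({ text: phrase, type });
        i += n; // skip past the matched phrase
        matched = true;
        break;
      }
    }
    if (!matched) i += 1;
  }
  return entities;
}

// Returns entries for 'John Smith' (Person) and 'New York City' (Place).
console.log(findEntities('John Smith is traveling to New York City next Monday.'));
```

Longest-match is the key design choice here: without it, the shorter "New York" entry would split "New York City" into a place plus a stray word.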

Example: Sentiment Analysis

Sentiment analysis determines whether the emotion behind a given text is positive, negative, or neutral.

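Current versions of compromise do not include a `sentiment()` method in their core API, so `doc.sentiment()` is not available. This example instead uses the `SentimentAnalyzer` from the natural library installed earlier, with its built-in AFINN word list:

```javascript
const natural = require('natural');

// SentimentAnalyzer(language, stemmer, vocabulary); 'afinn' is one of its built-in word lists.
const analyzer = new natural.SentimentAnalyzer('English', natural.PorterStemmer, 'afinn');
const tokenizer = new natural.WordTokenizer();

// getSentiment expects an array of word tokens, not a raw string.
const tokens = tokenizer.tokenize('This movie is absolutely wonderful!');
const sentiment = analyzer.getSentiment(tokens);

console.log('Sentiment Score:', sentiment);
```

Code Explanation:

- Sentiment Score: `getSentiment` sums the polarity of each token and divides by the number of tokens, so scores above 0 indicate positive sentiment, scores below 0 negative sentiment, and 0 neutral. This can be used to analyze user feedback, product reviews, or social media posts.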
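The idea behind lexicon-based sentiment scoring can be sketched without any library: look up each word's polarity in a word list and average over all tokens. The `LEXICON` values here are hypothetical stand-ins for a real list such as AFINN, and this simplified version ignores negation ("not good") and intensifiers.

```javascript
// Simplified lexicon-based sentiment: average word polarity over all tokens.
// LEXICON holds hypothetical AFINN-style scores in [-5, 5]; real lists are much larger.
const LEXICON = new Map([
  ['wonderful', 4], ['love', 3], ['good', 3],
  ['bad', -3], ['terrible', -3], ['boring', -3],
]);

function sentimentScore(text) {
  const tokens = text.toLowerCase().match(/[a-z']+/g) || [];
  if (tokens.length === 0) return 0;
  // Unknown words contribute 0 but still count toward the average,
  // so long neutral passages pull the score toward 0.
  const total = tokens.reduce((sum, token) => sum + (LEXICON.get(token) || 0), 0);
  return total / tokens.length;
}

function label(score) {
  if (score > 0) return 'positive';
  if (score < 0) return 'negative';
  return 'neutral';
}

const score = sentimentScore('This movie is absolutely wonderful!');
console.log(score, label(score)); // 0.8 positive
```

A `Map` is used instead of a plain object so that words like "constructor" are not accidentally resolved through the object prototype.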

Integrating with External NLP APIs

Several cloud providers offer NLP APIs that you can call from Node.js for tasks like sentiment analysis, entity extraction, and text classification (speech recognition and translation are typically offered as separate services). Some popular ones include:

- Google Cloud NLP API

- IBM Watson Natural Language Understanding

- Microsoft Azure Text Analytics API

Example: Using Google Cloud NLP API

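1. Set Up Google Cloud NLP: Create a Google Cloud account, enable the Cloud Natural Language API, and configure authentication (for example, by pointing the GOOGLE_APPLICATION_CREDENTIALS environment variable at a service-account key file).

2. Install `@google-cloud/language`:

```bash
npm install @google-cloud/language
```

3. Example Code for Analyzing Sentiment: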

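```javascript
const language = require('@google-cloud/language');

// Uses Application Default Credentials (e.g., GOOGLE_APPLICATION_CREDENTIALS).
const client = new language.LanguageServiceClient();

async function analyzeSentiment(text) {
  const document = {
    content: text,
    type: 'PLAIN_TEXT',
  };

  // The client resolves to an array whose first element is the API response.
  const [result] = await client.analyzeSentiment({ document });
  const sentiment = result.documentSentiment;

  console.log(`Sentiment score: ${sentiment.score}`);
  console.log(`Sentiment magnitude: ${sentiment.magnitude}`);
}

// Surface authentication or network errors instead of an unhandled rejection.
analyzeSentiment('The product is amazing and I love it!').catch(console.error);
```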

Code Explanation:

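- Google Cloud Client: The `LanguageServiceClient` sends the text to the Natural Language API for analysis.

- Sentiment Analysis: The API returns a `documentSentiment` object whose `score` ranges from -1.0 (negative) to 1.0 (positive), with values near 0 indicating neutral or mixed text, and whose `magnitude` (0 or greater) reflects the overall strength of emotion.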
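Google's documentation notes that `magnitude` is not normalized by text length, and that a near-zero `score` can mean either low-emotion text or strong positive and negative emotions that cancel out; `magnitude` helps tell those cases apart. The helper below is a hypothetical sketch for turning the `documentSentiment` fields into a label. The 0.25 and 1.0 thresholds are assumptions you would tune on your own data, and the function needs no API access because it only reads plain objects.

```javascript
// Hypothetical helper: map a documentSentiment { score, magnitude } to a label.
// Thresholds are illustrative assumptions, not values from the API.
function interpretSentiment({ score, magnitude }, { scoreThreshold = 0.25, mixedMagnitude = 1.0 } = {}) {
  if (score >= scoreThreshold) return 'positive';
  if (score <= -scoreThreshold) return 'negative';
  // Near-zero score: a large magnitude suggests mixed emotions rather than neutral text.
  return magnitude >= mixedMagnitude ? 'mixed' : 'neutral';
}

console.log(interpretSentiment({ score: 0.9, magnitude: 0.9 })); // positive
console.log(interpretSentiment({ score: 0.0, magnitude: 3.2 })); // mixed
console.log(interpretSentiment({ score: 0.1, magnitude: 0.2 })); // neutral
```

In the earlier example, you could pass `result.documentSentiment` directly to this function.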

Building a Chatbot with NLP

Chatbots are a common application of NLP. Let’s build a simple chatbot with Node.js and Express that uses the compromise library for basic text understanding.

Example: Chatbot with NLP

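```javascript
// Requires: npm install express compromise
const express = require('express');
const nlp = require('compromise');

const app = express();

// Express's built-in JSON parser (4.16+) replaces the separate body-parser package.
app.use(express.json());

app.post('/chat', (req, res) => {
  const userMessage = req.body?.message;

  // Reject requests without a text message instead of passing undefined to compromise.
  if (typeof userMessage !== 'string') {
    return res.status(400).json({ reply: 'Please send a "message" string in the JSON body.' });
  }

  const doc = nlp(userMessage);

  if (doc.has('weather')) {
    res.json({ reply: "Sure, I can tell you about the weather!" });
  } else if (doc.has('news')) {
    res.json({ reply: "Here are the latest news headlines!" });
  } else {
    res.json({ reply: "Sorry, I didn't understand that." });
  }
});

app.listen(3000, () => console.log('Chatbot server running on port 3000'));
```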

Code Explanation:

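- NLP Integration: The chatbot parses each message with the `compromise` library and detects the user's intent through simple keyword matching with `doc.has()`.

- Contextual Responses: Based on the identified keywords (like “weather” or “news”), the bot returns a matching reply, or a fallback message when no keyword is found.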
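Keyword checks like these are easier to extend when the intents live in a table instead of an if/else chain. The following dependency-free sketch shows one way to do that; `INTENTS`, `FALLBACK`, and `detectIntent` are hypothetical names, and plain lowercase word matching stands in for compromise's `doc.has()`.

```javascript
// Hypothetical table-driven intent router: the first intent whose keywords
// appear in the message wins. Plain word matching replaces compromise here.
const INTENTS = [
  { name: 'weather', keywords: ['weather', 'forecast', 'rain'], reply: 'Sure, I can tell you about the weather!' },
  { name: 'news', keywords: ['news', 'headlines'], reply: 'Here are the latest news headlines!' },
];
const FALLBACK = { name: 'unknown', reply: "Sorry, I didn't understand that." };

function detectIntent(message) {
  // Compare whole lowercase words so "training" does not match "rain".
  const words = new Set(String(message).toLowerCase().match(/[a-z]+/g) || []);
  return INTENTS.find((intent) => intent.keywords.some((k) => words.has(k))) || FALLBACK;
}

console.log(detectIntent('Will it rain tomorrow?').name); // weather
console.log(detectIntent('Show me the headlines').name);  // news
console.log(detectIntent('Tell me a joke').name);         // unknown
```

Inside the `/chat` route, the handler could then respond with `res.json({ reply: detectIntent(req.body.message).reply })`, and adding a new intent becomes a one-line table change.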

Advanced Techniques: Training a Custom NLP Model

For more control, you may want to train a custom NLP model. Libraries such as TensorFlow.js, and platforms such as Hugging Face, let you build or fine-tune models that can be integrated into your Node.js application.

Steps to Train a Custom NLP Model:

- Data Collection: Gather training data based on your use case (e.g., labeled customer support logs).

- Preprocessing: Tokenize and clean the data to prepare it for training.

- Model Training: Use frameworks like TensorFlow.js to build and train your model.

- Deploying the Model: Integrate the trained model into your Node.js application.
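The four steps above can be illustrated end to end with a very small model. The sketch below swaps in multinomial Naive Bayes, a classic statistical text classifier, instead of a TensorFlow.js neural network, so it runs with no dependencies. The labeled support messages are made-up examples, and a real model would need far more data and a held-out evaluation set.

```javascript
// Minimal multinomial Naive Bayes text classifier with add-one (Laplace) smoothing.
// Swapped in for illustration; this is not TensorFlow.js, and the data is made up.

// Preprocessing: lowercase and split into word tokens.
function preprocess(text) {
  return text.toLowerCase().match(/[a-z]+/g) || [];
}

// Training: count documents per label and word occurrences per label.
function train(examples) {
  const model = { docs: 0, docCounts: new Map(), wordCounts: new Map(), totals: new Map(), vocab: new Set() };
  for (const { text, label } of examples) {
    model.docs += 1;
    model.docCounts.set(label, (model.docCounts.get(label) || 0) + 1);
    if (!model.wordCounts.has(label)) {
      model.wordCounts.set(label, new Map());
      model.totals.set(label, 0);
    }
    const counts = model.wordCounts.get(label);
    for (const word of preprocess(text)) {
      counts.set(word, (counts.get(word) || 0) + 1);
      model.totals.set(label, model.totals.get(label) + 1);
      model.vocab.add(word);
    }
  }
  return model;
}

// Prediction: pick the label with the highest log-probability.
function classify(model, text) {
  let bestLabel = null;
  let bestScore = -Infinity;
  for (const [label, docCount] of model.docCounts) {
    const counts = model.wordCounts.get(label);
    const denominator = model.totals.get(label) + model.vocab.size;
    // Log space avoids numeric underflow from multiplying many small probabilities.
    let score = Math.log(docCount / model.docs);
    for (const word of preprocess(text)) {
      if (!model.vocab.has(word)) continue; // skip words never seen in training
      score += Math.log(((counts.get(word) || 0) + 1) / denominator);
    }
    if (score > bestScore) {
      bestScore = score;
      bestLabel = label;
    }
  }
  return bestLabel;
}

// Hypothetical labeled customer-support messages (the "data collection" step).
const model = train([
  { text: 'I want a refund for my order', label: 'billing' },
  { text: 'I was charged twice on my card', label: 'billing' },
  { text: 'The app crashes when I log in', label: 'technical' },
  { text: 'I cannot reset my password', label: 'technical' },
]);

console.log(classify(model, 'Please refund my card'));          // billing
console.log(classify(model, 'The app will not let me log in')); // technical
```

Deployment in this setting could be as simple as serializing the counts to JSON and loading them when the Node.js server starts.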

Natural Language Processing is an essential technology for modern applications. With Node.js, you can integrate NLP smoothly, whether you use libraries like natural and compromise or external cloud NLP APIs.

Happy coding! ❤️