Handling SEO in React Applications

Search Engine Optimization (SEO) is the practice of optimizing your website to increase its visibility in search engines such as Google, Bing, or Yahoo. The more visible your site is in search results, the more traffic it is likely to attract. Traditional server-rendered websites have a natural advantage here, since crawlers receive complete HTML; single-page applications (SPAs) built with React can struggle because content is often rendered dynamically on the client side. This chapter walks you through strategies and techniques for optimizing SEO in React applications, from basic to advanced.

What is SEO and Why is it Important for React Applications?

Search Engine Optimization (SEO) is a set of practices aimed at improving the visibility and ranking of web pages on search engines. Good SEO practices ensure that:

- Your web pages are indexed by search engines.

- Your content is easily discoverable by users searching for related terms.

- You gain organic traffic, reducing the need for paid advertisements.

For React applications, particularly single-page applications (SPAs), SEO is more challenging because much of the content is rendered on the client side after the initial page load. Some crawlers do not execute JavaScript at all, and even those that do (such as Googlebot) may defer rendering, so content that appears only after scripts run can be indexed late or missed entirely.

Challenges of SEO in Single-Page React Applications

Single-Page Applications (SPAs) load a single HTML page and dynamically update content using JavaScript. While this approach provides a fast and smooth user experience, it can be problematic for SEO:

- JavaScript Rendering: Not all search engines render JavaScript. Those that don't see only the initial HTML shell, which is often little more than an empty root element, instead of your app's actual content.

- Dynamic Routing: Client-side routing changes the URL without loading a new HTML document, so every route starts from the same HTML shell. This can make it hard for search engines to tell what each page actually contains.
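To make the problem concrete, the sketch below compares what a crawler that does not run JavaScript can read from a client-rendered shell versus a server-rendered page. The visibleTextWithoutJs and isIndexableWithoutJs helpers are hypothetical, not part of React or any SEO tool, and stripping tags with regular expressions is only a rough approximation of what a real crawler does.

```javascript
// Hypothetical helper: approximates the text a crawler that does NOT
// execute JavaScript can read from the initial HTML response.
function visibleTextWithoutJs(html) {
  return html
    .replace(/<script[\s\S]*?<\/script>/gi, " ") // scripts never run for this crawler
    .replace(/<[^>]+>/g, " ") // drop remaining tags, keep their text
    .replace(/\s+/g, " ")
    .trim();
}

function isIndexableWithoutJs(html, phrase) {
  return visibleTextWithoutJs(html).includes(phrase);
}

// Typical client-rendered shell: the content only exists after bundle.js runs.
const spaShell =
  '<html><body><div id="root"></div><script src="/bundle.js"></script></body></html>';

// Server-rendered response: the content is already in the HTML.
const ssrPage =
  '<html><body><div id="root"><h1>Blue Running Shoes</h1></div>' +
  '<script src="/bundle.js"></script></body></html>';

console.log(isIndexableWithoutJs(spaShell, "Blue Running Shoes")); // false
console.log(isIndexableWithoutJs(ssrPage, "Blue Running Shoes")); // true
```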

Server-Side Rendering (SSR) with Next.js for Better SEO

Server-Side Rendering (SSR) is one of the most effective ways to improve SEO in React applications. With SSR, the content of a page is generated on the server and sent to the browser, ensuring search engines can index the fully rendered HTML.

Using Next.js for SSR:

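Next.js is a popular React framework that supports SSR out of the box. The examples in this chapter use its Pages Router data-fetching functions (getServerSideProps, getStaticProps, and getStaticPaths), which are exported from page files in the pages/ directory.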

Basic Example of SSR with Next.js:

// e.g. pages/index.js (Next.js Pages Router)
import React from 'react';

function HomePage({ data }) {
  return (
    <div>
      <h1>{data.title}</h1>
      <p>{data.description}</p>
    </div>
  );
}

// Runs only on the server, once for every incoming request.
export async function getServerSideProps() {
  const res = await fetch('https://api.example.com/data');
  const data = await res.json();

  return {
    props: { data }, // Passed to the page component as props
  };
}

export default HomePage;

In this example, getServerSideProps runs on the server for every request, and the page reaches the browser as fully rendered HTML. Search engines therefore see the content in the initial response, without having to run any JavaScript.
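Next.js performs the rendering for you, but it can help to see what "fully rendered HTML" means in practice. The framework-free sketch below stands in for the per-request step: data available on the server is turned into a complete HTML document before anything is sent. The renderHomePage name and the { title, description } data shape are assumptions mirroring the example above; values are HTML-escaped, which React does automatically for JSX text.

```javascript
// Escape characters that would otherwise be interpreted as HTML.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Hypothetical per-request renderer, same data shape as getServerSideProps above.
function renderHomePage(data) {
  const title = escapeHtml(data.title);
  const description = escapeHtml(data.description);
  return [
    "<!DOCTYPE html>",
    "<html>",
    "<head>",
    `<title>${title}</title>`,
    `<meta name="description" content="${description}">`,
    "</head>",
    "<body>",
    `<div id="root"><h1>${title}</h1><p>${description}</p></div>`,
    "</body>",
    "</html>",
  ].join("\n");
}

const html = renderHomePage({ title: "Fish & Chips", description: "Fresh <daily> specials" });
console.log(html.includes("<h1>Fish &amp; Chips</h1>")); // true
```

Because the content is already inside the root element, a crawler gets it from the first response; in a React app, the client-side bundle then hydrates this markup instead of building it from scratch.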

Static Site Generation (SSG) in React

Static Site Generation (SSG) is another approach that builds the HTML files at build time. This is useful for pages with content that doesn’t need to change frequently.

Example of SSG with Next.js:

// e.g. pages/posts/[id].js (Next.js Pages Router)
import React from 'react';

function BlogPost({ post }) {
  return (
    <div>
      <h1>{post.title}</h1>
      <p>{post.content}</p>
    </div>
  );
}

// Runs at build time: tells Next.js which /posts/[id] pages to pre-render.
export async function getStaticPaths() {
  const res = await fetch('https://api.example.com/posts');
  const posts = await res.json();

  const paths = posts.map((post) => ({
    params: { id: post.id.toString() },
  }));

  return { paths, fallback: false };
}

// Runs at build time, once for each path returned by getStaticPaths.
export async function getStaticProps({ params }) {
  const res = await fetch(`https://api.example.com/posts/${params.id}`);
  const post = await res.json();

  return { props: { post } };
}

export default BlogPost;

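Here, getStaticPaths returns the list of blog post paths to pre-render, and getStaticProps fetches the data for each of those pages at build time. Every page is therefore served as complete HTML, which improves SEO. Because fallback is false, any post id not returned by getStaticPaths results in a 404 page.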
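Conceptually, a static build runs once, renders one HTML page per path, and then serves those same files for every request. The sketch below mimics that loop without Next.js; buildStaticSite, serve, and the in-memory posts array are hypothetical stand-ins for getStaticPaths, getStaticProps, and the API call above, and a real build would write each page to disk rather than to a Map.

```javascript
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Render the final HTML for one post (the job of getStaticProps + the component).
function renderPost(post) {
  const title = escapeHtml(post.title);
  return (
    `<!DOCTYPE html><html><head><title>${title}</title></head>` +
    `<body><h1>${title}</h1><p>${escapeHtml(post.content)}</p></body></html>`
  );
}

// Hypothetical build step: every page is rendered once, ahead of any request.
function buildStaticSite(posts) {
  const pages = new Map();
  for (const post of posts) {
    pages.set(`/posts/${String(post.id)}`, renderPost(post)); // same shape as params.id
  }
  return pages;
}

// Like fallback: false, paths that were not generated at build time do not exist.
function serve(pages, path) {
  return pages.has(path)
    ? { status: 200, body: pages.get(path) }
    : { status: 404, body: "Not Found" };
}

const pages = buildStaticSite([
  { id: 1, title: "Hello SSG", content: "Built once, served many times." },
  { id: 2, title: "Second post", content: "Also pre-rendered." },
]);
console.log([...pages.keys()]); // [ '/posts/1', '/posts/2' ]
console.log(serve(pages, "/posts/3").status); // 404
```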

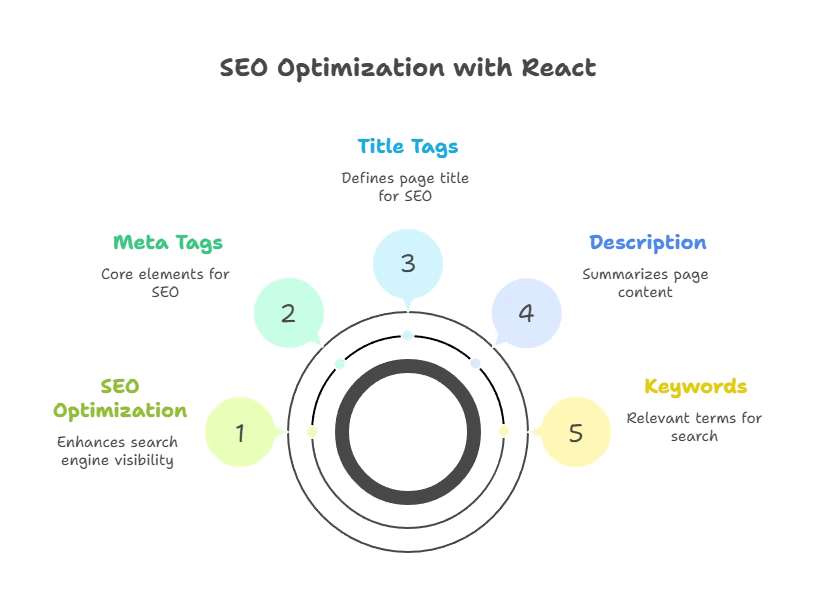

Meta Tags, Title Tags, and Dynamic Metadata in React

The title tag and meta tags such as the description are critical for SEO: they help search engines understand what the page is about, and they are often used for the title and snippet shown in search results.

Adding Meta Tags in React:

Using react-helmet (a popular library for managing the document head) allows you to easily add and update meta tags dynamically.

Example:

import { Helmet } from 'react-helmet';

function HomePage() {
  return (
    <div>
      <Helmet>
        <title>Home Page</title>
        <meta name="description" content="This is the home page of our website." />
        <meta name="keywords" content="React, SEO, Home" />
      </Helmet>
      <h1>Welcome to Our Website</h1>
    </div>
  );
}

export default HomePage;

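The example above sets the page title and meta tags from within the component. Keep in mind that in a purely client-rendered app, react-helmet updates the head only after JavaScript runs, so crawlers that do not execute JavaScript will not see these tags; combining it with server-side rendering avoids this. Also note that Google ignores the keywords meta tag, so the title and description deserve most of your attention.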
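Whichever library manages the head, the end result is a handful of tags in the HTML. The sketch below builds those tags from a plain metadata object and flags obvious problems. The buildHeadTags and checkMetadata names are hypothetical, and the length limits (60 characters for titles, 160 for descriptions) are rough rules of thumb rather than published limits; search engines truncate snippets by display width, not a fixed character count.

```javascript
// Escape characters that are special in HTML text and attribute values.
function escapeAttr(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Hypothetical helper: turn page metadata into <head> markup.
function buildHeadTags({ title, description }) {
  return [
    `<title>${escapeAttr(title)}</title>`,
    `<meta name="description" content="${escapeAttr(description)}">`,
  ].join("\n");
}

// Rough checks; the default limits are assumptions, not official rules.
function checkMetadata({ title, description }, { maxTitle = 60, maxDescription = 160 } = {}) {
  const warnings = [];
  if (!title) warnings.push("missing title");
  else if (title.length > maxTitle) warnings.push("title may be truncated");
  if (!description) warnings.push("missing description");
  else if (description.length > maxDescription) warnings.push("description may be truncated");
  return warnings;
}

const meta = { title: "Home Page", description: "This is the home page of our website." };
console.log(buildHeadTags(meta));
console.log(checkMetadata(meta)); // []
```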

Handling Robots.txt and Sitemap.xml in React

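A robots.txt file tells search engine crawlers which parts of your site they can or cannot access. A sitemap.xml helps search engines understand the structure of your site and discover all pages. Note that Disallow controls crawling, not indexing: a disallowed URL can still appear in search results if other pages link to it, so robots.txt is not a way to hide private pages.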

Example of robots.txt:

User-agent: *
Disallow: /private-page
Allow: /
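Crawlers resolve Allow and Disallow by matching the URL path against each rule. Under the Robots Exclusion Protocol (RFC 9309), the most specific (longest) matching rule wins, and Allow wins a tie. The sketch below applies that rule to the file above; parseRobots and isAllowed are hypothetical helpers that treat the file as a single user-agent group and match plain path prefixes only, ignoring the * and $ wildcards that real crawlers support.

```javascript
// Simplified parser: one user-agent group, no wildcard support.
function parseRobots(text) {
  const rules = [];
  for (const rawLine of text.split("\n")) {
    const line = rawLine.split("#")[0].trim(); // strip comments
    const match = /^(allow|disallow)\s*:\s*(.*)$/i.exec(line);
    if (match && match[2]) {
      rules.push({ allow: match[1].toLowerCase() === "allow", path: match[2] });
    }
  }
  return rules;
}

// Longest matching rule wins; Allow wins a tie (RFC 9309).
function isAllowed(rules, urlPath) {
  let best = null;
  for (const rule of rules) {
    if (!urlPath.startsWith(rule.path)) continue;
    if (
      best === null ||
      rule.path.length > best.path.length ||
      (rule.path.length === best.path.length && rule.allow)
    ) {
      best = rule;
    }
  }
  return best === null ? true : best.allow; // no matching rule means allowed
}

const robotsTxt = `User-agent: *
Disallow: /private-page
Allow: /`;

const rules = parseRobots(robotsTxt);
console.log(isAllowed(rules, "/about")); // true
console.log(isAllowed(rules, "/private-page")); // false
```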

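Generating sitemap.xml:

Rather than maintaining a sitemap by hand, you can generate it with a library such as next-sitemap in Next.js, which can also write robots.txt for you through its generateRobotsTxt option.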

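// next-sitemap.config.js
// Run after each build, e.g. via "postbuild": "next-sitemap" in package.json.
module.exports = {
  siteUrl: 'https://www.example.com',
  generateRobotsTxt: true, // also generate robots.txt
};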
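If you are not using Next.js, or want to see what a generator such as next-sitemap produces, a sitemap is a small XML file in the sitemaps.org format. The sketch below builds one from a list of pages; buildSitemap and the { loc, lastmod } input shape are assumptions, and every loc must be an absolute, XML-escaped URL.

```javascript
// Escape the five XML special characters (needed for "&" in query strings).
function escapeXml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Hypothetical generator following the sitemaps.org 0.9 schema.
function buildSitemap(pages) {
  const entries = pages.map(({ loc, lastmod }) => {
    const lastmodTag = lastmod ? `<lastmod>${escapeXml(lastmod)}</lastmod>` : "";
    return `  <url><loc>${escapeXml(loc)}</loc>${lastmodTag}</url>`;
  });
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...entries,
    "</urlset>",
  ].join("\n");
}

const sitemap = buildSitemap([
  { loc: "https://www.example.com/", lastmod: "2024-01-15" },
  { loc: "https://www.example.com/search?q=react&page=2" },
]);
console.log(sitemap);
```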

Lazy Loading and its Impact on SEO

Lazy loading is a performance optimization technique that loads content only when it is needed. However, applied carelessly it can hide content from search engines: crawlers generally do not scroll or click, so content that loads only in response to those actions may never be indexed.

To avoid this, load essential content up front and do not lazy-load anything that is critical for SEO.

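Example:

import React from 'react';

// MyComponent's code is downloaded only when LazyComponent first renders.
const LazyComponent = React.lazy(() => import('./MyComponent'));

function App() {
  // Until the chunk has loaded, only the fallback is rendered.
  return (
    <React.Suspense fallback={<div>Loading...</div>}>
      <LazyComponent />
    </React.Suspense>
  );
}

In a client-rendered app, MyComponent's content exists only after its chunk has been downloaded and rendered, so keep SEO-critical text out of lazily loaded components.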
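For images, the browser's native loading="lazy" attribute is a crawler-friendly option: the img element and its src stay in the HTML, so crawlers can still discover the image, while the browser postpones the download. The sketch below keeps the first image (an assumed above-the-fold count) eager, since lazy-loading the main image can delay the page's largest paint. The imageTags helper and its eagerCount default are hypothetical.

```javascript
// Escape characters that are special inside HTML attribute values.
function escapeAttr(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Hypothetical helper: the first `eagerCount` images load immediately,
// the rest use the browser's native lazy loading.
function imageTags(images, eagerCount = 1) {
  return images.map(({ src, alt, width, height }, index) => {
    const loading = index < eagerCount ? "eager" : "lazy";
    // Explicit width and height reserve space, avoiding layout shift.
    return (
      `<img src="${escapeAttr(src)}" alt="${escapeAttr(alt)}" ` +
      `width="${width}" height="${height}" loading="${loading}">`
    );
  });
}

const tags = imageTags([
  { src: "/images/hero.webp", alt: "Product hero shot", width: 1200, height: 600 },
  { src: "/images/detail.webp", alt: "Close-up of the sole", width: 600, height: 400 },
]);
console.log(tags.join("\n"));
```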

Structured Data and Schema Markup for SEO in React

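Structured data helps search engines understand the content of your page. Schema.org is the most widely used vocabulary for structured data, and JSON-LD, a script block containing JSON, is the format Google recommends for adding it to a page.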

Adding JSON-LD Structured Data:

import { Helmet } from 'react-helmet';

function ProductPage({ product }) {
  const productSchema = {
    "@context": "https://schema.org",
    "@type": "Product",
    "name": product.name,
    "image": product.image,
    "description": product.description,
    "sku": product.sku,
    "offers": {
      "@type": "Offer",
      "priceCurrency": "USD",
      "price": product.price,
    }
  };

  return (
    <div>
      <Helmet>
        <script type="application/ld+json">{JSON.stringify(productSchema)}</script>
      </Helmet>
      <h1>{product.name}</h1>
      <p>{product.description}</p>
    </div>
  );
}

export default ProductPage;


This gives search engines an explicit, machine-readable description of the product, which can make the page eligible for rich results such as price information in search listings.
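Because JSON-LD is injected as the text of a script element, a product field containing the string </script> could end the script early and break the page or open an injection hole. A common safeguard is to escape < as \u003c when serializing, which still yields valid JSON. The sketch below builds the same Product object as the component above in a plain function; productJsonLd and toJsonLd are hypothetical names.

```javascript
// Same fields as the productSchema object in the component above.
function productJsonLd(product) {
  return {
    "@context": "https://schema.org",
    "@type": "Product",
    name: product.name,
    image: product.image,
    description: product.description,
    sku: product.sku,
    offers: {
      "@type": "Offer",
      priceCurrency: "USD",
      price: product.price,
    },
  };
}

// Serialize for embedding inside <script type="application/ld+json">.
// Escaping "<" stops a "</script>" inside the data from closing the tag early.
function toJsonLd(data) {
  return JSON.stringify(data).replace(/</g, "\\u003c");
}

const product = {
  name: "Trail Shoe",
  image: "https://www.example.com/img/trail-shoe.jpg",
  description: "Grippy sole </script> included",
  sku: "TS-001",
  price: "89.99",
};
const jsonLd = toJsonLd(productJsonLd(product));
console.log(jsonLd.includes("</script>")); // false
```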

Optimizing React Application Performance for SEO

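Page speed is a ranking factor, and slow loading times also lead to a poor user experience. Google measures page experience partly through the Core Web Vitals, which cover loading speed, responsiveness, and visual stability.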
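Google publishes "good" and "poor" thresholds for each Core Web Vital: Largest Contentful Paint (LCP) at 2.5 s and 4 s, Interaction to Next Paint (INP) at 200 ms and 500 ms, and Cumulative Layout Shift (CLS) at 0.1 and 0.25. The sketch below rates measured values against those thresholds; rateMetric is a hypothetical helper, and collecting the measurements (for example, from real-user field data) is outside its scope.

```javascript
// [upper bound for "good", upper bound for "needs improvement"]
const THRESHOLDS = {
  LCP: [2500, 4000], // milliseconds
  INP: [200, 500], // milliseconds
  CLS: [0.1, 0.25], // unitless layout-shift score
};

function rateMetric(name, value) {
  const limits = THRESHOLDS[name];
  if (!limits) throw new Error(`Unknown metric: ${name}`);
  const [good, poor] = limits;
  if (value <= good) return "good";
  if (value <= poor) return "needs improvement";
  return "poor";
}

console.log(rateMetric("LCP", 1800)); // good
console.log(rateMetric("INP", 350)); // needs improvement
console.log(rateMetric("CLS", 0.3)); // poor
```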

Techniques to Optimize Performance:

- Code Splitting: Use React’s lazy() and Suspense to load code only when it is needed.

- Minify JavaScript and CSS: Use build tools like Webpack to minify and compress files.

- Optimize Images: Use modern formats like WebP and lazy-load images below the fold.
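Adopting WebP does not mean dropping older formats: a picture element lets the browser use the first source type it supports and fall back to the plain img otherwise. The sketch below builds that markup; pictureTag is hypothetical and assumes a .webp copy of each .jpg or .png sits next to the original file.

```javascript
// Escape characters that are special inside HTML attribute values.
function escapeAttr(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Hypothetical helper: assumes "photo.webp" exists next to "photo.jpg" or "photo.png".
function pictureTag({ src, alt, width, height }) {
  const webpSrc = src.replace(/\.(jpe?g|png)$/i, ".webp");
  return [
    "<picture>",
    // Browsers that support WebP pick this source.
    `  <source srcset="${escapeAttr(webpSrc)}" type="image/webp">`,
    // Other browsers load the img, which also carries the alt text for everyone.
    `  <img src="${escapeAttr(src)}" alt="${escapeAttr(alt)}" width="${width}" height="${height}">`,
    "</picture>",
  ].join("\n");
}

console.log(pictureTag({ src: "/images/shoe.jpg", alt: "Trail shoe", width: 800, height: 600 }));
```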

Example of Code Splitting:

import React from 'react';

const Home = React.lazy(() => import('./Home'));

function App() {
  return (
    <React.Suspense fallback={<div>Loading...</div>}>
      <Home />
    </React.Suspense>
  );
}
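React.lazy calls its import function the first time the component renders and reuses the result afterwards. The sketch below shows that memoization idea in plain JavaScript for route-based splitting, where each route's chunk is requested only when the route is first visited. createRouteLoader and the route table are hypothetical, and the loader functions stand in for dynamic import() calls; this is not React's actual implementation.

```javascript
// Hypothetical route table: each value stands in for () => import('./Page').
function createRouteLoader(routes) {
  const cache = new Map(); // route -> promise of the loaded module
  return function load(route) {
    if (!routes[route]) {
      return Promise.reject(new Error(`No chunk for route ${route}`));
    }
    if (!cache.has(route)) {
      cache.set(route, routes[route]()); // first visit: start loading the chunk
    }
    return cache.get(route); // later visits reuse the same promise
  };
}

let homeLoads = 0;
const load = createRouteLoader({
  "/": () => {
    homeLoads += 1;
    return Promise.resolve({ default: "HomePage" });
  },
  "/about": () => Promise.resolve({ default: "AboutPage" }),
});

load("/");
load("/");
console.log(homeLoads); // 1
```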

Using Canonical URLs in React Applications

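A canonical URL tells search engines which URL is the preferred version of a page when the same content is reachable at several addresses (for example, with tracking parameters, or with and without a trailing slash). This prevents duplicate content issues and consolidates ranking signals on one URL instead of splitting them across duplicates.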

Example using react-helmet:

<Helmet>
  <link rel="canonical" href="https://www.example.com/home" />
</Helmet>
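Canonical URLs are usually derived from the current URL rather than typed by hand. The sketch below uses the standard URL class to drop the fragment and tracking parameters, strip a trailing slash, and keep only an allow-list of parameters that genuinely change the content. canonicalUrl is hypothetical, and its normalization choices (such as no trailing slash) are assumptions; what matters is that every duplicate maps to the same absolute URL.

```javascript
// Hypothetical helper. Assumed conventions: no fragment, no trailing slash
// (except for the root path), and only allow-listed query parameters, sorted by name.
function canonicalUrl(rawUrl, { keepParams = [] } = {}) {
  const url = new URL(rawUrl); // the URL parser already lowercases the host
  url.hash = "";

  const kept = [...url.searchParams.entries()]
    .filter(([key]) => keepParams.includes(key))
    .sort(([a], [b]) => a.localeCompare(b));
  url.search = "";
  for (const [key, value] of kept) {
    url.searchParams.append(key, value);
  }

  if (url.pathname.length > 1 && url.pathname.endsWith("/")) {
    url.pathname = url.pathname.replace(/\/+$/, "");
  }
  return url.toString();
}

console.log(canonicalUrl("https://WWW.Example.com/home/?utm_source=newsletter#top"));
// https://www.example.com/home
```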

Handling SEO in React applications requires careful consideration of rendering strategies, metadata, and performance. By using server-side rendering (SSR) or static site generation (SSG), well-crafted meta tags, and performance best practices, React developers can make their applications SEO-friendly. Good SEO makes your React application more discoverable, driving traffic and improving overall user engagement. Happy coding! ❤️