Resource Management and Optimization

This chapter covers resource management and optimization techniques in MongoDB to help administrators and developers maximize performance, reduce costs, and ensure smooth scaling. We will look into optimizing CPU, memory, storage, and network usage and explore techniques to make the best use of MongoDB’s built-in tools for resource allocation and performance tuning.

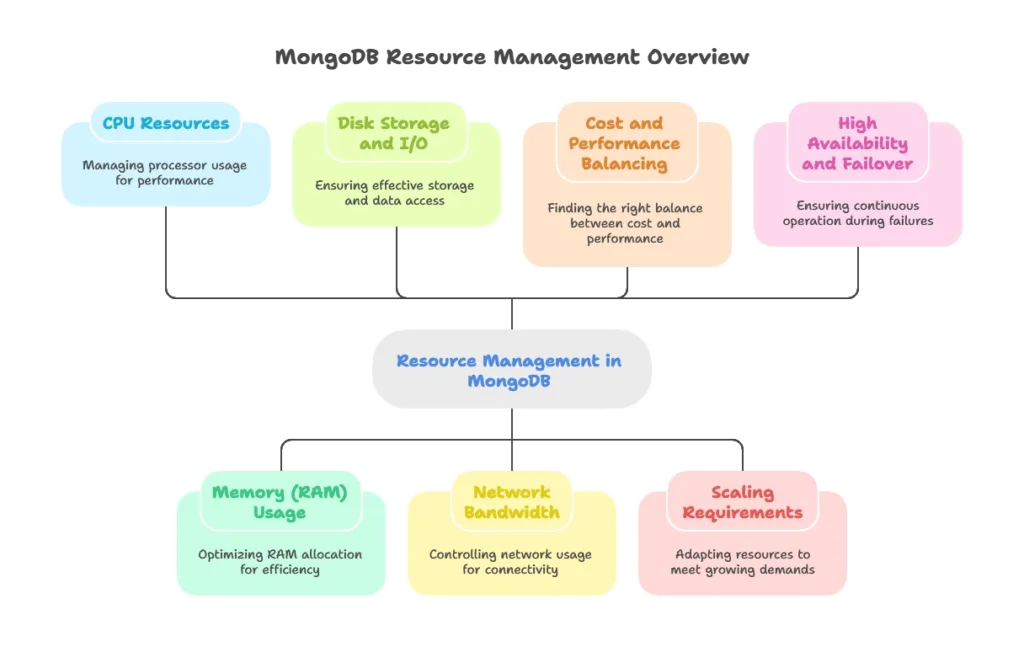

Introduction to Resource Management in MongoDB

Importance of Efficient Resource Management

Proper resource management is essential for MongoDB deployments to perform reliably under varied workloads, scale effectively, and avoid resource-related bottlenecks.

Overview of Resources in MongoDB

- CPU Resources

- Memory (RAM) Usage

- Disk Storage and I/O

- Network Bandwidth

Common Resource Management Challenges

- Balancing cost and performance

- Handling scaling requirements

- Managing high availability and failover scenarios

CPU Resource Management and Optimization

MongoDB’s CPU Requirements

Index builds, query execution (especially collection scans and in-memory sorts), compression, and replication all consume CPU. Keeping CPU usage under control is key to sustaining high throughput.

Monitoring CPU Usage

- Using MongoDB Monitoring: MongoDB Atlas provides CPU metrics.

- Command-line Monitoring: Use Linux tools like top or htop to watch the mongod process.

Optimizing CPU Utilization

- Index Optimization: Reduce CPU load by creating and maintaining efficient indexes.

- Query Optimization: Optimize queries to avoid CPU-intensive full collection scans.

- Distributing Workloads Across Replica Sets: Offload read operations to secondary members to reduce CPU strain on the primary, provided the application can tolerate slightly stale data.

Example of an optimized query:

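// Without an index on "age": the query scans the whole collection and sorts the results in memory
db.collection.find({ age: { $gt: 20 } }).sort({ age: 1 });

// With an index on "age": the same query scans only the matching index range and returns results already sorted
db.collection.createIndex({ age: 1 });
db.collection.find({ age: { $gt: 20 } }).sort({ age: 1 });

// Confirm the plan: the winning plan should show IXSCAN instead of COLLSCAN
db.collection.find({ age: { $gt: 20 } }).sort({ age: 1 }).explain("executionStats");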
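To make the difference concrete, here is a simplified model in plain Node.js JavaScript. It is not MongoDB's query engine. A "collection scan" checks every document and then sorts. An "index scan" binary-searches a sorted array of age keys and reads only the matching range, which is already in order. The sample data and function names are hypothetical.

```javascript
// Simplified model of COLLSCAN vs IXSCAN for { age: { $gt: 20 } } sorted by age.
// Illustration only: real MongoDB indexes are B-trees, not sorted arrays.

// Collection scan: examine every document, filter, then sort in memory.
function collectionScan(docs, minAgeExclusive) {
  let examined = 0;
  const matches = [];
  for (const doc of docs) {
    examined++;
    if (doc.age > minAgeExclusive) matches.push(doc);
  }
  matches.sort((a, b) => a.age - b.age); // the in-memory sort an index avoids
  return { examined, results: matches };
}

// Toy "index": entries sorted by age, each pointing back to its document position.
function buildAgeIndex(docs) {
  return docs
    .map((doc, pos) => ({ key: doc.age, pos }))
    .sort((a, b) => a.key - b.key);
}

// Index scan: binary-search for the first key > minAgeExclusive, then walk forward.
// "examined" counts index entries read in the matching range (seek probes excluded).
function indexScan(docs, index, minAgeExclusive) {
  let lo = 0;
  let hi = index.length;
  while (lo < hi) {
    const mid = (lo + hi) >> 1;
    if (index[mid].key <= minAgeExclusive) lo = mid + 1;
    else hi = mid;
  }
  const results = [];
  for (let i = lo; i < index.length; i++) results.push(docs[index[i].pos]);
  return { examined: results.length, results };
}

// Hypothetical data: 100 people whose ages are a permutation of 0..99.
const people = Array.from({ length: 100 }, (_, i) => ({ name: `p${i}`, age: (i * 37) % 100 }));
const index = buildAgeIndex(people);
console.log(collectionScan(people, 20).examined, indexScan(people, index, 20).examined);
```

Both functions return the same documents in the same order. The index scan skips every non-matching document and the final sort step, and that is where the CPU savings come from.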

Memory Management and Optimization

Understanding MongoDB Memory Usage

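- Working Set: The data and indexes your application accesses most frequently. MongoDB performs best when the working set fits in memory.

- WiredTiger Cache: The storage engine's internal cache for recently used data and index pages. MongoDB also relies on the operating system's filesystem cache for memory outside this cache.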

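Estimating the Working Set Size

- Using db.stats(): MongoDB does not report the working set directly. db.stats() does return dataSize and indexSize (in bytes by default), and you can compare these with the cache size. Indexes in particular should fit in RAM.

- Tools: Use monitoring tools such as MongoDB Atlas to gauge memory and cache usage.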
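Because the working set is not reported directly, a rough estimate has to be built from db.stats() numbers. The sketch below is plain Node.js that works on a db.stats()-shaped object. The hotDataFraction parameter is a hypothetical guess at how much of the data is accessed frequently, and the sample numbers are made up.

```javascript
// Rough working-set estimate from db.stats()-style numbers (bytes, default scale).
// hotDataFraction is a hypothetical guess of the frequently accessed share of dataSize;
// MongoDB itself does not report the working set.
function estimateWorkingSet(stats, cacheSizeBytes, hotDataFraction = 0.2) {
  if (hotDataFraction < 0 || hotDataFraction > 1) {
    throw new RangeError("hotDataFraction must be between 0 and 1");
  }
  const estimate = stats.indexSize + stats.dataSize * hotDataFraction;
  return {
    indexesFit: stats.indexSize <= cacheSizeBytes, // indexes should fit in RAM
    estimatedWorkingSetBytes: estimate,
    fitsInCache: estimate <= cacheSizeBytes,
  };
}

const GiB = 1024 ** 3;
// Hypothetical values standing in for the output of db.stats() in mongosh.
const sampleStats = { dataSize: 20 * GiB, indexSize: 2 * GiB, storageSize: 8 * GiB };
console.log(estimateWorkingSet(sampleStats, 3.5 * GiB));
```

In this made-up case the indexes fit in a 3.5 GB cache, but indexes plus 20% of the data do not. That would suggest adding memory or trimming indexes and hot data.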

Configuring WiredTiger Cache Size

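- Custom Cache Allocation: By default, the WiredTiger cache uses the larger of 50% of (RAM - 1 GB) or 256 MB. Adjust it based on available server memory, for example when several mongod processes share one host.

- Cache Size Configuration: Use the --wiredTigerCacheSizeGB option (or storage.wiredTiger.engineConfig.cacheSizeGB in the configuration file).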

Example of setting WiredTiger cache size:

mongod --wiredTigerCacheSizeGB 4
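The default cache formula is simple enough to compute before you override it. This Node.js sketch applies that formula. It also applies a hypothetical sanity check that warns when a custom value leaves too little memory outside the cache. The 2 GB reserve is an assumption, not a MongoDB rule.

```javascript
// Default WiredTiger internal cache size, in GB: the larger of
// 50% of (RAM - 1 GB) or 256 MB (0.25 GB).
function defaultWiredTigerCacheGB(ramGB) {
  return Math.max(0.5 * (ramGB - 1), 0.25);
}

// Hypothetical check for a custom --wiredTigerCacheSizeGB value: warn if it leaves
// less than reserveGB for the OS, filesystem cache, connections, and other processes.
function checkCustomCacheGB(ramGB, cacheGB, reserveGB = 2) {
  const warnings = [];
  if (cacheGB <= 0) warnings.push("cache size must be positive");
  if (ramGB - cacheGB < reserveGB) {
    warnings.push(`only ${ramGB - cacheGB} GB left outside the cache (want >= ${reserveGB} GB)`);
  }
  return warnings;
}

for (const ram of [1, 4, 8, 16]) {
  console.log(`${ram} GB RAM -> default cache ${defaultWiredTigerCacheGB(ram)} GB`);
}
console.log(checkCustomCacheGB(8, 4)); // the 4 GB example above on an 8 GB host
console.log(checkCustomCacheGB(8, 7)); // too aggressive under this heuristic
```

On an 8 GB host the default is 3.5 GB, so the example setting of 4 GB raises the cache only slightly above the default.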

Optimizing Indexes for Memory Efficiency

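- Limit Index Creation: Create indexes only for frequently queried fields. Every index consumes memory and adds work to each write.

- Compound and Partial Indexes: Compound indexes serve queries that filter or sort on several fields with a single index. Partial indexes include only the documents that match a filter, which keeps the index smaller.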

Example of compound and partial indexes:

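// Compound index: supports queries on status, or on status and date together
db.orders.createIndex({ status: 1, date: 1 });

// Partial index: only documents with status "active" are indexed
db.orders.createIndex({ date: 1 }, { partialFilterExpression: { status: "active" } });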
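A partial index saves memory because non-matching documents never enter it. The trade-off is that a query can use the index only if its condition includes the filter expression, or a subset of it. The following Node.js model handles only equality filters such as { status: "active" }. Real partial filters also accept operators such as $exists and $gt. The order data is hypothetical.

```javascript
// Simplified model of db.orders.createIndex({ date: 1 },
//   { partialFilterExpression: { status: "active" } }). Equality filters only.
function matchesEqualityFilter(doc, filter) {
  return Object.entries(filter).every(([field, value]) => doc[field] === value);
}

// Index entries exist only for documents that match the filter, sorted by key.
function buildPartialIndex(docs, keyField, filter) {
  return docs
    .filter((doc) => matchesEqualityFilter(doc, filter))
    .map((doc) => ({ key: doc[keyField], id: doc._id }))
    .sort((a, b) => (a.key < b.key ? -1 : a.key > b.key ? 1 : 0));
}

// In this equality-only model, a query may use the partial index only if it
// repeats every filter condition, so its results are guaranteed to be indexed.
function queryCanUsePartialIndex(query, filter) {
  return Object.entries(filter).every(([field, value]) => query[field] === value);
}

const orders = [
  { _id: 1, status: "active", date: "2024-03-01" },
  { _id: 2, status: "shipped", date: "2024-01-15" },
  { _id: 3, status: "active", date: "2024-02-10" },
  { _id: 4, status: "cancelled", date: "2024-02-20" },
];
const partial = buildPartialIndex(orders, "date", { status: "active" });
console.log(partial);
console.log(queryCanUsePartialIndex({ status: "active", date: { $gte: "2024-02-01" } }, { status: "active" }));
console.log(queryCanUsePartialIndex({ date: { $gte: "2024-02-01" } }, { status: "active" }));
```

The index holds two entries instead of four. A query on date alone cannot use it, because such a query could also match documents that are not active.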

Disk and Storage Optimization

Disk Requirements in MongoDB

MongoDB stores data, indexes, logs, and journal files on disk. Ensuring efficient disk usage is crucial for performance.

Using Compression

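- Data Compression: MongoDB's WiredTiger storage engine compresses collection data (block compression) and indexes (prefix compression), reducing disk usage at some CPU cost.

- Enabling Compression on Collections: Snappy is the default block compressor. zlib, and zstd (available since MongoDB 4.2), compress more aggressively in exchange for more CPU.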

Example of setting up compression:

db.createCollection("example", { storageEngine: { wiredTiger: { configString: "block_compressor=zlib" } } });
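To get a feel for why block compression pays off, this Node.js sketch compresses a batch of hypothetical, repetitive order documents with the built-in zlib module (DEFLATE, the same algorithm family as WiredTiger's zlib option). It is only an illustration. WiredTiger compresses BSON pages rather than JSON text, and Node has no built-in snappy, so real ratios will differ.

```javascript
const zlib = require("zlib");

// Hypothetical documents with repeated field names and values, which is
// typical of real collections and is what block compression exploits.
const docs = Array.from({ length: 1000 }, (_, i) => ({
  orderId: i,
  status: i % 3 === 0 ? "shipped" : "pending",
  customerName: `customer-${i % 50}`,
  orderAmount: (i % 20) * 5,
}));

const raw = Buffer.from(JSON.stringify(docs));
const fast = zlib.deflateSync(raw, { level: 1 }); // less CPU, usually larger output
const small = zlib.deflateSync(raw, { level: 9 }); // more CPU, usually smaller output

console.log(`raw=${raw.length} B, level1=${fast.length} B, level9=${small.length} B`);
console.log(`ratio at level 9: ${(raw.length / small.length).toFixed(1)}x`);
```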

Archiving Cold Data

Move rarely accessed (cold) data to cost-effective storage solutions like cloud storage, keeping only active data on MongoDB.
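Archiving starts with deciding what counts as "cold". A minimal sketch, assuming each document carries a hypothetical lastAccessedAt date field: build a standard query filter for documents not touched within a cutoff window. The same filter object could be passed to find() or deleteMany() in mongosh. A local helper applies it to sample data to show the split.

```javascript
// Builds a MongoDB-style filter for cold documents. lastAccessedAt is a
// hypothetical field name; use whatever timestamp your schema records.
function coldDataFilter(now, maxAgeDays) {
  const cutoff = new Date(now.getTime() - maxAgeDays * 24 * 60 * 60 * 1000);
  return { lastAccessedAt: { $lt: cutoff } };
}

// Local stand-in for running that filter, to show how documents would split.
function partitionHotCold(docs, filter) {
  const cutoff = filter.lastAccessedAt.$lt;
  const hot = [];
  const cold = [];
  for (const doc of docs) {
    (doc.lastAccessedAt < cutoff ? cold : hot).push(doc);
  }
  return { hot, cold };
}

const now = new Date("2024-06-01T00:00:00Z");
const filter = coldDataFilter(now, 90);
const records = [
  { _id: 1, lastAccessedAt: new Date("2024-05-20T00:00:00Z") },
  { _id: 2, lastAccessedAt: new Date("2023-12-01T00:00:00Z") },
  { _id: 3, lastAccessedAt: new Date("2024-02-01T00:00:00Z") },
];
const { hot, cold } = partitionHotCold(records, filter);
console.log(filter, hot.map((d) => d._id), cold.map((d) => d._id));
```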

Managing Fragmentation with Compact Command

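- Compacting Collections: Use the compact command to release fragmented, unused space back to the operating system. In MongoDB versions before 4.4, compact blocks all operations on the database, so schedule it for a maintenance window.

Example of running compact:

db.runCommand({ compact: "orders" });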
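Before compacting, it helps to check how much space is actually reusable. In mongosh, db.orders.stats() includes a WiredTiger block-manager metric, "file bytes available for reuse", that can be compared with storageSize. This Node.js sketch works on an object of that shape. The 20% threshold is a hypothetical rule of thumb, and the sample numbers are made up.

```javascript
// Estimates whether compact is worthwhile from collStats-style output.
// minReusableFraction (20% by default) is a hypothetical threshold.
function compactCandidate(collStats, minReusableFraction = 0.2) {
  const reusable = collStats.wiredTiger["block-manager"]["file bytes available for reuse"];
  const fraction = collStats.storageSize > 0 ? reusable / collStats.storageSize : 0;
  return {
    reusableBytes: reusable,
    reusableFraction: fraction,
    worthCompacting: fraction >= minReusableFraction,
  };
}

// Hypothetical stats objects shaped like db.orders.stats() output.
const fragmented = {
  storageSize: 1000000,
  wiredTiger: { "block-manager": { "file bytes available for reuse": 400000 } },
};
const tidy = {
  storageSize: 1000000,
  wiredTiger: { "block-manager": { "file bytes available for reuse": 50000 } },
};
console.log(compactCandidate(fragmented), compactCandidate(tidy));
```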

Network Resource Management

Managing Network Bandwidth Usage

Efficient network usage is essential for scaling, especially with replication and sharding setups.

Minimizing Data Transfer with Compression

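- Enabling Network Compression: Enable wire-protocol compression (snappy, zlib, or zstd) with the compressors connection-string option on clients and the --networkMessageCompressors option on mongod. This reduces the data transferred between clients and servers and among replica set members and shards.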
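On the client side, network compression is requested through the compressors option of the connection string. The helper below is a hypothetical Node.js function that appends that option and validates the names. It adds the "/" that the connection-string format requires between the host list and "?" when no database is given. The host names are made up. Client and server then agree on a compressor that both support.

```javascript
const SUPPORTED_COMPRESSORS = ["snappy", "zlib", "zstd"];

// Hypothetical helper: appends the compressors option to a MongoDB connection string.
function withCompressors(uri, compressors) {
  if (!/^mongodb(\+srv)?:\/\//.test(uri)) throw new Error("not a MongoDB URI");
  for (const c of compressors) {
    if (!SUPPORTED_COMPRESSORS.includes(c)) throw new Error(`unsupported compressor: ${c}`);
  }
  const option = `compressors=${compressors.join(",")}`;
  if (uri.includes("?")) return `${uri}&${option}`;
  // Options must follow a "/" after the host list, even without a database name.
  const afterScheme = uri.slice(uri.indexOf("://") + 3);
  const base = afterScheme.includes("/") ? uri : `${uri}/`;
  return `${base}?${option}`;
}

console.log(withCompressors("mongodb://db1:27017,db2:27017", ["zstd", "snappy"]));
console.log(withCompressors("mongodb://db1:27017/shop?replicaSet=rs0", ["zlib"]));
```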

Efficient Data Retrieval with Projection

- Limiting Fields: Use projection to retrieve only necessary fields, reducing data transfer.

Example of using projection:

// Returns only customerName and orderAmount; _id is included unless excluded with _id: 0
db.orders.find({ status: "shipped" }, { customerName: 1, orderAmount: 1, _id: 0 });
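The savings from projection grow with document size. This Node.js model applies an inclusion projection to a single hypothetical order and compares payload sizes. It handles only top-level fields and, like MongoDB, keeps _id unless the projection sets _id: 0. JSON byte length is used as a rough proxy for BSON wire size.

```javascript
// Simplified inclusion projection: top-level fields only.
function applyInclusionProjection(doc, projection) {
  const out = {};
  if (projection._id !== 0 && "_id" in doc) out._id = doc._id; // _id is kept by default
  for (const [field, flag] of Object.entries(projection)) {
    if (field !== "_id" && (flag === 1 || flag === true) && field in doc) {
      out[field] = doc[field];
    }
  }
  return out;
}

// Hypothetical order document with large fields the query does not need.
const order = {
  _id: 101,
  status: "shipped",
  customerName: "Ada",
  orderAmount: 42.5,
  shippingAddress: { street: "1 Main St", city: "Springfield" },
  lineItems: Array.from({ length: 20 }, (_, i) => ({ sku: `SKU-${i}`, qty: 1 })),
};

const bytes = (value) => Buffer.byteLength(JSON.stringify(value));
const withId = applyInclusionProjection(order, { customerName: 1, orderAmount: 1 });
const withoutId = applyInclusionProjection(order, { customerName: 1, orderAmount: 1, _id: 0 });
console.log(withId, withoutId, bytes(order), bytes(withoutId));
```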

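Using Read Preferences and Write Concerns

- Adjusting Read Preferences: Route reads to secondary or nearby members (for example, secondaryPreferred or nearest) when the application can tolerate slightly stale data.

- Write Concerns: Choose the acknowledgment level each write needs. w: 1 waits only for the primary, while w: "majority" waits for a majority of members, which adds latency but protects the write against rollback.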

Advanced Optimization Techniques

Load Balancing and Horizontal Scaling

- Sharding: Use MongoDB’s sharding capabilities to distribute data and requests across multiple nodes.

- Replication: Use replica sets for high availability and to distribute read workloads.
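The choice of shard key decides whether load actually spreads out. In mongosh, a hashed shard key is declared with sh.shardCollection("shop.orders", { customerId: "hashed" }), where "shop.orders" and "customerId" are hypothetical names. The Node.js sketch below is a simplified model, not MongoDB's balancer. It compares where 1,000 monotonically increasing order IDs land under fixed ranges and under an MD5-based hash taken modulo the shard count. MongoDB actually assigns hash ranges to chunks and lets the balancer place them.

```javascript
const crypto = require("crypto");

// Simplified hashed placement: MD5 of the key, first 4 bytes, modulo shard count.
function hashedShard(key, numShards) {
  const digest = crypto.createHash("md5").update(String(key)).digest();
  return digest.readUInt32BE(0) % numShards;
}

// Simplified ranged placement: fixed upper bounds per shard;
// keys past the last bound go to the last shard.
function rangedShard(key, upperBounds) {
  const i = upperBounds.findIndex((bound) => key < bound);
  return i === -1 ? upperBounds.length : i;
}

// Hypothetical workload: new orders with monotonically increasing IDs.
const newIds = Array.from({ length: 1000 }, (_, i) => 5000 + i);
function countPerShard(assign, numShards) {
  const counts = new Array(numShards).fill(0);
  for (const id of newIds) counts[assign(id)]++;
  return counts;
}

const hashedCounts = countPerShard((id) => hashedShard(id, 3), 3);
const rangedCounts = countPerShard((id) => rangedShard(id, [1000, 2000]), 3);
console.log({ hashedCounts, rangedCounts });
```

With ranges, every new insert hits the last shard. Hashing spreads the same inserts across all three shards, at the cost of making range queries on the key scatter across shards.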

Query Profiling and Optimization

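- Using explain for Query Analysis: Identify slow queries and inspect their execution plans to optimize them.

- Indexing Strategy: Adjust indexing based on query profiles. The database profiler, enabled with db.setProfilingLevel(1, { slowms: 100 }), records operations slower than the threshold.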

Example of using explain:

db.orders.find({ status: "delivered" }).explain("executionStats");
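Reading explain output comes down to a few fields: the stages in the winning plan, nReturned, totalKeysExamined, and totalDocsExamined. This Node.js sketch summarizes an explain("executionStats") result. It checks for COLLSCAN and computes documents examined per document returned, where a ratio near 1 indicates a selective index. Some newer servers wrap the winning plan in a queryPlan field, so the sketch checks both shapes. The two sample outputs are hypothetical, not measured.

```javascript
// Collects stage names from a plan tree; children sit under inputStage or inputStages.
function collectStages(plan, out = []) {
  if (!plan || typeof plan !== "object") return out;
  if (plan.stage) out.push(plan.stage);
  if (plan.inputStage) collectStages(plan.inputStage, out);
  for (const child of plan.inputStages || []) collectStages(child, out);
  return out;
}

function summarizeExplain(explainOutput) {
  const wp = explainOutput.queryPlanner.winningPlan;
  const stages = collectStages(wp.queryPlan || wp);
  const { nReturned, totalKeysExamined, totalDocsExamined, executionTimeMillis } =
    explainOutput.executionStats;
  return {
    stages,
    usesCollScan: stages.includes("COLLSCAN"),
    usesIndex: stages.includes("IXSCAN"),
    docsExaminedPerReturned: nReturned > 0 ? totalDocsExamined / nReturned : totalDocsExamined,
    totalKeysExamined,
    executionTimeMillis,
  };
}

// Hypothetical explain outputs for db.orders.find({ status: "delivered" }).
const beforeIndex = {
  queryPlanner: { winningPlan: { stage: "COLLSCAN" } },
  executionStats: { nReturned: 120, totalKeysExamined: 0, totalDocsExamined: 50000, executionTimeMillis: 48 },
};
const afterIndex = {
  queryPlanner: { winningPlan: { stage: "FETCH", inputStage: { stage: "IXSCAN", indexName: "status_1" } } },
  executionStats: { nReturned: 120, totalKeysExamined: 120, totalDocsExamined: 120, executionTimeMillis: 1 },
};
console.log(summarizeExplain(beforeIndex), summarizeExplain(afterIndex));
```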

Connection Pooling

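- Optimizing Connections: Reuse connections through a pool instead of opening one per request. MongoDB drivers maintain a pool per client, so create one client per application process and tune options such as maxPoolSize to match the expected concurrency.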
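Drivers handle pooling for you, but the idea is easy to see in miniature. This Node.js sketch is a conceptual pool that reuses idle connections and caps how many exist. It is not any driver's implementation. createConnection is a hypothetical factory, and a real pool would make callers wait rather than return null.

```javascript
// Conceptual connection pool: reuse idle connections, never exceed maxSize.
class SimplePool {
  constructor(createConnection, maxSize) {
    this.createConnection = createConnection; // hypothetical factory
    this.maxSize = maxSize;
    this.idle = [];
    this.inUse = new Set();
    this.created = 0;
  }

  acquire() {
    let conn = this.idle.pop(); // prefer an existing idle connection
    if (!conn) {
      if (this.created >= this.maxSize) return null; // a real pool would queue the caller
      conn = this.createConnection(this.created);
      this.created++;
    }
    this.inUse.add(conn);
    return conn;
  }

  release(conn) {
    if (!this.inUse.delete(conn)) throw new Error("connection not from this pool");
    this.idle.push(conn);
  }
}

const pool = new SimplePool((id) => ({ id }), 2);
const a = pool.acquire();
const b = pool.acquire();
const c = pool.acquire(); // null: the pool is at maxSize
pool.release(a);
const d = pool.acquire(); // reuses a instead of opening a new connection
console.log(c, d === a, b.id, pool.created);
```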

Monitoring and Maintenance

Using MongoDB Monitoring Tools

- MongoDB Atlas Monitoring: Offers insights on memory, disk usage, CPU, and network metrics.

- Setting Up Alerts: Configure alerts based on resource usage thresholds.
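In Atlas, alerts are configured in the UI or API. The underlying idea is a comparison of sampled metrics against thresholds. This Node.js sketch shows only that comparison. The metric names, threshold values, and sample readings are all hypothetical.

```javascript
// Hypothetical thresholds; tune them to your workload and hardware.
const thresholds = {
  cpuPercent: 80,
  cacheUsedPercent: 95,
  diskUsedPercent: 85,
  connections: 500,
};

// Returns a message for every metric at or above its threshold.
function evaluateAlerts(metrics, limits) {
  return Object.entries(limits)
    .filter(([name, limit]) => typeof metrics[name] === "number" && metrics[name] >= limit)
    .map(([name, limit]) => `${name}=${metrics[name]} (threshold ${limit})`);
}

// Hypothetical sample of current readings.
const sample = { cpuPercent: 91, cacheUsedPercent: 70, diskUsedPercent: 88, connections: 120 };
console.log(evaluateAlerts(sample, thresholds));
```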

Profiling Tools for Real-Time Analysis

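- Using mongostat and mongotop: mongostat reports server-wide counters at a regular interval (operations by type, cache usage, memory, network traffic, and connections). mongotop shows how much time mongod spends reading and writing each collection. For CPU and disk I/O detail, pair them with OS tools such as top and iostat.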

Automated Resource Scaling in the Cloud

- MongoDB Atlas Autoscaling: Use MongoDB Atlas to dynamically adjust resources as load changes.

Resource Management Best Practices

Schema Design for Efficiency

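- Field Size Optimization: Keep field names short, because BSON stores every field name in every document, and avoid unnecessary nested documents.

- Referencing vs. Embedding: Embed data that is read together. Switch to references when embedded data is large or grows without bound, so that frequently read documents stay small.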
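Field-name overhead is easy to quantify. This Node.js sketch computes the BSON size of a flat document containing only strings and numbers. It follows the BSON layout: a 4-byte length, elements made of a type byte plus the field name as a C string plus the value, and a trailing zero byte. It assumes numbers are stored as 8-byte doubles, which is mongosh's default. The two sample documents are hypothetical and differ only in field-name length. WiredTiger compression reduces this overhead on disk, but not in the in-memory cache or on the wire.

```javascript
// BSON size of a flat document with string or number values only.
// Assumption: numbers are encoded as BSON doubles (8 bytes).
function flatBsonSize(doc) {
  let size = 4 + 1; // int32 total length + trailing 0x00
  for (const [name, value] of Object.entries(doc)) {
    size += 1 + Buffer.byteLength(name) + 1; // type byte + field name + NUL
    if (typeof value === "string") {
      size += 4 + Buffer.byteLength(value) + 1; // int32 length + UTF-8 bytes + NUL
    } else if (typeof value === "number") {
      size += 8; // double
    } else {
      throw new TypeError(`unsupported value type for field ${name}`);
    }
  }
  return size;
}

const longNames = { customerFullName: "Ada Lovelace", customerEmailAddress: "ada@example.com", totalOrderAmount: 42.5 };
const shortNames = { name: "Ada Lovelace", email: "ada@example.com", total: 42.5 };
const saved = flatBsonSize(longNames) - flatBsonSize(shortNames);
console.log(`${saved} bytes saved per document, about ${(saved * 1e6 / 1e6).toFixed(0)} MB per million documents`);
```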

Efficient Data Retention and Cleanup

- Using TTL Indexes: Automatically delete expired data to save storage space.

- Archival Strategy: Regularly archive old data to keep the active dataset lean.
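A TTL index is created with an expireAfterSeconds option, for example db.sessions.createIndex({ createdAt: 1 }, { expireAfterSeconds: 3600 }), where "sessions" and "createdAt" are hypothetical names. This Node.js sketch models which documents such an index would treat as expired at a given time. Documents without the field, or whose field is not a date, never expire. Arrays of dates are not modeled here. Actual deletion is done by a background task that runs about every 60 seconds, so expired documents can linger briefly.

```javascript
// Models TTL expiry for a single-field TTL index. Non-Date values never expire;
// arrays of dates (which real TTL indexes handle) are not modeled.
function isExpired(doc, field, expireAfterSeconds, now) {
  const value = doc[field];
  if (!(value instanceof Date)) return false;
  return now.getTime() - value.getTime() >= expireAfterSeconds * 1000;
}

function expiredDocuments(docs, field, expireAfterSeconds, now) {
  return docs.filter((doc) => isExpired(doc, field, expireAfterSeconds, now));
}

const now = new Date("2024-06-01T12:00:00Z");
const sessions = [
  { _id: "a", createdAt: new Date("2024-06-01T10:00:00Z") }, // 2 hours old
  { _id: "b", createdAt: new Date("2024-06-01T11:30:00Z") }, // 30 minutes old
  { _id: "c" }, // no createdAt field: never expires
  { _id: "d", createdAt: "2024-06-01T09:00:00Z" }, // a string, not a Date: never expires
];
console.log(expiredDocuments(sessions, "createdAt", 3600, now).map((d) => d._id));
```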

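Vertical partitioning is another way to keep frequently read documents small. Consider a user document that mixes small, frequently read fields with large, rarely read ones:

{
  "userId": 123,
  "name": "John Doe",
  "email": "john@example.com",
  "profilePicture": "...large binary data...",
  "activityLog": [...large array of activities...]
}

You could vertically partition this data into two collections:

- Store basic user information (userId, name, email) in one collection.

- Store large fields such as profilePicture and activityLog in a separate collection, keyed by userId.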
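The split itself is a simple field-by-field routing step. This Node.js sketch divides the user document into a small core document and a details document that share userId. The field names follow the example document. The target collections, such as users and userDetails, and the stand-in values for the large fields are hypothetical.

```javascript
// Fields that are read often and stay small; everything else goes to "details".
const CORE_FIELDS = ["userId", "name", "email"];

function splitUserDocument(user) {
  const core = {};
  const details = { userId: user.userId }; // shared key for joining the halves again
  for (const [field, value] of Object.entries(user)) {
    if (CORE_FIELDS.includes(field)) core[field] = value;
    else details[field] = value;
  }
  return { core, details };
}

const user = {
  userId: 123,
  name: "John Doe",
  email: "john@example.com",
  profilePicture: "x".repeat(50000), // stand-in for large binary data
  activityLog: Array.from({ length: 500 }, (_, i) => ({ action: "login", seq: i })),
};
const { core, details } = splitUserDocument(user);
console.log(Object.keys(core), Object.keys(details), JSON.stringify(core).length);
```

Queries that only need a name or email now read a document of under 100 bytes instead of one of tens of kilobytes.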

Effective resource management and optimization in MongoDB enable high performance, scalability, and cost savings. By applying these strategies (monitoring CPU and memory, optimizing indexes, compressing data, and configuring storage settings), administrators can keep MongoDB deployments efficient and responsive to application demands. Happy coding! ❤️