Optimizing Aggregation Pipelines for Performance

Aggregation in MongoDB allows for efficient and complex data processing. The aggregation pipeline, a sequence of stages where each stage processes and passes the data to the next, is MongoDB’s powerful data analysis tool.

Introduction to Aggregation in MongoDB

What is Aggregation?

Aggregation is a way of processing data records and returning computed results. It is particularly useful for operations like calculating sums, finding averages, or filtering records.

What is an Aggregation Pipeline?

An aggregation pipeline is a sequence of stages where each stage transforms the documents it receives, passing the transformed results to the next stage.

Example Pipeline Syntax:

db.collection.aggregate([

{ stage1 },

{ stage2 },

...

])

Each stage performs a specific operation, like $match, $group, or $sort. By chaining stages, we can perform complex data manipulations efficiently.

Key Aggregation Pipeline Stages

Common Pipeline Stages

$match: Filters documents.$group: Groups documents by a specified key and performs operations like sum or average.$project: Reshapes each document, including or excluding fields.$sort: Orders documents based on specified fields.$limit: Limits the number of documents in the output.$lookup: Performs a join with another collection.

Order of Stages for Optimization

The order of stages can impact performance significantly. For example:

- Place

$matchEarly: Filter data as soon as possible. - Use

$projectAfter$match: Reduce data size by limiting fields.

Example of Optimized Order:

db.sales.aggregate([

{ $match: { status: "complete" } },

{ $project: { _id: 0, customer: 1, total: 1 } },

{ $group: { _id: "$customer", totalSpent: { $sum: "$total" } } },

{ $sort: { totalSpent: -1 } },

{ $limit: 10 }

])

Strategies for Pipeline Optimization

Filtering with $match Early

The $match stage limits the number of documents that pass through the pipeline, which improves performance.

Example:

db.sales.aggregate([

{ $match: { year: 2024, status: "completed" } },

{ $group: { _id: "$customer", totalSpent: { $sum: "$amount" } } }

])

By filtering on year and status early, fewer documents enter the pipeline, making it faster.

Output Explanation: The filtered data is grouped by the customer, reducing computation load and optimizing resource use.

Projecting Only Necessary Fields with $project

Use $project to include only necessary fields, which minimizes data transfer and memory usage.

Example:

db.sales.aggregate([

{ $project: { customer: 1, amount: 1 } },

{ $group: { _id: "$customer", totalSpent: { $sum: "$amount" } } }

])

Output Explanation: By projecting only customer and amount, unnecessary fields are excluded, reducing memory and CPU consumption.

Limiting the Results with $limit Early

Use $limit in combination with $sort to optimize pipelines with sorted data. Limiting the data early reduces processing in subsequent stages.

Example:

db.sales.aggregate([

{ $sort: { amount: -1 } },

{ $limit: 5 },

{ $project: { customer: 1, amount: 1 } }

])

Output Explanation: This fetches only the top 5 largest amounts, reducing the size of data passed to later stages.

Optimizing $lookup for Joins

Using Local and Foreign Indexes in $lookup

Ensure indexes exist on both the local and foreign keys to speed up joins.

Example:

db.orders.aggregate([

{ $lookup: {

from: "customers",

localField: "customerId",

foreignField: "_id",

as: "customerDetails"

}},

{ $unwind: "$customerDetails" }

])

Explanation: Indexes on customerId in orders and _id in customers make this join operation faster.

Limiting Data Before a $lookup

Reduce the dataset before a $lookup to minimize data fetched from the foreign collection.

Example:

db.orders.aggregate([

{ $match: { status: "delivered" } },

{ $lookup: {

from: "customers",

localField: "customerId",

foreignField: "_id",

as: "customerDetails"

}},

{ $unwind: "$customerDetails" }

])

Explanation: The $match stage filters out non-delivered orders, so only the relevant data is joined.

Advanced Optimization Techniques

Using $merge for Reusable Results

Use $merge to store intermediate results in a collection for repeated use.

Example:

db.sales.aggregate([

{ $group: { _id: "$customerId", totalSpent: { $sum: "$amount" } } },

{ $merge: { into: "customerTotals", whenMatched: "replace" } }

])

Output Explanation: Stores the result in customerTotals collection, making it reusable without recomputation.

Parallel Aggregation in Sharded Clusters

In a sharded cluster, MongoDB can parallelize operations, distributing workload across shards for better performance.

Practical Examples of Optimized Aggregation Pipelines

Scenario: Calculating Total Sales Per Region

Calculate total sales for each region in a dataset of millions of transactions, optimized for performance.

db.transactions.aggregate([

{ $match: { year: 2024 } },

{ $group: { _id: "$region", totalSales: { $sum: "$amount" } } },

{ $sort: { totalSales: -1 } }

])

Explanation: By filtering with $match first and grouping by region, MongoDB efficiently processes only relevant data for 2024.

Scenario: Finding Top Customers

Retrieve the top 10 customers by purchase amount, using indexed fields to speed up the aggregation.

db.sales.aggregate([

{ $group: { _id: "$customerId", totalSpent: { $sum: "$amount" } } },

{ $sort: { totalSpent: -1 } },

{ $limit: 10 }

])

Monitoring and Debugging Aggregation Pipelines

Using the Aggregation Pipeline Optimizer

MongoDB’s aggregation pipeline optimizer automatically optimizes certain stages. Use the .explain("executionStats") method to inspect execution details.

Example:

db.sales.aggregate([ /* stages */ ]).explain("executionStats")

Identifying Bottlenecks

Check the executionTimeMillis value and adjust stages based on where time is most spent.

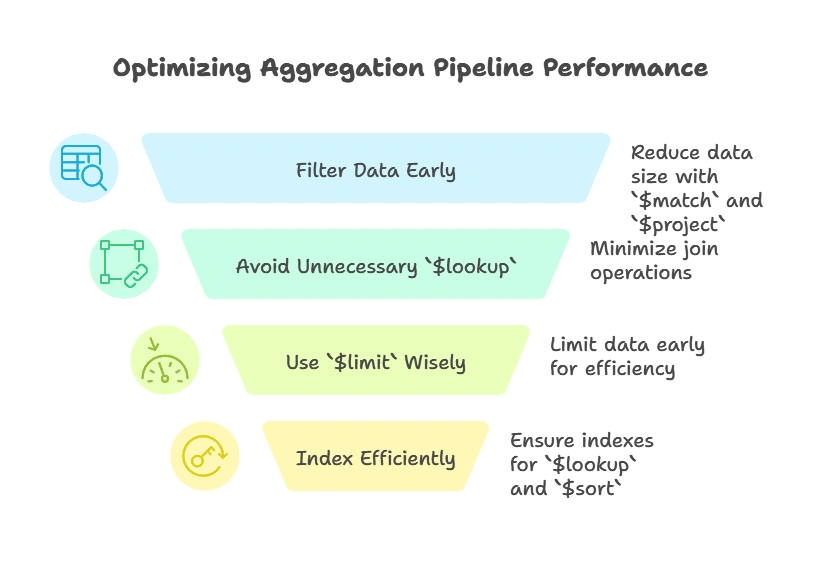

Best Practices for Aggregation Pipeline Optimization

- Filter Data Early: Use

$matchand$projectearly in the pipeline to minimize data size. - Avoid Unnecessary

$lookupOperations: Only use joins when essential. - Use

$limitWisely: Limit data early to optimize performance in subsequent stages. - Index Efficiently: Ensure indexes are in place for

$lookupand$sortfields.

Optimizing MongoDB’s aggregation pipelines is essential for handling large datasets effectively. By strategically placing stages like $match and $project early, reducing data size with $limit, and ensuring indexes are in place, MongoDB’s aggregation pipeline can deliver impressive performance for complex queries. Happy Coding!❤️