Integrating MongoDB with Cloud Storage Solutions

This chapter explores the different ways MongoDB can work with cloud storage solutions, detailing how to leverage cloud storage for backup, scaling, and enhanced data management. We’ll cover setup, best practices, and use cases to make cloud storage integration smooth and effective.

Introduction to MongoDB and Cloud Storage

What is Cloud Storage?

Cloud storage is a data storage model where digital data is stored in logical pools across multiple servers managed by a cloud provider. This offers benefits like scalability, flexibility, and remote access.

Why Integrate MongoDB with Cloud Storage?

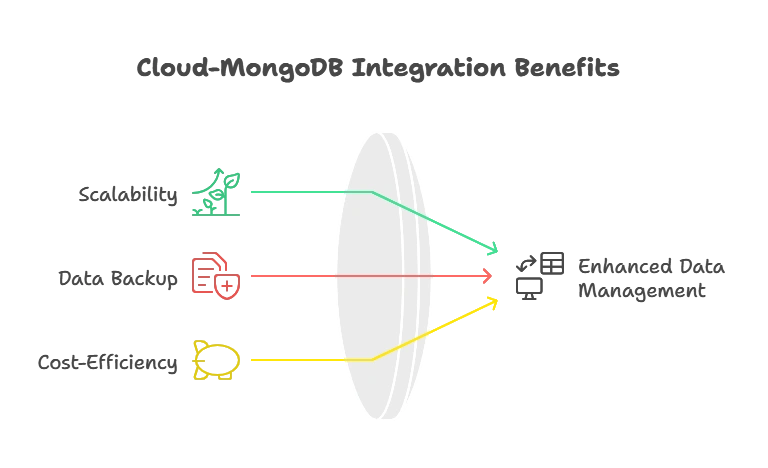

Using MongoDB with cloud storage combines the power of a NoSQL database with the flexibility of cloud resources. The main advantages are:

- Enhanced Scalability: Easily store large datasets with minimal infrastructure management.

- Data Backup and Disaster Recovery: Protect data with secure, off-site backups.

- Cost-Efficiency: Avoid hardware expenses and scale storage on demand.

Major Cloud Storage Providers for MongoDB

Because the integration happens in application code, any object store can hold files referenced from MongoDB. This chapter covers the three major providers:

- Amazon S3 (Simple Storage Service)

- Azure Blob Storage

- Google Cloud Storage (GCS)

Use Cases for MongoDB and Cloud Storage Integration

Use Case 1: Backup and Restore

Automate backups to a secure, scalable storage location to ensure data is recoverable in case of database corruption or accidental deletion.

Use Case 2: Archiving Large Files

Store large, rarely accessed files in cloud storage while keeping reference data in MongoDB. This is often used for media files, logs, or records.
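To make the split concrete, here is a minimal Python sketch of the reference record an application might store in MongoDB after uploading a file. The field names (provider, bucket, key, sha256, archivedAt) are hypothetical choices, not a required schema.

```python
import hashlib
import mimetypes
import os
from datetime import datetime, timezone


def build_file_reference(path, bucket, key, provider="s3"):
    """Build the MongoDB document that points at a file archived in cloud storage.

    The bytes live in the bucket; MongoDB keeps only this small record.
    All field names are illustrative, not a fixed schema.
    """
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Hash in 1 MiB chunks so large media files never sit fully in memory
        for chunk in iter(lambda: f.read(1024 * 1024), b""):
            digest.update(chunk)

    content_type, _ = mimetypes.guess_type(path)
    return {
        "provider": provider,
        "bucket": bucket,
        "key": key,
        "fileName": os.path.basename(path),
        "size": os.path.getsize(path),
        "contentType": content_type or "application/octet-stream",
        "sha256": digest.hexdigest(),
        "archivedAt": datetime.now(timezone.utc),
    }
```

After the upload succeeds, the record can be saved with something like db.files.insert_one(build_file_reference(...)). Keeping a checksum alongside the key lets you verify a file when you later download it again.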

Use Case 3: Large Data Sets for Analytics

For organizations processing large datasets for analytics, moving bulk or historical data out to cloud storage keeps MongoDB's working set small, so analytical jobs can scan the exported files without competing with the database's day-to-day queries.
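One way to offload such data is to export it as newline-delimited JSON (JSONL) files grouped by day. The Python sketch below assumes each document carries a datetime in a hypothetical createdAt field and uses a Hive-style "dt=YYYY-MM-DD" key layout, a common convention that engines such as Spark or Athena can use to skip irrelevant days; neither choice is required.

```python
import json
from collections import defaultdict


def partition_for_export(docs, prefix="events"):
    """Group documents by day into newline-delimited JSON (JSONL) payloads.

    Returns {object_key: bytes}. Assumes each document has a datetime in a
    hypothetical "createdAt" field; the "dt=YYYY-MM-DD" layout is one common
    convention, not a requirement.
    """
    by_day = defaultdict(list)
    for doc in docs:
        by_day[doc["createdAt"].strftime("%Y-%m-%d")].append(doc)

    payloads = {}
    for day in sorted(by_day):
        key = f"{prefix}/dt={day}/part-0000.jsonl"
        # default=str turns datetimes (and ObjectIds) into strings
        lines = [json.dumps(doc, default=str) for doc in by_day[day]]
        payloads[key] = ("\n".join(lines) + "\n").encode("utf-8")
    return payloads
```

Each payload can then be uploaded as one object with the provider's SDK; whether the exported documents are afterwards deleted from MongoDB is a separate retention decision.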

Integrating MongoDB with Amazon S3

Introduction to Amazon S3 and MongoDB

Amazon S3 is an object storage service by AWS that’s ideal for storing large amounts of unstructured data. Integrating MongoDB with S3 enables scalable, cost-effective storage for objects (like files) associated with MongoDB documents.

Setting Up Amazon S3 for MongoDB Integration

- Step 1: Create an AWS account and set up an S3 bucket.

- Step 2: Create an IAM role (or user) for your application with permissions to read and write objects in that bucket. MongoDB itself never connects to S3; your application does.

- Step 3: In your application code, upload and retrieve files in S3 and store a reference to each file in MongoDB.
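For Step 2, a least-privilege policy attached to that role might look like the following. This Python sketch only builds and prints the JSON policy document; the bucket name and optional key prefix are placeholders. It grants s3:PutObject and s3:GetObject, which is enough for uploading and downloading objects; listing or deleting backups would additionally need s3:ListBucket (granted on the bucket ARN itself) and s3:DeleteObject.

```python
import json


def s3_app_policy(bucket, prefix=""):
    """Least-privilege IAM policy letting an application read and write objects.

    Only objects under `prefix` in `bucket` are covered; bucket-level actions
    such as s3:ListBucket are deliberately left out.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            }
        ],
    }


# Print the policy so it can be pasted into the IAM console or saved to a file
print(json.dumps(s3_app_policy("your-s3-bucket"), indent=2))
```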

Code Example: Uploading Files to S3 with MongoDB

Here’s a code example in Node.js, showing how to upload a file to S3 and store its reference in MongoDB:

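```javascript
// Uses the v2 "aws-sdk" package; v3 (@aws-sdk/client-s3) is the current major version
const fs = require("fs");
const AWS = require("aws-sdk");
const mongoose = require("mongoose");

// Credentials and region come from the environment or the attached IAM role
const s3 = new AWS.S3();

const fileSchema = new mongoose.Schema({
  fileName: String,
  s3Url: String,
});

const File = mongoose.model("File", fileSchema);

// Function to upload file to S3 and save reference in MongoDB
async function uploadFile(filePath, fileName) {
  const params = {
    Bucket: "your-s3-bucket",
    Key: fileName,
    Body: fs.createReadStream(filePath), // stream instead of loading the whole file into memory
  };

  const s3Response = await s3.upload(params).promise();

  const newFile = new File({
    fileName: fileName,
    s3Url: s3Response.Location,
  });
  await newFile.save();

  console.log("File uploaded successfully to S3 and MongoDB!");
}

async function main() {
  // Mongoose must be connected before save() can complete
  await mongoose.connect("mongodb://localhost:27017/file_storage");
  try {
    await uploadFile("path/to/file.jpg", "file.jpg");
  } finally {
    await mongoose.disconnect();
  }
}

main().catch(console.error);
```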

Explanation

- AWS SDK: Used to handle S3 actions.

- Mongoose Schema: Stores file name and S3 URL in MongoDB, creating a reference to the uploaded file.

- Upload Function: Handles file reading, uploading to S3, and saving the file reference in MongoDB.

Integrating MongoDB with Azure Blob Storage

Introduction to Azure Blob Storage

Azure Blob Storage is Microsoft’s object storage solution for the cloud, optimized for storing large amounts of unstructured data.

Setting Up Azure Blob Storage for MongoDB

- Step 1: Set up an Azure Storage Account and create a Blob container.

- Step 2: Configure access keys or Shared Access Signatures (SAS) for secure integration.

- Step 3: Use MongoDB to manage metadata, while files are stored as blobs in Azure.

Code Example: Storing and Accessing Data in Azure Blob with MongoDB

Here’s a Python example using the Azure Storage Blob SDK (azure-storage-blob) to upload a file to Azure Blob Storage and store its metadata in MongoDB:

```python
from azure.storage.blob import BlobServiceClient
from pymongo import MongoClient

# Initialize MongoDB and Azure Blob Service
client = MongoClient("mongodb://localhost:27017/")
db = client["file_storage"]
blob_service_client = BlobServiceClient.from_connection_string("your_connection_string")

def upload_to_azure(file_path, blob_name):
    # Upload to Azure Blob Storage; upload_blob returns a BlobClient for the new blob
    container_client = blob_service_client.get_container_client("my-container")
    with open(file_path, "rb") as data:
        blob_client = container_client.upload_blob(name=blob_name, data=data)

    # Save metadata in MongoDB.
    # BlobClient.url includes the SAS token if the service client was built with one;
    # strip the query string before storing it in that case.
    file_record = {
        "fileName": blob_name,
        "blobUrl": blob_client.url,
    }
    db.files.insert_one(file_record)
    print("File uploaded and metadata saved.")

upload_to_azure("path/to/file.txt", "file.txt")
```

Integrating MongoDB with Google Cloud Storage

Introduction to Google Cloud Storage (GCS)

GCS is a scalable, secure, and durable object storage service offered by Google Cloud. MongoDB can work with GCS for cost-effective storage of large files.

Setting Up Google Cloud Storage with MongoDB

- Step 1: Create a GCS bucket and configure service account permissions.

- Step 2: Use MongoDB to manage metadata, allowing data retrieval when needed from GCS.
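Retrieval is the reverse trip: read the record from MongoDB, then fetch the object. Assuming records store a gs://bucket/object URI, as the upload example in this section does, a small helper can split it back into bucket and object name. This is a minimal sketch; it avoids urllib's URL parser because GCS object names may legally contain "#" or "?", which a URL parser would treat as fragment or query.

```python
def parse_gcs_uri(uri):
    """Split a gs://bucket/object URI (as stored in MongoDB) into its parts."""
    scheme = "gs://"
    if not uri.startswith(scheme):
        raise ValueError(f"not a gs:// URI: {uri!r}")
    # partition keeps everything after the first "/" as the object name
    bucket, _, object_name = uri[len(scheme):].partition("/")
    if not bucket or not object_name:
        raise ValueError(f"missing bucket or object name: {uri!r}")
    return bucket, object_name


# Retrieval with google-cloud-storage, given a record saved by the upload code:
#   bucket_name, blob_name = parse_gcs_uri(record["gcsUrl"])
#   storage_client.bucket(bucket_name).blob(blob_name).download_to_filename("local-copy")
```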

Code Example: Using GCS with MongoDB in Python

```python
from google.cloud import storage
from pymongo import MongoClient

# Initialize MongoDB and Google Cloud Storage
mongo_client = MongoClient("mongodb://localhost:27017/")
db = mongo_client["file_storage"]
storage_client = storage.Client()

def upload_to_gcs(file_path, blob_name):
    bucket = storage_client.get_bucket("your-gcs-bucket")
    blob = bucket.blob(blob_name)

    # Upload file to GCS
    blob.upload_from_filename(file_path)

    # Save metadata in MongoDB
    file_record = {
        "fileName": blob_name,
        "gcsUrl": f"gs://your-gcs-bucket/{blob_name}",
    }
    db.files.insert_one(file_record)
    print("File uploaded to GCS and metadata saved.")

upload_to_gcs("path/to/file.txt", "file.txt")
```

Syncing MongoDB Data with Cloud Storage Solutions

Backup Strategies for Data Syncing

- Incremental Backups: Frequently back up only changed data, reducing storage costs.

- Full Backups: Periodically create full backups in cloud storage for disaster recovery.
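The incremental approach in the first bullet needs a way to find "changed data". A minimal Python sketch, assuming the application stamps every write with a hypothetical updatedAt field (MongoDB does not maintain one by itself, though Mongoose's timestamps schema option does), is a query on that timestamp. Deleted documents leave nothing to match, so this does not replace the periodic full backups in the second bullet.

```python
def incremental_filter(last_backup_at):
    """MongoDB query selecting documents modified since the previous backup.

    Assumes the application sets a hypothetical "updatedAt" field on every
    insert and update; MongoDB does not maintain such a field by itself.
    """
    return {"updatedAt": {"$gt": last_backup_at}}


def select_changed(docs, last_backup_at):
    """In-memory equivalent of incremental_filter, useful for unit tests."""
    return [
        doc for doc in docs
        if doc.get("updatedAt") is not None and doc["updatedAt"] > last_backup_at
    ]
```

With pymongo, collection.find(incremental_filter(last_run)) returns the changed documents, which can then be exported and uploaded like any other backup file.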

Code Example: Exporting MongoDB Data to Cloud Storage

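Here’s a Node.js example that uses mongodump to write the database to a single gzipped BSON archive (which mongorestore can restore later) and then uploads that archive to S3:

```javascript
const { execFile } = require("child_process");
const fs = require("fs");
const { promisify } = require("util");
const AWS = require("aws-sdk");

const execFileAsync = promisify(execFile);

async function backupAndUploadToS3() {
  const archive = "backup.gz";

  // Dump the database to one gzipped archive (requires mongodump on the PATH)
  await execFileAsync("mongodump", [`--archive=${archive}`, "--gzip", "--db=myDatabase"]);

  // Timestamped key so each run does not overwrite the previous backup
  const stamp = new Date().toISOString().replace(/:/g, "-");
  const key = `backups/myDatabase/${stamp}.gz`;

  const s3 = new AWS.S3();
  await s3.upload({
    Bucket: "your-s3-bucket",
    Key: key,
    Body: fs.createReadStream(archive), // stream the archive instead of buffering it
  }).promise();

  console.log(`Database backup uploaded to S3 as ${key}`);
}

backupAndUploadToS3().catch((error) => {
  console.error("Backup failed:", error);
  process.exitCode = 1;
});
```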
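If each run stores its archive under a timestamped key, old backups accumulate and keep costing money. A minimal Python sketch of a count-based retention rule follows; it assumes a hypothetical naming scheme such as backups/myDatabase/2024-05-01T03-00-00Z.gz, where the zero-padded UTC timestamp makes alphabetical order match chronological order.

```python
def backups_to_delete(keys, keep=7):
    """Return the backup keys to delete so that only the newest `keep` remain.

    Assumes a hypothetical naming scheme such as
    backups/myDatabase/2024-05-01T03-00-00Z.gz, where the zero-padded UTC
    timestamp makes lexical order match chronological order.
    """
    if keep < 0:
        raise ValueError("keep must be >= 0")
    newest_first = sorted(keys, reverse=True)
    return newest_first[keep:]
```

With boto3, the keys could come from list_objects_v2(Bucket=..., Prefix="backups/myDatabase/") (which returns at most 1,000 keys per call, so larger listings need pagination) and each result passed to delete_object. If an age-based rule is enough, an S3 lifecycle expiration rule achieves the same without any code.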

Automating MongoDB and Cloud Storage Integration

Using Cloud Functions for Automation

Serverless functions such as AWS Lambda, Google Cloud Functions, and Azure Functions can run MongoDB backups, file archiving, and similar jobs automatically, either on a schedule or in response to events.

Code Example: Setting Up an Automated Backup Using AWS Lambda

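- Set up an AWS Lambda function with network access to your MongoDB deployment and an execution role that can write to the backup bucket.

- Schedule the function (for example, with an Amazon EventBridge rule such as rate(1 day)) to run the backup and upload the result to S3 periodically.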
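A minimal Python sketch of such a handler follows. It swaps mongodump for plain pymongo reads, since Lambda's runtimes do not include the MongoDB Database Tools, so it only suits databases small enough to hold in memory and to export within Lambda's 15-minute maximum timeout. MONGODB_URI, DB_NAME, and BACKUP_BUCKET are hypothetical environment variable names, and pymongo has to be bundled with the deployment package.

```python
import os
from datetime import datetime, timezone


def export_database(db_name):
    """Dump every collection of db_name to Extended JSON bytes.

    Swapped-in technique: pymongo reads instead of mongodump. Loads everything
    into memory, so it only suits small databases.
    """
    from bson import json_util  # installed together with pymongo
    from pymongo import MongoClient

    with MongoClient(os.environ["MONGODB_URI"]) as client:  # hypothetical env var
        db = client[db_name]
        snapshot = {name: list(db[name].find()) for name in db.list_collection_names()}
    return json_util.dumps(snapshot).encode("utf-8")


def lambda_handler(event, context, export=export_database, s3=None):
    """Lambda entry point; AWS invokes it as lambda_handler(event, context)."""
    if s3 is None:
        import boto3  # preinstalled in AWS-provided Python runtimes
        s3 = boto3.client("s3")

    db_name = os.environ.get("DB_NAME", "myDatabase")          # hypothetical env var
    bucket = os.environ.get("BACKUP_BUCKET", "your-s3-bucket")  # hypothetical env var
    stamp = datetime.now(timezone.utc).strftime("%Y-%m-%dT%H-%M-%SZ")
    key = f"backups/{db_name}/{stamp}.json"

    s3.put_object(Bucket=bucket, Key=key, Body=export(db_name))
    return {"bucket": bucket, "key": key}
```

The optional export and s3 parameters exist only so the handler can be exercised locally with stand-ins; Lambda itself passes just event and context. To restore, the file can be read back with bson.json_util.loads and each collection's documents reinserted.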

Large documents raise a related design question inside MongoDB itself. Consider a user document that mixes small, frequently read fields with large ones:

```json
{
  "userId": 123,
  "name": "John Doe",
  "email": "john@example.com",
  "profilePicture": "...large binary data...",
  "activityLog": [...large array of activities...]
}
```

You could vertically partition this data into two collections:

- Store basic user information (userId, name, email) in one collection.

- Store large fields like profilePicture and activityLog in a separate collection.
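Here is a minimal Python sketch of that split, assuming the field names from the example document. Both halves keep userId so they can be joined again; the users and user_details collection names in the usage note are hypothetical.

```python
def split_user(doc, heavy_fields=("profilePicture", "activityLog")):
    """Split one user document into a small core part and a heavy part.

    Both halves carry userId so they can be joined again later.
    """
    core = {k: v for k, v in doc.items() if k not in heavy_fields}
    heavy = {"userId": doc["userId"]}
    heavy.update({k: doc[k] for k in heavy_fields if k in doc})
    return core, heavy
```

The core half would go into users and the heavy half into user_details; when both are needed, a $lookup stage with localField and foreignField set to userId brings them back together.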

Integrating MongoDB with cloud storage solutions enhances scalability, backup strategies, and data archiving, making MongoDB suitable for large datasets and cloud-native applications. This integration provides organizations with flexibility, resilience, and a robust foundation for managing growing data needs. Happy coding! ❤️