Configuring Complex Replica Set Topologies in MongoDB

Configuring complex replica set topologies in MongoDB enables high availability, fault tolerance, and scaling for diverse needs. This chapter will walk you through everything from basic replica sets to advanced configurations with examples, detailed explanations, and best practices. By the end, you'll have a solid understanding of how to set up, configure, and manage complex replica sets tailored to your requirements.

Introduction to MongoDB Replica Sets

Overview of Replica Sets

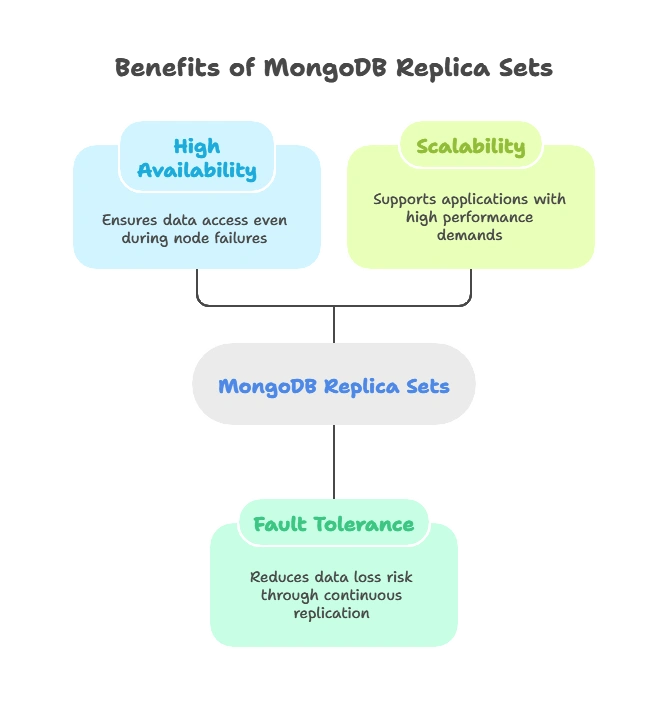

Replica sets in MongoDB are groups of mongod instances that maintain the same dataset, providing high availability, data redundancy, and fault tolerance.

Why Use Replica Sets?

Replica sets support:

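- High Availability: If a member fails, a secondary can be elected primary, so the data stays available.

- Fault Tolerance: Data is continuously replicated to multiple members, reducing the risk of data loss.

- Read Scalability: Secondaries can serve read operations through read preferences, although all writes still go to the primary.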

Basic Replica Set Configuration

Setting Up a Basic Replica Set

Create a three-member replica set, the minimum recommended configuration: if one member fails, the remaining two still form a majority and can keep a primary.

Example: Initiating a Replica Set

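```shell
# Create a data directory for each member
mkdir -p /data/db1 /data/db2 /data/db3

# Start three MongoDB instances on different ports
# (run each in its own terminal, or add --fork --logpath <file> to run it in the background)
mongod --replSet "rs0" --port 27017 --dbpath /data/db1
mongod --replSet "rs0" --port 27018 --dbpath /data/db2
mongod --replSet "rs0" --port 27019 --dbpath /data/db3

# Connect to one instance (mongosh replaces the legacy mongo shell)
mongosh --port 27017
```

```javascript
// In mongosh: initiate the replica set
rs.initiate({
  _id: "rs0",
  members: [
    { _id: 0, host: "localhost:27017" },
    { _id: 1, host: "localhost:27018" },
    { _id: 2, host: "localhost:27019" }
  ]
})
```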

Explanation

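Each mongod instance started with --replSet "rs0" becomes a member of the replica set, and rs.initiate() applies the initial configuration. The configuration's _id must match the --replSet name. Each member is defined by a unique numeric _id and a host:port address. Once a majority of members can reach each other, the set elects a primary.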
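You can generate the configuration document instead of writing it by hand. The minimal sketch below uses a hypothetical helper, buildReplSetConfig, which is not part of MongoDB or mongosh. It assigns each host a sequential _id and rejects duplicate hosts. It runs in Node.js and can also be pasted into mongosh.

```javascript
// Hypothetical helper (not a MongoDB API): builds the document that
// rs.initiate() expects from a set name and a list of "host:port" strings.
function buildReplSetConfig(setName, hosts) {
  // A host may appear only once in the member list
  if (new Set(hosts).size !== hosts.length) {
    throw new Error("duplicate host in member list");
  }
  return {
    _id: setName, // must match the --replSet value given to every mongod
    members: hosts.map((host, i) => ({ _id: i, host: host })) // unique numeric _id per member
  };
}

const cfg = buildReplSetConfig("rs0", ["localhost:27017", "localhost:27018", "localhost:27019"]);
console.log(JSON.stringify(cfg));
```

In mongosh, rs.initiate(buildReplSetConfig("rs0", ["localhost:27017", "localhost:27018", "localhost:27019"])) would apply the same configuration as the literal document in the example above.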

Advanced Replica Set Members

Types of Members in Replica Sets

- Primary: Receives all write operations.

- Secondary: Replicates data from the primary and can become primary during failover.

- Arbiter: Has no data; only votes in elections.

Hidden and Delayed Members

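- Hidden Member: Holds a full copy of the data and can vote, but is invisible to client applications. It must have priority 0, so it can never become primary. Hidden members are useful for backups or analytics.

- Delayed Member: Applies operations from the primary after a configured delay (secondaryDelaySecs in MongoDB 5.0+, slaveDelay in earlier versions). This keeps a rolling historical snapshot that helps you recover from operator errors such as an accidentally dropped collection. Delayed members must have priority 0 and should be hidden.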

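Example: Adding an Arbiter and Hidden Member

```javascript
// Both mongod instances must already be running with --replSet "rs0"
rs.addArb("localhost:27020"); // Adding an arbiter

rs.add({
  host: "localhost:27021",
  priority: 0, // Prevents it from becoming primary
  hidden: true // Invisible to client applications
}); // Adding a hidden member
```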
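The example above covers arbiters and hidden members. A delayed member is added the same way, with a member document that respects the constraints listed earlier. The sketch below uses a hypothetical helper, delayedMember, which is not a MongoDB API. It assumes MongoDB 5.0+ field names; on earlier versions the field is slaveDelay instead of secondaryDelaySecs.

```javascript
// Hypothetical helper (not a MongoDB API): builds a member document for a
// delayed secondary, using the MongoDB 5.0+ field name secondaryDelaySecs.
function delayedMember(host, delaySeconds) {
  if (!Number.isInteger(delaySeconds) || delaySeconds <= 0) {
    throw new Error("delaySeconds must be a positive integer");
  }
  return {
    host: host,
    priority: 0,                     // a delayed member must never become primary
    hidden: true,                    // keeps clients from reading its stale data
    secondaryDelaySecs: delaySeconds // how far behind the primary it stays
  };
}

console.log(JSON.stringify(delayedMember("localhost:27022", 3600)));
```

In mongosh, rs.add(delayedMember("localhost:27022", 3600)) would add a member that stays one hour behind the primary. This assumes a mongod is already running on that hypothetical port with --replSet "rs0". The delay must fit within the oplog window, or the member cannot keep up.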

Replica Set Configuration Options

Priority and Votes

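- Priority: Controls which members are more likely to become primary. Higher values are preferred, and a priority of 0 means the member can never become primary.

- Votes: Determines whether a member participates in elections (1) or not (0). A replica set can have up to 50 members, but at most 7 of them can vote.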

Example: Configuring Priority and Votes

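```javascript
// Start from the current configuration so existing members (such as an
// arbiter or hidden member) are not dropped. Indexes are positions in the
// members array, not member _id values.
cfg = rs.conf()
cfg.members[0].priority = 2 // localhost:27017: preferred primary
cfg.members[1].priority = 0 // localhost:27018: can never become primary...
cfg.members[1].votes = 0    // ...and does not vote (non-voting members need priority 0)
cfg.members[2].priority = 1 // localhost:27019: default priority
rs.reconfig(cfg)
```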
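A reconfiguration like the one above must satisfy a few documented rules. mongod enforces them itself, so the sketch below is only a pre-flight check. checkMembers is a hypothetical helper, not a MongoDB API. It encodes four constraints: votes is 0 or 1, non-voting members must have priority 0, hidden members must have priority 0, and at most seven members can vote.

```javascript
// Hypothetical pre-flight checker (not a MongoDB API) for a few documented
// rules on replica set member settings. Returns a list of problems found.
function checkMembers(members) {
  const problems = [];
  let voting = 0;
  for (const m of members) {
    const votes = m.votes === undefined ? 1 : m.votes; // default is 1
    // default priority is 1, except arbiters, which always have priority 0
    const priority = m.priority === undefined ? (m.arbiterOnly ? 0 : 1) : m.priority;
    if (votes !== 0 && votes !== 1) problems.push(`${m.host}: votes must be 0 or 1`);
    if (votes === 0 && priority !== 0) problems.push(`${m.host}: non-voting members need priority 0`);
    if (m.hidden && priority !== 0) problems.push(`${m.host}: hidden members need priority 0`);
    voting += votes;
  }
  if (voting > 7) problems.push("at most 7 voting members are allowed");
  return problems;
}

console.log(checkMembers([
  { host: "localhost:27017", priority: 2 },
  { host: "localhost:27018", priority: 0, votes: 0 },
  { host: "localhost:27019", priority: 1 }
]));
```

In mongosh, running checkMembers(cfg.members) before rs.reconfig(cfg) surfaces these mistakes early. An empty array means none of these particular rules are violated.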

Understanding and Configuring Election Process

Overview of Elections

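MongoDB holds an election whenever the set has no reachable primary. Examples include when the set is first initiated, when the primary steps down, or when secondaries lose contact with the primary for longer than electionTimeoutMillis (10 seconds by default). The election promotes a secondary to primary, keeping the set available.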

Election Mechanism

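Each voting member casts at most one vote per election, and a candidate needs votes from a majority of the voting members to become primary. Among eligible candidates, members with a higher priority are more likely to win.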

Avoiding Split-Brain Scenarios

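A candidate needs votes from a majority of voting members, and a primary that loses contact with a majority steps down. As a result, only one side of a network partition can elect a primary. A stale primary may briefly remain on the other side, but it cannot acknowledge w: "majority" writes. To keep a majority available:

- Use an odd number of voting members, so that any split into two groups leaves one group with a majority.

- If adding another data-bearing member is not an option, add an arbiter to supply the deciding vote (generally no more than one arbiter per set).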
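The arithmetic behind the odd-number advice is short. A majority of n voting members is floor(n/2) + 1, and the set can lose n minus that many voting members and still elect a primary. The sketch below is plain JavaScript with illustrative function names, not MongoDB APIs. It shows that a fourth voting member raises the majority without improving fault tolerance.

```javascript
// Majority of voting members needed to elect (and keep) a primary
function majority(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// How many voting members can be lost while a majority is still reachable
function faultTolerance(votingMembers) {
  return votingMembers - majority(votingMembers);
}

for (const n of [3, 4, 5, 6, 7]) {
  console.log(`${n} voting members: majority ${majority(n)}, can lose ${faultTolerance(n)}`);
}
```

Three and four voting members can both lose only one member, and five and six can both lose two. An even count therefore adds cost without adding resilience.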

Common Replica Set Topologies

Single-Data Center Replica Set

All members run in one data center. This protects against the failure of individual servers, but not against the loss of the entire data center.

Multi-Data Center Replica Set

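Members are spread across data centers so that the set survives the outage of one of them, which supports disaster recovery. Place voting members so that the remaining data centers still hold a majority of votes after any single data center fails. Surviving the loss of any one data center this way requires at least three data centers.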
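To check whether a proposed layout keeps a voting majority after losing any single data center, compare the votes left over with the majority of all votes. The sketch below is a hypothetical helper, and the data center names are made up. It shows why a 2 + 2 + 1 split across three sites survives any one outage, while a 2 + 1 split across two sites does not.

```javascript
// Hypothetical check: given the number of voting members per data center,
// can a primary still be elected after any single data center goes offline?
function survivesAnySingleDcLoss(votesPerDc) {
  const total = Object.values(votesPerDc).reduce((a, b) => a + b, 0);
  const needed = Math.floor(total / 2) + 1; // strict majority of all voting members
  return Object.keys(votesPerDc).every(
    (dc) => total - votesPerDc[dc] >= needed // votes left after losing this data center
  );
}

console.log(survivesAnySingleDcLoss({ dc1: 2, dc2: 2, dc3: 1 })); // true
console.log(survivesAnySingleDcLoss({ dc1: 2, dc2: 1 }));         // false: losing dc1 leaves 1 of 3 votes
```

With only two data centers, the one holding the larger share of votes is a single point of failure for elections. A small third site, even one holding a single voting member, removes that weakness.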

Geographically Distributed Replica Set

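Members are placed in different geographic regions, for example to survive a regional outage or to keep copies of the data close to users. Cross-region latency increases replication lag and the time needed to acknowledge w: "majority" writes. Use priorities to keep the primary in the region that handles most writes.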
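One way to express a geographically distributed layout is a configuration document in which members in the preferred region get a higher priority and every member carries a region tag. Read preference tag sets can later target those tags. The sketch below uses a hypothetical helper, buildGeoConfig, and made-up hostnames and regions. The priority values 2 and 1 are an illustrative choice, not a MongoDB default.

```javascript
// Hypothetical helper (not a MongoDB API): builds an rs.initiate() document for
// members spread across regions. Members in the preferred region get priority 2
// and all others priority 1, so the primary normally stays in the preferred
// region, but members elsewhere can still take over if that region fails.
function buildGeoConfig(setName, members, preferredRegion) {
  return {
    _id: setName,
    members: members.map((m, i) => ({
      _id: i,
      host: m.host,
      priority: m.region === preferredRegion ? 2 : 1,
      tags: { region: m.region } // can be targeted by read preference tag sets
    }))
  };
}

// Hostnames and regions below are made up for illustration
const geo = buildGeoConfig("rs0", [
  { host: "db1.us-east.example.net:27017", region: "us-east" },
  { host: "db2.us-east.example.net:27017", region: "us-east" },
  { host: "db3.eu-west.example.net:27017", region: "eu-west" },
  { host: "db4.eu-west.example.net:27017", region: "eu-west" },
  { host: "db5.ap-south.example.net:27017", region: "ap-south" }
], "us-east");
console.log(JSON.stringify(geo, null, 2));
```

The 2 + 2 + 1 spread across three regions keeps a voting majority if any one region goes offline. The priorities only decide where the primary lives while all regions are healthy.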

Sharding with Replica Sets

Purpose of Sharded Clusters

Sharding enables horizontal scaling by dividing large datasets across multiple replica sets.

Components of a Sharded Cluster

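- Config Servers: A replica set that stores the cluster metadata, including which ranges of data live on which shard.

- Shards: Each shard is a replica set holding a subset of the data.

- mongos: Query routers that applications connect to. They use the config server metadata to send each operation to the right shards.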
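To picture how the data is divided, consider range-based sharding. Documents are grouped into chunks by ranges of a shard key, and each chunk lives on one shard. The sketch below is a simplified model, not how mongos is actually implemented. The chunk boundaries and the shard names rs1 and rs2 are hypothetical. Each range includes its lower bound and excludes its upper bound.

```javascript
// Simplified model of range-based routing (not mongos internals): each chunk
// covers [min, max) of a numeric shard key and is owned by one shard.
const chunks = [
  { min: -Infinity, max: 1000, shard: "rs0" },
  { min: 1000, max: 5000, shard: "rs1" },
  { min: 5000, max: Infinity, shard: "rs2" }
];

// Returns the shard that owns the chunk containing this shard key value
function shardFor(shardKeyValue) {
  const chunk = chunks.find((c) => shardKeyValue >= c.min && shardKeyValue < c.max);
  if (!chunk) throw new Error("no chunk covers this shard key value");
  return chunk.shard;
}

console.log(shardFor(42));
console.log(shardFor(1000));
console.log(shardFor(9999));
```

In a real cluster, this routing table lives on the config servers and mongos caches it. A query that includes the shard key can therefore be sent to a single shard instead of all of them.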

Example: Setting Up a Sharded Cluster with Replica Sets

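```shell
# Run each mongod/mongos in its own terminal (or add --fork --logpath <file>).
# Each replica set has one member here for brevity; use three in production.
mkdir -p /data/configdb /data/shard1

# Start the config server replica set and initiate it
mongod --configsvr --replSet "configReplSet" --port 27019 --dbpath /data/configdb
mongosh --port 27019 --eval 'rs.initiate({ _id: "configReplSet", configsvr: true, members: [{ _id: 0, host: "localhost:27019" }] })'

# Start a shard replica set and initiate it
mongod --shardsvr --replSet "rs0" --port 27017 --dbpath /data/shard1
mongosh --port 27017 --eval 'rs.initiate({ _id: "rs0", members: [{ _id: 0, host: "localhost:27017" }] })'

# Start a mongos router pointing at the config server replica set
mongos --configdb "configReplSet/localhost:27019" --port 27020

# Connect to mongos and add the shard replica set to the cluster
mongosh --port 27020 --eval 'sh.addShard("rs0/localhost:27017")'
```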

Monitoring and Managing Replica Sets

Monitoring Replica Sets

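- mongostat and mongotop: Command-line tools for real-time monitoring. mongostat reports server-wide operation counts, and mongotop reports the time spent reading and writing each collection.

- Ops Manager: Provides a web-based GUI for advanced replica set and cluster monitoring.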

Common Commands for Monitoring

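- rs.status(): Reports the state, health, and last applied operation time of each member.

- rs.printReplicationInfo(): Displays the oplog size and the time range it covers.

- rs.printSecondaryReplicationInfo(): Shows how far each secondary is behind the primary (replication lag).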
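You can also compute replication lag from rs.status() output by comparing each secondary's optimeDate with the primary's. The sketch below uses a hypothetical helper, replicationLagSeconds, and relies only on the name, stateStr, and optimeDate fields of each member. The sample document is trimmed down and its values are made up.

```javascript
// Hypothetical helper: computes each secondary's lag behind the primary, in
// seconds, from an rs.status()-shaped document. Arbiters have no optime and
// are skipped because their stateStr is "ARBITER".
function replicationLagSeconds(status) {
  const primary = status.members.find((m) => m.stateStr === "PRIMARY");
  if (!primary) throw new Error("no primary in status document");
  const lag = {};
  for (const m of status.members) {
    if (m.stateStr === "SECONDARY") {
      // Subtracting Dates yields milliseconds; convert to seconds
      lag[m.name] = (primary.optimeDate - m.optimeDate) / 1000;
    }
  }
  return lag;
}

// Trimmed-down sample shaped like rs.status() output (values are made up)
const sample = {
  members: [
    { name: "localhost:27017", stateStr: "PRIMARY", optimeDate: new Date("2024-01-01T00:00:10Z") },
    { name: "localhost:27018", stateStr: "SECONDARY", optimeDate: new Date("2024-01-01T00:00:08Z") },
    { name: "localhost:27019", stateStr: "SECONDARY", optimeDate: new Date("2024-01-01T00:00:10Z") }
  ]
};
console.log(replicationLagSeconds(sample));
```

In mongosh, replicationLagSeconds(rs.status()) applies the same calculation to the live set. Because optimeDate has one-second resolution, treat small values as approximate.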

Handling Failover and Recovery

Automatic Failover

MongoDB ensures continuous availability by automatically electing a new primary if the current one fails.

Data Recovery Options

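- Rollback Operations: When a former primary rejoins after a failover, any writes it accepted that never reached the new primary are rolled back (undone), so that it matches the rest of the set. The rolled-back documents are saved to rollback files for manual review. Writes acknowledged with w: "majority" are never rolled back.

- Manual Failover Testing: Test failover scenarios by running rs.stepDown() on the primary, which makes it step down and triggers an election.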
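To make the rollback rule concrete, the sketch below is a simplified model, not MongoDB's implementation. It finds the newest oplog entry the former primary shares with the new primary (the common point) and treats every later entry on the former primary as rolled back. Real oplog entries are matched on more than a timestamp. The plain-number timestamps and the function name writesToRollBack are assumptions made for illustration.

```javascript
// Simplified model of rollback (not MongoDB's implementation): entries in the
// former primary's oplog after the last entry it shares with the new primary
// are undone. Timestamps are plain numbers here for readability.
function writesToRollBack(oldPrimaryOplog, newPrimaryOplog) {
  const shared = new Set(newPrimaryOplog.map((op) => op.ts));
  // Common point: newest entry of the old primary that the new primary also has
  let commonPoint = -Infinity;
  for (const op of oldPrimaryOplog) {
    if (shared.has(op.ts) && op.ts > commonPoint) commonPoint = op.ts;
  }
  return oldPrimaryOplog.filter((op) => op.ts > commonPoint);
}

// "insert c" reached only the old primary before it failed
const oldPrimary = [{ ts: 1, op: "insert a" }, { ts: 2, op: "insert b" }, { ts: 3, op: "insert c" }];
const newPrimary = [{ ts: 1, op: "insert a" }, { ts: 2, op: "insert b" }, { ts: 4, op: "insert d" }];
console.log(writesToRollBack(oldPrimary, newPrimary));
```

Here only "insert c" is rolled back. Had it been written with w: "majority", it would have been replicated to a majority before being acknowledged, so the newly elected primary would already have it.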

Configuring complex replica set topologies in MongoDB improves reliability, scalability, and performance. By tuning priorities and votes, adding hidden and delayed members, and introducing sharding when a single replica set is no longer enough, administrators can meet specific business needs. Mastering these configurations leads to robust, high-performing MongoDB deployments, ready to handle high availability and demanding workloads across various environments. Happy coding! ❤️