Choosing the Right Partitioning in MongoDB

Partitioning in MongoDB, often referred to as "sharding," is the method of distributing data across multiple machines. Sharding is essential for scaling databases as the amount of data grows beyond the storage and processing capacity of a single machine. In this chapter, we will dive deep into the concepts, strategies, and techniques to help you choose the right partitioning approach in MongoDB. By the end, you will have a comprehensive understanding of how to manage large datasets effectively.

Introduction to Partitioning in MongoDB

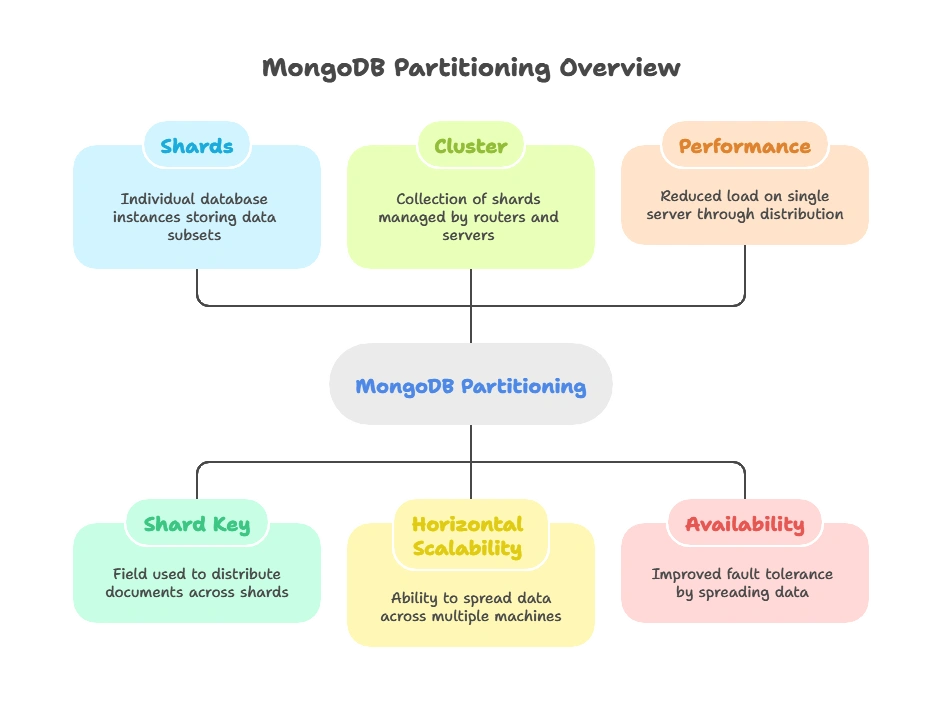

Partitioning, or sharding, is a way to horizontally scale your MongoDB deployment by distributing the data across multiple machines (nodes). This helps manage huge datasets and high-traffic applications by reducing the load on a single server.

MongoDB achieves partitioning through “shards.” Each shard is a separate MongoDB deployment (in production, typically a replica set) that stores a portion of the data. Each shard can run on a different physical machine, allowing the system to spread the load.

Key Concepts:

- Shards: Individual MongoDB instances (or replica sets), each responsible for storing a subset of the data.

- Shard Key: A field in your data that MongoDB uses to distribute documents across different shards.

- Cluster: A collection of shards, managed by MongoDB routers and configuration servers.

Why Partitioning is Important:

- Horizontal Scalability: Spread data across multiple machines, thus scaling out instead of scaling up.

- Performance: Spread reads and writes across servers so that no single machine carries the whole load.

- Availability: Data is spread across multiple servers, so a failure affects only part of the data. Running each shard as a replica set adds fault tolerance.

How Partitioning Works in MongoDB

MongoDB uses the concept of a shard key to distribute data across shards. The choice of the shard key is crucial because it defines how the data is divided and impacts performance, scalability, and query efficiency.

Steps to Implement Sharding:

- Create a Sharded Cluster: This involves setting up multiple shards, configuration servers, and routers (mongos).

- Define the Shard Key: Choose a field in the document to be used as the shard key.

- Enable Sharding on the Collection: Once the shard key is defined, you can enable sharding on a collection.

- Data Distribution: MongoDB automatically distributes data across shards based on the shard key.

Example:

// Enable sharding on the database

sh.enableSharding("myDatabase");

// Define the shard key and shard the collection

sh.shardCollection("myDatabase.myCollection", { userId: 1 });

In this example, the userId field is used as the shard key. MongoDB will distribute documents with different userId values across different shards.
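To make the routing idea concrete, here is a minimal sketch in plain JavaScript (runnable with Node.js, no MongoDB required). The chunk boundaries, shard names, and the routeToShard helper are all hypothetical. In a real cluster this mapping lives on the configuration servers and is consulted by the mongos routers.

```javascript
// Hypothetical chunk table for a collection range-sharded on userId.
// Each chunk covers the half-open range [min, max) and is owned by one shard.
const chunks = [
  { min: -Infinity, max: 1000, shard: "shardA" },
  { min: 1000, max: 5000, shard: "shardB" },
  { min: 5000, max: Infinity, shard: "shardC" },
];

// Return the shard that owns the chunk containing this shard key value.
function routeToShard(userId) {
  const chunk = chunks.find((c) => userId >= c.min && userId < c.max);
  return chunk.shard;
}

console.log(routeToShard(42));    // shardA
console.log(routeToShard(1000));  // shardB
console.log(routeToShard(12345)); // shardC
```

Because every possible userId falls into exactly one chunk, a document's shard is fully determined by its shard key value.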

Choosing the Right Shard Key

The choice of the shard key is the most critical decision in partitioning your MongoDB database. A poor choice can lead to data skew, hotspots, and inefficient queries.

Factors to Consider:

- Cardinality: Choose a key with high cardinality (i.e., many distinct values). If a shard key has few distinct values, documents will be unevenly distributed, leading to skewed data storage.

- Distribution: Ensure that the shard key will evenly distribute data across shards.

- Query Pattern: Choose a shard key that matches your most common queries. This helps to ensure that queries can be routed efficiently to the correct shard, reducing unnecessary data scanning.
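One rough way to compare candidate keys against these factors is to measure them on a sample of your documents. The sketch below is plain JavaScript for Node.js. The shardKeyStats helper, the field names, and the sample data are made up for illustration. It reports the number of distinct values (cardinality) and the share of documents that carry the single most common value (a hint of how uneven the distribution could be).

```javascript
// Summarize how a candidate field behaves over a sample of documents.
function shardKeyStats(docs, field) {
  const counts = new Map();
  for (const doc of docs) {
    const value = doc[field];
    counts.set(value, (counts.get(value) || 0) + 1);
  }
  const maxCount = Math.max(...counts.values());
  return {
    field,
    distinctValues: counts.size,          // cardinality
    largestShare: maxCount / docs.length, // share of docs with the most common value
  };
}

// Made-up sample: 1000 users, 90% of them active, spread over four countries.
const countries = ["US", "IN", "DE", "BR"];
const sample = [];
for (let i = 0; i < 1000; i++) {
  sample.push({ userId: i, isActive: i % 10 !== 0, country: countries[i % 4] });
}

console.log(shardKeyStats(sample, "isActive")); // 2 distinct values, largest share 0.9
console.log(shardKeyStats(sample, "country"));  // 4 distinct values, largest share 0.25
console.log(shardKeyStats(sample, "userId"));   // 1000 distinct values, largest share 0.001
```

A field with few distinct values or one dominant value is a weak candidate, whatever the query pattern looks like.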

Good vs. Bad Shard Keys:

Bad Example: Boolean Field as Shard Key

{ isActive: true }

A boolean field like isActive has only two values (true/false), which leads to data being distributed to at most two shards. This causes imbalanced data distribution.

Good Example: UserID as Shard Key

{ userId: 12345 }

The userId is unique for every user, so data will be distributed more evenly across shards, avoiding the bottleneck of too many documents on a single shard.

Types of Partitioning in MongoDB

a. Hash-Based Partitioning

In hash-based partitioning, MongoDB applies a hash function to the shard key to distribute data evenly across the shards. This method is effective for avoiding hot spots but may result in slower range queries.

sh.shardCollection("myDatabase.myCollection", { userId: "hashed" });

Advantages:

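- Even Distribution: Spreads documents close to evenly across shards, provided the shard key has high cardinality. Hashing cannot fix a key with only a few distinct values.

- Prevents Hotspots: Well suited to keys whose values only increase, such as timestamps or auto-incrementing IDs, because consecutive values hash to unrelated locations.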

Disadvantages:

- Inefficient Range Queries: Since the data is hashed, range queries (e.g., find users within a certain range of IDs) can be inefficient. Neighboring key values end up on different shards, so the query has to be sent to all of them.
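The range-query cost can be seen in a small simulation. The sketch below is plain JavaScript for Node.js and rests on several assumptions: four shards, userIds from 0 to 9999, and a stand-in integer mixer (hash32) that is not MongoDB's real hash function. It compares which shards hold matching documents for the same range of IDs under contiguous ranges and under hashing.

```javascript
const SHARDS = 4;

// Stand-in 32-bit integer mixer (the MurmurHash3 finalizer). It is used here
// only for illustration and is not MongoDB's actual hash function.
function hash32(n) {
  let h = n | 0;
  h ^= h >>> 16;
  h = Math.imul(h, 0x85ebca6b);
  h ^= h >>> 13;
  h = Math.imul(h, 0xc2b2ae35);
  h ^= h >>> 16;
  return h >>> 0;
}

// Hashed placement: the shard depends on the hash of the key.
const hashedShard = (userId) => hash32(userId) % SHARDS;

// Ranged placement: userIds 0..9999 split into four equal contiguous ranges.
const rangedShard = (userId) => Math.min(SHARDS - 1, Math.floor(userId / 2500));

// Which shards hold at least one document with lo <= userId < hi?
function shardsTouched(placement, lo, hi) {
  const touched = new Set();
  for (let id = lo; id < hi; id++) touched.add(placement(id));
  return [...touched].sort();
}

console.log("ranged:", shardsTouched(rangedShard, 3000, 3200)); // [ 1 ]
console.log("hashed:", shardsTouched(hashedShard, 3000, 3200));
```

With contiguous ranges the 200 IDs sit on one shard. With hashing they should scatter across several shards, which is why a range query has to visit them all.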

b. Range-Based Partitioning

In range-based partitioning, documents are distributed based on the value range of the shard key. This is ideal for range queries but can lead to uneven data distribution if the shard key values are not uniformly distributed.

sh.shardCollection("myDatabase.myCollection", { age: 1 });

Advantages:

- Efficient Range Queries: Range queries can be executed more efficiently because documents with similar values are stored on the same shard.

Disadvantages:

- Data Skew: If your data is not evenly distributed across the shard key range, one shard might get overloaded while others remain underutilized.
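The skew risk is easy to reproduce with made-up numbers. The following sketch (plain JavaScript for Node.js) assumes four fixed chunk ranges on age, one per shard, and a hypothetical user base concentrated between ages 18 and 37. Fixed boundaries are a simplification: a real cluster splits and migrates ranges over time, although it cannot split a range that holds a single key value.

```javascript
// Hypothetical chunk ranges on the `age` shard key, one chunk per shard.
const ageChunks = [
  { min: 0, max: 25, shard: "shardA" },
  { min: 25, max: 50, shard: "shardB" },
  { min: 50, max: 75, shard: "shardC" },
  { min: 75, max: 200, shard: "shardD" },
];

// Made-up user base that skews young: ages 18..37, 50 users at each age.
const users = [];
for (let age = 18; age <= 37; age++) {
  for (let i = 0; i < 50; i++) users.push({ age });
}

// Count how many documents land on each shard.
const perShard = {};
for (const c of ageChunks) perShard[c.shard] = 0;
for (const u of users) {
  const chunk = ageChunks.find((c) => u.age >= c.min && u.age < c.max);
  perShard[chunk.shard] += 1;
}

console.log(perShard);
// { shardA: 350, shardB: 650, shardC: 0, shardD: 0 }
```

Two shards hold all 1000 documents while the other two sit empty.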

Deep Dive into Partitioning Strategies

a. Shard Key Choices for Write-Heavy Applications

For write-heavy applications, it is crucial to choose a shard key that distributes writes evenly across shards. You want to avoid scenarios where all writes go to a single shard, leading to write hotspots.

Example: If you’re recording user activities in real time, a range-based shard key on the timestamp would send every new write to the one shard that holds the most recent time range, because the timestamps only ever increase.
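The timestamp hotspot can be simulated in a few lines. This sketch (plain JavaScript for Node.js) assumes three shards, hypothetical chunk boundaries, and a stand-in mixer (mix32) that is not MongoDB's real hash function. It counts where 900 consecutive, ever-increasing timestamps land under ranged and hashed placement.

```javascript
const SHARD_COUNT = 3;

// Hypothetical ranged chunks on a numeric timestamp. The last chunk is
// open-ended, so it receives every value from 2000 upward.
const timeChunks = [
  { min: -Infinity, max: 1000, shard: 0 },
  { min: 1000, max: 2000, shard: 1 },
  { min: 2000, max: Infinity, shard: 2 },
];
const rangedShardFor = (ts) =>
  timeChunks.find((c) => ts >= c.min && ts < c.max).shard;

// Stand-in integer mixer (MurmurHash3 finalizer); not MongoDB's real hash.
function mix32(n) {
  let h = n | 0;
  h ^= h >>> 16;
  h = Math.imul(h, 0x85ebca6b);
  h ^= h >>> 13;
  h = Math.imul(h, 0xc2b2ae35);
  h ^= h >>> 16;
  return h >>> 0;
}
const hashedShardFor = (ts) => mix32(ts) % SHARD_COUNT;

// Simulate 900 new events whose timestamps keep increasing.
function countWrites(shardFor) {
  const writes = new Array(SHARD_COUNT).fill(0);
  for (let i = 0; i < 900; i++) writes[shardFor(5000 + i)] += 1;
  return writes;
}

console.log("ranged on timestamp:", countWrites(rangedShardFor)); // [ 0, 0, 900 ]
console.log("hashed on timestamp:", countWrites(hashedShardFor));
```

Under ranged placement the last shard absorbs every write. Under hashed placement the same writes should be shared among the shards.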

b. Shard Key Choices for Read-Heavy Applications

In read-heavy applications, you want to ensure that the most frequent queries target specific shards instead of querying all shards (which increases the load).

Example: For a social media application, choosing userId as the shard key is beneficial because most read operations will be fetching data for individual users.

Balancing Data and Avoiding Hotspots

To keep your system running smoothly, MongoDB automatically performs balancing: when some shards hold noticeably more data than others, it redistributes the data among them.

Example of a Balancing Operation:

This process is handled by the balancer, a background process that monitors how data is distributed and migrates chunks (ranges of shard key values) from fuller shards to emptier ones. You can stop it temporarily, for example during maintenance, and start it again afterwards.

sh.stopBalancer() // Stop the balancer if needed for maintenance

sh.startBalancer() // Start the balancer again
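To build intuition for what balancing does, here is a toy model in plain JavaScript (Node.js). It is a deliberate simplification, not MongoDB's actual balancing algorithm: the balance function, the threshold, and the chunk counts are all invented for illustration. It moves one chunk at a time from the fullest shard to the emptiest one until the two are within the threshold.

```javascript
// Toy balancer: repeatedly move one chunk from the fullest shard to the
// emptiest one until their chunk counts differ by less than `threshold`.
function balance(chunkCounts, threshold = 2) {
  const counts = { ...chunkCounts }; // work on a copy
  const migrations = [];
  while (true) {
    // Shard names ordered from fewest chunks to most chunks.
    const shards = Object.keys(counts).sort((a, b) => counts[a] - counts[b]);
    const emptiest = shards[0];
    const fullest = shards[shards.length - 1];
    if (counts[fullest] - counts[emptiest] < threshold) break;
    counts[fullest] -= 1;
    counts[emptiest] += 1;
    migrations.push({ from: fullest, to: emptiest });
  }
  return { counts, migrations };
}

const result = balance({ shardA: 10, shardB: 2, shardC: 3 });
console.log(result.counts);            // { shardA: 5, shardB: 5, shardC: 5 }
console.log(result.migrations.length); // 5
```

Every migration copies data between machines, which is one reason to pause balancing during maintenance windows.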

Managing Partitioned Clusters

a. Monitoring Sharded Clusters

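MongoDB provides several tools to monitor the health and performance of your sharded cluster, such as mongostat and mongotop. From the shell, sh.status() summarizes the shards in the cluster and how data is distributed among them.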

b. Backup and Restore in Sharded Clusters

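Backing up sharded clusters requires special handling because data is distributed across multiple servers. Every shard and the configuration servers have to be captured at a consistent point in time, and the balancer is typically stopped for the duration so that no data migrates between shards during the backup.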

Common Challenges with Sharding and How to Solve Them

a. Data Skew:

Occurs when a poor shard key leads to uneven distribution of data. To solve this, you may need to re-shard the data by choosing a better shard key. MongoDB 5.0 and later provide a reshardCollection command for this purpose.

b. Hotspot Issues:

If too many requests are routed to the same shard, it creates a bottleneck. Hash-based partitioning can help mitigate this issue by spreading data more evenly across shards.

c. Slow Queries:

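Queries that do not include the shard key can be inefficient because the router cannot tell which shards hold the matching documents and must send the query to all of them. To resolve this, include the shard key in your most common queries so that they can be routed to specific shards.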
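A quick way to reason about this is to check whether a query filter mentions the shard key at all. The sketch below (plain JavaScript for Node.js) uses a hypothetical routingFor helper and a simplified rule: a query counts as targeted when it has a condition on the first field of the shard key. It ignores details such as query operators and hashed keys.

```javascript
// Decide whether a query filter can be routed to specific shards.
// Simplified rule for this sketch: the filter must contain the first
// field of the shard key; otherwise the query is broadcast.
function routingFor(filter, shardKeyFields) {
  const firstField = shardKeyFields[0];
  return Object.prototype.hasOwnProperty.call(filter, firstField)
    ? "targeted"
    : "broadcast";
}

const shardKey = ["userId"];
console.log(routingFor({ userId: 12345 }, shardKey));                 // targeted
console.log(routingFor({ userId: 12345, isActive: true }, shardKey)); // targeted
console.log(routingFor({ isActive: true }, shardKey));                // broadcast
```

Running a check like this over your most frequent query shapes shows how many of them would fan out to every shard.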

Best Practices for Partitioning

- Choose a high-cardinality shard key to ensure even data distribution.

- Monitor your cluster regularly to detect imbalances or hotspots early.

- Use range-based partitioning for range queries, but be mindful of potential data skew.

- Use hash-based partitioning for unpredictable workloads where even distribution is a priority.

Choosing the right partitioning strategy in MongoDB is crucial for building scalable and high-performance applications. By carefully selecting the appropriate shard key, understanding your query patterns, and balancing the workload, you can optimize your MongoDB cluster for both read and write operations. Happy coding! ❤️