GridFS

GridFS is a specification for storing and retrieving large files, such as images, audio files, and videos, in MongoDB. MongoDB limits each BSON document to 16 MB, and GridFS is the standard way to store files larger than that. Instead of storing the file as a single document, GridFS divides it into smaller chunks and stores each chunk as a separate document in the fs.chunks collection. The file's metadata, such as its filename and upload date, is stored in the fs.files collection.

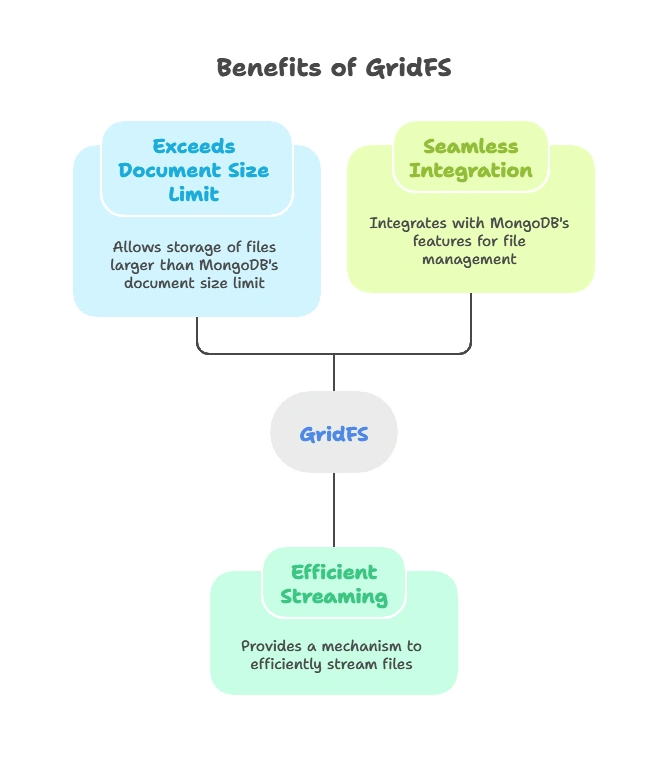
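
To make the chunking concrete, the sketch below splits a buffer into chunk documents shaped like the ones GridFS stores. It is an illustration only: splitIntoChunks and the string file ID are hypothetical, and in practice the driver does this work for you. The 255 KB chunk size is the GridFS default.

```javascript
// Illustration only: mimics how GridFS lays a file out as chunk documents.
const DEFAULT_CHUNK_SIZE = 255 * 1024; // 255 KB, the GridFS default

// Hypothetical helper: returns one document per chunk, in order.
function splitIntoChunks(buffer, filesId, chunkSize = DEFAULT_CHUNK_SIZE) {
  const chunks = [];
  for (let offset = 0, n = 0; offset < buffer.length; offset += chunkSize, n++) {
    chunks.push({
      files_id: filesId, // links the chunk to its document in the files collection
      n,                 // zero-based position of the chunk within the file
      data: buffer.subarray(offset, offset + chunkSize),
    });
  }
  return chunks;
}

// A 600 KB file needs three chunks: 255 KB + 255 KB + 90 KB.
const file = Buffer.alloc(600 * 1024);
const chunks = splitIntoChunks(file, 'example-file-id');
console.log(chunks.length, chunks.map((c) => c.data.length));
```

Reading the file back is the reverse: fetch the chunks for one files_id, sort them by n, and concatenate their data.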

Why Use GridFS?

GridFS is useful when you need to store and retrieve files that exceed MongoDB’s document size limit. It also provides a mechanism to efficiently stream files, which is particularly useful for serving large files to clients, such as when building a video or audio streaming service. Additionally, GridFS integrates seamlessly with MongoDB, allowing you to leverage MongoDB’s indexing, querying, and other features to manage your files.

Setting Up the Environment

Prerequisites

To work with GridFS in Node.js, you’ll need:

- A basic understanding of MongoDB and Node.js.

- MongoDB installed on your system.

- Node.js and npm (Node Package Manager) installed.

Installation

First, let’s set up a new Node.js project and install the necessary packages:

Create a new directory for your project:

mkdir gridfs-nodejs

cd gridfs-nodejs

Initialize a new Node.js project:

npm init -y

Install the required packages:

- mongodb: The official MongoDB driver for Node.js.

- mongoose: An ODM for MongoDB, used below to open the connection that GridFS is attached to.

- multer: A middleware for handling multipart/form-data, which is primarily used for uploading files.

- gridfs-stream: A streaming API for GridFS.

- express: A minimal and flexible Node.js web application framework.

npm install mongodb mongoose multer gridfs-stream express

Connecting to MongoDB and Setting Up GridFS

To interact with GridFS in MongoDB using Node.js, we’ll first open a basic connection with the official mongodb package, then use Mongoose together with gridfs-stream, which provides a Node.js-style streaming API.

1. Basic MongoDB Connection

const { MongoClient } = require('mongodb');

const uri = 'mongodb://localhost:27017';

const client = new MongoClient(uri);

async function connect() {

try {

await client.connect();

console.log('Connected to MongoDB');

} catch (err) {

console.error('Failed to connect to MongoDB', err);

}

}

connect();

Explanation:

- MongoClient: This is the main class through which we’ll connect to a MongoDB instance.

- uri: This specifies the connection string to the MongoDB instance.

- connect: This is an async function that attempts to connect to MongoDB. If successful, it logs a success message.

2. Setting Up GridFS

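To use GridFS, we first set it up with the gridfs-stream module on top of a Mongoose connection. GridFS uses two collections: fs.files and fs.chunks. The fs.files collection stores metadata about the file, and the fs.chunks collection stores the file’s binary data split into chunks. Note that gridfs-stream is no longer maintained and depends on the legacy GridStore API, which was removed in version 4 of the mongodb driver (the driver bundled with Mongoose 6 and later). The examples in this chapter therefore assume an older setup such as Mongoose 5; on newer versions, the driver’s built-in GridFSBucket class is the supported way to work with GridFS.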

const mongoose = require('mongoose');

const gridfsStream = require('gridfs-stream');

// Connect to MongoDB using Mongoose

mongoose.connect('mongodb://localhost:27017/gridfstest', {

useNewUrlParser: true,

useUnifiedTopology: true,

});

const connection = mongoose.connection;

let gfs;

connection.once('open', () => {

gfs = gridfsStream(connection.db, mongoose.mongo);

gfs.collection('uploads'); // Use the "uploads" bucket: uploads.files and uploads.chunks

console.log('GridFS is ready to use');

});

Explanation:

- Mongoose: A popular ODM (Object Data Modeling) library for MongoDB and Node.js. It provides a schema-based solution to model your application data.

- gridfsStream: A module that allows you to work with GridFS using a streaming API.

- gfs.collection('uploads'): Sets the bucket name (the collection prefix) for GridFS. Here, we use uploads instead of the default fs, so files are stored in uploads.files and uploads.chunks.

Output:

When you run both snippets, you should see output similar to the following:

Connected to MongoDB

GridFS is ready to use

Uploading Files to GridFS

Now that we have set up our connection to MongoDB and GridFS, let’s look at how to upload files.

1. Creating an Express Server

We’ll use Express to create a simple server that handles file uploads.

const express = require('express');

const multer = require('multer');

const app = express();

// Middleware to handle multipart/form-data

const storage = multer.memoryStorage();

const upload = multer({ storage });

// Route to handle file upload

app.post('/upload', upload.single('file'), (req, res) => {

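const file = req.file;

// Reject requests that did not include a file in the "file" field

if (!file) return res.status(400).json({ error: 'No file uploaded' });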

// Create a write stream to GridFS

const writestream = gfs.createWriteStream({

filename: file.originalname,

});

// Write the file buffer to GridFS

writestream.write(file.buffer);

writestream.end();

writestream.on('close', (file) => {

res.json({ fileId: file._id, message: 'File uploaded successfully' });

});

});

app.listen(3000, () => {

console.log('Server is running on port 3000');

});

Explanation:

- multer.memoryStorage(): Configures multer to store the uploaded files in memory.

- upload.single('file'): This middleware handles the file upload. It expects a single file with the form field name file.

- writestream.write(file.buffer): Writes the uploaded file’s buffer to GridFS.

- writestream.end(): Ends the write stream, which triggers the close event when the upload is complete.

- res.json({ … }): Sends a JSON response with the file ID and a success message.

2. Testing the Upload

To test the upload, you can use a tool like Postman or curl to send a file to the /upload endpoint.

Output:

After a successful upload, you’ll receive a JSON response similar to:

{

"fileId": "60c72b2f4f1a4c23d8e7f7c5",

"message": "File uploaded successfully"

}

This confirms that the file has been stored in GridFS.

Retrieving Files from GridFS

Once files are stored in GridFS, you can retrieve them by their filename or ID.

1. Retrieving by Filename

app.get('/file/:filename', (req, res) => {

const { filename } = req.params;

// Create a read stream to GridFS

const readstream = gfs.createReadStream({ filename });

readstream.on('error', (err) => {

res.status(404).json({ error: 'File not found' });

});

readstream.pipe(res);

});

Explanation:

- gfs.createReadStream({ filename }): Creates a readable stream for the file with the specified filename.

- readstream.pipe(res): Pipes the file’s contents to the response, effectively sending the file to the client.

2. Retrieving by File ID

const { ObjectId } = require('mongodb');

app.get('/file/id/:id', (req, res) => {

const { id } = req.params;

// Create a read stream to GridFS

const readstream = gfs.createReadStream({ _id: new ObjectId(id) });

readstream.on('error', (err) => {

res.status(404).json({ error: 'File not found' });

});

readstream.pipe(res);

});

Explanation:

- new ObjectId(id): Converts the string ID to a MongoDB ObjectId. The new keyword is required by current driver versions and accepted by older ones.

- gfs.createReadStream({ _id: new ObjectId(id) }): Creates a readable stream for the file with the specified ID.
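
Constructing an ObjectId from a string that is not a valid ObjectId representation throws, so a malformed :id in the URL would surface as an exception rather than a clean response. One possible guard, sketched below, checks the string before converting it. The helper name isObjectIdString is hypothetical, and the check covers only the 24-character hexadecimal form.

```javascript
// Hypothetical guard: true only for 24-character hexadecimal strings,
// the form in which ObjectIds usually appear in URLs and JSON.
function isObjectIdString(value) {
  return typeof value === 'string' && /^[0-9a-fA-F]{24}$/.test(value);
}

console.log(isObjectIdString('60c72b2f4f1a4c23d8e7f7c5')); // true
console.log(isObjectIdString('not-an-id'));                // false
```

In the route, a failed check could return res.status(400).json({ error: 'Invalid file ID' }) before any stream is created.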

Output:

When you make a GET request to either /file/:filename or /file/id/:id, the server will send the requested file back to the client.

Deleting Files from GridFS

You might also need to delete files from GridFS. This can be done using the file’s ID.

app.delete('/file/:id', (req, res) => {

const { id } = req.params;

gfs.remove({ _id: new ObjectId(id), root: 'uploads' }, (err, gridStore) => {

if (err) return res.status(404).json({ error: 'File not found' });

res.json({ message: 'File deleted successfully' });

});

});

Explanation:

- gfs.remove(…): Deletes the file with the specified ID from GridFS.

- root: 'uploads': Specifies the bucket (the collection prefix) that the file is deleted from.

Output:

After a successful deletion, you’ll receive a JSON response similar to:

{

"message": "File deleted successfully"

}

Advanced Topics

Handling Large Files

For very large files, you can optimize your upload and retrieval processes by streaming files directly from the client to the server and from the server to the client. This avoids loading the entire file into memory, which is crucial when working with files that are several gigabytes in size.

Streaming Large File Uploads:

Let’s modify the file upload code to handle large file uploads using streams.

app.post('/upload', (req, res) => {

const { filename } = req.query; // Assume the client provides the filename as a query parameter

// Create a write stream to GridFS

const writestream = gfs.createWriteStream({

filename: filename,

});

// Pipe the request (which contains the file) directly to GridFS

req.pipe(writestream);

writestream.on('close', (file) => {

res.json({ fileId: file._id, message: 'File uploaded successfully' });

});

});

Explanation:

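- req.pipe(writestream): Streams the incoming request body directly into GridFS without buffering the whole file in memory. This assumes the client sends the raw file bytes as the request body (for example with Content-Type: application/octet-stream); a multipart/form-data body would be stored with its multipart envelope included.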

- filename: filename: Uses the filename provided by the client as a query parameter.
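
req.pipe() does not forward errors, so a dropped connection can leave the write stream open. The sketch below shows the same idea with stream.pipeline, which propagates errors and cleans up both streams. It assumes a bucket object exposing openUploadStream(filename) that returns a writable stream with an id property, as the driver’s GridFSBucket does. streamToBucket is a hypothetical helper, and the in-memory bucket is a stand-in so the sketch runs without a database.

```javascript
const { pipeline } = require('stream/promises');
const { Readable, Writable } = require('stream');

// Hypothetical helper: streams `source` into the bucket and resolves with the new file id.
// `bucket` is assumed to behave like the driver's GridFSBucket.
async function streamToBucket(bucket, filename, source) {
  const uploadStream = bucket.openUploadStream(filename);
  await pipeline(source, uploadStream); // rejects if either stream fails
  return uploadStream.id;
}

// Stand-in bucket for demonstration: collects bytes in memory instead of MongoDB.
function createFakeBucket() {
  const stored = {};
  return {
    stored,
    openUploadStream(filename) {
      const parts = [];
      const stream = new Writable({
        write(chunk, _encoding, callback) {
          parts.push(chunk);
          callback();
        },
        final(callback) {
          stored[filename] = Buffer.concat(parts);
          callback();
        },
      });
      stream.id = 'fake-id-1';
      return stream;
    },
  };
}

const demoBucket = createFakeBucket();
const demoSource = Readable.from([Buffer.from('hello '), Buffer.from('world')]);
const demoUpload = streamToBucket(demoBucket, 'hello.txt', demoSource);
demoUpload.then((id) => console.log(id, demoBucket.stored['hello.txt'].toString()));
```

In an Express route, req would be passed as the source, and a rejected promise would map to a 500 response.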

Streaming Large File Downloads:

Similarly, for file retrieval, we can stream the file from GridFS to the client.

app.get('/download/:filename', (req, res) => {

const { filename } = req.params;

// Create a read stream to GridFS

const readstream = gfs.createReadStream({ filename });

readstream.on('error', (err) => {

res.status(404).json({ error: 'File not found' });

});

// Stream the file to the client

res.setHeader('Content-Disposition', 'attachment; filename=' + filename);

readstream.pipe(res);

});

Explanation:

- res.setHeader(‘Content-Disposition’, ‘attachment; filename=’ + filename): Sets the header to suggest a file download to the client.

- readstream.pipe(res): Streams the file content directly to the client, allowing them to download the file.
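
Concatenating the raw filename into the header can break for names that contain spaces, quotes, or non-ASCII characters. The sketch below builds a safer header value from a quoted ASCII fallback plus the filename* parameter described in RFC 6266. The helper name contentDisposition is hypothetical.

```javascript
// Hypothetical helper: builds a Content-Disposition value that survives
// spaces, quotes, and non-ASCII characters in the filename.
function contentDisposition(filename) {
  // ASCII fallback for older clients: replace anything outside printable ASCII,
  // plus double quotes and backslashes, with an underscore.
  const fallback = filename.replace(/[^\x20-\x7e]|["\\]/g, '_');

  // Percent-encoding for the filename* parameter.
  // encodeURIComponent leaves ' ( ) * unescaped, so escape them by hand.
  const encoded = encodeURIComponent(filename).replace(
    /['()*]/g,
    (ch) => '%' + ch.charCodeAt(0).toString(16).toUpperCase()
  );

  return `attachment; filename="${fallback}"; filename*=UTF-8''${encoded}`;
}

console.log(contentDisposition('annual report.pdf'));
console.log(contentDisposition('r\u00e9sum\u00e9.pdf')); // a name with accented characters
```

The route would then call res.setHeader('Content-Disposition', contentDisposition(filename)).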

Output:

With these changes, uploads and downloads are processed piece by piece, so the server’s memory use stays small regardless of the size of the file.

Working with Metadata

Sometimes, you might want to store additional metadata with your files in GridFS. Metadata can include information like the file’s uploader, description, or any other custom fields.

Storing Metadata:

app.post('/upload', upload.single('file'), (req, res) => {

const file = req.file;

const { uploader, description } = req.body;

// Create a write stream to GridFS with metadata

const writestream = gfs.createWriteStream({

filename: file.originalname,

metadata: {

uploader: uploader,

description: description,

},

});

// Write the file buffer to GridFS

writestream.write(file.buffer);

writestream.end();

writestream.on('close', (file) => {

res.json({ fileId: file._id, message: 'File uploaded successfully' });

});

});

Explanation:

- metadata: The metadata field allows you to attach custom data to the file in GridFS.

- uploader, description: These fields are taken from the request body and stored as part of the file’s metadata.

Querying Files by Metadata:

You can also query files by their metadata using MongoDB’s powerful querying capabilities.

app.get('/files/uploader/:uploader', (req, res) => {

const { uploader } = req.params;

// Find files with the specified uploader

gfs.files.find({ 'metadata.uploader': uploader }).toArray((err, files) => {

if (err) return res.status(500).json({ error: 'Failed to query files' });

if (!files || files.length === 0) {

return res.status(404).json({ error: 'No files found' });

}

res.json(files);

});

});

Explanation:

- gfs.files.find({ 'metadata.uploader': uploader }): Queries the files collection (uploads.files in this setup) for documents where the uploader matches the specified value.

- toArray(): Converts the cursor returned by the query into an array of documents.
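
Because file documents are ordinary MongoDB documents, metadata conditions can be combined with standard GridFS fields such as uploadDate. The sketch below builds such a filter from optional inputs. buildFileFilter and its parameter names are hypothetical, while metadata.uploader matches the field stored in the upload example above.

```javascript
// Hypothetical helper: turns optional search inputs into a MongoDB filter
// for the GridFS files collection.
function buildFileFilter({ uploader, uploadedAfter } = {}) {
  const filter = {};
  if (uploader) {
    // String() keeps the value a plain string even if parsed input delivers an object.
    filter['metadata.uploader'] = String(uploader);
  }
  if (uploadedAfter) {
    const date = new Date(uploadedAfter);
    // Ignore values that do not parse to a valid date.
    if (!Number.isNaN(date.getTime())) {
      filter.uploadDate = { $gte: date }; // uploadDate is a standard GridFS field
    }
  }
  return filter;
}

console.log(buildFileFilter({ uploader: 'alice', uploadedAfter: '2024-01-01' }));
```

The resulting object can be passed directly to gfs.files.find(...).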

Output:

This endpoint will return a list of files uploaded by a specific user, allowing you to organize and retrieve files based on custom criteria.

Error Handling and Best Practices

Handling Errors Gracefully

Proper error handling is crucial in any application. With GridFS, you should be prepared to handle various errors, such as connection issues, file not found errors, and stream errors.

Basic Error Handling Example:

app.get('/file/:filename', (req, res) => {

const { filename } = req.params;

const readstream = gfs.createReadStream({ filename });

readstream.on('error', (err) => {

if (err.code === 'ENOENT') {

return res.status(404).json({ error: 'File not found' });

}

return res.status(500).json({ error: 'An error occurred while retrieving the file' });

});

readstream.pipe(res);

});

Explanation:

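- if (err.code === 'ENOENT'): Treats the error as a missing file. ENOENT is the code Node.js uses for missing files on disk; whether a GridFS stream error carries it depends on the library and version, so log the error object once and adjust the check to what your setup actually emits.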

- res.status(500): Sends a 500 Internal Server Error status if an unexpected error occurs.
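
Since error codes on stream errors vary between libraries and versions, an alternative is to look the file up before opening a stream and decide on a 404 from the query result. The sketch below assumes a bucket with a find(filter) method that returns a cursor supporting limit() and toArray(), as the driver’s GridFSBucket does. findFileByName is a hypothetical helper, and the fake bucket exists only so the sketch runs without a database.

```javascript
// Hypothetical helper: resolves with the file document, or null if none matches.
async function findFileByName(bucket, filename) {
  const files = await bucket.find({ filename }).limit(1).toArray();
  return files.length > 0 ? files[0] : null;
}

// Stand-in bucket backed by an array instead of MongoDB (demonstration only).
function createFakeBucket(docs) {
  return {
    find(filter) {
      let matches = docs.filter((doc) => doc.filename === filter.filename);
      const cursor = {
        limit(count) {
          matches = matches.slice(0, count);
          return cursor;
        },
        toArray: async () => matches,
      };
      return cursor;
    },
  };
}

const lookupBucket = createFakeBucket([{ _id: 1, filename: 'a.txt', length: 3 }]);
const lookups = Promise.all([
  findFileByName(lookupBucket, 'a.txt'),
  findFileByName(lookupBucket, 'missing.txt'),
]);
lookups.then(([found, missing]) => console.log(found, missing));
```

A route would respond with 404 when the helper resolves to null and only then create the read stream, leaving the stream’s error handler to report genuine failures as 500.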

Security Considerations

When working with file uploads, it’s important to validate and sanitize user inputs to prevent security vulnerabilities, such as file path traversal attacks or denial of service (DoS) attacks.

Basic Validation Example:

const path = require('path');

app.post('/upload', upload.single('file'), (req, res) => {

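const file = req.file;

// Reject requests that did not include a file in the "file" field

if (!file) return res.status(400).json({ error: 'No file uploaded' });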

const safeFilename = path.basename(file.originalname);

const writestream = gfs.createWriteStream({

filename: safeFilename,

});

writestream.write(file.buffer);

writestream.end();

writestream.on('close', (file) => {

res.json({ fileId: file._id, message: 'File uploaded successfully' });

});

});

Explanation:

- path.basename(file.originalname): Strips any directory path components from the filename, ensuring that only the base filename is used.
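
path.basename follows the separator rules of the platform the server runs on, so on Linux a Windows-style name such as ..\..\evil.txt passes through unchanged. A slightly stricter sketch follows. sanitizeFilename, the 255-character cap, and the unnamed fallback are hypothetical choices, not requirements of GridFS.

```javascript
const path = require('path');

// Hypothetical helper: reduces a client-supplied name to a plain filename.
function sanitizeFilename(originalName) {
  // path.win32 treats both "/" and "\" as separators, regardless of the host OS.
  const base = path.win32.basename(String(originalName));
  // Drop control characters, then cap the length.
  const cleaned = base.replace(/[\x00-\x1f\x7f]/g, '').slice(0, 255);
  // Replace names that are empty or consist only of dots.
  return /^\.*$/.test(cleaned) ? 'unnamed' : cleaned;
}

console.log(sanitizeFilename('../../etc/passwd'));       // passwd
console.log(sanitizeFilename('C:\\Users\\a\\evil.txt')); // evil.txt
console.log(sanitizeFilename('..'));                     // unnamed
```

For the denial-of-service side, multer accepts a limits option (for example limits: { fileSize: ... }) that caps the upload size before the buffer reaches this code.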

Performance Optimization

When dealing with high traffic or large files, you may want to consider additional performance optimizations.

- Use MongoDB Replica Sets: This ensures high availability and fault tolerance.

- Enable GridFS Indexes: Ensure that the default GridFS indexes (on files_id and n) are in place and consider adding additional indexes based on your queries.

- Chunk Size Tuning: The default chunk size in GridFS is 255 KB. Depending on your file sizes and access patterns, you may want to adjust this for better performance.

Custom Chunk Size Example:

const writestream = gfs.createWriteStream({

filename: safeFilename,

chunkSize: 1024 * 1024, // 1 MB chunks

});

Explanation:

- chunkSize: 1024 * 1024: Sets the chunk size to 1 MB, which may improve performance for larger files.
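
The trade-off can be quantified: a larger chunk size means fewer chunk documents per file, at the cost of reading more data per chunk. The arithmetic below is a sketch, and chunkCount is a hypothetical helper.

```javascript
// Hypothetical helper: number of chunk documents needed for a file.
function chunkCount(fileSizeBytes, chunkSizeBytes) {
  return Math.ceil(fileSizeBytes / chunkSizeBytes);
}

const MB = 1024 * 1024;
const fileSize = 100 * MB;

console.log(chunkCount(fileSize, 255 * 1024)); // 402 chunks at the 255 KB default
console.log(chunkCount(fileSize, 1 * MB));     // 100 chunks at 1 MB
```

Each chunk is itself a document, so the chunk size has to stay below the 16 MB document limit.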

Key Takeaways:

- GridFS is ideal for handling files larger than MongoDB’s document size limit of 16 MB.

- Node.js provides a convenient way to interact with GridFS, especially when combined with Express for building web applications.

- Error handling and security are critical aspects to consider when working with file uploads and downloads.

- Performance optimization can significantly impact the efficiency of your application, especially at scale.

GridFS provides a robust and scalable solution for storing and retrieving large files in MongoDB. By leveraging GridFS in Node.js, you can build applications that handle file storage efficiently, even when dealing with large datasets. This chapter has covered setting up GridFS, uploading and retrieving files, handling metadata, and advanced topics such as streaming large files and implementing best practices.

Happy coding! ❤️