Advanced Aggregation Pipeline Techniques

MongoDB's Aggregation Framework is an incredibly powerful tool for analyzing and transforming data, especially as you begin to use advanced features. This chapter explores techniques that enable sophisticated data operations through the Aggregation Pipeline, going beyond basic stages to cover advanced operators, specialized stages, and performance-optimizing techniques.

Introduction to Advanced Aggregation Techniques

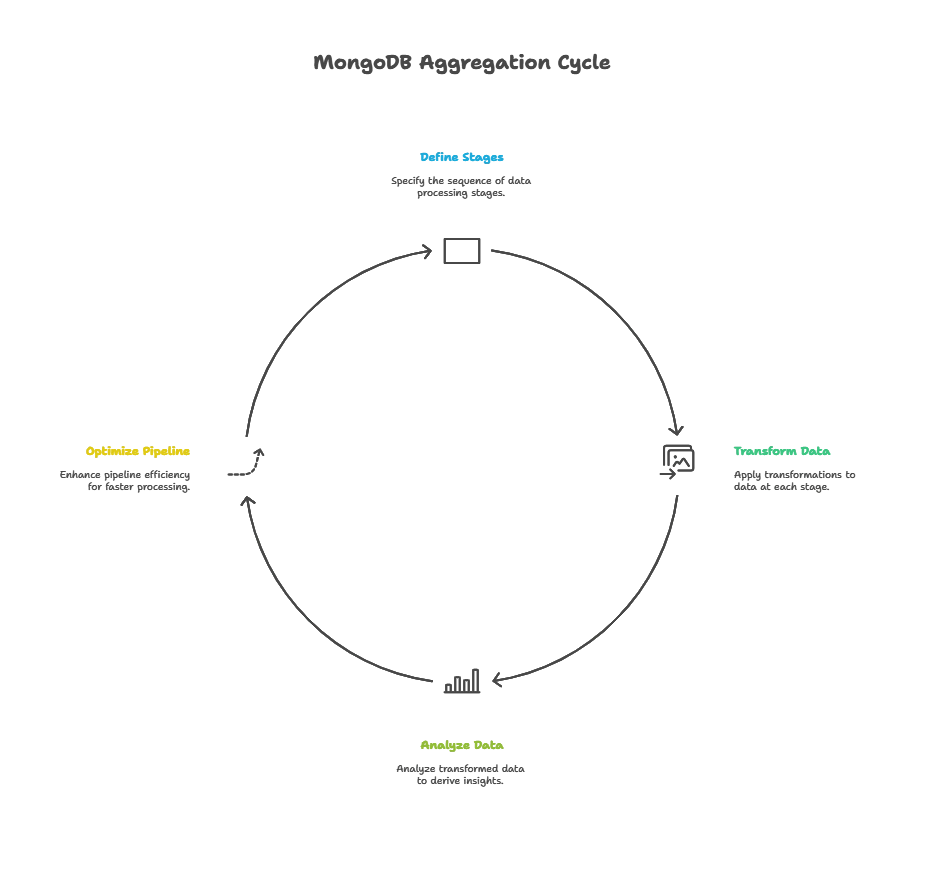

Overview of the Aggregation Framework

MongoDB’s Aggregation Framework allows you to process data using a sequence of stages in a pipeline. These stages transform and analyze data, producing aggregated results based on specified criteria.

Why Use Advanced Aggregation Techniques?

As data needs become more complex, advanced aggregation techniques enable you to:

- Perform complex calculations and data transformations.

- Conduct multi-stage processing.

- Optimize pipelines for faster data processing.

The $facet Stage for Multi-Faceted Analysis

The $facet stage enables you to perform multiple sub-pipelines in a single aggregation, allowing simultaneous analysis of the same data.

Using $facet for Multiple Results

Example:

db.sales.aggregate([

{

$facet: {

"highValueSales": [{ $match: { amount: { $gte: 1000 } } }],

"lowValueSales": [{ $match: { amount: { $lt: 1000 } } }],

"averageSale": [{ $group: { _id: null, avgAmount: { $avg: "$amount" } } }]

}

}

])

Explanation:

- The

highValueSalesfacet filters sales where the amount is 1000 or more. - The

lowValueSalesfacet filters sales where the amount is less than 1000. - The

averageSalefacet calculates the average sale amount.

Output: This structure provides each result in a separate array, allowing simultaneous calculations without rerunning the pipeline.

Exploring $bucket and $bucketAuto for Binning Data

Binning is a technique to group data into discrete intervals, allowing for effective categorization.

Using $bucket to Create Custom Bins

Example:

db.customers.aggregate([

{

$bucket: {

groupBy: "$age",

boundaries: [18, 30, 40, 50, 60, 70],

default: "Others",

output: {

"count": { $sum: 1 },

"averageIncome": { $avg: "$income" }

}

}

}

])

Explanation:

- Groups customers by age into custom intervals.

- Each bucket calculates the count and average income for that age range.

- Customers outside the specified boundaries fall under “Others.”

Using $bucketAuto for Automatic Binning

$bucketAuto automatically creates buckets based on data distribution and a specified bucket count.

Example:

db.sales.aggregate([

{

$bucketAuto: {

groupBy: "$amount",

buckets: 5,

output: { "count": { $sum: 1 }, "averageAmount": { $avg: "$amount" } }

}

}

])

Explanation: Creates 5 buckets with calculated boundaries, producing count and average for each bucket based on sales amount.

Applying $graphLookup for Recursive Searches

The $graphLookup stage allows for recursive lookups, useful for working with hierarchical or graph data.

Using $graphLookup for Hierarchical Data

$graphLookup enables traversing documents within a collection to return connected data.

Example:

db.employees.aggregate([

{

$graphLookup: {

from: "employees",

startWith: "$directReports",

connectFromField: "directReports",

connectToField: "employeeId",

as: "employeeHierarchy",

maxDepth: 3

}

}

])

Explanation:

- Retrieves hierarchical data up to 3 levels deep, starting from each employee’s direct reports.

- The output contains each employee with their entire reporting chain.

Improving Performance with $merge for Materialized Views

The $merge stage outputs pipeline results into another collection, allowing for efficient data processing and reusability.

Using $merge to Store Aggregated Data

Example:

db.orders.aggregate([

{

$group: {

_id: "$productId",

totalSales: { $sum: "$quantity" }

}

},

{

$merge: { into: "productSales", whenMatched: "merge", whenNotMatched: "insert" }

}

])

Explanation:

- Aggregates total sales by product.

- Stores the results in

productSales, either merging or inserting new data based on the match criteria.

Benefit: Reduces processing time for recurring queries by materializing views for repeated analysis.

Data Transformation with $replaceRoot and $replaceWith

These stages allow replacing an entire document with a sub-document or computed value.

Using $replaceRoot with Embedded Documents

Example:

db.orders.aggregate([

{ $match: { status: "delivered" } },

{ $replaceRoot: { newRoot: "$deliveryDetails" } }

])

Explanation: Replaces each document with the content of the deliveryDetails field, providing a streamlined output with only delivery-related data.

Using $replaceWith for Computed Values

$replaceWith allows complex expressions as the new root document.

Example:

db.customers.aggregate([

{

$replaceWith: {

fullName: { $concat: ["$firstName", " ", "$lastName"] },

contactInfo: { phone: "$phone", email: "$email" }

}

}

])

Explanation: Creates a simpler output structure with full name and contact details by replacing each document with a new structure.

Optimizing with Index-Aware $sort and $limit Stages

Combining $sort with $limit in indexed queries improves efficiency, especially in large datasets.

Using Indexes to Speed up Sorting

Example:

db.products.createIndex({ price: 1 })

db.products.aggregate([

{ $sort: { price: 1 } },

{ $limit: 10 }

])

Explanation:

- Creates an ascending index on

price. - Queries sorted results with

$limit, leveraging the index to minimize processing.

Performance Benefit: Using indexes with $sort and $limit drastically reduces load times by focusing only on relevant data.

Using the $setWindowFields Stage for Windowed Aggregations

The $setWindowFields stage enables calculations across a window of documents, useful for running totals, moving averages, etc.

Calculating Moving Averages with $setWindowFields

Example:

db.sales.aggregate([

{

$setWindowFields: {

partitionBy: "$region",

sortBy: { date: 1 },

output: {

averageSales: {

$avg: "$sales",

window: { documents: ["-1", "1"] }

}

}

}

}

])

Explanation: Calculates a 3-day moving average for sales within each region by partitioning and sorting by date.

Conditional Logic with $ifNull, $cond, and $switch

Handling Null Values with $ifNull

Example:

db.orders.aggregate([

{ $project: { shippingCost: { $ifNull: ["$shippingCost", 0] } } }

])

Explanation: Sets shippingCost to 0 if null, useful for calculations involving optional fields.

Using $cond for Conditional Calculations

Example:

db.sales.aggregate([

{

$project: {

priceCategory: {

$cond: { if: { $gte: ["$price", 1000] }, then: "High", else: "Low" }

}

}

}

])

Explanation: Classifies products as High or Low based on price.

Advanced Conditionals with $switch

Example:

db.orders.aggregate([

{

$project: {

shippingCategory: {

$switch: {

branches: [

{ case: { $gte: ["$weight", 20] }, then: "Heavy" },

{ case: { $gte: ["$weight", 10] }, then: "Medium" }

],

default: "Light"

}

}

}

}

])

Explanation: Categorizes shipping weights into Heavy, Medium, and Light.

The advanced techniques in MongoDB’s Aggregation Framework enable you to perform highly complex data transformations and analytics directly within the database. By leveraging stages like $facet, $graphLookup, $bucketAuto, and $setWindowFields, you can design pipelines that address nuanced data analysis and reporting needs. Happy Coding!❤️