Implementing Distributed Caching for Performance Improvement in Express.js

Caching is a fundamental technique for improving application performance by temporarily storing frequently accessed data. When building scalable applications, distributed caching becomes essential for managing large-scale traffic while maintaining low latency. This chapter dives deep into implementing distributed caching in Express.js, covering foundational concepts, practical steps, advanced patterns, and real-world examples.

Introduction to Caching

What is Caching?

Caching is the process of storing data in a temporary storage layer to serve future requests faster. Instead of fetching the data from a slow database or external API on every request, the application retrieves it from a faster cache layer.

Why Use Caching?

- Reduces Latency: Speeds up response times.

- Minimizes Load: Decreases the load on databases and servers.

- Enhances Scalability: Handles large-scale traffic efficiently.

Understanding Distributed Caching

What is Distributed Caching?

Distributed caching refers to a caching system spread across multiple nodes or servers. Instead of relying on a single cache store, data is distributed among various nodes to ensure scalability and fault tolerance.

Benefits of Distributed Caching:

- Scalability: Handles more data and traffic by adding nodes.

- Fault Tolerance: Ensures availability by replicating data across nodes.

- Load Balancing: Distributes cache requests across multiple servers.

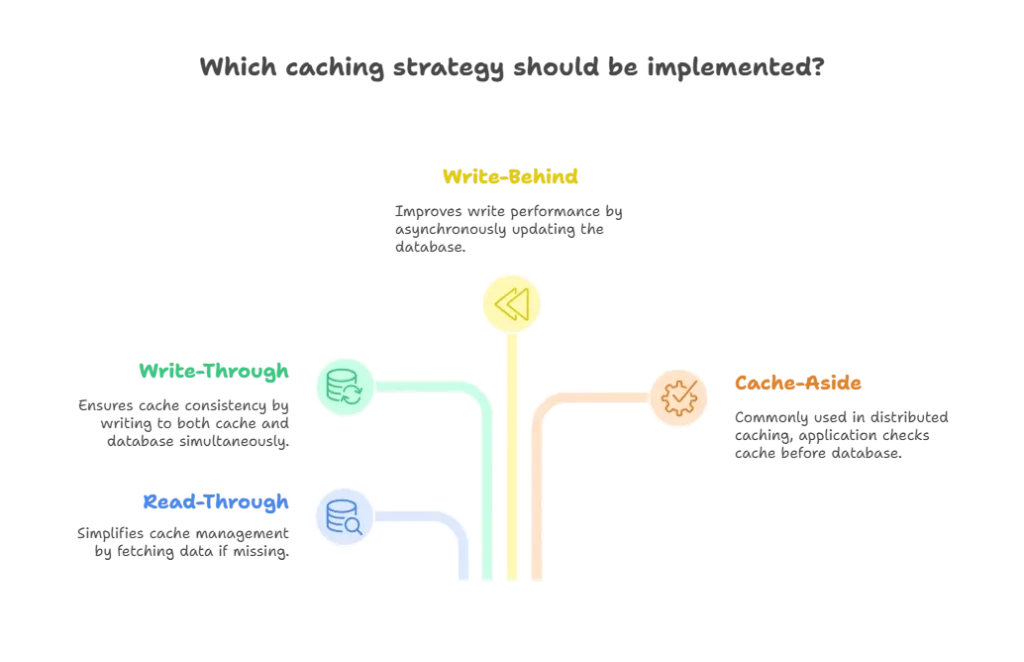

Caching Strategies

1. Read-Through

- On a cache miss, the cache itself loads the data from the database, stores it, and returns it.

- Simplifies application code, because the loading logic lives in the cache layer.

2. Write-Through

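- Writes data to the cache and the database as part of the same operation; the write succeeds only after both are updated.

- Keeps the cache consistent with the database, at the cost of extra write latency.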

3. Write-Behind

- Writes data to the cache first, then updates the database asynchronously.

- Improves write performance, but the database lags behind the cache, and pending writes can be lost if the cache fails before they are persisted.

4. Cache-Aside

- Application code checks the cache first; on a miss, it reads from the database and writes the result into the cache.

- The most commonly used pattern in distributed caching.
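To make the four strategies concrete, here is a minimal sketch in plain JavaScript. It assumes two in-process `Map`s as stand-ins for a real database (`db`) and a shared cache (`cache`); the names `ReadThroughCache`, `writeThrough`, `writeBehind`, `flushWrites`, and `cacheAsideGet` are hypothetical and chosen only for illustration. In a real deployment the cache would be Redis or Memcached and every call would be asynchronous.

```javascript
// Hypothetical stand-ins: `db` plays the database, `cache` plays a shared cache.
const db = new Map([['user:1', { name: 'Ada' }]]);
const cache = new Map();

// 1. Read-through: callers only ask the cache; the cache loads misses itself.
class ReadThroughCache {
  constructor(loader) {
    this.loader = loader; // Called by the cache (not the caller) on a miss
    this.store = new Map();
  }

  get(key) {
    if (!this.store.has(key)) {
      const value = this.loader(key);
      if (value !== undefined) this.store.set(key, value);
      return value;
    }
    return this.store.get(key);
  }
}

// 2. Write-through: the write finishes only after both stores are updated.
function writeThrough(key, value) {
  db.set(key, value);
  cache.set(key, value);
}

// 3. Write-behind: update the cache now and queue the database write.
const pendingWrites = [];

function writeBehind(key, value) {
  cache.set(key, value);
  pendingWrites.push([key, value]); // Lost if the process dies before a flush
}

// Would normally run on a timer or in a background worker.
function flushWrites() {
  while (pendingWrites.length > 0) {
    const [key, value] = pendingWrites.shift();
    db.set(key, value);
  }
}

// 4. Cache-aside: the application checks the cache, reads the database on a
// miss, and populates the cache itself.
function cacheAsideGet(key) {
  if (cache.has(key)) return cache.get(key);
  const value = db.get(key);
  if (value !== undefined) cache.set(key, value);
  return value;
}
```

In the write-behind sketch, anything still in `pendingWrites` when the process crashes never reaches `db`, which is the durability risk described above.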

Setting up a Distributed Cache

Choosing a Distributed Caching System

Popular tools include:

- Redis: An in-memory data structure store with rich features.

- Memcached: A lightweight, high-performance distributed cache.

Installing Redis

Install Redis on your system, or use a managed cloud service such as AWS ElastiCache or Azure Cache for Redis.

Installation Command:

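```shell
# Install Redis locally (Debian/Ubuntu)
sudo apt update
sudo apt install redis-server

# Verify that the server is running (it should reply PONG)
redis-cli ping
```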

Implementing Distributed Caching in Express.js

Simple Cache Example

Middleware for Caching

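```javascript
const express = require('express');

const app = express();
const cache = new Map(); // Simple in-memory cache, local to this process

function cacheMiddleware(req, res, next) {
  if (req.method !== 'GET') return next(); // Only cache read-only requests

  const key = req.originalUrl;

  if (cache.has(key)) {
    const { contentType, body } = cache.get(key);
    if (contentType) res.set('Content-Type', contentType);
    return res.send(body); // Serve from cache
  }

  const originalSend = res.send.bind(res);

  res.send = (body) => {
    // res.send(object) re-enters res.send with the serialized JSON string,
    // so only string bodies from successful responses are stored.
    if (res.statusCode === 200 && typeof body === 'string') {
      cache.set(key, { contentType: res.get('Content-Type'), body });
    }
    return originalSend(body);
  };

  next();
}

app.use(cacheMiddleware);

app.get('/data', (req, res) => {
  // Simulate a slow database call
  setTimeout(() => res.send({ data: 'Hello, World!' }), 2000);
});

app.listen(3000, () => console.log('Server running on port 3000'));
```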

Explanation:

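- The middleware checks whether a response for the request URL is already cached.

- If it is, the middleware serves it directly; otherwise, it lets the route handler run and caches the response it sends.

- The `Map` lives in one process's memory, so each server instance keeps its own copy and entries never expire. A shared store such as Redis addresses both limitations.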
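The `Map` in the middleware above never expires entries and grows without limit. Below is a minimal sketch of a bounded, expiring replacement; `TtlCache`, its options, and the injectable `now` clock are hypothetical names introduced here. It evicts the least recently used entry by relying on `Map` preserving insertion order. It is still local to one process, so it does not solve the distribution problem.

```javascript
// Hypothetical TtlCache: a bounded, expiring alternative to a plain Map.
// `now` is injectable so expiry can be tested without waiting.
class TtlCache {
  constructor({ ttlMs, maxEntries, now = Date.now }) {
    this.ttlMs = ttlMs;
    this.maxEntries = maxEntries;
    this.now = now;
    this.entries = new Map(); // key -> { value, expiresAt }; Map keeps insertion order
  }

  get(key) {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (this.now() >= entry.expiresAt) {
      this.entries.delete(key); // Lazily drop expired entries on read
      return undefined;
    }
    // Re-insert so this key becomes the most recently used
    this.entries.delete(key);
    this.entries.set(key, entry);
    return entry.value;
  }

  set(key, value) {
    this.entries.delete(key);
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
    // Over capacity: evict the least recently used entry (first in iteration order)
    if (this.entries.size > this.maxEntries) {
      const oldestKey = this.entries.keys().next().value;
      this.entries.delete(oldestKey);
    }
  }
}
```

To use it in the middleware, you would replace the `cache.has(key)` check with a test that `cache.get(key)` is not `undefined`, since expired entries only disappear when read.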

Using Redis for Distributed Caching

Install the Redis Client Library

```shell
npm install redis
```

Redis Integration Example

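```javascript
const express = require('express');
const { createClient } = require('redis'); // node-redis v4+ (promise-based API)

const app = express();
const client = createClient(); // Connects to redis://localhost:6379 by default

client.on('error', (err) => console.error('Redis Error:', err));

// Middleware for Redis caching
async function redisCache(req, res, next) {
  if (req.method !== 'GET') return next(); // Only cache read-only requests

  const key = req.originalUrl;

  try {
    const data = await client.get(key); // Resolves to null on a cache miss
    if (data !== null) {
      return res.type('json').send(data); // Serve the cached JSON string
    }
  } catch (err) {
    console.error('Redis read failed, skipping cache:', err);
    return next(); // Treat Redis errors as a miss instead of crashing
  }

  const originalJson = res.json.bind(res);

  res.json = (body) => {
    if (res.statusCode === 200) {
      // Cache for 1 hour; a failed write must not break the response
      client
        .set(key, JSON.stringify(body), { EX: 3600 })
        .catch((err) => console.error('Redis write failed:', err));
    }
    return originalJson(body);
  };

  next();
}

app.use(redisCache);

app.get('/data', (req, res) => {
  // Simulate a slow database call
  setTimeout(() => res.json({ data: 'Hello, Redis!' }), 2000);
});

// node-redis v4+ requires an explicit connect() before issuing commands
client.connect().then(() => {
  app.listen(3000, () => console.log('Server running on port 3000'));
});
```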

Explanation:

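- Redis `GET` fetches data from the cache; on a miss, the client returns `null`.

- Redis `SETEX` (or `SET` with the `EX` option) stores data in the cache with an expiration time, here 3600 seconds (one hour).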
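The middleware above caches whole HTTP responses. To cache individual query results instead, the same cache-aside logic fits in a small helper. This is a sketch: `getOrSet` and `createFakeClient` are hypothetical names, and the helper assumes a client whose `get(key)` resolves to a string or `null` and whose `set(key, value, { EX: seconds })` sets a TTL, which is the promise-based shape of node-redis v4 and later. The fake client lets you run the sketch without a Redis server; it records TTLs but never expires keys.

```javascript
// Hypothetical helper: cache-aside lookup against any client with a
// promise-returning get(key) and set(key, value, { EX: seconds }).
// Values are stored as JSON strings.
async function getOrSet(client, key, ttlSeconds, loader) {
  const cached = await client.get(key);
  if (cached !== null) return JSON.parse(cached); // Cache hit

  const value = await loader(); // Cache miss: load from the source of truth
  await client.set(key, JSON.stringify(value), { EX: ttlSeconds });
  return value;
}

// In-memory fake with the same method shape, for trying the helper without Redis.
// It records the requested TTLs but does not expire keys.
function createFakeClient() {
  const data = new Map();
  const ttls = new Map();
  return {
    async get(key) {
      return data.has(key) ? data.get(key) : null;
    },
    async set(key, value, options = {}) {
      data.set(key, value);
      if (options.EX) ttls.set(key, options.EX);
      return 'OK';
    },
    ttls,
  };
}
```

In a route handler you might call `getOrSet(client, 'user:42', 3600, () => loadUser(42))`, where `loadUser` is a hypothetical database query, and pass the result to `res.json`.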

Advanced Distributed Caching Techniques

Cache Invalidation Strategies

- Time-Based Expiry: Automatically removes stale data after a set duration.

- Explicit Invalidation: Manually removes specific cache keys.

- Dependency Invalidation: Removes cached entries when the data they were built from changes.

Example of explicit invalidation:

```javascript
client.del('/data'); // Manually invalidate cache
```
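Explicit invalidation is simple when one cache key maps to one record. Dependency invalidation also needs to know which cached responses were built from which records. The sketch below keeps that mapping in a hypothetical `DependencyIndex` class and uses a `Map` as the cache so it runs on its own; with a Redis client you would issue `client.del(key)` for each dependent key instead of `Map.prototype.delete`. Because this index lives in one process's memory, a multi-instance deployment would need to store it in the shared cache as well (for example, as Redis sets).

```javascript
// Hypothetical dependency index: remembers which cached keys were built from
// which records, so a change to one record can invalidate all dependent keys.
class DependencyIndex {
  constructor(cache) {
    this.cache = cache; // Here a Map; with Redis, call client.del(key) instead
    this.dependents = new Map(); // recordId -> Set of cache keys
  }

  // Record that `cacheKey` was built from the given record IDs.
  track(cacheKey, recordIds) {
    for (const id of recordIds) {
      if (!this.dependents.has(id)) this.dependents.set(id, new Set());
      this.dependents.get(id).add(cacheKey);
    }
  }

  // Delete every cached key that depends on `recordId` and return those keys.
  // Deleting a key that is already gone is harmless.
  invalidate(recordId) {
    const keys = [...(this.dependents.get(recordId) ?? [])];
    for (const key of keys) this.cache.delete(key);
    this.dependents.delete(recordId);
    return keys;
  }
}
```

A write handler would update the database first and then call `invalidate` with the changed record's ID.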

Cache Sharding

- Distributes cache data across multiple nodes for scalability.

- Redis Cluster supports built-in sharding: each key maps to one of 16,384 hash slots, and each primary node serves a subset of those slots.
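Redis Cluster assigns a key to a slot by computing CRC16 of the key modulo 16384. Cluster-aware clients perform this calculation to route commands, so application code normally does not need it; the sketch below reproduces the slot function from the Redis Cluster specification only to show how keys are distributed. The function names `crc16` and `hashSlot` are illustrative. It also handles hash tags: when a key contains `{...}` with at least one character inside, only that part is hashed, which keeps related keys in the same slot. This matters because multi-key commands in Redis Cluster only work when all keys share a slot.

```javascript
// CRC16-XMODEM (polynomial 0x1021, initial value 0), the checksum Redis Cluster uses.
function crc16(bytes) {
  let crc = 0;
  for (const byte of bytes) {
    crc ^= byte << 8;
    for (let bit = 0; bit < 8; bit++) {
      crc = crc & 0x8000 ? (crc << 1) ^ 0x1021 : crc << 1;
      crc &= 0xffff; // Keep the value within 16 bits
    }
  }
  return crc;
}

// Map a key to one of the 16384 hash slots. A non-empty hash tag such as
// "{user:42}" means only the tag is hashed, so related keys share a slot.
function hashSlot(key) {
  let hashed = key;
  const open = key.indexOf('{');
  if (open !== -1) {
    const close = key.indexOf('}', open + 1);
    if (close > open + 1) hashed = key.slice(open + 1, close);
  }
  return crc16(Buffer.from(hashed, 'utf8')) % 16384;
}
```

For example, `hashSlot('{user:42}:profile')` and `hashSlot('{user:42}:sessions')` return the same slot, so both keys live on the same node.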

Cache Consistency Models

- Strong Consistency: Every read returns the most recent write, whichever node serves it.

- Eventual Consistency: Updates propagate to all nodes over time, so reads may briefly return stale values.

Monitoring and Scaling Cache

Monitoring Cache Performance

- Use tools like RedisInsight, or Prometheus with a Redis exporter, for metrics.

- Monitor hit rate, memory usage, and latency.
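Redis reports cumulative lookup counters in the `stats` section of the `INFO` command as `keyspace_hits` and `keyspace_misses`, so the hit rate is hits / (hits + misses). A minimal sketch of that calculation follows; `hitRateFromInfo` is a hypothetical helper, and it assumes you fetch the raw INFO text yourself (node-redis v4+ exposes `client.info('stats')` for this, but check your client's documentation). The counters accumulate since the server started or since `CONFIG RESETSTAT`, so for a live dashboard you would compare two samples over time.

```javascript
// Compute the cache hit rate from the raw text of Redis `INFO stats`.
function hitRateFromInfo(infoText) {
  const fields = {};
  for (const line of infoText.split(/\r?\n/)) {
    const sep = line.indexOf(':');
    // Skip section headers like "# Stats" and blank lines
    if (sep > 0 && !line.startsWith('#')) {
      fields[line.slice(0, sep)] = line.slice(sep + 1).trim();
    }
  }
  const hits = Number(fields.keyspace_hits ?? 0);
  const misses = Number(fields.keyspace_misses ?? 0);
  const total = hits + misses;
  return total === 0 ? null : hits / total; // null when there were no lookups yet
}
```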

Scaling Cache

- Add nodes to a Redis Cluster and move hash slots onto them to scale horizontally.

- Use replicas (with Redis Sentinel or Redis Cluster's automatic failover) to ensure high availability.

Best Practices for Distributed Caching

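- Set a TTL (time-to-live) on cache keys to bound how stale cached data can become.

- Cache only data that is read frequently and is expensive to produce.

- Monitor memory usage, and configure `maxmemory` with an eviction policy such as `allkeys-lru`, to prevent out-of-memory errors.

- Implement fallback mechanisms so the application keeps serving requests from the database when the cache is slow or unavailable.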
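One way to implement the fallback practice is to treat the cache as optional: bound every cache call with a timeout and, on any error, go straight to the source of truth. In the sketch below, `withTimeout` and `getWithFallback` are hypothetical helpers, the 100 ms default is an arbitrary assumption to tune for your latency budget, and `client` is anything with a promise-returning `get(key)` (such as a node-redis v4+ client). The timeout matters because, depending on configuration, a client may queue commands while reconnecting instead of failing fast.

```javascript
// Race a cache call against a timer so a slow or unreachable cache cannot
// stall the request. The timer is cleared once either side settles.
function withTimeout(promise, ms) {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error(`Cache timed out after ${ms} ms`)), ms);
  });
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

// Read from the cache, but fall back to the loader (e.g. a database query)
// if the cache errors, times out, or misses.
async function getWithFallback(client, key, loader, timeoutMs = 100) {
  try {
    const cached = await withTimeout(client.get(key), timeoutMs);
    if (cached !== null) return JSON.parse(cached);
  } catch (err) {
    console.warn(`Cache unavailable for ${key}, using fallback:`, err.message);
  }
  return loader();
}
```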

Distributed caching is a powerful technique for improving the performance and scalability of Express.js applications. By storing frequently accessed data in systems like Redis, applications can handle large-scale traffic with lower latency. This chapter covered the basics of caching, Redis integration in Express.js, and advanced topics like sharding and monitoring, giving you a foundation for building robust caching solutions. Happy coding! ❤️