Parallel and Distributed Computing

In this chapter, we explore parallel and distributed computing in C. Both techniques solve large problems by breaking them into smaller tasks that can run at the same time: parallel computing spreads the tasks across the cores of one machine, while distributed computing spreads them across several machines connected by a network. Used well, either approach can substantially reduce a program's running time.

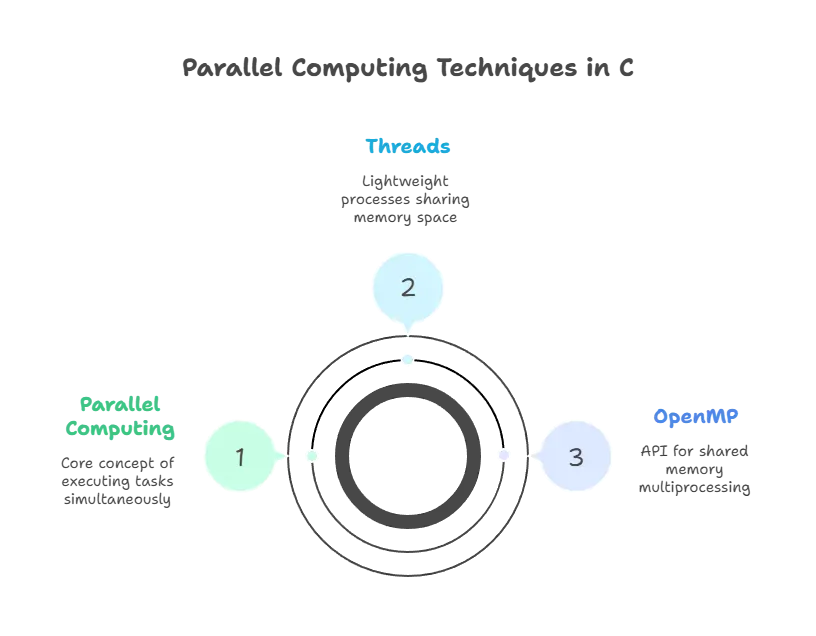

Basics of Parallel Computing

Parallel computing involves executing multiple tasks simultaneously to achieve faster results. In C, we can achieve parallelism using techniques like threads and OpenMP.

Threads

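Threads are lightweight units of execution that run concurrently within a single process. Because they share the same address space, they can exchange data directly through shared variables, but access to that shared data must be synchronized (for example, with mutexes) to avoid race conditions. The example below uses POSIX threads (pthreads).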

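    #include <stdio.h>
    #include <stdlib.h>
    #include <pthread.h>

    #define NUM_THREADS 5

    /* Entry point for each thread: prints the ID passed in through arg. */
    void *thread_function(void *arg) {
        int tid = *((int *)arg);
        printf("Hello from thread %d\n", tid);
        pthread_exit(NULL);
    }

    int main(void) {
        pthread_t threads[NUM_THREADS];
        int thread_args[NUM_THREADS];  /* one slot per thread, so IDs are never overwritten */
        int i;

        for (i = 0; i < NUM_THREADS; i++) {
            thread_args[i] = i;
            if (pthread_create(&threads[i], NULL, thread_function, &thread_args[i]) != 0) {
                fprintf(stderr, "Failed to create thread %d\n", i);
                return EXIT_FAILURE;
            }
        }

        /* Wait for every thread to finish before exiting. */
        for (i = 0; i < NUM_THREADS; i++) {
            pthread_join(threads[i], NULL);
        }

        return 0;
    }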

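Output (the order of the lines may differ from run to run, because the threads are scheduled independently):

    Hello from thread 0
    Hello from thread 1
    Hello from thread 2
    Hello from thread 3
    Hello from thread 4

Explanation: In this example, we create five threads with pthread_create(). Each thread runs thread_function() and prints the ID it receives through its argument. Every thread is given a pointer to its own element of thread_args, so the value cannot change underneath it while the loop keeps running. Finally, pthread_join() makes main() wait for each thread to finish. On most systems the program is compiled with the -pthread option.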

OpenMP

OpenMP is an API for shared-memory parallel programming in C, C++, and Fortran. It simplifies parallelism by letting you mark parallel regions with compiler directives (#pragma omp ...) instead of creating and managing threads by hand.

    #include <stdio.h>
    #include <omp.h>

    #define N 10

    int main() {
        int i, sum = 0;

        #pragma omp parallel for reduction(+:sum)
        for (i = 0; i < N; i++) {
            sum += i;
        }

        printf("Sum: %d\n", sum);

        return 0;
    }

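Output:

    Sum: 45

Explanation: In this example, the #pragma omp parallel for directive divides the loop iterations among a team of threads. The reduction(+:sum) clause gives each thread its own private copy of sum, initialized to 0, and adds the private copies into the original sum when the loop ends, which avoids a data race on the shared variable. With GCC or Clang, OpenMP is enabled with the -fopenmp option; without it the pragma is typically ignored and the loop runs serially.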

Distributed Computing with MPI

Distributed computing coordinates tasks across multiple computers connected by a network. The Message Passing Interface (MPI) is a widely used standard for writing distributed-memory parallel programs; implementations such as Open MPI and MPICH provide it as a library.

MPI Basics

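An MPI program runs as a set of processes, each with its own memory. Every process is identified by a rank within a communicator, and processes cooperate by sending and receiving messages. The first step in any MPI program is to initialize the library and find out the process's rank and the total number of processes.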

    #include <stdio.h>
    #include <mpi.h>

    int main(int argc, char *argv[]) {
        int rank, size;

        MPI_Init(&argc, &argv);
        MPI_Comm_rank(MPI_COMM_WORLD, &rank);
        MPI_Comm_size(MPI_COMM_WORLD, &size);

        printf("Hello from process %d of %d\n", rank, size);

        MPI_Finalize();

        return 0;
    }

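Output when the program is launched with four processes (for example, with mpiexec -n 4; the order of the lines may vary):

    Hello from process 0 of 4
    Hello from process 1 of 4
    Hello from process 2 of 4
    Hello from process 3 of 4

Explanation: In this MPI program, MPI_Init() sets up the MPI environment. Each process then queries its rank and the total number of processes in the MPI_COMM_WORLD communicator and prints both values. MPI_Finalize() shuts the environment down before the program exits.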

MPI Collective Operations

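MPI provides collective communication operations such as broadcast, scatter, gather, and reduce to simplify data exchange among processes. A collective operation involves every process in the communicator, so all of them must make the same call.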

    #include <stdio.h>
    #include <mpi.h>

    #define ROOT 0

    int main(int argc, char *argv[]) {
        int rank, size, data;

        MPI_Init(&argc, &argv);
        MPI_Comm_rank(MPI_COMM_WORLD, &rank);
        MPI_Comm_size(MPI_COMM_WORLD, &size);

        /* Only the root has the value before the broadcast. */
        if (rank == ROOT) {
            data = 123;
        }

        /* Every process calls MPI_Bcast; non-root processes have data overwritten. */
        MPI_Bcast(&data, 1, MPI_INT, ROOT, MPI_COMM_WORLD);

        printf("Process %d received data: %d\n", rank, data);

        MPI_Finalize();

        return 0;
    }

Output with four processes (the order of the lines may vary):

    Process 0 received data: 123
    Process 1 received data: 123
    Process 2 received data: 123
    Process 3 received data: 123

Explanation: In this example, process 0 (the root) sets data to 123, and MPI_Bcast() copies that value to every other process in MPI_COMM_WORLD. All processes, including the root, make the same MPI_Bcast() call; afterwards each one holds the value and prints it.

Advanced Topics in Parallel and Distributed Computing

In this section, we’ll dive deeper into advanced topics related to parallel and distributed computing in C.

Parallel Algorithms

Parallel algorithms are designed to efficiently solve problems by breaking them down into smaller tasks that can be executed concurrently. Here’s an example of a parallel algorithm for matrix multiplication using OpenMP.

Parallel Matrix Multiplication with OpenMP

    #include <stdio.h>
    #include <omp.h>

    #define N 3

    int main(void) {
        int A[N][N] = {{1, 2, 3}, {4, 5, 6}, {7, 8, 9}};
        int B[N][N] = {{9, 8, 7}, {6, 5, 4}, {3, 2, 1}};
        int C[N][N] = {0};

        /* Distribute the N*N (i, j) pairs among threads; each pair owns one element of C. */
        #pragma omp parallel for collapse(2)
        for (int i = 0; i < N; i++) {
            for (int j = 0; j < N; j++) {
                for (int k = 0; k < N; k++) {
                    C[i][j] += A[i][k] * B[k][j];
                }
            }
        }

        printf("Resultant Matrix:\n");
        for (int i = 0; i < N; i++) {
            for (int j = 0; j < N; j++) {
                printf("%d ", C[i][j]);
            }
            printf("\n");
        }

        return 0;
    }

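Output:

    Resultant Matrix:
    30 24 18
    84 69 54
    138 114 90

Explanation: This example demonstrates parallel matrix multiplication using OpenMP. The collapse(2) clause merges the two outer loops (over i and j) into a single iteration space of N * N iterations, which is then divided among the threads. Each iteration computes one element C[i][j] on its own, with the inner k loop running serially inside it, so no two threads ever write to the same element.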

Distributed File Processing

In distributed computing, processing large datasets distributed across multiple machines is a common scenario. Here’s an example of distributed file processing using MPI.

Distributed File Processing with MPI

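    #include <stdio.h>
    #include <mpi.h>

    #define FILENAME "data.txt"
    #define BUF_SIZE 100

    int main(int argc, char *argv[]) {
        int rank, size, to_read, count = 0;
        MPI_File file;
        MPI_Offset filesize, chunk;
        MPI_Status status;
        char buffer[BUF_SIZE];

        MPI_Init(&argc, &argv);
        MPI_Comm_rank(MPI_COMM_WORLD, &rank);
        MPI_Comm_size(MPI_COMM_WORLD, &size);

        /* File errors are returned rather than aborting by default, so check the open. */
        if (MPI_File_open(MPI_COMM_WORLD, FILENAME, MPI_MODE_RDONLY,
                          MPI_INFO_NULL, &file) != MPI_SUCCESS) {
            fprintf(stderr, "Process %d could not open %s\n", rank, FILENAME);
            MPI_Abort(MPI_COMM_WORLD, 1);
        }

        MPI_File_get_size(file, &filesize);
        chunk = filesize / size;                       /* bytes assigned to each process */
        to_read = chunk < BUF_SIZE - 1 ? (int)chunk : BUF_SIZE - 1;

        /* Jump to this process's chunk and read at most BUF_SIZE - 1 bytes of it. */
        MPI_File_seek(file, rank * chunk, MPI_SEEK_SET);
        MPI_File_read(file, buffer, to_read, MPI_CHAR, &status);
        MPI_Get_count(&status, MPI_CHAR, &count);
        buffer[count] = '\0';                          /* terminate before printing with %s */
        MPI_File_close(&file);

        printf("Process %d read: %s\n", rank, buffer);

        MPI_Finalize();

        return 0;
    }

Explanation: In this example, all processes open the same file (data.txt) collectively with MPI_File_open(). The file is divided into as many equal chunks as there are processes, and each process seeks to rank * chunk and reads from its own chunk, so no two processes read the same bytes. To keep the example small, a process reads at most BUF_SIZE - 1 bytes of its chunk, and the buffer is null-terminated so that it can be printed safely with %s. Any bytes left over when the file size is not divisible by the number of processes are not read.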

Parallel and distributed computing in C offer powerful techniques for solving computationally intensive problems efficiently. By exploiting parallelism and distributing tasks across multiple processors or machines, developers can achieve significant performance improvements. From basics like threads, OpenMP, and MPI to more advanced topics like parallel algorithms and distributed file processing, these techniques open up many ways to tackle complex computing challenges.

Happy coding! ❤️