CAP Theorem

Distributed systems power the apps and services we use every day—Google Docs, WhatsApp, Amazon, banking systems, you name it. But designing them isn’t just about adding more servers. There are trade-offs, and one of the most important concepts that explains these trade-offs is the CAP theorem.

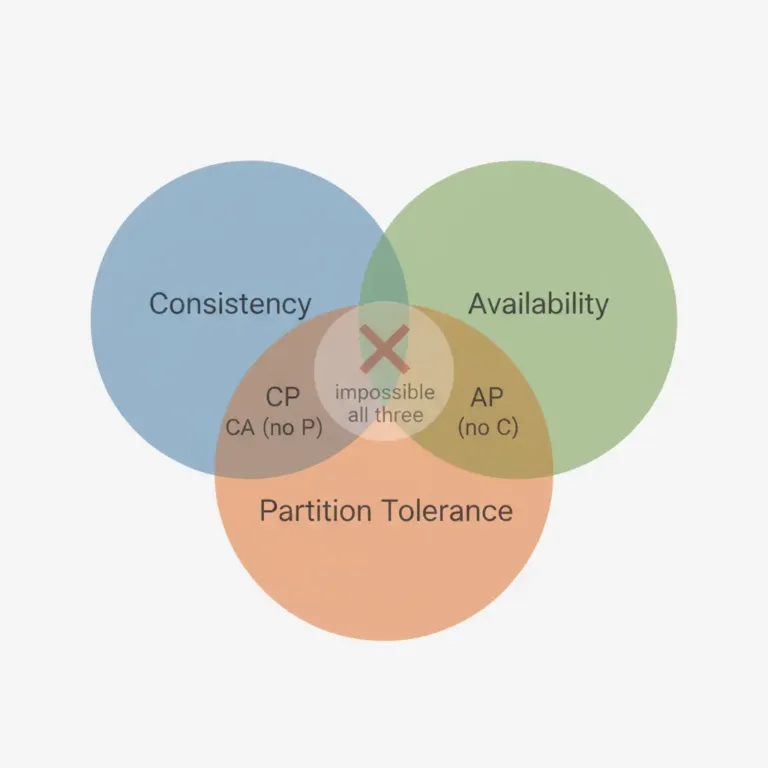

The CAP theorem says:

In a distributed system, you can guarantee only two of the following three properties at the same time: Consistency (C), Availability (A), and Partition Tolerance (P).

Sounds abstract, right? Let’s break it down with a story.

The Start of “Reminder”

Imagine you’re great at remembering things, while most people tend to forget them. One day you launch a service called Reminder.

The idea is simple:

A customer calls you at (+91)-9999-9999-88 and asks you to remember something.

You write it down in your notebook.

Later, when they forget, they call you back, and you read it out.

For example:

Customer: Can you store my neighbor’s birthday?

You: Sure, when is it?

Customer: January 2nd.

You: (writes it down) Stored!

Each call costs Rs 1. Business looks promising!

Scaling Up

Word spreads quickly. Calls flood in, and soon you can’t keep up. Customers hang up after waiting in long queues. And when you fall sick one day, the business stops completely.

So you hire your spouse to help. Now both of you have phones connected to the same number. Calls are routed randomly—whichever one is free picks up.

Problem solved? Not quite.

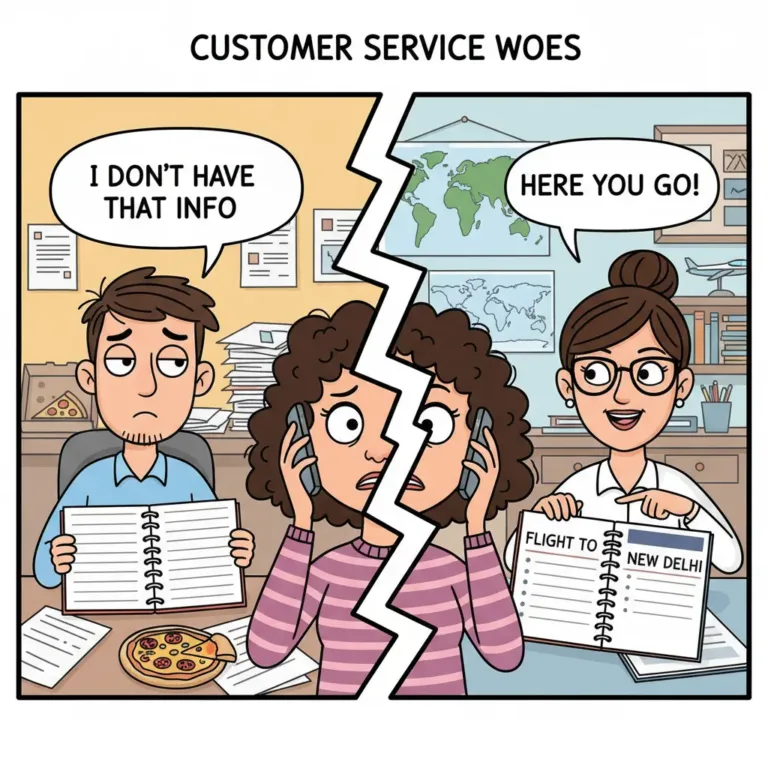

First Signs of Trouble

A loyal customer, Priya, calls you:

Priya: Hey, when’s my flight to New Delhi?

You: (checks notebook) Sorry, I don’t have that information.

Priya: What? I told you yesterday!

Later, you check your spouse’s notebook—and sure enough, the flight details are there.

Here’s the problem: your system is inconsistent. Depending on who picks up, Priya may get different answers.
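To make the problem concrete, here is the Reminder setup modeled in a few lines of Python. It is a minimal sketch, not a real system: each operator keeps a private notebook (a plain dict) and nothing keeps the two in sync. The `Operator` class, its method names, and the flight details are made up for illustration.

```python
class Operator:
    """One person answering Reminder calls, with a private notebook."""

    def __init__(self, name):
        self.name = name
        self.notebook = {}

    def store(self, key, value):
        self.notebook[key] = value
        return "Stored!"

    def recall(self, key):
        # None means "Sorry, I don't have that information."
        return self.notebook.get(key)


you, spouse = Operator("you"), Operator("spouse")

# Yesterday, Priya's call happened to reach your spouse.
spouse.store("priya_flight", "New Delhi, Friday 9 AM")

# Today the answer depends on who picks up: nothing syncs the notebooks.
answers = {op.name: op.recall("priya_flight") for op in (you, spouse)}
print(answers)  # {'you': None, 'spouse': 'New Delhi, Friday 9 AM'}
```

Each operator is internally correct; the inconsistency comes purely from having two copies of the data and no rule for keeping them aligned.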

Fixing Consistency

You brainstorm. Solution:

Whenever one of you gets an update (new info to remember), you immediately tell the other person.

Both of you write it down.

When a customer later asks for information, either of you can respond correctly.

Now, the system is consistent. Customers always get the same answer.

But… this creates another issue. Updates take longer because both of you must be involved before confirming the call. And if your spouse doesn’t come to work, you can’t process updates at all. That means the system is not fully available.
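The “tell each other before confirming” rule is what distributed systems call synchronous replication. Here is a rough sketch of it; the `Operator` class, the `present` flag, and the `Unavailable` exception are hypothetical names for this story, not any real library. An update is confirmed only if every operator can write it, so a single absence blocks all updates.

```python
class Unavailable(Exception):
    """Raised when an update can't be confirmed to the caller."""


class Operator:
    def __init__(self, name):
        self.name = name
        self.notebook = {}
        self.present = True  # False models "didn't come to work today"


def store_everywhere(operators, key, value):
    # Synchronous replication: confirm only if *every* operator can write it.
    # Check first, then write, so a rejected update leaves no notebook changed.
    if not all(op.present for op in operators):
        raise Unavailable("a partner is absent, so the update can't be confirmed")
    for op in operators:
        op.notebook[key] = value
    return "Stored!"


you, spouse = Operator("you"), Operator("spouse")
team = [you, spouse]

print(store_everywhere(team, "priya_flight", "New Delhi"))  # Stored!
assert you.notebook == spouse.notebook  # consistent: both notebooks agree

spouse.present = False  # your spouse stays home
try:
    store_everywhere(team, "neighbor_birthday", "January 2nd")
except Unavailable as err:
    print("Call rejected:", err)  # not available for updates
```

Consistency is bought with availability here: the second call is turned away even though you are sitting right by the phone.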

A Smarter Fix

You tweak the design again:

If both of you are working, you share updates instantly.

If one of you is absent, the other emails updates.

The absent person syncs their notebook with the emails before starting work the next day.

This seems brilliant. The system is both consistent and available. Customers are happy again.
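The “email the absent partner” trick can be sketched as follows, assuming a simple list stands in for the inbox and the returning operator replays it in order before taking any calls. All names are hypothetical. The idea loosely resembles the hinted-handoff technique used by Dynamo-style stores, where updates meant for a replica that is down are held and delivered once it comes back.

```python
class Operator:
    def __init__(self, name):
        self.name = name
        self.notebook = {}
        self.present = True


def store(writer, partner, outbox, key, value):
    # Write locally first, then either share instantly or queue an "email".
    writer.notebook[key] = value
    if partner.present:
        partner.notebook[key] = value  # share the update instantly
    else:
        outbox.append((key, value))    # "email" it for the next morning
    return "Stored!"


def start_of_day(operator, outbox):
    # The returning operator replays queued emails, oldest first.
    for key, value in outbox:
        operator.notebook[key] = value
    outbox.clear()
    operator.present = True


you, spouse = Operator("you"), Operator("spouse")
outbox = []  # hypothetical stand-in for the email inbox

spouse.present = False
store(you, spouse, outbox, "priya_flight", "New Delhi")  # still accepted
print(spouse.notebook)  # {}  (spouse is behind for now)
start_of_day(spouse, outbox)
print(spouse.notebook)  # {'priya_flight': 'New Delhi'}
```

Note that the absent partner’s notebook is stale while they are away; the design only stays consistent because nobody asks them anything until they have caught up.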

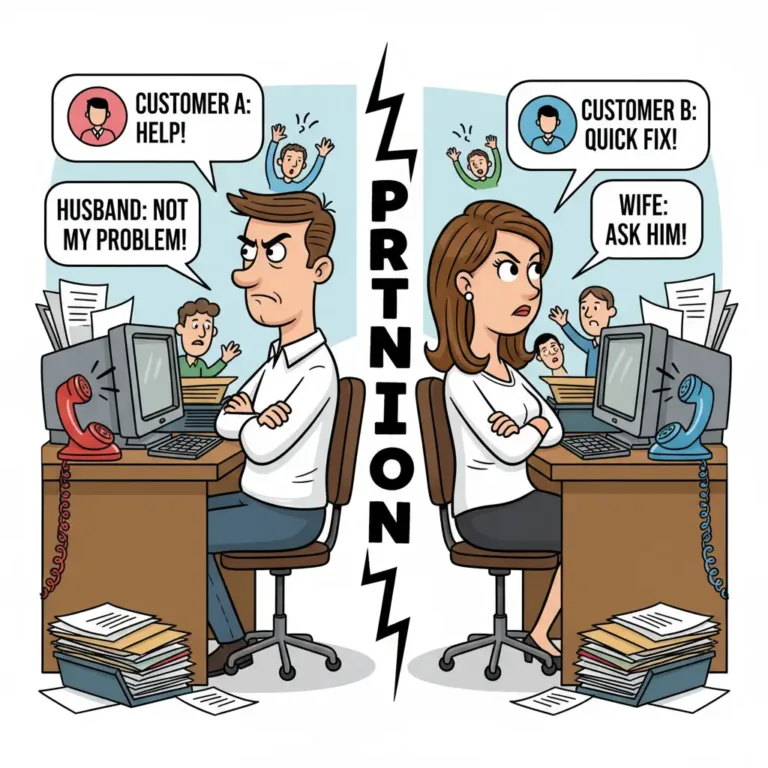

The Partition Problem

But life isn’t that simple. Imagine a fight with your spouse: both of you are at work but refuse to talk.

Now, updates made on one side never reach the other. Customers might again get different answers.

This is called a network partition in distributed systems: parts of the system can’t communicate due to failures or disconnections.

To deal with this, you have two options:

Stop taking update calls until communication is fixed (Consistency + Partition Tolerance → sacrifice Availability).

Keep taking calls but risk giving out different answers (Availability + Partition Tolerance → sacrifice Consistency).

Let’s see what each choice looks like in practice.

If we stay available: suppose user1 updates some data on node1, and 10 minutes later their read request is routed to node2. Because the two databases are not in sync, user1 sees stale, inconsistent data.

If we choose consistency: the system refuses requests until the partition is resolved, so it is unavailable to users in the meantime.

A question might come to mind now: why not turn on only one node and serve every request from it?

The catch is that the system sits behind a load balancer, which spreads requests across all available nodes, and which node a given request lands on is effectively random from our point of view. Serving everything from a single node would mean reworking the architecture, which takes a lot of time and effort, and companies usually can’t afford that kind of change to a system that is already running in production. It would also bring back Reminder’s original problem: if that one node goes down, just like when you fell sick, the whole service stops.

This is the CAP trade-off in action.
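To see both choices side by side, here is a small simulation of two nodes during a partition, replaying the user1 / node1 / node2 scenario. It is a sketch under simplifying assumptions: the `Node` class, the `mode` strings, and the rejection message are hypothetical, and because two nodes can’t form a majority on either side, the CP version simply makes both sides refuse.

```python
class Node:
    """A replica holding a copy of the data; `mode` picks the CAP trade-off."""

    def __init__(self, name, mode):
        assert mode in ("CP", "AP")
        self.name = name
        self.mode = mode
        self.data = {}
        self.peer = None
        self.link_up = True  # False models the partition (the nodes "aren't talking")

    def _refuse(self):
        # CP during a partition: with only two nodes neither side has a majority,
        # so this sketch has both sides refuse instead of risking divergence.
        return not self.link_up and self.mode == "CP"

    def write(self, key, value):
        if self._refuse():
            return "rejected: partition, try again later"
        self.data[key] = value
        if self.link_up:
            self.peer.data[key] = value  # replicate while the link works
        return "ok"  # AP during a partition: accepted locally, peer stays stale

    def read(self, key):
        if self._refuse():
            return "rejected: partition, try again later"
        return self.data.get(key)


def make_pair(mode):
    node1, node2 = Node("node1", mode), Node("node2", mode)
    node1.peer, node2.peer = node2, node1
    return node1, node2


results = {}
for mode in ("CP", "AP"):
    node1, node2 = make_pair(mode)
    node1.link_up = node2.link_up = False   # the partition starts
    wrote = node1.write("user1_data", "v2")  # user1 updates via node1
    seen = node2.read("user1_data")          # a later read lands on node2
    results[mode] = (wrote, seen)
    print(mode, "| write on node1:", wrote, "| read on node2:", seen)
```

The CP pair answers nobody until the partition heals; the AP pair accepts the write on node1 and then returns a stale `None` from node2.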

Understanding the CAP Theorem

Let’s formalize what we’ve seen:

Consistency (C): Every customer should get the same, most recent data no matter who answers the call.

Availability (A): The service is always ready to answer calls, even if someone is absent.

Partition Tolerance (P): The system keeps running even if communication between parts breaks down.

The theorem (conjectured by computer scientist Eric Brewer in 2000 and formally proven by Seth Gilbert and Nancy Lynch in 2002) says:

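You can’t guarantee all three at once in a distributed system. You must choose two out of three. In practice, a real distributed system can’t rule out partitions, so the choice that actually matters is between consistency and availability while a partition lasts.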

Real-World Examples

CP Systems (Consistency + Partition Tolerance, but not Availability):

Databases like HBase or MongoDB (in some configurations) may refuse to serve requests if nodes can’t agree on consistent data.

AP Systems (Availability + Partition Tolerance, but not strict Consistency):

DynamoDB, Cassandra, and many NoSQL databases allow “eventual consistency.” They’ll always answer, but the answer may not be the latest.

CA Systems (Consistency + Availability, but not Partition Tolerance):

This works only when the system doesn’t need to handle partitions, which basically means single-server setups, not real distributed systems.

Bonus: Eventual Consistency

Some systems don’t aim for instant consistency. Instead, they use eventual consistency.

Imagine hiring a clerk whose only job is to sync your spouse’s notebook with yours in the background. If John calls you right after giving your spouse an update, he might get outdated info. But if he calls later, once syncing is done, he’ll always get the right answer.

This is roughly how many large-scale systems (like Amazon’s Dynamo or social media feeds) work. You may not see your friend’s “like” instantly, but eventually everything matches.
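The clerk’s background sync can be sketched as a simple anti-entropy pass between two notebooks. Conflicting writes need a rule for which value wins; this sketch assumes last-writer-wins using a made-up integer `version` per write (real systems may use timestamps or vector clocks instead). `Notebook` and `clerk_sync` are hypothetical names.

```python
class Notebook:
    """One operator's notebook; each entry remembers the version it was written at."""

    def __init__(self):
        self.entries = {}  # key -> (version, value)

    def write(self, key, value, version):
        self.entries[key] = (version, value)

    def read(self, key):
        entry = self.entries.get(key)
        return entry[1] if entry is not None else None


def clerk_sync(a, b):
    """One background pass: for every key, copy the highest-version entry to both sides."""
    for key in set(a.entries) | set(b.entries):
        candidates = [e for e in (a.entries.get(key), b.entries.get(key)) if e is not None]
        newest = max(candidates, key=lambda e: e[0])  # last-writer-wins
        a.entries[key] = newest
        b.entries[key] = newest


yours, spouses = Notebook(), Notebook()
spouses.write("john_meeting", "Monday 10 AM", version=1)  # John updates your spouse

print(yours.read("john_meeting"))  # None: John calls you before the clerk's pass
clerk_sync(yours, spouses)         # the clerk's background pass
print(yours.read("john_meeting"))  # Monday 10 AM
```

Between the write and the clerk’s pass the two notebooks disagree; after the pass they are identical, which is the “eventually” in eventual consistency.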

To sum up:

C + A → No Partition Tolerance (idealized; works only on a single node).

C + P → Sacrifice Availability (the system refuses requests during a partition, but never returns stale data).

A + P → Sacrifice Consistency (the system stays online, but answers may briefly differ).

When building real systems, engineers choose based on business needs. Banking apps lean toward Consistency, while social media platforms often lean toward Availability.

The CAP theorem is not a limitation; it’s a design compass. It reminds us that distributed systems always face trade-offs. Happy coding! ❤️