Background Jobs

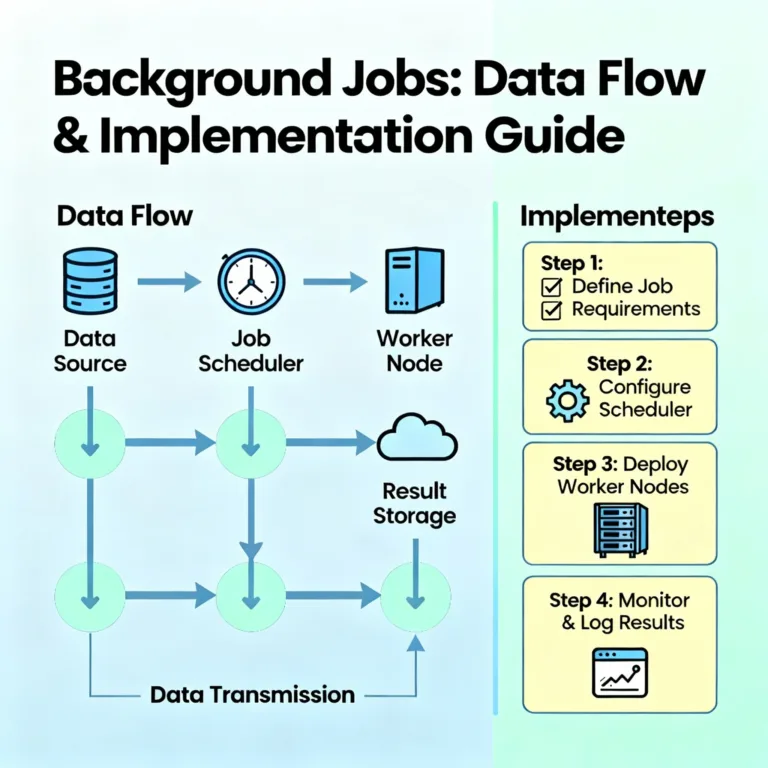

In modern applications, not all work needs to be (or should be) done immediately in the user’s interactive flow. Many tasks are better moved into the background, decoupled from the user interface (UI). These are called background jobs.

A background job is a task that runs independently of the UI or the caller, often asynchronously. The caller triggers it (or schedules it), and then continues doing other things without waiting. Once the job completes, it may update some data or send a notification, but it doesn’t block the user’s interaction.

Benefits of using background jobs

Responsiveness: The UI remains fast. Users don’t wait for long tasks (e.g. image processing, report generation) to finish.

Scalability: You can scale background processors independently of the UI tier and offload heavy workloads to dedicated workers.

Reliability: If tasks fail or slow down, they can be retried or queued without affecting the UI.

Batching / grouping: Related operations can be batched, scheduled, or aggregated over time.

Separation of concerns: You keep interactive logic separate from heavy or auxiliary logic.

Typical use cases include:

Generating thumbnails or processing images after upload

Sending confirmation emails or notifications

Aggregating analytics or reports nightly

Cleaning up stale data

Long-running workflows (e.g. provisioning, orchestrating multiple steps)

Data migration or indexing

Typical candidates for background jobs include CPU-intensive computations, I/O-intensive tasks, batch processing, long-running workflows, and operations involving sensitive data that you want to isolate from UI execution.

Types of triggers for background jobs

How does a background job start? Typically via one of two broad categories:

Event-driven triggers

Schedule-driven (timer) triggers

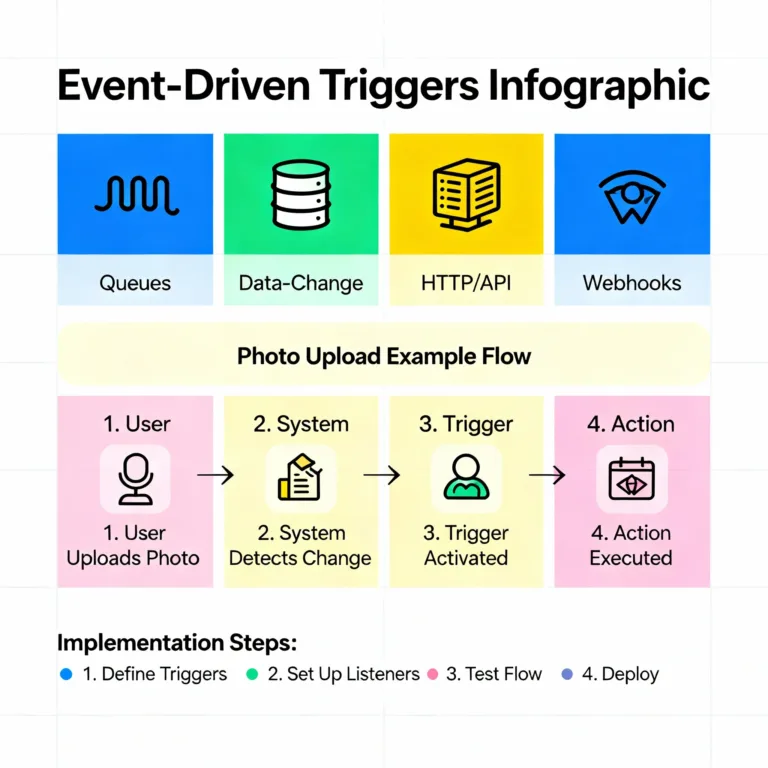

Event-driven triggers

Definition: An event (usually in response to a user action or system action) causes the background task to start immediately (or as soon as possible). The event acts as the “trigger.”

Think of event-driven triggers as “you did something → now process something in the background.” It’s reactive.

Common patterns for event triggers

Message queue / queue-based messaging:

The UI or a frontend component pushes a message to a queue (e.g. Azure Storage Queue, Azure Service Bus, RabbitMQ, AWS SQS, etc.). A background worker listens on the queue and processes messages as they arrive. This is asynchronous, decoupled communication.

Change (data) triggers / event on storage or database:

For example, when a record is inserted or updated in a database or storage, that change emits an event (or triggers a change feed). The background job is triggered to act on that change.

HTTP / API trigger:

The UI calls an API endpoint or function, passing data, and that API enqueues or triggers the background task. The API itself responds quickly, while the heavy work is deferred.

Webhook or external event sources:

An external system sends an event to your system (webhook) which triggers background processing.
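The queue-based pattern fits in a few lines of Python. This is a minimal in-process sketch. The standard-library `queue.Queue` stands in for a real broker such as Azure Service Bus or RabbitMQ. The message shape and the `handle_message` and `enqueue` functions are hypothetical.

```python
import queue
import threading

# In-process stand-in for a message broker (assumption: a real system would
# use Azure Service Bus, RabbitMQ, AWS SQS, etc.).
jobs: queue.Queue = queue.Queue()
processed: list = []

def handle_message(message: dict) -> None:
    # Hypothetical job body: real work (resizing, emailing, ...) goes here.
    processed.append(message["id"])

def enqueue(job_id: str) -> None:
    # Producer side: the UI or API enqueues work and returns immediately.
    jobs.put({"id": job_id})

def worker() -> None:
    # Consumer side: block on the queue and process messages as they arrive.
    while True:
        message = jobs.get()
        try:
            if message is None:  # sentinel value tells the worker to stop
                break
            handle_message(message)
        finally:
            jobs.task_done()

consumer = threading.Thread(target=worker, daemon=True)
consumer.start()
for i in range(3):
    enqueue(f"job-{i}")
jobs.put(None)
consumer.join()
print(processed)  # ['job-0', 'job-1', 'job-2']
```

The producer never waits for `handle_message`. To scale out, you add more consumers reading the same queue.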

Advantages of event-driven triggers

Low latency: The job starts soon after the event, so you don’t have to wait until a scheduled time.

Decoupling: The producer (e.g. UI) and consumer (background job) are loosely coupled.

Elastic scaling: If many events arrive, you can scale more workers to process them.

Better resource usage: Jobs run when needed, not continuously or on a fixed schedule.

Challenges and considerations

Ordering and consistency: Events might arrive out of order or more than once. You must design for idempotence (i.e. processing the same event twice doesn’t break consistency). Azure queue services typically provide “at-least-once” delivery, so duplicates must be expected.

Poison messages: If an event always causes a failure (e.g. because it is malformed), it can become a “poison message” that gets retried indefinitely. You need mechanisms (dead-letter queues, retries with backoff and a maximum attempt count) to isolate or reject such messages.

Retries and failures: You need retry logic to handle transient errors, but be careful with side effects that may repeat when a task is retried.

Concurrency & conflicts: If multiple workers process events, they may conflict on shared resources. Use locking, singleton patterns, or partitioning.

Event storms / traffic bursts: Sudden surges of events can create load spikes; queue-based buffering helps absorb them.

Visibility / progress / status tracking: Since jobs run asynchronously, you often need a way to notify or let the caller/UI check progress or completion (discussed later in “Returning results”).
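Idempotence and poison-message handling can be combined in the consumer. The sketch below assumes each message carries a unique `id`. It remembers processed IDs so that redeliveries are skipped. It retries a failing message up to a hypothetical `MAX_ATTEMPTS` and then moves it to a dead-letter list instead of retrying forever.

In production, the processed-ID set and the dead-letter queue would live in durable storage or in the broker itself. Azure Service Bus, for example, has built-in dead-letter queues. Recording the ID should also be atomic with the side effect.

```python
from collections import deque

MAX_ATTEMPTS = 3  # assumption: tune per workload

processed_ids: set = set()   # would be durable storage in production
dead_letter: list = []       # stand-in for a dead-letter queue
applied: list = []           # side effects actually performed

def handle(message: dict) -> None:
    # Hypothetical handler: a malformed body always fails.
    if message["body"] == "malformed":
        raise ValueError("cannot parse message")
    applied.append(message["id"])

def consume(inbox: deque) -> None:
    while inbox:
        message = inbox.popleft()
        if message["id"] in processed_ids:
            continue  # duplicate delivery: already handled, skip side effects
        try:
            handle(message)
            processed_ids.add(message["id"])
        except Exception:
            message["attempts"] = message.get("attempts", 0) + 1
            if message["attempts"] >= MAX_ATTEMPTS:
                dead_letter.append(message)  # isolate the poison message
            else:
                inbox.append(message)  # retry later (real systems add backoff)

inbox = deque([
    {"id": "a", "body": "ok"},
    {"id": "a", "body": "ok"},          # duplicate delivery
    {"id": "b", "body": "malformed"},   # poison message
])
consume(inbox)
print(applied)                          # ['a']
print([m["id"] for m in dead_letter])   # ['b']
```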

Example of an event-driven background job

Suppose a user uploads a photo via a web UI. The flow:

UI uploads the photo file to blob storage.

After a successful upload, the UI (or a backend microservice) enqueues a `PhotoUploaded` event to a queue, containing the blob path and metadata.

A background worker listening on that queue picks up the `PhotoUploaded` message, loads the image, resizes it to various resolutions, stores the thumbnails, updates the metadata in the DB, and perhaps sends a notification to the user.

The UI (or a frontend) might poll or query the status, or get notified when the thumbnail is ready.

This decouples the upload step (fast) from heavy image processing.

According to the Azure article, event-driven invocation is one of the primary trigger types, using queues, storage changes, or HTTP endpoints.
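To make the photo flow concrete, here is one possible shape for the `PhotoUploaded` message and the worker's handler. The field names, the `THUMBNAIL_WIDTHS` tuple, and the `serialize` and `plan_thumbnails` helpers are hypothetical.

The sketch only computes the target sizes, preserving the aspect ratio. Actually resizing the image would need an imaging library.

```python
import json
from dataclasses import asdict, dataclass

THUMBNAIL_WIDTHS = (100, 400, 800)  # hypothetical target widths in pixels

@dataclass
class PhotoUploaded:
    blob_path: str
    width: int
    height: int
    user_id: str

def serialize(event: PhotoUploaded) -> str:
    # Producer side: the JSON message body put on the queue.
    return json.dumps(asdict(event))

def plan_thumbnails(body: str) -> dict:
    # Worker side: parse the message and compute each thumbnail size,
    # keeping the aspect ratio and never upscaling past the original width.
    event = PhotoUploaded(**json.loads(body))
    sizes = {}
    for target in THUMBNAIL_WIDTHS:
        w = min(target, event.width)
        h = round(event.height * w / event.width)
        sizes[target] = (w, h)
    return sizes

body = serialize(PhotoUploaded("photos/u42/cat.jpg", 1600, 1200, "u42"))
print(plan_thumbnails(body))
# {100: (100, 75), 400: (400, 300), 800: (800, 600)}
```

Keeping the message small (a blob path plus metadata, not the image bytes) is what lets the upload step stay fast.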

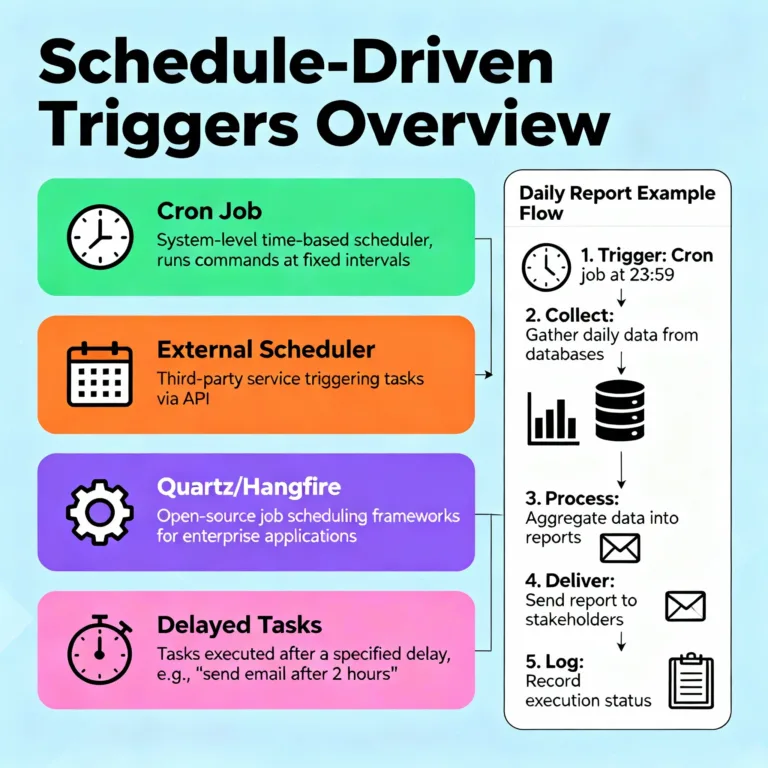

Schedule-driven (timer) triggers

Definition: A background job is invoked or triggered at specified times or intervals (e.g. every hour, daily, or once at a future time). This is not triggered by an external event but by a timer or scheduler.

Think of schedule-driven triggers as “do this every so often,” or “at this date/time do that task.”

Common patterns for schedule triggers

Timer within the application: A process includes a timer (e.g. a cron expression, `System.Timers`, `ScheduledExecutorService`, etc.) that wakes up periodically and runs tasks.

External scheduler: Use a separate scheduling service (e.g. a cron job, Azure Logic Apps, Amazon EventBridge (formerly AWS CloudWatch Events), or external orchestration) to call an API that triggers the background job. The older Azure Scheduler service has been retired, with Logic Apps as its replacement.

Dedicated scheduling engine or library: Use frameworks like Quartz, Hangfire, or built-in scheduler libraries.

One-off scheduling / delayed tasks: Queue a task with a delay, TTL, or “run-at” timestamp, so the task executes in the future.
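A one-off delayed task comes down to storing a “run-at” timestamp and dispatching only the tasks whose time has come. Here is a minimal in-memory sketch that uses a heap ordered by run-at time. The `DelayedQueue` class is hypothetical.

A real system would persist these tasks, or use a broker feature such as Azure Service Bus scheduled messages, so they survive restarts.

```python
import heapq
import itertools
from datetime import datetime, timedelta

class DelayedQueue:
    """Holds tasks until their run-at time (in-memory sketch)."""

    def __init__(self) -> None:
        self._heap: list = []           # entries: (run_at, seq, task)
        self._seq = itertools.count()   # tie-breaker for equal run-at times

    def schedule(self, task: str, run_at: datetime) -> None:
        heapq.heappush(self._heap, (run_at, next(self._seq), task))

    def due(self, now: datetime) -> list:
        # Pop every task whose run-at time is at or before `now`.
        ready = []
        while self._heap and self._heap[0][0] <= now:
            ready.append(heapq.heappop(self._heap)[2])
        return ready

start = datetime(2024, 1, 1, 12, 0)
q = DelayedQueue()
q.schedule("send-reminder", start + timedelta(minutes=30))
q.schedule("expire-trial", start + timedelta(days=1))
print(q.due(start + timedelta(minutes=10)))  # []
print(q.due(start + timedelta(hours=1)))     # ['send-reminder']
```

A dispatcher loop would call `due(datetime.now())` periodically and enqueue whatever it returns.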

Advantages of schedule-driven triggers

Simplicity for recurring tasks: For tasks that must run regularly (e.g. cleanup, daily reports), scheduling is straightforward.

Predictability: You know when the job will run (cron-style).

Reduced overhead: No continuous listening or polling; jobs run only when scheduled.

Challenges and caveats

Overlap / reentrancy: If a scheduled task takes longer to complete than its interval, you may have multiple overlapping runs. For example, if you schedule a task every 5 minutes but one run takes 8 minutes, a second instance may start before the first finishes. Prevent this with locks or “already running” checks, and make the job idempotent in case an overlap slips through. Azure documentation warns about tasks running longer than schedule intervals.

Multiple scheduler instances: If your application is scaled out (multiple instances), each might run the timer and cause duplicate scheduling. You need a mechanism so only one instance triggers the job (singleton scheduler, leader election, distributed lock).

Flexibility: Less responsive to ad hoc events; work may wait until the next scheduled run.

Latency vs freshness: You trade immediacy for batching.

Azure’s guidance suggests tasks like batch jobs, index updates, analytics, data cleanup, and consistency checks are often well-suited for schedule-driven invocations.

Example of a schedule-driven background job

Suppose a system needs to generate daily summary reports every night at 2:00 AM:

A scheduler (cron job or Azure Logic App) fires at 2:00 AM daily.

It calls a backend API endpoint like `/runDailySummary`.

That endpoint enqueues or triggers a background processor.

The background processor computes aggregates for the past 24 hours, writes to a summary table, sends a summary email, etc.

Another example is cleaning up expired sessions or logs every hour.
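Whichever scheduler fires the job, something has to compute the next run time. Here is a minimal sketch of that calculation for the nightly 2:00 AM report, equivalent to the cron expression `0 2 * * *`. The `next_daily_run` helper is hypothetical.

The helper works on naive local datetimes and ignores the time-zone and daylight-saving edge cases that a real cron service handles.

```python
from datetime import datetime, time, timedelta

def next_daily_run(now: datetime, at: time = time(2, 0)) -> datetime:
    """Return the next occurrence of `at` strictly after `now`."""
    candidate = datetime.combine(now.date(), at)
    if candidate <= now:
        candidate += timedelta(days=1)  # today's slot has already passed
    return candidate

print(next_daily_run(datetime(2024, 3, 10, 1, 30)))  # 2024-03-10 02:00:00
print(next_daily_run(datetime(2024, 3, 10, 2, 0)))   # 2024-03-11 02:00:00
```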

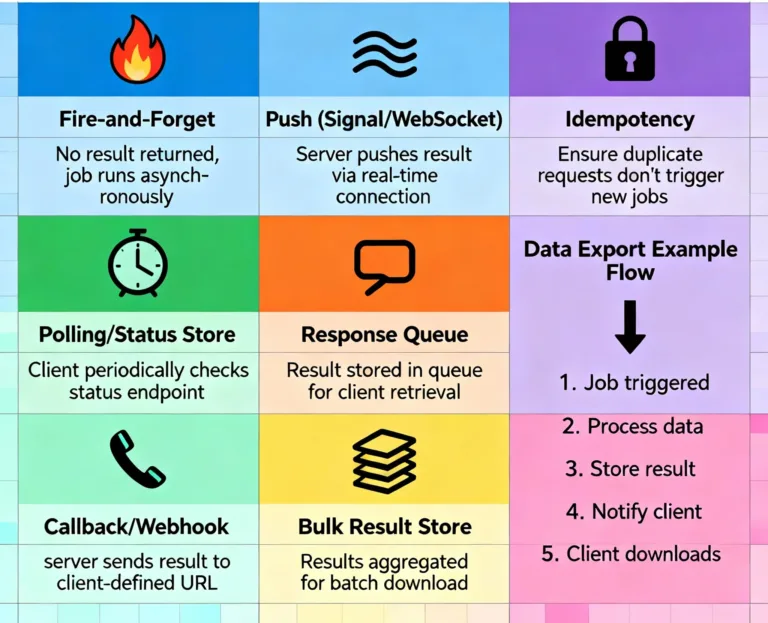

Returning results from background jobs

Because background jobs run asynchronously, often in a different process or machine than the caller/UI, you must design how the job returns status, results, or progress. The UI or caller cannot simply “await” the result like a synchronous function.

Here are patterns and strategies for result or progress communication.

“Fire-and-forget” jobs

In many cases, you don’t need any explicit return to the caller. The job runs, completes its work (e.g. update DB, send email), and that’s it. The job is “fire-and-forget.”

Pros: Simple, no waiting, no coupling.

Cons: The caller doesn’t know when the job is finished (unless it queries separately).

This is ideal when you don’t need immediate feedback.

Polling / status check

If the caller (UI) needs to know when the job is done (or track its progress), you can implement a status indicator:

When initiating the job, create a status record in a shared store (database, table, cache) with an identifier.

The background job updates this status store (e.g. “In Progress”, “50% done”, “Completed”, “Failed”, error message, result data).

The UI or caller periodically polls this status endpoint to get the current state and display it to the user.

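For example, a status table might hold records like these (the first two rows show the same job at two points in time):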

| JobId | Status | Progress | Result | ErrorMessage |
|---|---|---|---|---|
| 12345 | InProgress | 30% | — | — |
| 12345 | Completed | 100% | {…} | — |
| 12346 | Failed | — | — | "Timeout" |

Advantages: Simple, intuitive, works in many scenarios.

Considerations:

Polling too frequently wastes resources; polling too infrequently delays feedback to the user.

You need timeouts or a fallback in case the job never completes.

UI must handle intermediate states gracefully.
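The polling pattern needs two pieces: a status store that the worker writes to, and a caller that polls it with a timeout. In the sketch below, an in-memory dict stands in for the shared database or cache. The names (`JobStatus`, `JobStatusStore`, `poll_until_done`) and the backoff parameters are assumptions.

The poller doubles its interval up to a cap, which keeps requests infrequent for long jobs. It gives up after a deadline so the UI is never left waiting forever.

```python
import time
import uuid
from dataclasses import dataclass, field
from typing import Any, Callable, Optional

@dataclass
class JobStatus:
    status: str = "Queued"
    progress: int = 0
    result: Optional[Any] = None
    error: Optional[str] = None

@dataclass
class JobStatusStore:
    # Stand-in for a shared table or cache keyed by job ID.
    records: dict = field(default_factory=dict)

    def create(self) -> str:
        job_id = uuid.uuid4().hex
        self.records[job_id] = JobStatus()
        return job_id

    def update(self, job_id: str, **changes: Any) -> None:
        # Called by the worker, e.g. update(job_id, status="InProgress", progress=30).
        for name, value in changes.items():
            setattr(self.records[job_id], name, value)

    def get(self, job_id: str) -> JobStatus:
        return self.records[job_id]

def poll_until_done(get_status: Callable[[], JobStatus], timeout: float = 30.0,
                    first_delay: float = 0.5, max_delay: float = 5.0) -> JobStatus:
    deadline = time.monotonic() + timeout
    delay = first_delay
    while True:
        current = get_status()
        if current.status in ("Completed", "Failed"):
            return current
        if time.monotonic() + delay > deadline:
            raise TimeoutError("job did not finish in time")
        time.sleep(delay)
        delay = min(delay * 2, max_delay)  # back off between polls

store = JobStatusStore()
job_id = store.create()
store.update(job_id, status="Completed", progress=100, result={"rows": 42})
print(poll_until_done(lambda: store.get(job_id)).status)  # Completed
```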

Callback / webhook / push notification

If possible, instead of polling, you can notify the caller (or interested client) when the job completes. Options:

HTTP callback / webhook: The background job, upon completion, calls a callback endpoint (supplied earlier) to inform “job complete with result.”

SignalR / WebSocket / push notification: For web or mobile apps, the background job can push a notification via WebSocket or SignalR to update the UI.

Message queue response: The background job can publish a “JobCompleted” event to a response queue. The caller listens (or polls) that queue for replies.

Email / SMS / in-app notification: The result is communicated using external notification.

Advantages: More real-time, no excessive polling.

Challenges:

The caller (or UI) must be ready to receive the notification (be online, listening).

Secure callback endpoints carefully.

If the notification fails, you need a fallback (e.g. also storing the result in the status store so the caller can poll for it).
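Two of these challenges, securing the callback and falling back when delivery fails, can be handled together. A common approach, assumed here rather than taken from the source, works in two parts:

- The job signs the callback payload with an HMAC over a shared secret, so the receiver can reject forged calls.
- The job records the result in the status store whether or not the callback succeeds.

The `SECRET` value, the `X-Signature` header name, and the `send` function are hypothetical. HTTP delivery is abstracted into a function argument so the sketch stays self-contained.

```python
import hashlib
import hmac
import json
from typing import Callable

SECRET = b"shared-secret-from-config"  # hypothetical; load from a secret store

def sign(body: bytes) -> str:
    # HMAC-SHA256 of the raw request body, hex-encoded.
    return hmac.new(SECRET, body, hashlib.sha256).hexdigest()

def verify(body: bytes, signature: str) -> bool:
    # Receiver side; compare_digest avoids leaking timing information.
    return hmac.compare_digest(sign(body), signature)

def notify_completion(job_id: str, result: dict,
                      send: Callable[[bytes, dict], bool],
                      status_store: dict) -> bool:
    # Record the result first, so polling still works if the callback fails.
    status_store[job_id] = {"status": "Completed", "result": result}
    body = json.dumps({"jobId": job_id, "status": "Completed",
                       "result": result}).encode()
    try:
        return send(body, {"X-Signature": sign(body)})
    except OSError:  # e.g. ConnectionError when the endpoint is unreachable
        return False

store: dict = {}

def fake_send(body: bytes, headers: dict) -> bool:
    # Stands in for an HTTP POST to the caller's callback URL.
    return verify(body, headers["X-Signature"])

def failing_send(body: bytes, headers: dict) -> bool:
    raise ConnectionError("callback endpoint unreachable")

print(notify_completion("7890", {"rows": 3}, fake_send, store))     # True
print(notify_completion("7891", {"rows": 0}, failing_send, store))  # False
print(store["7891"]["status"])                                      # Completed
```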

Bulk result store

For jobs producing substantial output (e.g. a report file, CSV, image, PDF), the result may be stored in shared storage (blob storage, a results database) and the status table stores a pointer (e.g. URL, file path). The UI can fetch or download the result when ready.

Idempotency and result replay

Because of retries, failures, or duplicates, you should design result-handling to be idempotent: repeated updates or notifications should not corrupt state or produce duplicate side effects.
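One way to make result handling idempotent is to treat job status as a forward-only state machine. An update is applied only if it moves the job to a later state, so a replayed “InProgress” after “Completed” is ignored. The completion notification is sent only on the transition into a terminal state.

The state names mirror the status table above. The ranking scheme and the `apply_update` function are assumptions for illustration. In a real database, this check-and-set should be a single conditional update so that two workers cannot race.

```python
RANK = {"Queued": 0, "InProgress": 1, "Completed": 2, "Failed": 2}
TERMINAL = {"Completed", "Failed"}

statuses: dict = {}       # job ID -> current status (stand-in for the status table)
notifications: list = []  # side effects actually performed

def apply_update(job_id: str, new_status: str) -> bool:
    """Apply a status change only if it moves the job forward."""
    current = statuses.get(job_id, "Queued")
    if current in TERMINAL or RANK[new_status] <= RANK[current]:
        return False  # duplicate, stale, or out-of-order update: ignore it
    statuses[job_id] = new_status
    if new_status in TERMINAL:
        notifications.append((job_id, new_status))  # runs once per job
    return True

# A retried worker replays its updates; the replays are ignored.
for update in ["InProgress", "Completed", "InProgress", "Completed"]:
    apply_update("12345", update)
print(statuses["12345"], notifications)  # Completed [('12345', 'Completed')]
```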

Example of returning results via polling + callback

Suppose a user requests a data export report via UI:

The UI sends a request to the backend API `/requestExport`, which creates a new “export job” record in the DB (status = “queued”), returns the jobId to the client (e.g. `exportJob=7890`), and enqueues a background job with the jobId and parameters.

The UI polls `/exportStatus?jobId=7890` periodically (e.g. every few seconds) to get status and progress.

Once the status is “Completed,” the status record contains a `downloadUrl` pointing to a CSV file stored in blob storage.

The UI shows a “Download” link to the user.

Optionally, the background job could also call a webhook or send a notification to push an update to the user when done.

Thus the UI doesn’t block waiting, and the user can see progress or get notified when result is ready.
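The export flow can be sketched end to end with in-memory stand-ins. Everything here is hypothetical:

- `request_export` and `export_status` play the roles of the `/requestExport` and `/exportStatus` endpoints.
- A `queue.Queue` replaces the real queue, and a dict replaces blob storage.
- The `blob://` URL scheme is made up.

The worker is run synchronously so the example is deterministic. In a real deployment, it would run in a separate process.

```python
import csv
import io
import itertools
import queue

_ids = itertools.count(7890)
jobs_db: dict = {}                 # stand-in for the export-job table
blob_storage: dict = {}            # stand-in for blob storage
work_queue: queue.Queue = queue.Queue()

def request_export(params: dict) -> int:
    # Plays the role of /requestExport: record, enqueue, return immediately.
    job_id = next(_ids)
    jobs_db[job_id] = {"status": "Queued", "downloadUrl": None}
    work_queue.put((job_id, params))
    return job_id

def export_status(job_id: int) -> dict:
    # Plays the role of /exportStatus?jobId=...: return a copy of the record.
    return dict(jobs_db[job_id])

def run_worker_once() -> None:
    job_id, params = work_queue.get()
    jobs_db[job_id]["status"] = "InProgress"
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["order_id", "total"])
    writer.writerows(params["rows"])
    path = f"exports/{job_id}.csv"
    blob_storage[path] = buffer.getvalue()    # bulk output goes to storage
    jobs_db[job_id].update(status="Completed",
                           downloadUrl=f"blob://{path}")  # record keeps a pointer

job = request_export({"rows": [[1, 19.99], [2, 5.00]]})
print(export_status(job)["status"])  # Queued
run_worker_once()
print(export_status(job))
# {'status': 'Completed', 'downloadUrl': 'blob://exports/7890.csv'}
```

The status record holds only a pointer to the file, which matches the bulk-result-store pattern: the status table stays small however large the export grows.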

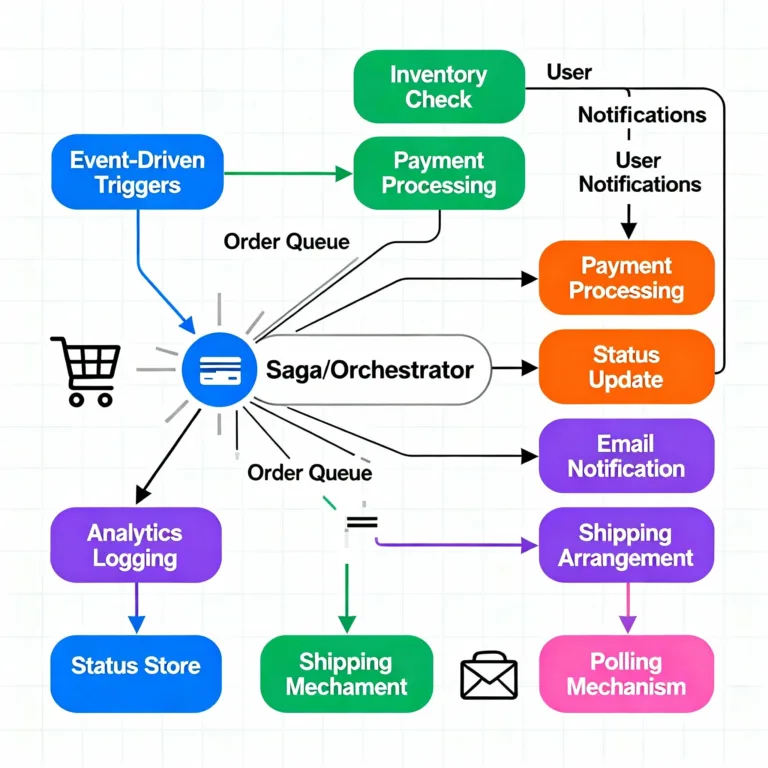

Worked Example: Processing orders in an e-commerce system

To illustrate all these in a cohesive example, let’s design a background job system for order processing in an e-commerce app.

Scenario and requirements

When a user places an order, the system should:

Validate inventory, reserve stock

Charge the payment

Update order status

Send confirmation email

Update analytics / sales leaderboard

Possibly notify external shipping / logistics system

The UI should be responsive: the user should get an “Order received” message immediately without waiting for all these steps.

If any step fails (e.g. payment fails), we must roll back or cancel the order gracefully.

The user can check order status (pending, succeeded, failed).

Architecture using background job patterns

Trigger: event-driven

After the UI receives an order submission, it:

Writes the order details to the orders database (status = “pending”).

Enqueues a message with the order ID and needed metadata to a `ProcessOrder` queue.

Background consumers listen to the `ProcessOrder` queue.

Workers / orchestration

A worker reads the `ProcessOrder` message, records in the status table that “order processing started,” and then performs the following sequence of steps:

Step 1 (Inventory): Check whether inventory is available; if so, reserve it; if not, mark the order as failed.

Step 2 (Payment): Call the payment gateway; on success, continue; on failure, undo the inventory reservation.

Step 3 (Order status): Update the order record to “Confirmed” or “Failed.”

Step 4 (Email): Enqueue or send a confirmation email (this could itself be another background job, e.g. `SendEmail`).

Step 5 (Analytics): Enqueue or perform the analytics update in the background.

Step 6 (External integration): Enqueue or call the shipping API.

Optional refinements:

Use a Saga / orchestrator to coordinate these steps and handle failures via compensations.

Persist intermediate state so it can resume if the worker crashes.

At each step or at completion, update the status table or send progress messages.

When done, optionally send callback or notification to user (or UI) that order is confirmed.
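The orchestrated steps and their compensations can be sketched as a small saga. This is a minimal in-memory illustration, not a production orchestrator. Each step is paired with an undo action, and if a later step fails, the completed steps are compensated in reverse order.

The step functions (`reserve_inventory`, `charge_payment`, and so on) and the `payment_ok` flag that simulates a payment failure are hypothetical. The sketch leaves out persisting progress after each step, which would let a crashed worker resume.

```python
from typing import Callable

Step = tuple[str, Callable[[dict], None], Callable[[dict], None]]

def reserve_inventory(order: dict) -> None:
    order["log"].append("reserved")

def release_inventory(order: dict) -> None:
    order["log"].append("released")

def charge_payment(order: dict) -> None:
    if not order["payment_ok"]:
        raise RuntimeError("payment declined")
    order["log"].append("charged")

def refund_payment(order: dict) -> None:
    order["log"].append("refunded")

def confirm_order(order: dict) -> None:
    order["status"] = "Confirmed"

def noop(order: dict) -> None:
    pass

# Each entry: (name, action, compensation).
STEPS: list = [
    ("inventory", reserve_inventory, release_inventory),
    ("payment", charge_payment, refund_payment),
    ("status", confirm_order, noop),
]

def process_order(order: dict) -> str:
    completed: list = []
    for step in STEPS:
        _, action, _ = step
        try:
            action(order)
            completed.append(step)
        except Exception as exc:
            # Undo finished steps in reverse order, then mark the order failed.
            for _, _, compensate in reversed(completed):
                compensate(order)
            order["status"] = "Failed"
            order["error"] = str(exc)
            break
    # Follow-up jobs (email, analytics, shipping) would be enqueued here
    # only for confirmed orders.
    return order["status"]

ok = {"status": "Pending", "payment_ok": True, "log": []}
bad = {"status": "Pending", "payment_ok": False, "log": []}
print(process_order(ok), ok["log"])    # Confirmed ['reserved', 'charged']
print(process_order(bad), bad["log"])  # Failed ['reserved', 'released']
```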

Returning results

When the user places an order, the client receives back an `orderId`.

The client may poll `/orderStatus?orderId=...` to see the current status (“Pending”, “Processing”, “Failed”, “Confirmed”).

Once the status is “Confirmed,” the UI can display success and possibly show download or tracking info.

In parallel, the background job might push a notification to the frontend via WebSocket or send an email.

This design keeps UI fast, enables retries and failure handling, and cleanly separates concerns.

Why choose event-driven vs schedule-driven?

Event-driven is best when tasks should respond quickly to user or system actions (e.g. send email after registration, process uploaded file).

Schedule-driven is ideal for periodic or maintenance tasks (daily reports, cleanup, batch processing).

Often, systems use both in combination: user actions trigger most jobs, while some tasks (like nightly batch work) run on schedule.

With the principles and examples above, you should have a thorough understanding of how to design, build, and operate background job systems: how to trigger them (event-driven or schedule-driven), how to return results to callers, and how to make them robust, scalable, and maintainable. Happy coding! ❤️