Scalability in system design

Scalability is the ability of a system, network, or process to handle an increasing amount of work or its potential to accommodate growth. In the context of system design, scalability ensures that a system can grow and perform efficiently as the demand for its services increases.

Why Scalability Matters

- Business Growth: As businesses grow, the demand on their systems increases. A scalable system supports this growth without major redesigns.

- User Experience: A scalable system keeps performance consistent for users even during traffic spikes.

- Cost Efficiency: A system that scales well can optimize resource usage, reducing operational costs.

- Competitive Advantage: Scalable systems can quickly adapt to market changes and user demands.

As a system expands, its performance tends to decline unless adjustments are made to accommodate the increased demands.

Scalability refers to a system’s ability to manage a rising workload effectively by incorporating additional resources.

A truly scalable system is one that can adapt and evolve seamlessly to handle a growing volume of tasks.

This article first looks at the ways a system can grow, then explores common strategies for making it more scalable.

How Can a System Grow?

1. Increase in User Base

The number of users engaging with the system has grown, leading to a rise in the volume of requests.

Example: A fitness tracking app experiencing a surge in downloads after a major marketing campaign.

2. Expansion of Features

New functionalities were added to enhance the system’s capabilities and offerings.

Example: A food delivery app introducing a subscription service for free deliveries.

3. Increase in Data Volume

The amount of data the system handles has expanded due to user activity or data logging practices.

Example: A video streaming service like YouTube accumulating a larger library of video content over time.

4. Increase in System Complexity

The system’s design has evolved to include additional features, scalability measures, or integrations, leading to more components and dependencies.

Example: A previously simple application transitioning into a collection of smaller, independent services.

5. Expansion of Geographic Reach

The system has been extended to cater to users in new locations or countries.

Example: An e-commerce business launching operations and websites in international markets.

Let's see how to scale a system.

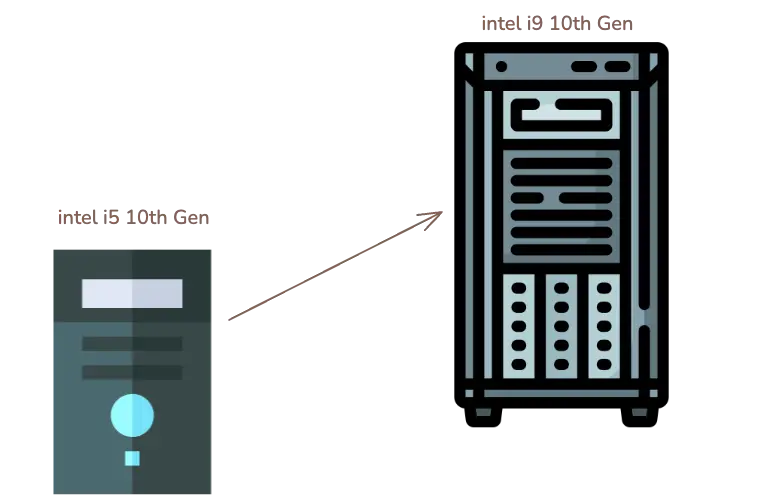

Vertical Scaling

Vertical scaling, also called scaling up, is like giving your computer or server a powerful upgrade to handle more work.

Imagine you have a laptop, and it starts to slow down when you run too many programs.

Instead of buying a new laptop, you decide to make it more powerful by adding more memory (RAM), upgrading the processor (CPU), or increasing the storage space.

How Vertical Scaling Works

When a server (a computer used to run websites, apps, or other services) starts struggling to handle the workload, you can:

Add More RAM

RAM is like the short-term memory of your server. The more RAM it has, the more tasks it can juggle at the same time without slowing down. For example, a server with 8 GB of RAM might struggle with many users, but upgrading to 32 GB allows it to handle more.

Upgrade the CPU

The CPU (Central Processing Unit) is the brain of the server. A faster CPU can process tasks quicker. For example, upgrading from a dual-core processor to an eight-core processor means the server can handle multiple tasks simultaneously, much faster.

Increase Storage Space

If your server is running out of space to store data, you can add more storage or switch to faster storage like SSDs (Solid State Drives). Faster storage means the server can retrieve and save data much quicker.

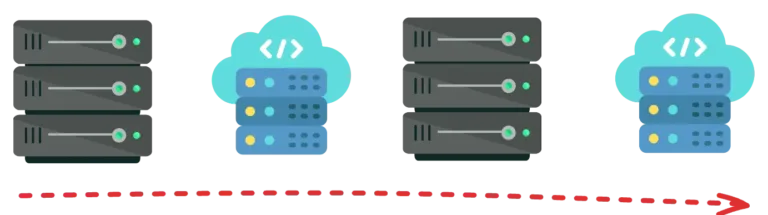

Horizontal Scaling (Scale Out)

Horizontal scaling, also called scaling out, is like hiring more workers to handle a growing workload.

Instead of upgrading a single server to make it more powerful (as in vertical scaling), you add more servers to your system.

These servers work together to share the tasks, making the entire system more efficient and capable of handling more users or data.

A Simple Analogy

Imagine you run a bakery. If more customers start coming in, you could hire more bakers (horizontal scaling) instead of just buying a bigger oven (vertical scaling). Each baker handles part of the workload, so you can serve more customers at the same time. If one baker is unavailable, the others can still keep the bakery running.

How Horizontal Scaling Works:

When your application or system needs to handle more traffic or data, you can:

Add More Servers:

Rather than relying on one powerful server, you add more servers that work together. For example, instead of one server processing 1,000 user requests, you could have 10 servers, each handling 100 requests.

Distribute the Workload:

A load balancer is used to distribute tasks across all the servers. It ensures that no single server is overloaded while others are idle. This setup allows the system to handle more users efficiently.

Keep Adding as Needed:

If traffic grows even more, you can add additional servers. This flexibility makes horizontal scaling ideal for large systems that need to grow quickly.

Examples

- E-commerce websites: Sites like Amazon handle millions of users by distributing the workload across thousands of servers.

- Social media platforms: Platforms like Facebook or Twitter rely on horizontal scaling to serve billions of users worldwide.

- Cloud services: Companies like Google and AWS use horizontal scaling to provide reliable and scalable services to their customers.

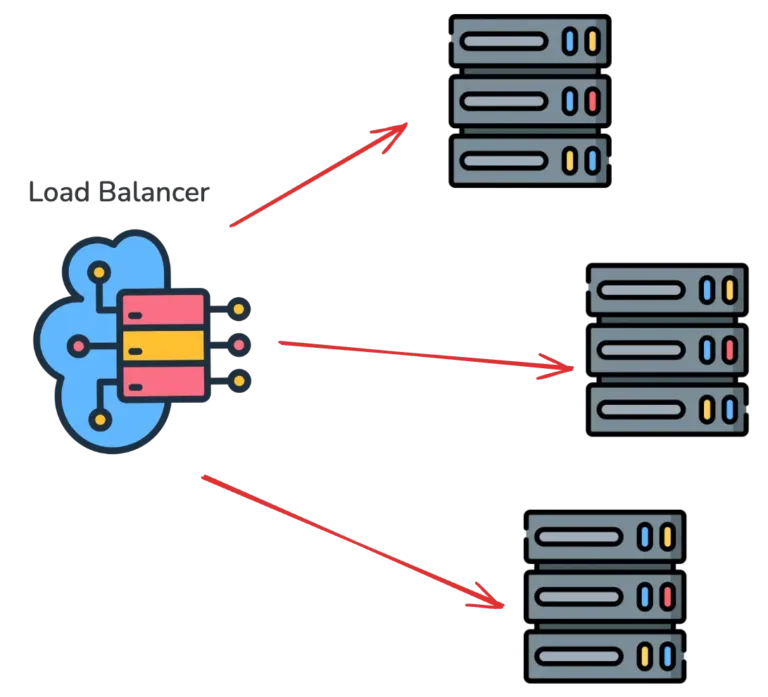

Load Balancing

Load balancing is like a traffic manager for your servers.

When many users or systems send requests to your application, load balancing ensures that the traffic is spread evenly across multiple servers.

This prevents any single server from being overwhelmed, which helps keep your application running smoothly and reliably.

A Simple Analogy

Imagine a busy restaurant with many tables and customers coming in. The restaurant manager (load balancer) assigns each customer to an available waiter (server) to ensure no single waiter is overloaded. If one waiter is unavailable, the manager redirects customers to the other waiters, ensuring everyone is served without delay.

Example

Google makes extensive use of load balancing throughout its global infrastructure, ensuring search queries and traffic are evenly distributed across its vast server farms.
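
The restaurant analogy above can be sketched in a few lines of Python. This is a minimal illustration of one common strategy, round-robin with a simple health flag, and not a description of how any particular provider implements load balancing. The class name `RoundRobinBalancer` and the server names are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed rotation, skipping any marked as down."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._rotation = cycle(self.servers)

    def mark_down(self, server):
        # Stop sending traffic to a server that is unavailable.
        self.healthy.discard(server)

    def mark_up(self, server):
        # Resume sending traffic to a known server that has recovered.
        if server in self.servers:
            self.healthy.add(server)

    def next_server(self):
        # One full pass over the rotation is enough to find a healthy server.
        for _ in range(len(self.servers)):
            candidate = next(self._rotation)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy servers available")


# Hypothetical server names, following the waiter analogy.
balancer = RoundRobinBalancer(["waiter-1", "waiter-2", "waiter-3"])
first_round = [balancer.next_server() for _ in range(3)]

balancer.mark_down("waiter-2")
second_round = [balancer.next_server() for _ in range(4)]
```

Round-robin ignores how busy each server actually is. Strategies such as least-connections or weighted routing trade this simplicity for a more even load when requests vary in cost.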

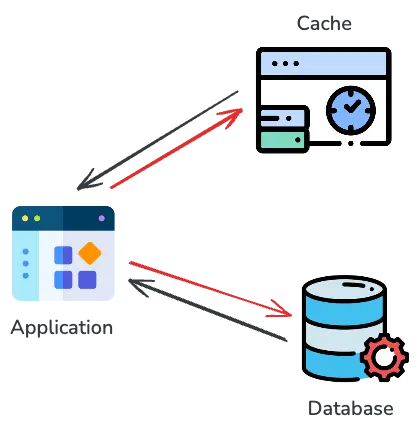

Caching

Caching is like keeping a shortcut to frequently used information.

Instead of repeatedly fetching the same data from a slower source (like a database or server), caching stores it in a fast-access location, like memory (RAM).

This speeds up data retrieval and reduces the workload on your backend systems.

How Caching Works:

Request for Data:

When a user requests data (e.g., opening a webpage or running a search), the system first checks the cache to see if the data is already stored there.

Cache Hit or Miss:

- Cache Hit: If the data is found in the cache, it’s quickly returned to the user without involving the server or database.

- Cache Miss: If the data isn’t in the cache, it’s fetched from the original source (e.g., database) and then stored in the cache for future requests.

Subsequent Requests:

The next time the same data is requested, it’s served directly from the cache, saving time and resources.
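
The hit-or-miss flow above is often called the cache-aside pattern. Here is a minimal sketch of it, assuming an in-memory dictionary as the cache and a plain function standing in for the slower source. The names `CachedStore`, `slow_lookup`, and the sample data are hypothetical.

```python
class CachedStore:
    """Checks an in-memory cache first and falls back to a slower source."""

    def __init__(self, load_from_source):
        self._load = load_from_source
        self._cache = {}
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self._cache:
            # Cache hit: answer without touching the source.
            self.hits += 1
            return self._cache[key]
        # Cache miss: fetch from the source and remember the result.
        self.misses += 1
        value = self._load(key)
        self._cache[key] = value
        return value


# A dictionary stands in for the database; source_calls records each lookup.
database = {"book:42": "a frequently requested title"}
source_calls = []


def slow_lookup(key):
    source_calls.append(key)
    return database[key]


store = CachedStore(slow_lookup)
first = store.get("book:42")   # miss: goes to the source
second = store.get("book:42")  # hit: served from the cache
```

This sketch never evicts or expires entries. A real cache usually needs a size limit and an invalidation or time-to-live policy so that stale data does not linger after the source changes.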

A Simple Analogy

Imagine you run a library. If people keep asking for the same book, you decide to keep a copy at the front desk instead of going to the shelves every time. This is caching! It saves time and effort while serving visitors more quickly.

When to Use Caching

- Static Content: Images, CSS files, and JavaScript are perfect candidates for caching.

- Frequently Accessed Data: Items like product details, user profiles, or recent search results.

- Expensive Operations: Results from complex calculations or queries that take a long time to execute.

Example

Reddit leverages caching to store popular content, such as trending posts and comments, enabling rapid access without repeatedly querying the database.

Content Delivery Network (CDN)

A Content Delivery Network (CDN) is a system of distributed servers located in different geographic regions.

The primary purpose of a CDN is to deliver static assets—like images, videos, stylesheets, and JavaScript files—to users more quickly by storing and serving these files from servers that are physically closer to them.

This reduces the time it takes for data to travel across the internet, resulting in faster load times and a better user experience.

How CDNs Work:

Content is Cached:

When you use a CDN, your website’s static content (e.g., images, videos, CSS, JavaScript) is copied and cached on multiple servers around the world. These servers are called edge servers.

User Requests Content:

When a user visits your website, their request is routed to the nearest edge server instead of the origin server (the main server hosting your website).

Fast Delivery:

The edge server quickly delivers the cached content to the user, reducing the distance the data has to travel and speeding up the loading process.

Fallback to Origin Server:

If the requested content isn’t available on the edge server (a cache miss), the CDN retrieves it from the origin server, caches it, and then delivers it to the user.
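
The request path described above can be modeled roughly as follows. This is a simplified sketch: the `EdgeServer` class, the `nearest_edge` helper, the city names, and the latency figures are all hypothetical, and real CDNs pick the edge through DNS or anycast routing rather than an application-level lookup.

```python
class EdgeServer:
    """An edge location that caches assets pulled from the origin."""

    def __init__(self, name, origin):
        self.name = name
        self.origin = origin        # dict standing in for the origin server
        self.cache = {}
        self.origin_fetches = 0

    def serve(self, path):
        if path not in self.cache:
            # Cache miss: pull the asset from the origin and keep a copy.
            self.cache[path] = self.origin[path]
            self.origin_fetches += 1
        return self.cache[path]


def nearest_edge(edges, latency_ms):
    """Pick the edge with the lowest latency to the user (assumed known)."""
    return min(edges, key=lambda edge: latency_ms[edge.name])


origin = {"/logo.png": b"image-bytes"}
edges = [EdgeServer("frankfurt", origin), EdgeServer("singapore", origin)]

# Illustrative latencies for a user located in Europe.
user_latency = {"frankfurt": 18, "singapore": 190}

edge = nearest_edge(edges, user_latency)
body_first = edge.serve("/logo.png")   # miss: fetched from the origin
body_second = edge.serve("/logo.png")  # hit: served from the edge cache
```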

Types of Content Delivered by CDNs

Static Content:

Images (JPEG, PNG, GIF)

Videos (MP4, WebM)

Stylesheets (CSS)

JavaScript files

Dynamic Content (in some cases):

Advanced CDNs can optimize and accelerate dynamic content delivery by using techniques like real-time caching.

APIs:

CDNs can also speed up API responses for applications.

Streaming Media:

Live and on-demand video or audio streams.

A Simple Analogy

Imagine a bookstore with a single location far away. Every time someone needs a book, they have to travel to that store. Now, imagine the store sets up smaller branches in every major city, each stocked with popular books. Customers can now get their books much faster because they don’t have to travel as far. These branches are like CDN edge servers.

Example: How Cloudflare Works as a CDN

Cloudflare is a popular CDN provider. Here’s how it improves website performance:

Global Network:

Cloudflare has servers in hundreds of locations worldwide. When a user requests content, Cloudflare serves it from the closest server.

Caching:

Static assets like images, videos, and scripts are cached on Cloudflare’s servers. These assets are delivered quickly from the edge servers.

Additional Features:

- DDoS Protection: Prevents malicious attacks from overwhelming your website.

- SSL/TLS Encryption: Ensures secure communication between users and the website.

- Content Optimization: Compresses images and files to reduce load times further.

Partitioning

Partitioning is a technique used to divide data or functionality into smaller, more manageable pieces and distribute them across multiple nodes or servers.

This helps to balance the workload, improve performance, and avoid bottlenecks in systems that handle large amounts of data or traffic.

How Partitioning Works

Splitting Data:

Data is divided into smaller “partitions” based on specific criteria, such as a unique identifier (e.g., user ID, product ID, or region). Each partition contains a subset of the total data.

Assigning Partitions to Servers:

Each partition is stored on a separate server or node. This ensures that no single server is overloaded with all the data or requests.

Routing Requests:

When a user or application requests data, the system determines which partition contains the data and routes the request to the appropriate server.
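
One simple way to implement the split-assign-route steps above is hash partitioning. This is a minimal sketch, assuming a fixed number of partitions and in-memory dictionaries standing in for separate servers. The names `partition_for` and `PartitionedStore` are hypothetical.

```python
import hashlib


def partition_for(key, num_partitions):
    """Map a key to a partition index using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


class PartitionedStore:
    """Routes every read and write to the partition that owns the key."""

    def __init__(self, num_partitions):
        # Each dict stands in for a separate server or node.
        self.partitions = [{} for _ in range(num_partitions)]

    def _owner(self, key):
        return self.partitions[partition_for(key, len(self.partitions))]

    def put(self, key, value):
        self._owner(key)[key] = value

    def get(self, key):
        return self._owner(key).get(key)


users = PartitionedStore(num_partitions=4)
for user_id in ("user-1", "user-2", "user-3", "user-4", "user-5"):
    users.put(user_id, {"id": user_id})

profile = users.get("user-3")
```

A stable hash such as SHA-256 is used instead of Python's built-in `hash()`, which is randomized per process for strings. With plain modulo hashing, changing the number of partitions remaps most keys, so systems that resize often tend to use consistent hashing or a similar scheme.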

A Simple Analogy

Imagine a library with millions of books. Instead of storing all the books in one room, the library divides them into sections (partitions) based on genres like fiction, history, and science. Each section is managed by a separate librarian (server). When someone needs a book, they go directly to the relevant section, making the process faster and more efficient.

Example: Amazon DynamoDB

Amazon DynamoDB, a managed NoSQL database service, uses partitioning to handle massive amounts of data and traffic. Here’s how it works:

Partitioning Keys:

DynamoDB uses a partition key to determine where data is stored. For example, if the key is a user ID, the system assigns each user’s data to a specific partition.

Scalability:

As data and traffic grow, DynamoDB automatically adds more partitions and distributes data evenly to maintain performance.

High Performance:

By spreading the workload across multiple partitions, DynamoDB ensures low latency and fast query times, even under heavy traffic.

Asynchronous Communication

Asynchronous communication in software systems refers to handling tasks or processes in the background without making the main application wait.

Instead of executing a task immediately and holding up the application, the task is deferred to a background queue or a message broker.

This ensures that the main application remains responsive, providing a smooth user experience.
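
The idea of deferring work to a background queue can be sketched with Python's standard `queue` and `threading` modules. This is an in-process illustration only. A production system would more likely use a message broker and separate worker processes, and the function names and email addresses here are hypothetical.

```python
import queue
import threading

task_queue = queue.Queue()
sent_emails = []


def email_worker():
    """Background worker: drains the queue until it receives a None sentinel."""
    while True:
        recipient = task_queue.get()
        if recipient is None:
            task_queue.task_done()
            break
        # Stand-in for the slow work (e.g., calling an email service).
        sent_emails.append(f"confirmation sent to {recipient}")
        task_queue.task_done()


def place_order(recipient):
    """Request handler: enqueue the slow task and return immediately."""
    task_queue.put(recipient)
    return "order accepted"


worker = threading.Thread(target=email_worker, daemon=True)
worker.start()

responses = [place_order("alice@example.com"), place_order("bob@example.com")]

# For this demo only: wait for the background work, then stop the worker.
task_queue.join()
task_queue.put(None)
worker.join()
```

The handler returns before any email is sent, which is what keeps the application responsive. The trade-off is that failures now happen after the user has received a response, so retries and error reporting need to be handled by the worker.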

Use Cases of Asynchronous Communication

Messaging Systems:

Platforms like WhatsApp, Slack, and email services use asynchronous communication to send and receive messages without freezing the user interface.

File Uploads:

When users upload files, the upload task is handled in the background while the application remains responsive.

Payment Processing:

E-commerce platforms defer payment verification and processing to background queues to avoid delays during checkout.

Email Notifications:

Sending confirmation emails or alerts is often done asynchronously, so the user isn’t kept waiting.

Data Processing:

Applications that process large datasets (e.g., generating reports or resizing images) defer these tasks to background workers.

Microservices Architecture

Microservices architecture is a design approach that divides an application into smaller, independent services.

Each service focuses on a specific business function, such as user authentication, billing, notifications, or ride matching.

These services operate independently but work together to form a complete system.

How Microservices Work

Application Split into Services:

The application is broken down into smaller components, each handling a specific function, such as user management, payments, or search.

Communication Between Services:

Services communicate with each other through APIs or message brokers. For example, the “ride matching” service in Uber might query the “user preferences” service to match drivers and riders.

Independent Deployment:

Each service is deployed separately. If an update is needed for the billing service, it can be rolled out without affecting other services.

Load Balancing:

Traffic to each service is managed by load balancers, ensuring optimal performance even under high demand.

Example: Uber’s Microservices Architecture

Uber transitioned from a monolithic architecture to microservices to handle its growing user base and global operations. Here’s how it benefits from microservices:

Independent Services:

- Ride Matching: Matches riders with drivers.

- Billing: Calculates fares and processes payments.

- Notifications: Sends real-time alerts to users.

Scalability:

During peak hours, Uber can scale its “ride matching” service to handle increased demand without affecting other services.

Global Operations:

Uber’s microservices are designed to handle region-specific features and regulations, making it easier to adapt to new markets.

Rapid Development:

Teams can work on new features, such as loyalty programs or driver incentives, without waiting for updates to the entire system.

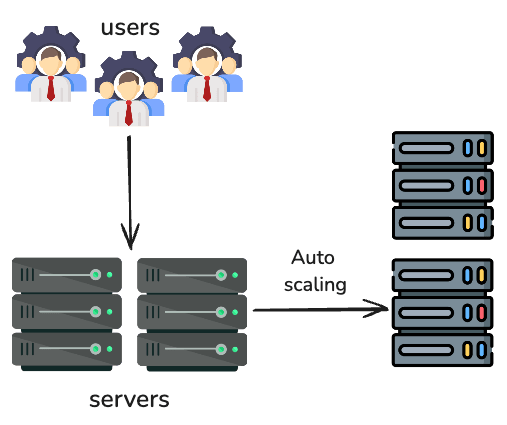

Auto Scaling

Auto-scaling is a cloud computing feature that automatically adjusts the number of active servers or resources based on the current workload or traffic demand.

It ensures that the system can handle sudden spikes in traffic without manual intervention and scales down during low usage periods to optimize costs.

How Auto-Scaling Works

Monitoring Metrics:

Auto-scaling monitors key performance indicators (KPIs) like CPU usage, memory usage, or network traffic to assess the system’s current load.

Scaling Triggers:

When a metric exceeds a predefined threshold (e.g., CPU usage > 80%), auto-scaling adds more servers or resources. Similarly, if the load decreases (e.g., CPU usage < 30%), it reduces the number of active servers.

Dynamic Adjustments:

Resources are added or removed dynamically, ensuring that the system always has the right capacity to handle the workload.

Integration with Load Balancers:

Load balancers distribute traffic evenly across all active servers, ensuring efficient use of resources during scaling.
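
The threshold logic above can be expressed as a small decision function. This is a sketch under stated assumptions: the 80% and 30% thresholds come from the text, while the step sizes and the minimum and maximum server counts are illustrative defaults. The function name `desired_capacity` is hypothetical and is not a cloud provider API.

```python
def desired_capacity(current, cpu_percent, *, scale_out_above=80,
                     scale_in_below=30, add=2, remove=1,
                     minimum=1, maximum=10):
    """Return the server count a simple threshold policy would aim for."""
    if cpu_percent > scale_out_above:
        target = current + add       # load is high: scale out
    elif cpu_percent < scale_in_below:
        target = current - remove    # load is low: scale in
    else:
        target = current             # within the comfortable band
    # Never go below the minimum or above the maximum fleet size.
    return max(minimum, min(maximum, target))


# Replay a series of CPU readings, starting from two servers.
servers = 2
history = []
for cpu in [85, 90, 60, 25, 20]:
    servers = desired_capacity(servers, cpu)
    history.append(servers)
```

Real auto-scalers typically also apply a cooldown period between adjustments so that a brief spike does not cause the fleet size to oscillate.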

Example: AWS Auto Scaling

Amazon Web Services (AWS) provides an Auto Scaling feature to automatically adjust resource capacity for applications hosted on its platform. Here’s how it works:

Monitoring Metrics:

AWS Auto Scaling monitors metrics like CPU utilization, memory usage, or request rates using Amazon CloudWatch.

Scaling Policies:

Users define scaling policies, such as:

- Add two servers if CPU usage exceeds 80%.

- Remove one server if CPU usage drops below 30%.

Dynamic Scaling:

Based on the policies, AWS Auto Scaling adjusts the number of EC2 instances (virtual servers) to match the current load.

Cost Efficiency:

By scaling resources dynamically, AWS Auto Scaling helps maintain performance while avoiding the cost of idle capacity.

Multi-Region Deployment

Multi-region deployment involves deploying an application across multiple data centers or cloud regions worldwide.

This approach reduces latency for users by hosting the application closer to their geographical location and improves redundancy, ensuring high availability even if one region experiences an outage.

How Multi-Region Deployment Works

Geographical Distribution:

The application is hosted in multiple data centers or cloud regions spread across different locations.

Traffic Routing:

A global load balancer directs user requests to the nearest data center based on the user’s location, minimizing latency.

Data Replication:

Data is replicated across regions to ensure consistency and availability. Techniques like eventual consistency or strong consistency are used, depending on the application’s requirements.

Failover Mechanism:

If one region goes offline due to a failure, traffic is automatically redirected to other regions, maintaining uninterrupted service.
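
The routing and failover steps above can be sketched as a function that walks a user's regions in order of preference and returns the first healthy one. This is a simplified model: the region names are hypothetical, and the preference order and health set are assumed to come from latency measurements and health checks that are not shown here.

```python
def route_request(regions_by_latency, healthy):
    """Return the closest region that is currently healthy."""
    for region in regions_by_latency:
        if region in healthy:
            return region
    raise RuntimeError("no healthy region available")


# Regions ordered from nearest to farthest for one particular user.
preferred = ["eu-west", "us-east", "ap-south"]
healthy = {"eu-west", "us-east", "ap-south"}

normal = route_request(preferred, healthy)

# Simulate an outage in the nearest region: traffic fails over to the next one.
healthy.discard("eu-west")
during_outage = route_request(preferred, healthy)
```

Failover only helps if the fallback region already holds the data it needs, which is why replication and routing are usually designed together.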

Example: Spotify’s Multi-Region Deployment

Spotify uses multi-region deployments to deliver a seamless music streaming experience to millions of users worldwide. Here’s how it benefits from this architecture:

Global User Base:

Spotify’s users are spread across continents. By deploying its services in multiple regions, Spotify ensures users experience minimal latency, regardless of their location.

Redundancy:

If a data center in one region goes down, Spotify’s services remain available through other regions.

Data Replication:

Spotify replicates user data (like playlists and preferences) across regions, ensuring that users can access their information instantly, no matter where they are.

In system design, scalability is the cornerstone of building robust and future-proof systems. It ensures that a system can handle growth in users, data, or workload seamlessly, without compromising performance or reliability. Happy coding! ❤️